Normalized means that you are running the products you are comparing either at the same speed or at the same power draw.

No, it doesn't.

(Source: en.wikipedia.org)

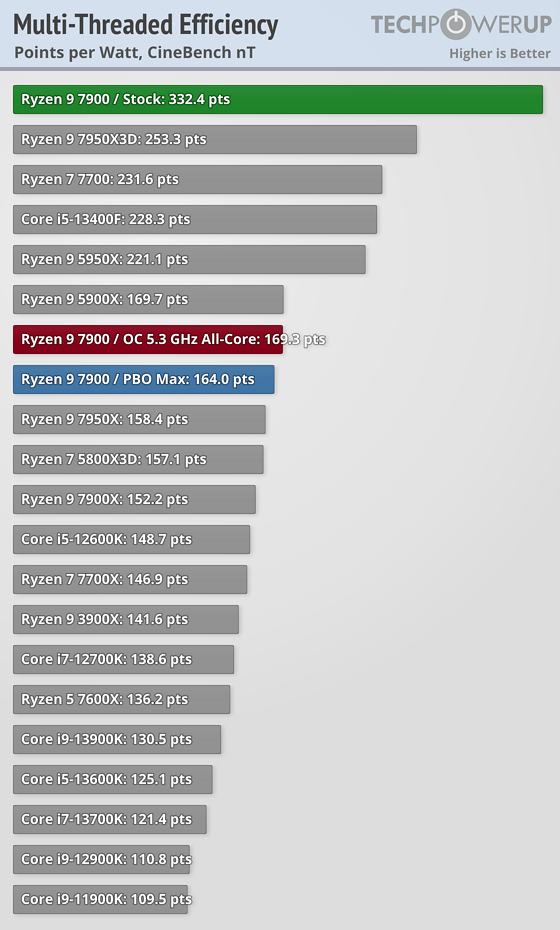

Furthermore, the fact that TechPowerUp put efficiency data from multiple disparate CPUs into a single graph shows they also disagree with you. If numbers from CPUs with different performance and power draw weren't comparable, why put them in the same graph?

The same way you'd compare fans, by normalizing for dBA.

When trying to measure a relationship between two quantities, like airflow per dB (A-weighted), one way to do it is to physically control for the independent variable by adjusting each system until that variable is equal across all samples. This can make sense if it varies a lot from one sample to the next and the relationship is significantly nonlinear. You could also simply measure the noise and divide it out, but that has limited predictive value if the conditions you're measuring under differ substantially from how the products behave in typical use.
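To illustrate the difference between the two approaches, here's a toy sketch. The fan model is entirely made up: hypothetical fans `fan_a` and `fan_b`, airflow linear in RPM, and noise rising by 50·log10 of the speed ratio (a rule of thumb from the fan laws, where sound power scales roughly with speed to the 5th power). Treat it as an illustration of the method, not real fan data.

```python
import math

# Hypothetical fan model (assumption, not measured data):
# name -> (CFM per 1000 RPM, dBA at 1000 RPM)
FANS = {
    "fan_a": (60.0, 25.0),
    "fan_b": (50.0, 22.0),
}


def airflow(fan: str, rpm: float) -> float:
    cfm_per_krpm, _ = FANS[fan]
    return cfm_per_krpm * rpm / 1000.0


def noise(fan: str, rpm: float) -> float:
    # Fan-law rule of thumb: +50*log10(speed ratio) dB.
    _, dba_at_1k = FANS[fan]
    return dba_at_1k + 50.0 * math.log10(rpm / 1000.0)


def rpm_for_noise(fan: str, target_dba: float) -> float:
    """Invert the noise model: the speed at which the fan reaches target_dba."""
    _, dba_at_1k = FANS[fan]
    return 1000.0 * 10 ** ((target_dba - dba_at_1k) / 50.0)


def ratio_at_rpm(fan: str, rpm: float) -> float:
    """Method 1: measure at whatever speed the fan runs, then divide out the noise."""
    return airflow(fan, rpm) / noise(fan, rpm)


def airflow_at_iso_noise(fan: str, target_dba: float) -> float:
    """Method 2: adjust each fan until it's equally loud, then compare airflow."""
    return airflow(fan, rpm_for_noise(fan, target_dba))


if __name__ == "__main__":
    # Fans shipped at very different default speeds (hypothetical).
    default_rpm = {"fan_a": 800.0, "fan_b": 2500.0}
    for fan, rpm in default_rpm.items():
        print(f"{fan}: {ratio_at_rpm(fan, rpm):.2f} CFM/dBA at {rpm:.0f} RPM, "
              f"{airflow_at_iso_noise(fan, 30.0):.1f} CFM at 30 dBA")
```

With these invented numbers, `fan_b` looks better on CFM/dBA at its default speed, but `fan_a` moves more air once both are set to 30 dBA. That kind of reversal is what iso-noise (or iso-power) testing guards against when the relationship is nonlinear.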

Both the 12900 and the 13900 score around 15.5k @ 35w when undervolted and around 12k at stock.

Unless Intel is undervolting the T-series CPUs, that test methodology is fundamentally flawed. The point of the test would be to predict how these 35 W CPUs would compare at their respective stock settings.

TPU also has a test like that; at 35 W, nothing gets anywhere near it in efficiency.

...only because the chart contains no other samples tested in the same way.

The PL2 is irrelevant; it lasts for a minute. After that, the CPU drops to PL1.

Without knowing how long the test is run for, and whether they average in those samples or only take data from after the machine settles at PL1, how can you say PL2 is irrelevant?
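To show why test duration and sampling window matter, here's a simplified sketch of average package power over a run. It assumes the CPU holds exactly PL2 for a fixed boost window and then exactly PL1, which is not how Intel's Tau-based moving-average power budget actually works. The 35 W / 106 W / 60 s values are illustrative assumptions.

```python
def average_power(test_s: float, pl1_w: float, pl2_w: float,
                  boost_s: float) -> float:
    """Average package power over a test run (simplified model).

    Assumes the CPU holds exactly PL2 for boost_s seconds, then exactly PL1.
    Real Intel CPUs use a moving-average power budget governed by Tau, so
    this only illustrates why test length and sampling window matter.
    """
    if test_s <= 0:
        raise ValueError("test duration must be positive")
    boost = min(boost_s, test_s)  # a short test may never leave PL2
    return (pl2_w * boost + pl1_w * (test_s - boost)) / test_s


if __name__ == "__main__":
    PL1_W, PL2_W, BOOST_S = 35.0, 106.0, 60.0  # illustrative assumptions
    for seconds in (60, 120, 600, 3600):
        avg = average_power(seconds, PL1_W, PL2_W, BOOST_S)
        print(f"{seconds:>5} s test: {avg:.1f} W average")
    # Counting only samples taken after the CPU settles reports PL1 itself.
    print(f"settled-only: {PL1_W:.1f} W")
```

Under these assumptions, a 2-minute run averages 70.5 W and a 10-minute run about 42 W, while a method that only counts samples after the CPU settles would report PL1.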

I said Intel has better ST efficiency and you said you aren't sure / don't believe that.

Show me the quote. I tend to be pretty careful with my words, whereas you seem given to overstatement and exaggeration. If I expressed doubt, it was almost certainly in the extremity or the precise nature of your characterization.

Really? You think AMD is the only one that has clocked their CPUs beyond their efficiency sweet spot?

No, I think the falloff curve for Zen 4 is steeper than those of Golden Cove & Raptor Cove. The data I have cited (most recently from Anandtech) supports this.

Here's where a key point I made earlier in the exchange becomes relevant. Remember the table I showed from Chips & Cheese's analysis of Zen 4 vs. Golden Cove? It shows a lot of internal structures that are larger in Golden Cove. Do you have any idea why someone might make a reorder buffer or register file larger? It has a lot to do with the fact that when you run a CPU core at a higher clock speed, code running on that core sees the rest of the world slowing down. In other words, cache and DRAM latency doesn't change in terms of nanoseconds, but because the CPU core is completing more clock cycles per nanosecond, those latencies, counted in clock cycles, increase. To compensate for that, a CPU core needs to be able to keep more operations in flight, which means all of the out-of-order machinery needs to be scaled up.
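The cycles-vs-nanoseconds point can be made concrete with a bit of arithmetic. This sketch assumes a hypothetical 10 ns miss latency and a core that wants to sustain 4 operations per cycle, then applies Little's law (work in flight = throughput × latency) to estimate how much work has to be buffered to hide that latency. The numbers don't come from either microarchitecture; the point is only that the requirement scales with clock speed.

```python
def latency_in_cycles(latency_ns: float, clock_ghz: float) -> float:
    # At f GHz the core completes f cycles every nanosecond, so a fixed
    # latency in ns costs more cycles as the clock rises.
    return latency_ns * clock_ghz


def ops_in_flight(latency_ns: float, clock_ghz: float, ops_per_cycle: float) -> float:
    # Little's law: work in flight = throughput * latency (in cycles here).
    return ops_per_cycle * latency_in_cycles(latency_ns, clock_ghz)


if __name__ == "__main__":
    MISS_NS = 10.0        # hypothetical miss latency
    OPS_PER_CYCLE = 4.0   # hypothetical sustained throughput
    for ghz in (4.0, 5.5):
        print(f"{ghz} GHz: {latency_in_cycles(MISS_NS, ghz):.0f} cycles, "
              f"~{ops_in_flight(MISS_NS, ghz, OPS_PER_CYCLE):.0f} ops in flight")
```

Going from 4.0 GHz to 5.5 GHz turns the same 10 ns into 55 cycles instead of 40, so in this simplified model the core needs 37.5% more operations in flight to hide it.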

When Intel decided to build a really fast core that had "long legs" (i.e. the ability to clock high), they needed to make it bigger and more complex. That comes at a cost in terms of die area and energy usage. The E-cores are there to compensate for these things. Because Ryzen 7000 doesn't have any sort of E-core, AMD targeted its efficiency window at a lower clock speed and sized its out-of-order structures accordingly. That doesn't mean you can't get more performance out of a Zen 4 core at higher clock speeds, but it means that you hit a point of diminishing returns more quickly and the falloff is more rapid.
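Here's a toy model of what a "steeper falloff" means. It assumes performance scales linearly with clock, power follows C·V²·f plus a fixed static term, and voltage stays flat up to a knee and then rises linearly. The two slopes (0.1 and 0.2 V/GHz) and every other parameter are invented; this is not a model of Zen 4 or Raptor Cove, only of how a steeper voltage/frequency curve moves the sweet spot down and makes perf/W drop faster above it.

```python
def voltage(freq_ghz: float, v_min: float, knee_ghz: float,
            slope_v_per_ghz: float) -> float:
    # Assumed shape: flat minimum voltage up to a knee, then a linear rise.
    return v_min + slope_v_per_ghz * max(0.0, freq_ghz - knee_ghz)


def perf_per_watt(freq_ghz: float, slope_v_per_ghz: float, *, v_min: float = 0.8,
                  knee_ghz: float = 3.0, cap: float = 5.0,
                  static_w: float = 10.0) -> float:
    # Assumptions: performance proportional to clock (no memory stalls),
    # power = C * V^2 * f + static leakage. All constants are made up.
    v = voltage(freq_ghz, v_min, knee_ghz, slope_v_per_ghz)
    power_w = cap * v * v * freq_ghz + static_w
    return freq_ghz / power_w


FREQS = [2.0 + 0.5 * i for i in range(9)]  # 2.0 .. 6.0 GHz grid


def sweet_spot(slope_v_per_ghz: float) -> float:
    """Clock on the grid with the best perf/W."""
    return max(FREQS, key=lambda f: perf_per_watt(f, slope_v_per_ghz))


def falloff(slope_v_per_ghz: float, freq_ghz: float) -> float:
    """Fraction of peak perf/W that remains at freq_ghz."""
    peak = perf_per_watt(sweet_spot(slope_v_per_ghz), slope_v_per_ghz)
    return perf_per_watt(freq_ghz, slope_v_per_ghz) / peak


if __name__ == "__main__":
    for label, slope in (("gentle", 0.1), ("steep", 0.2)):
        print(f"{label}: sweet spot {sweet_spot(slope):.1f} GHz, "
              f"{falloff(slope, 5.5):.0%} of peak perf/W at 5.5 GHz")
```

With these parameters, the gentler curve peaks at 3.5 GHz and keeps about 88% of its peak perf/W at 5.5 GHz, while the steeper one peaks at 3.0 GHz and keeps only about 64%.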

Still, because AMD used a more efficient manufacturing process, they could afford to set the default clock frequency of these cores above that sweet spot and still stay within their power budget. That's why we don't see any AM5 CPUs with a boost limit of 4.6 GHz, as Intel had to do with the i5-13400F.

In this whole exchange, I've never said Zen 4 can match Raptor Cove in single-thread performance. However, when you start talking about efficiency, you're talking perf/W. When applied to an entire microarchitecture, that's not a single data point, but rather a curve. So, when you make sweeping statements about Intel being more efficient, I'm pointing out that we don't have the perf/W curves needed to support such a statement, and furthermore that the data we do have on multicore scaling suggests that the efficiency of Zen 4 is indeed quite competitive at lower power levels.
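To illustrate why perf/W is a curve rather than a single number, here's a sketch that linearly interpolates two made-up perf-vs-power curves (hypothetical `cpu_x` and `cpu_y`, with invented scores) and asks which one scores higher at the same power. Interpolating between measured points is a simplifying assumption, and the function refuses to extrapolate beyond them.

```python
from bisect import bisect_left

# Hypothetical (package power in W, score) samples; all numbers are invented.
CURVES = {
    "cpu_x": [(35, 100), (65, 150), (125, 200), (230, 240)],
    "cpu_y": [(35, 85), (65, 140), (125, 205), (250, 260)],
}


def score_at(cpu: str, power_w: float) -> float:
    """Linearly interpolate a score at power_w between measured samples."""
    points = CURVES[cpu]
    powers = [p for p, _ in points]
    if not powers[0] <= power_w <= powers[-1]:
        raise ValueError("power outside measured range; refusing to extrapolate")
    i = bisect_left(powers, power_w)
    if powers[i] == power_w:
        return float(points[i][1])
    (p0, s0), (p1, s1) = points[i - 1], points[i]
    return s0 + (s1 - s0) * (power_w - p0) / (p1 - p0)


def more_efficient_at(power_w: float) -> str:
    # At equal power, the higher score is also the higher perf/W.
    return max(CURVES, key=lambda cpu: score_at(cpu, power_w))


if __name__ == "__main__":
    for watts in (35, 50, 125, 200):
        print(f"{watts:>3} W: {more_efficient_at(watts)} is more efficient")
```

In this invented example, `cpu_x` wins at 35 W and 50 W while `cpu_y` wins at 125 W and 200 W, so a blanket "X is more efficient" would be wrong at one end of the curve or the other.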

How else can you explain benchmarks like the ones where Genoa EPYC obliterated Sapphire Rapids Xeon while using the same or less power?

First of all, the graph is obviously flawed; the author himself says so. He is supposedly setting PPT values on the AMD, but the CPU pulls a ton more wattage than its limits dictate. Go to page 3 of the review and you'll find out. I'm quoting the reviewer:

Starting with the peak power figures, it's worth noting that AMD's figures can be wide off the mark even when restricting the Package Power Tracking (PPT) in the firmware. For example, restricting the socket and 7950X to 125 W yielded a measured power consumption that was still a whopping 33% higher.

<snip>

There's certainly more performance at 65 W from our compute testing on the Ryzen 9 7950X, but it's drawing more power than it should be. It's also running hotter despite using a premium 360mm AIO CPU cooler, which is more than enough even at full load.

It's cute how you omitted the very next sentence (where I marked <snip>), which is:

"By comparison, the 13900K exceeded its set limits by around 14% under full load."

Another key point you're glossing over is that they're talking about peak power figures. Anandtech has an annoying habit of reporting peak power figures even when they lasted for less than a second, which is how they ended up with a graph showing the i9-12900K using 272 W, when even its 95th-percentile power is much lower.

So, unless they give us more detail about average power usage, I have to discount what they're saying about these CPUs' excursions beyond the configured limits, especially since what I've seen elsewhere shows that Ryzen 7000 CPUs are pretty good about respecting the configured PPT limits.
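Here's a sketch of how much the choice of statistic matters when reporting limit excursions. The power trace is hypothetical (99 samples at 128 W plus a single 166 W spike, against a 125 W limit), and the percentile uses the simple nearest-rank method.

```python
import math
from typing import Dict, Sequence


def percentile_nearest_rank(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def overshoot_pct(measured_w: float, limit_w: float) -> float:
    """How far a measurement exceeds the configured limit, in percent."""
    return (measured_w / limit_w - 1.0) * 100.0


def excursions(samples: Sequence[float], limit_w: float) -> Dict[str, float]:
    stats = {
        "peak": max(samples),
        "p95": percentile_nearest_rank(samples, 95),
        "mean": sum(samples) / len(samples),
    }
    return {name: overshoot_pct(value, limit_w) for name, value in stats.items()}


if __name__ == "__main__":
    # Hypothetical trace: steady 128 W with one 166 W spike, 125 W limit.
    trace = [128.0] * 99 + [166.0]
    for name, pct in excursions(trace, 125.0).items():
        print(f"{name:>4}: {pct:+.1f}% over limit")
```

On that trace, the peak suggests a 32.8% excursion, while the 95th percentile and the mean show about 2.4% and 2.7%.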

BUT, the IMPORTANT part is: it doesn't matter even if the graph is correct. You are constantly flip-flopping and changing your argument. The 7950X doesn't have a stock PL of 35 watts. The 13900T does. You were arguing that only STOCK efficiency is important,

When we don't have performance & efficiency data on an i9-13900T, the best we can do is use approximations like the test I cited. The actual data will certainly differ, as I said in my prior post, and that's why it's important to use actual test data. However, unless/until we have that, tests like the one Anandtech did get us in the ballpark and are a lot closer than we get by simply relying on your imagination.

Dude, just make your mind up and stick with it.

I'm trying to use the available data in the way best suited to drawing conclusions about the claims being made. It has nothing to do with changing my mind about anything. If you don't understand that, then you haven't been paying attention.

The important part from that graph is the STOCK efficiency (you are the one who brought up stock configurations). A supposed 13900T would score 12700 @ 35 W, which ends up at 350 pts/watt. The 7950X at stock needs 230 watts for 38.5k points, which translates to 167 pts per watt. So the Intel CPU is literally more than twice as efficient in MT workloads out of the box, LOL

Why are you comparing a 35 W CPU to a 170 W CPU?

In this case, it's like you're comparing a tractor to a big-rig truck. Someone who needs a tractor can't just use a truck instead, and vice versa.
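For reference, here's the points-per-watt arithmetic from the quoted claim. Note that 12,700 / 35 works out to about 363, not 350, and that the 12,700 figure is an estimate for a hypothetical stock 13900T rather than a measurement. The calculation also shows the problem with the comparison: the two ratios come from operating points roughly 6.6x apart in power, so they aren't comparable the way iso-power numbers would be.

```python
def points_per_watt(score: float, watts: float) -> float:
    return score / watts


if __name__ == "__main__":
    # Figures from the quoted claim; the 13900T score is an estimate, not a measurement.
    t_series = points_per_watt(12_700, 35)    # hypothetical stock 13900T
    r9_7950x = points_per_watt(38_500, 230)   # 7950X at stock, as quoted
    print(f"13900T (est.): {t_series:.0f} pts/W")
    print(f"7950X (stock): {r9_7950x:.0f} pts/W")
    print(f"ratio: {t_series / r9_7950x:.2f}x at a {230 / 35:.1f}x power difference")
```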

This entire thread can be boiled down to two things:

- You're making nonsensical comparisons between products of different classes.

- You're making lots of invalid assumptions that aren't supported by any data.

Also, it doesn't help that you don't understand the meaning of terms like normalization.

You were talking about PERFORMANCE. You literally said the 7900X justified its much higher price because it's faster in MT than the 13700K. It isn't.

Yes, I'll apologize for that. I quickly referred to my iso-power graph, rather than actually looking up benchmarks of each at stock settings.

The 29 watts is from my Intel setup. The 7950X averages 40-45 W, with spikes up to 65 W, WHILE using the GPU. My Intel part is at 7 watts while using the GPU.

But how do you know that it's actually using the GPU? All you know is that it's configured to, but it still might not use it for some codecs. Also, the power management settings should affect how quickly each CPU kicks into "high gear" and how long it stays there before dropping back down. So, I'd also check those.