I don't think it's nearly as easy to scale up from 2-way SMT to n-way SMT without extra resources on the transistor side.

Especially when you're trying to process multiple threads in parallel simultaneously in real time. You need to plan out how many resources can be split for each core into each Virtual core. And that requires alot more transistor area,

Recent estimates I've seen on the additional area needed for HyperThreading in x86 CPUs are about 2-3% of die area. Scaling that to more threads would be a little cheaper, because you've already got the structures in place to manage > 1 thread per core. So, mostly what you need to scale up would be the register files and adding a couple bits to some book keeping structures.

One thing I haven't mentioned about n-way SMT is that Intel has gone down this path at least twice, before. Their first couple generations of Atom CPUs had 4-way SMT. They got rid of it, when they switched from in-order to out-of-order. The second instance was the last generation of Xeon Phi, which had 2-way cores that were

both out-of-order and 4-way SMT. That probably tells us that Intel isn't afraid to go there, when they think it makes sense.

Oh, and the last I heard, their iGPUs had 7-way SMT. That was pre-Xe. I don't know if they've said how many threads per EU Xe uses. And speaking of GPUs, I think AMD's GCN had 12-way SMT, but I could be mistaken.

especially when I want Dynamic SMT from SMT1 -> SMT8 and everything in between.

I didn't pick up on what you meant by "Dynamic SMT", at first. Now that I understand, I'll credit you for an interesting idea... but it has a fatal flaw.

The CPU core cannot decide, on its own, whether or not to simply ignore one of its threads. When the OS schedules a thread to run, it

needs for that thread to get execution time. To violate that presumption will cause system-level performance degradation, if not worse. The reason is that the operating system has way more information about threads than a core can know. The OS will schedule threads to run when an I/O operation completes, or when an exclusive resource becomes available. There are sometimes other threads in line for that same resource, which means a CPU core arbitrarily starving out threads can cause a logjam effect.

Truly, the best approach to this problem is for something like the Thread Director to collect stats on threads, and then the OS can take those performance characteristics into account, when it decides which threads to schedule on which cores and when.

But not everything goes well with a GPU, especially things like Video Encoding when you want to get the smallest possible file size.

But video encoders don't scale well to lots of CPU threads and my point was that things which

do tend to run well on GPUs.

It's not a physics problem, they were solving that part just fine.

No, it wasn't competitive because the number of cells per die area wasn't competitive with 3D NAND.

The physics problem is the question of how small you can make them and in how many layers. I don't know the answer to that, but probably Intel does. Without knowing the answer, we cannot say whether the technology could

ever be price-competitive with NAND.

They could do a bit more and be more experimental, but that would involve a bit more risk, and we all know how risk averse they are.

Hybrid CPUs, chiplets, and tiles don't sound like things risk-averse companies would generally do.

Or we can allow DeskTop consumers to choose for themselves instead of trying to force them to buy Enterprise parts that they can't afford.

If Intel thinks enough people want

only E-cores in a mainstream desktop socket, they'll make such a CPU. There's nothing else keeping them from doing it.

The reason I think it won't happen is that most mainstream users are running some kind of interactive software that depends largely on single-thread performance for its responsiveness. Even if it's just a web browser. That's why the only two markets where you see them selling E-core only CPUs are at the extreme low end (i.e. chromebooks) and for servers that won't be running interactive workloads.

Basically, the same thing applies to AMD and their Zen 4c strategy.

I'd rather just have one machine to handle heavy jobs running at full tilt using every resource possible.

Then I can be on the other machine doing my job. That's how I do things.

You're free to have dedicated machines, if you're willing to pay the price. Modern software technology makes that largely unnecessary, unless you're a hardcore gamer who cannot tolerate the loss of even a couple fps.

In case you haven't noticed, there's been a long trend of virtualization being used to consolidate services onto fewer physical machines, because it's more efficient and cost-effective. Again, do what you want, but be aware that there are other options and an entire trend that you're going against.

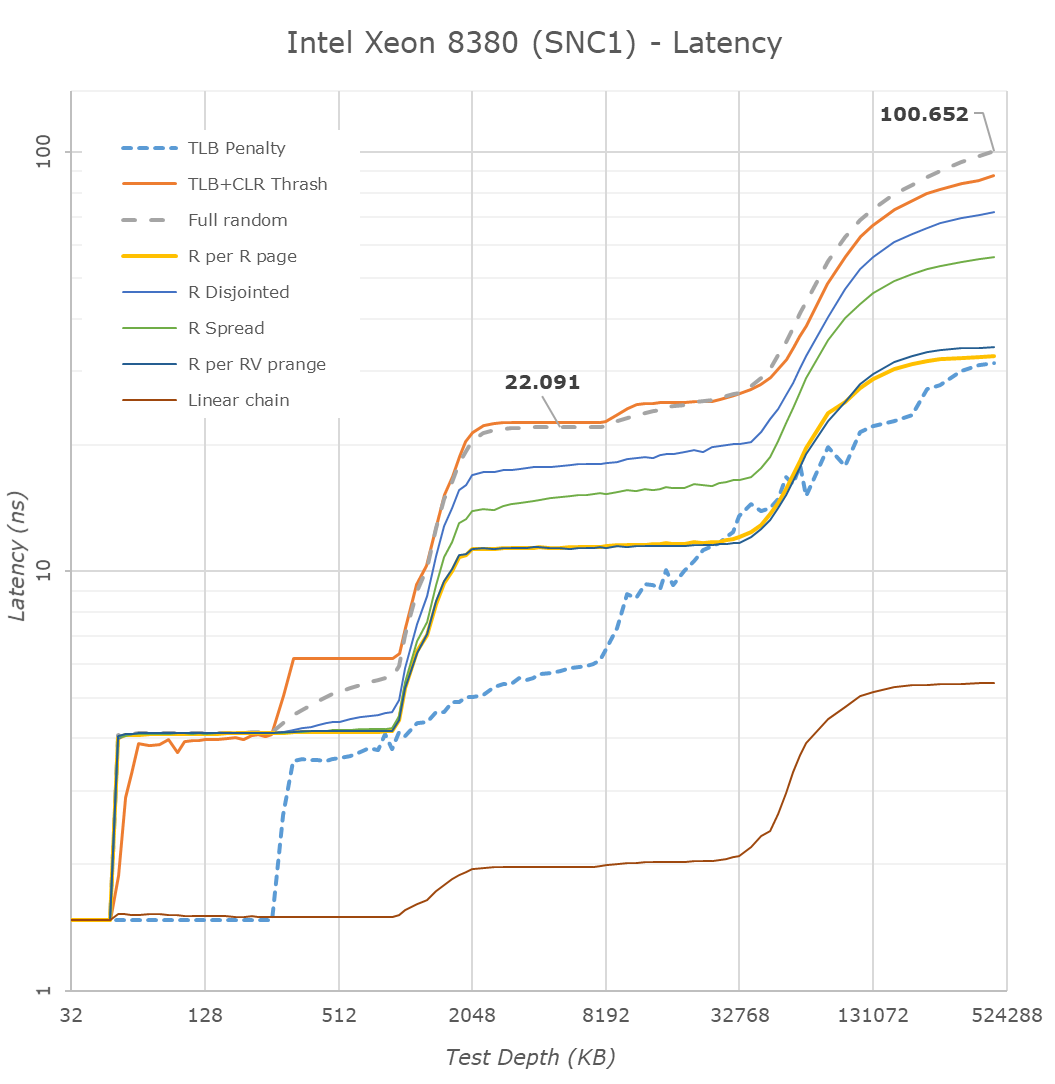

I think they kept it for latency reasons because gaming depends more on latency and the latency penalty from mesh isn't worth it.

If you simply count hops, a mesh is more efficient at even 12 stops. Try drawing a 4x3 grid on a piece of paper. Even if you put the memory controller in one corner (FWIW, I don't see why it couldn't be in the middle), the worst-case distance is only 5 hops. On a ring bus, it would be 6. So, maybe 12 was the point where it makes

more sense to use a mesh than ring bus? I don't know how many stops Alder Lake has, but don't forget to include the iGPU, PCIe interface, and memory controller. That should bring it to at least 13, but then there's other stuff like the security processor... so maybe 14?

Raptor Lake's 24C/32T is 8 P-cores & 16 E-cores.

Those 16 E-cores eats the die area of 4 P-cores.

Sorry to burst your bubble, but 16 P-cores would clobber 24C/32T any day of the week.

First, 8 + 4 = 12. So, you wouldn't

get 16 P-cores in the same die area (i.e. same price). Just so we're clear.

However, the reason I said 16 E-cores added more performance than 8 P-cores is based on the single-thread performance comparison that puts a single E-core at somewhere between 0.54 and 0.6 of a single thread on a P-core. If that extrapolated linearly, you'd get performance equivalent to between 8.64 and 9.6 P-cores. However, we know that performance doesn't scale linearly in either case.

The above stats I computed in post #32 indeed showed that the fully-loaded P-cores with 2 threads provided SPEC bench performance equivalent to a little more than 20 E-cores. That suggests you're right that 16 P-cores would beat a 8P + 16E configuration. But, you're forgetting about a key detail, which is power. In the 2Tx 8P scenario, Alder Lake is using 239 W. In the all E-core scenario, it's using only 48. So, without massively increasing your power budget, a 16 P-core CPU wouldn't get you nearly 20 E-cores worth of performance. And therefore, a 8P + 16E setup is going to vastly outperform a 12P setup.

This is not a controversial statement. Just look at the data.

And Intel does it because they're stingy and doesn't want to give consumers "Too many P-cores".

They're stingy in the way all corporations are stingy. However, they need to stay competitive. If the most cost-effective way to provide a competitive offering were with all P-cores, then they would do it. The fact that they didn't says they believe E-cores are a more cost-effective and power-efficient way to add compute, and the data we have backs them up on that.

The point is that giving Heterogenous Architecture onto DeskTop isn't nearly as necessary when Intel customers and many desktop customers don't care and are willing to OC or pump up the Wattage to get as much performance as the power delivery on their MoBo's allows. Many users on DeskTop, especially enthusiasts unleash the power limits and see how far they can push their systems.

You grossly overestimate the number of such overclockers. Intel caters to this community with special SKUs, but only because they know it's a vocal and high-profile subset of the user community.

They know that if they shipped 300 W desktop CPUs, it would be a market failure, because most reviewers test with non-exotic setups, not to mention that most users don't want extreme cooling solutions and would be disappointed with the products otherwise.

Anyway, they still have the upcoming Sapphire Rapids workstation CPUs as an opportunity to deliver more P-cores to the people who really want them and are willing to spend the money. So, we'll have to see if they release HEDT versions, or simply market the Xeon W 2400 or 3400 CPUs to the gaming community.