1060 6gb is it worth it?

- Thread starter TheSimpleShrimp



doubletake

Honorable

The 3GB 1060 will always be a complete waste of money as the 1060 is more than fast enough to actually utilize image quality settings that can eat up 4GB+. Buying a 3GB model is basically paying for an artificially crippled product.

It's strictly a resolution issue.


The 3GB has fewer shaders (1152 vs 1280) and obviously less VRAM. On average the 3GB is about 7% slower than the 6GB version in most games. At 1080p, there's really no need for more than 3GB, and at 1440p, more than 4GB isn't doing anything for ya. So whether it's "worth it" is best weighed by the difference in cost, and that varies daily.

The 3GB version is sometimes found as low as $195. The MSI Gaming X is $210 for 3GB ... the 6GB is $240, both after $20 MIRs. So spending 14% more to get a 7% increase in performance ... not exactly a good ROI.
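The arithmetic behind that ROI remark can be sketched in a few lines. This is only an illustration: the prices and the roughly 7% figure come from the post, while the variable and function names are made up for the example.

```python
# Figures quoted in the post; names are illustrative only.
PRICE_3GB = 210.0   # MSI Gaming X 3GB after rebate, USD
PRICE_6GB = 240.0   # MSI Gaming X 6GB after rebate, USD
PERF_GAIN = 0.07    # ~7% average performance difference cited in the post


def price_premium(cheaper: float, dearer: float) -> float:
    """Fractional extra cost of the dearer card relative to the cheaper one."""
    return (dearer - cheaper) / cheaper


premium = price_premium(PRICE_3GB, PRICE_6GB)
# How much performance each 1% of extra spend buys.
gain_per_premium = PERF_GAIN / premium

print(f"Price premium: {premium:.1%}")
print(f"Performance gained per 1% extra spend: {gain_per_premium:.2f}%")
```

Anything below 1.00 on the second line means the extra money buys proportionally less performance, which is the point being made. Plug in the day's prices to redo the comparison.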

The MSI 3GB model has about the same overall performance as the RX 480, which is much more expensive, and is about 6% slower than the reference 1060:

https://www.techpowerup.com/reviews/MSI/GTX_1060_Gaming_X_3_GB/26.html

At 1440p, I'd get the 6GB ... if you're at 1080p and could better spend that $30 elsewhere, then the 3GB will do just fine.

Most folks have a misconception about VRAM usage ... I can't count the number of posts saying that they did this or that, or read this or that, and under this condition the card **used more than x GB**. The fact that no tool exists which can measure VRAM usage doesn't seem to sway anybody. What GPU-Z and other utilities measure is VRAM allocation. This is very different from usage. They put in a 4GB card and an 8GB card, see 4.5GB in GPU-Z and have that big "aha" moment, not realizing that GPU-Z isn't saying what they think it is saying.

GPU-Z is like the credit reporting agencies. If you have $500 on your VISA card and that card has a $5,000 limit, which number do the credit agencies report? $5,000. The bank you went to for your car loan wants to know your maximum liabilities, and VISA has authorized you to spend $5,000 already, so $5k is what gets reported.

GFX cards do the same thing. When you install a game, the install routine sees how much RAM is available ... if it sees 3GB, it might allocate 2GB ... if it sees 6GB, it might allocate 4GB.

http://www.extremetech.com/gaming/213069-is-4gb-of-vram-enough-amds-fury-x-faces-off-with-nvidias-gtx-980-ti-titan-x

GPU-Z claims to report how much VRAM the GPU actually uses, but there’s a significant caveat to this metric. GPU-Z doesn’t actually report how much VRAM the GPU is actually using — instead, it reports the amount of VRAM that a game has requested. We spoke to Nvidia’s Brandon Bell on this topic, who told us the following: “None of the GPU tools on the market report memory usage correctly, whether it’s GPU-Z, Afterburner, Precision, etc. They all report the amount of memory requested by the GPU, not the actual memory usage. Cards will larger memory will request more memory, but that doesn’t mean that they actually use it. They simply request it because the memory is available.”
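To make the allocation-versus-usage distinction concrete, here is a toy model. It is not how any real driver, game, or monitoring tool works: the two-thirds rule is invented purely to reproduce the "3 GB might allocate 2 GB, 6 GB might allocate 4 GB" example above, and the 1800 MB working set is a hypothetical number.

```python
WORKING_SET_MB = 1800  # hypothetical amount the game actually touches per frame


def requested_pool_mb(vram_mb: int) -> int:
    """Hypothetical heuristic: request two-thirds of whatever VRAM is present."""
    return vram_mb * 2 // 3


def report(vram_mb: int) -> dict:
    """What a monitoring tool would show (requested) vs. what is really needed (touched)."""
    requested = requested_pool_mb(vram_mb)
    return {
        "requested_mb": requested,
        "touched_mb": min(WORKING_SET_MB, requested),
    }


for card_mb in (3072, 6144):
    print(card_mb, report(card_mb))
```

In this sketch the "requested" figure doubles on the larger card while the amount actually touched stays the same, which is the pattern the quote describes.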

Alienbabeltech did the most exhaustive test on this topic, on some 40+ games, using 2GB and 4GB 770s at a resolution of 5760 x 1080. Only 5 games showed a significant difference, and that was at 5760 x 1080, when the card was so overloaded that neither the 2GB nor the 4GB card could deliver 30 fps. The one game that showed an oddity at playable frame rates was Max Payne. They could not install the game at 5760 x 1080 with the 2GB card ... so they installed it with the 4GB card, then ... they swapped in the 2GB card ... same frame rates, same visual quality, same everything.

You can see the same thing here and here:

http://www.guru3d.com/articles_pages/gigabyte_geforce_gtx_960_g1_gaming_4gb_review,12.html

https://www.pugetsystems.com/labs/articles/Video-Card-Performance-2GB-vs-4GB-Memory-154/

There are games that have issues:

a) Poor console ports like Assassin's Creed: Unity

b) Games with hi-res textures meant for higher resolutions ... if you try to load the high-res textures, the game may not let you ... but at 1080p, why would you do that?

c) Some DX12 titles (Rise of the Tomb Raider and Hitman) take a hit with 3GB. Not sure why as yet, since some titles with similar frame rates and textures don't. I've not seen an explanation yet.

superninja12

Glorious

to who ?

If I ask my 20-year-old son whether he wants to go out for steak at Peter Luger's (figure $60) or Outback ($11), he'll take Outback.

"Is it worth it" is a question each individual will have a different answer for, based upon varying sets of goals. If you have a $xxx budget and getting the 6GB means dropping the SSD, many folks won't wanna do that. Someone with a $2k system budget won't even consider anything but a 1080.

Everything has a cost ... it depends on what each person needs, what resolution, what games are being played and what each person is "willing to pay".

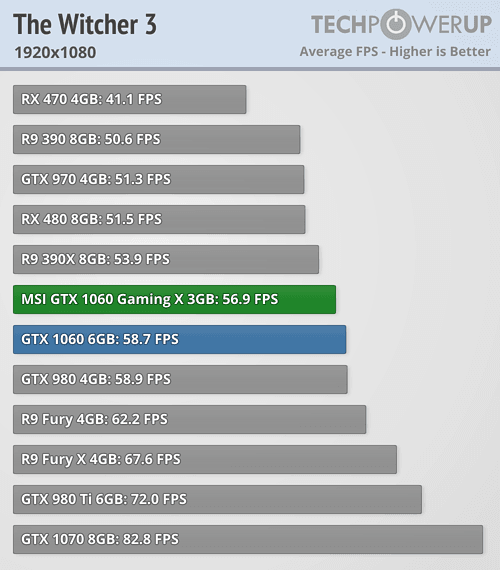

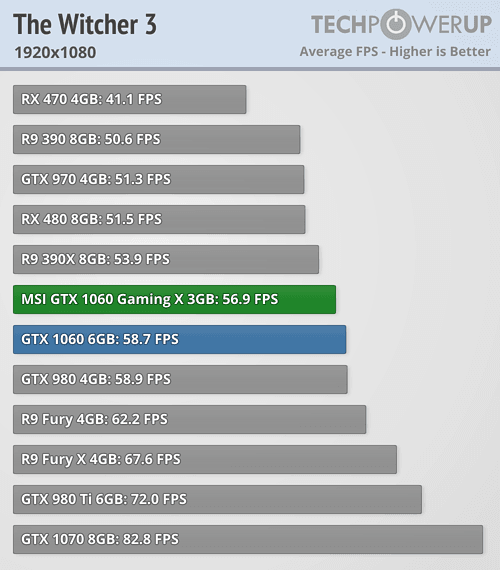

Let's use:

Witcher 3 (1080p)

- MSI 1060 3GB = 56.9 fps
- Reference 1060 = 58.7 fps
- MSI 1060 6GB = 59.5 fps
- MSI 1070 = 86.2 fps

Witcher 3 (1440p)

- MSI 1060 3GB = 42.2 fps
- Reference 1060 = 43.6 fps
- MSI 1060 6GB = 44 fps
- MSI 1070 = 64.2 fps

So if Witcher 3 is the main thing ... then no, the 1060 6GB makes no sense at all for $50. Before I'd spend $240 on the 1060, I'd spend $380 on the 1070 @ 1440p.

Rise of the Tomb Raider OTOH shows 41.2 to 54.5 going from 3GB to 6GB on the 1060 @ 1080p (67.7 for the 1070). Hitman goes from 62.7 w/ 3GB to 74.6 w/ 6GB ... these are the only 2 games I saw with a big increase.
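For anyone who wants the gaps as percentages, a short sketch using only the frame rates quoted in this post (the Witcher 3 rows compare the two MSI cards; the dictionary and function names are just for illustration, and the post does not state a resolution for Hitman):

```python
# (fps with 3GB card, fps with 6GB card), as quoted in the post.
results = {
    "Witcher 3 (1080p)": (56.9, 59.5),
    "Witcher 3 (1440p)": (42.2, 44.0),
    "Rise of the Tomb Raider (1080p)": (41.2, 54.5),
    "Hitman": (62.7, 74.6),
}


def uplift_pct(fps_3gb: float, fps_6gb: float) -> float:
    """Percentage frame-rate gain of the 6GB card over the 3GB card."""
    return (fps_6gb / fps_3gb - 1.0) * 100.0


for game, (fps_3gb, fps_6gb) in results.items():
    print(f"{game}: {uplift_pct(fps_3gb, fps_6gb):.1f}% faster with 6GB")
```

Witcher 3 comes out under 5% at both resolutions, while the two DX12 titles land at roughly 19% and 32%, which is why the answer depends on what you play.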

So it depends on what's more important to you .... Kinda like that song ...

https://www.youtube.com/watch?v=9NF5XU-k2Vk

http://www.lyricsmode.com/lyrics/j/jimmy_soul/if_you_wanna_be_happy.html


JackNaylorPE :


GFX cards do the same thing. When you install a game the install routine sees how much RAM is available ... if it sees 3 GB, it might allocate 2 GB...if it sees 6 GB it might allocate 4 GB.



Does this mean that if I get a new graphics card, or upgrade from a 1060 3GB to a 1060 6GB, I should reinstall games or will the card drivers already re-allocate ram?
