Show me any product and I can tell you how an idiot will break, fry, destroy, or otherwise negligently cause the demise of it. If you try to make products idiot-proof for the ever-growing population of uniquely gifted idiots, there will be no products; it is a task that never ends. Every product is designed with a "good enough" approach, and anything that "slips through the cracks" is a calculated risk by the designers.

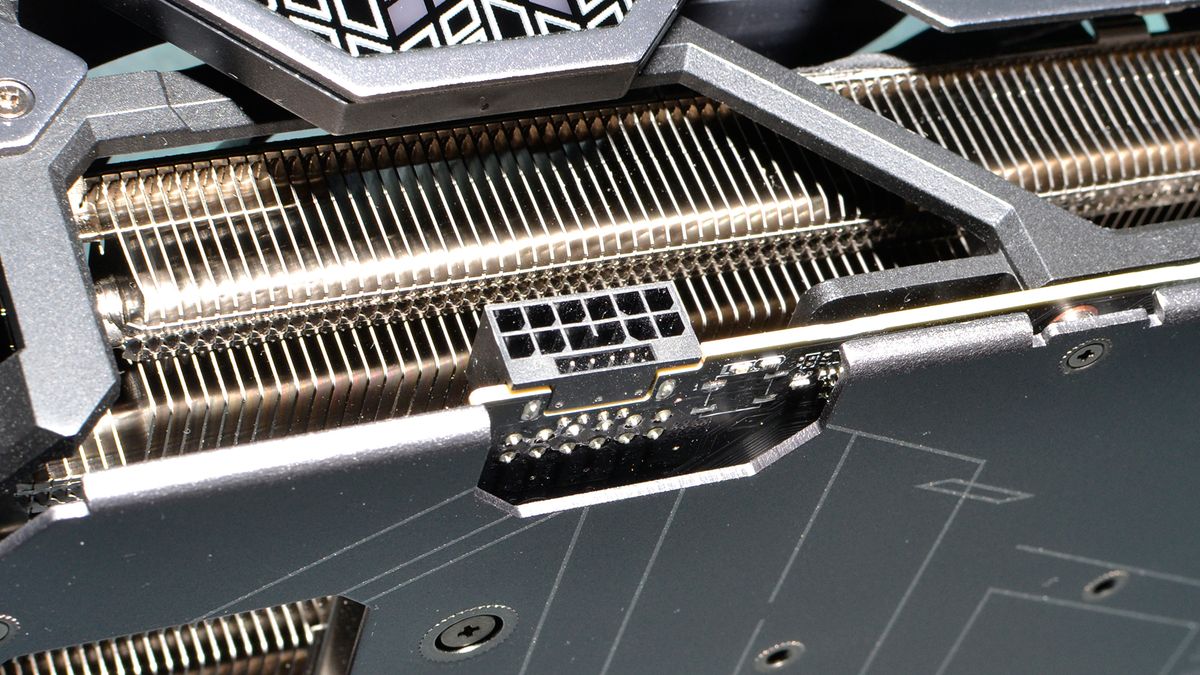

Let's say there are 100 cases where a 4090 fried its connector or port. If 70 of them were user error, 12 were bad ports on the 4090, and the remaining 18 were faulty 12-pin connectors, you would be looking at an approximate rate of 12 in 10,000 for bad ports and 18 in 10,000 for the connectors. That seems like a completely reasonable design to me.
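To make the arithmetic explicit, here is a minimal Python sketch. The 10,000-card total is an assumption: it is simply the denominator implied by the "12 in 10,000" and "18 in 10,000" figures above, not a real sales number, and the cause names are made up for illustration.

```python
def failure_rates(cases: dict[str, int], total_units: int) -> dict[str, float]:
    """Return each failure cause as a fraction of all units in the field."""
    return {cause: count / total_units for cause, count in cases.items()}


# Hypothetical breakdown of the 100 failure cases described above.
cases = {"user_error": 70, "bad_port": 12, "faulty_connector": 18}
assert sum(cases.values()) == 100

# Assumption: ~10,000 cards in total, the denominator implied by "12 in 10,000".
TOTAL_UNITS = 10_000

rates = failure_rates(cases, TOTAL_UNITS)
for cause, rate in rates.items():
    # Show each rate as a percentage and as a count per 10,000 cards.
    print(f"{cause}: {rate:.2%} ({rate * 10_000:.0f} per 10,000)")

# Share of the failures that are actually hardware faults (port or connector).
hardware_share = (cases["bad_port"] + cases["faulty_connector"]) / sum(cases.values())
print(f"hardware faults: {hardware_share:.0%} of failures")
```

With these inputs the hardware-fault rates come out to 0.12% and 0.18% of cards, and only 30% of the failures trace back to the port or connector at all.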