Reading some broad tea leaves, there are very promising times ahead for the desktop user, and potentially a very large shift in the market makeup, for the better.

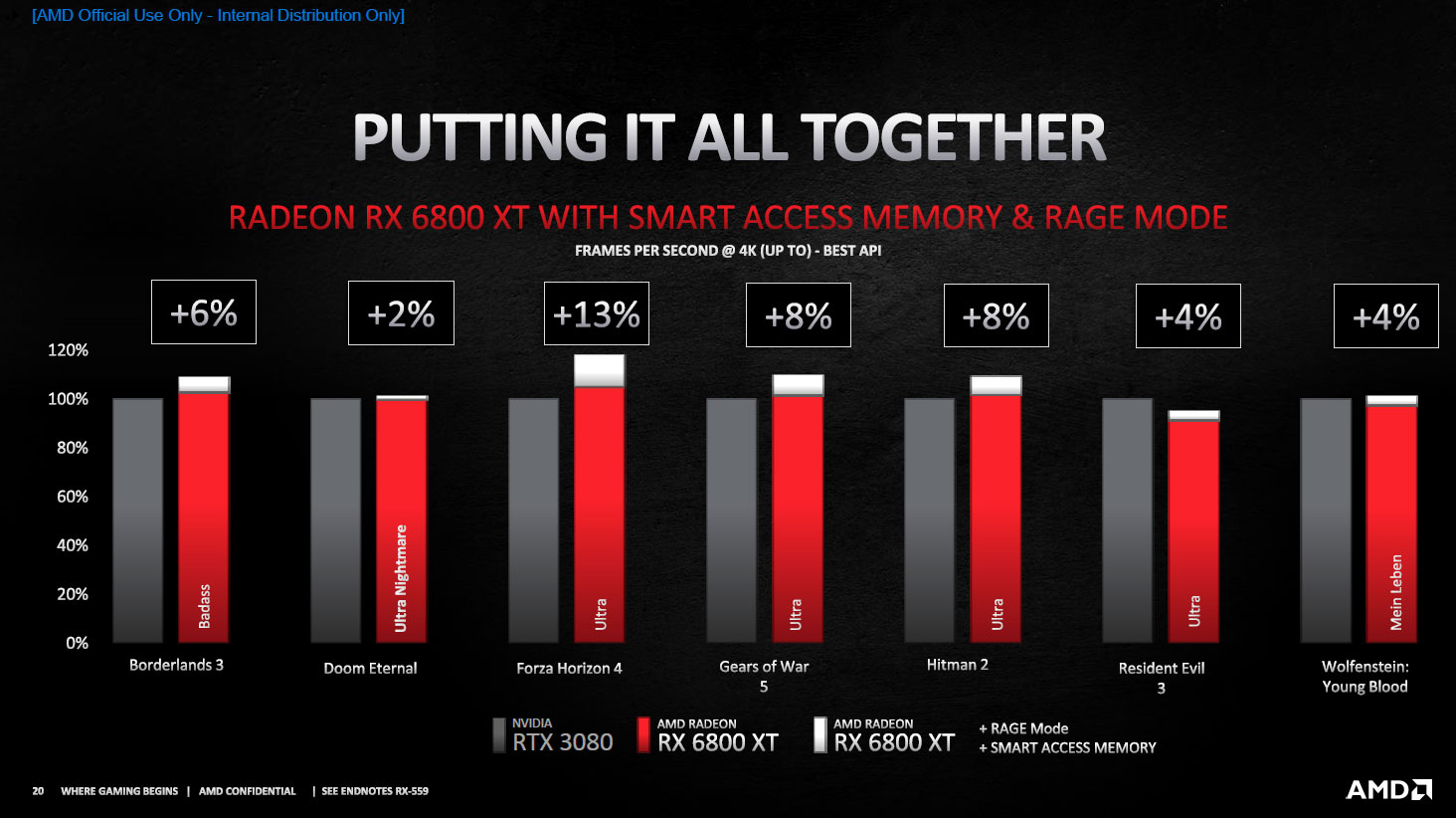

Whilst the raw performance of NVidia's top end still crushes AMD today, AMD is nibbling away at that lead, and these yet-to-materialise gains will add increasing near-future value to the AMD CPU+GPU bundle investment for sure. The fact that both the Xbox and PlayStation platforms are built on this combo should mean rapid uptake of the opportunity by developers, especially if it really does simplify coding. Just on this, NVidia should see their current flagships progressively overtaken by AMD's top end in the GPU-intensive benchmarks of some titles well within the current hardware cycle.

And from the distant background Intel is wading in. They are currently a long way back, but if their roadmap is to be believed (yes, with Intel it's been a lot of IFs) then you have another player providing dual silicon, with the ability to also optimise across the combination. Beware the juggernaut when it gets a head of steam, especially with their manufacturing scale...

The overall implications for the market are profound - consider for a moment that these improvements are of absolutely zero use to the miners, who are entirely bound by raw GPU power.

Right now desktop gamers are in a vice between global silicon supply issues and huge demand pressure from miners.

You can easily see a moment in around 18-24 months (maybe even a touch sooner) where both AMD and Intel are providing combo-optimised products with monstrous performance, both in discrete and APU versions, all addressed by DX12U, with readily available production that isn't being instantly bought up by miners.

That leaves NVidia, if not out in the cold, certainly in the middle, with maybe a choice to make as to which combined architecture to support, if any, to stay relevant, assuming they are even legally able to do so.

The contrast between that situation and the one the desktop enthusiast and gamer has been in for the last 5 years just couldn't be more stark.

Great news for the little guy paying the bill. Potentially horrible news for NVidia...

For someone who has recently broken the bank to purchase top end NVidia silicon on the basis it'll still be outperforming for a few years, the AMD progress above will slowly but surely chip away at that value over the next twelve months. By the time we get through this cycle in 18 months, with 12th Gen Intel and Zen4 online and both/either offering considerable gains from integration, that splurge you just did for Christmas or a week ago is going to look like a LOT of wasted cash. It's like rushing to buy a top end spec new turbodiesel Audi estate in a world going electric with ecological barriers increasing: resale value in 5 years in developed countries will be close to zero.

Whoever just did that should keep a keen eye on these developments, and get ready to sell on their NVidia silicon quickly to a miner at the right moment before card value goes off a cliff!

Personally, I grabbed a second-hand Strix 2080Ti OC around 5 months ago for a reasonable price, from someone making space for the newest cards. I can sell that same card on eBay today for €/$100-200 more, depending on the day. I couldn't be happier, and given all the above, it seems to have also been, accidentally and luckily, the smart choice too.

I'm UTTERLY agnostic between CPU suppliers. I've almost always had Intel, but it's been entirely the result of either my professional setting or a keen value/performance/cost calculation at the time of purchase, with no bias or innate preference. My next GPU and CPU will be AMD. I already know that today, since I'm doing the mobo and CPU imminently, and the GPU when the current market squeeze is done and I can pay RRP/MSRP.

Happy days ahead!