And the world has been accused of being flat by Nobel Prize-winning physicists. That doesn't make it true. Doesn't make it false either.

*shrug*

> There is a big problem here: chips can no longer scale the way they previously have, and we have to do something else to make a difference.
>
> The path you are suggesting, sticking to single-core rendering and not looking for alternatives, amounts to creating a world where there's no longer a reason to upgrade your CPU, because Intel can't make anything faster and AMD is still catching up in single-threaded performance.

But as I'm trying to point out, this is the exact same argument that was made back in the late '70s in favor of distributed computing (really, multi-CPU mainframes). OSes were developed with cooperatively scheduled threading to give developers fine control over application performance, and significant amounts of time and money were spent developing hard-coded assembly to take advantage of those features.

FYI, it didn't work: it was found that after a handful of physical CPUs, performance gains fell off a cliff. And this is even more of an issue now, since a preemptively scheduled OS gives you even less control over threading, so you'd expect less scaling than on a cooperatively scheduled OS.
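The post doesn't name it, but the standard textbook model for this kind of falloff is Amdahl's law. As a rough worked example, assume a fraction $p$ of the run time parallelizes perfectly across $n$ cores and the rest stays serial (the values of $p$ and $n$ below are illustrative assumptions, not measurements of any real workload):

$$
S(n) = \frac{1}{(1-p) + \dfrac{p}{n}}, \qquad \lim_{n \to \infty} S(n) = \frac{1}{1-p}
$$

With $p = 0.9$: $S(4) = 1/(0.1 + 0.225) \approx 3.08$, $S(16) = 1/(0.1 + 0.05625) = 6.4$, and no number of cores gets past $10\times$. Going from 4 to 16 cores quadruples the hardware but only roughly doubles the speedup, and that's before counting any locking or scheduling overhead.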

As I've said, there are things that can scale reasonably well. Rendering, obviously, which has been done in parallel for 15 or so years now. Physics, which we see being offloaded from the CPU. You could do AI too. But then you have to manage those threads, keeping the right ones running and keeping the state sane (remember: you don't control which threads run when), so you need some type of locking scheme (locks, mutexes, semaphores, etc.) OR you perform optimistic processing with a VERY high worst-case cost.
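To make the two options concrete, here is a minimal Python sketch. The class names and the `hammer` driver are hypothetical, and a shared counter stands in for real game state. The first class takes a lock for every update; the second snapshots the state, does its work without holding the lock, and only commits if no other thread committed first, retrying otherwise. That retry loop is where the worst case lives: under heavy contention a thread can redo its work many times.

```python
import threading


class LockedState:
    """Pessimistic locking: every update holds the lock for its whole duration."""

    def __init__(self):
        self._lock = threading.Lock()
        self.value = 0

    def add(self, amount):
        with self._lock:
            self.value += amount


class OptimisticState:
    """Optimistic processing: snapshot, work without the lock, then commit only
    if no other thread committed in between; otherwise discard and retry."""

    def __init__(self):
        self._lock = threading.Lock()  # held only for the brief snapshot and commit steps
        self.value = 0
        self.version = 0
        self.retries = 0  # number of discarded attempts (the wasted work)

    def add(self, amount):
        while True:
            with self._lock:
                seen_version, seen_value = self.version, self.value
            new_value = seen_value + amount  # stand-in for expensive work done lock-free
            with self._lock:
                if self.version == seen_version:  # nobody committed since our snapshot
                    self.value = new_value
                    self.version += 1
                    return
                self.retries += 1  # conflict: throw the result away and start over


def hammer(state, threads=4, per_thread=1000):
    """Hypothetical driver: several threads update the same shared state."""

    def worker():
        for _ in range(per_thread):
            state.add(1)

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return state.value


if __name__ == "__main__":
    optimistic = OptimisticState()
    print(hammer(LockedState()), hammer(optimistic), optimistic.retries)
```

Both versions end with the same count; the difference is where the cost goes. The locked version makes threads wait, while the optimistic version lets them run and pays for conflicts in `retries`, which grows with contention. Note that CPython's global interpreter lock means these threads don't execute the arithmetic in parallel, so this illustrates the coordination pattern rather than a speedup.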

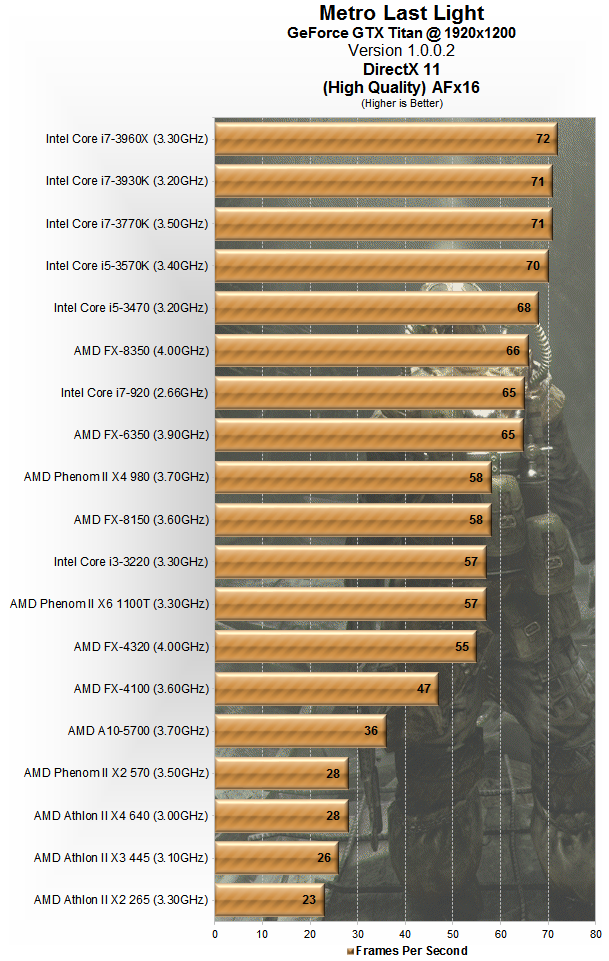

The issue I have with AMD isn't that they are going to more cores, it's that they went to more cores while reducing per-core performance. Doubling the cores is pointless if the competitor's cores end up being 50% more powerful [remember: non-perfect scaling + overhead].
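One rough way to check the "doubling the cores" arithmetic is to multiply per-core speed by an Amdahl's-law speedup. This is a simplified sketch under stated assumptions: a perfectly parallel fraction `p`, zero threading overhead, and two hypothetical configurations (8 cores at relative speed 1.0 versus 4 cores at 1.5). Real chips differ in far more than these two numbers.

```python
def amdahl_speedup(p: float, cores: int) -> float:
    """Speedup over one core when a fraction p of the work parallelizes perfectly."""
    return 1.0 / ((1.0 - p) + p / cores)


def throughput(per_core_speed: float, cores: int, p: float) -> float:
    """Relative performance: single-core speed times the Amdahl speedup (no overhead modeled)."""
    return per_core_speed * amdahl_speedup(p, cores)


if __name__ == "__main__":
    for p in (0.5, 0.9, 0.95):
        more_cores = throughput(1.0, 8, p)    # hypothetical: twice the cores, baseline per-core speed
        faster_cores = throughput(1.5, 4, p)  # hypothetical: half the cores, each 50% faster
        print(f"p={p:.2f}  8 x 1.0 -> {more_cores:.2f}   4 x 1.5 -> {faster_cores:.2f}")
```

Under these assumptions, the faster cores win outright for a half-parallel workload (2.40 vs 1.78), the two are roughly even at $p = 0.9$ (4.62 vs 4.71), and the extra cores only pull clearly ahead once nearly all the work parallelizes (5.22 vs 5.93 at $p = 0.95$). An overhead term that grows with thread count would shift the balance further toward the fewer, faster cores.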

The situation with the BD arch has not changed: it lags behind current mid-tier i5 chips even in the BEST case. But I still see plenty of people (wrongly) claiming that an FX-6350 beats an i5-3570K in gaming (it doesn't), or making the old BD argument that "it will get better over time" (it hasn't).

And I was one of two or three people here who called this back in 2009, based SOLELY on my knowledge of how the OS and software work, without even bothering to look at the details of the BD arch. Remember, I said BD would be a benchmark monster, but in games it would deliver low-tier i5 performance? I nearly got laughed out of the forums.

My primary point is that you aren't going to see continual scaling as you add more cores, so that approach, much like increasing IPC and clock speed, is going to come to an end. There's only so much you can do with the way CPUs are currently designed. Maybe quantum computing will change things, but I really think x86 is nearing its limit as a processor architecture.