> As I said, it makes no difference. You can say whatever you want... I am telling you my experience.

Repeating it doesn't make it real. 1) You are not even using the technology as recommended; 2) you don't seem to understand which mode to use in which situation and, as a result, your arguments are just plain wrong; and 3) you don't seem to be aware of ULMB and have never even turned it on, so how can you speak from "experience"?

> Don't believe everything you read.

I don't. And repeating it three times without offering any documentation or support doesn't make the published results any less valid.

> Investing money into an expensive 144 Hz monitor and having a $150+ card is rather stupid.

No mention was made of $150 cards, and 144 Hz is outdated tech and not all that expensive. I have a 3-year-old 144 Hz Asus here in the office that cost me $209, and I am running it with MBR (motion blur reduction).

> I don't know what tests you were reading

Again, we see the source of the problem: not reading. The source was stated in the post.

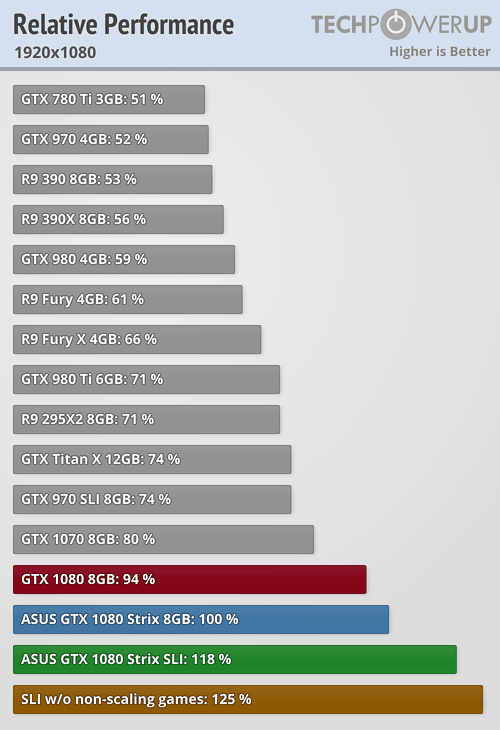

18% at 1080p across the 17-game test suite

33% at 1440p across the 17-game test suite

https://www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080_SLI/20.html

> I don't know what tests you were reading since everyone is using Win10 to test SLI

We don't use Win 10; we have yet to do a Win 10 build for anyone.

> Windows 7 SLI on 1080 scales ~70%, in some games 90% like Battlefield

Yes, I said that. But it's games like Tomb Raider that actually get into the 90% range, and that's on 9xx-series cards, not 10xx.

Battlefield 4 = 71%

https://www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080_SLI/7.html

Battlefield 3 = 32%

https://www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080_SLI/6.html

But this statement only reinforces the failure to correctly use the technology.

G-Sync and FreeSync are intended for games running at 40 fps (30 fps for nVidia) to 75 fps. Read that again. Now let's look at what you are doing:

BF3 w/ 1080 SLI = 198.5 fps. G-Sync / FreeSync has no applicability here.

This is where, if you have nVidia G-Sync, you turn off G-Sync and use ULMB via the hardware module installed in the monitor. Read that again and try to get an understanding of the technology involved.

https://www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080_SLI/6.html

BF4 w/ 1080 SLI = 182.0 fps. G-Sync / FreeSync has no applicability here.

This is where, if you have nVidia G-Sync, you turn off G-Sync and use ULMB via the hardware module installed in the monitor. Read that again and try to get an understanding of the technology involved.

https://www.techpowerup.com/reviews/NVIDIA/GeForce_GTX_1080_SLI/7.html

You paid a decent cost premium for that G-Sync monitor, more than you would have for FreeSync, because G-Sync addresses the full spectrum of potential experience: it covers the 30 to 75 fps range with G-Sync and the > 75 fps range with ULMB. That cost premium got you an expensive hardware module whose ULMB mode you have never turned on.
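The rule of thumb above can be written as a tiny decision function. This is a minimal sketch, assuming a G-Sync monitor with ULMB and the ranges stated in this post (G-Sync from 30 to 75 fps, ULMB above 75 fps); the helper name `pick_mode` and the exact cut-offs are hypothetical, and real panels have their own supported ranges.

```python
def pick_mode(avg_fps: float) -> str:
    """Suggest a display mode for a G-Sync + ULMB monitor.

    Hypothetical helper: thresholds follow the ranges quoted in this post,
    not any vendor specification.
    """
    GSYNC_MIN_FPS = 30  # lower end of the G-Sync window quoted above
    GSYNC_MAX_FPS = 75  # upper end of the window quoted above
    if avg_fps > GSYNC_MAX_FPS:
        # High frame rates: turn G-Sync off and use the strobed backlight.
        return "ULMB"
    if avg_fps >= GSYNC_MIN_FPS:
        return "G-Sync"
    # Below the window, G-Sync does not cover the frame rate.
    return "below G-Sync range"


for game, fps in [("BF3, 1080 SLI", 198.5), ("BF4, 1080 SLI", 182.0)]:
    print(f"{game}: {fps} fps -> {pick_mode(fps)}")
```

Both Battlefield SLI results land well above 75 fps, so under these assumptions the sketch picks ULMB for each.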

The ranting is akin to screaming at the car salesman that you got stuck in the snow with your Jeep Rubicon and that the 4WD technology "makes no difference". For it to make a difference, you have to put it in the appropriate mode for the terrain in question. Regular 4WD is great for driving on paved roads or in light snow, but when you are "stuck" in deep snow, you have one wheel spinning and the other three doing squat. Until you put it in "low locked hub" mode, that will continue to happen. Put it in the correct mode and all 4 wheels will spin and you will get out; once you are out and back on more drivable surfaces, you take it out of "low locked hub" mode and put it back in regular 4WD (really AWD) mode. Don't blame the car manufacturer because you elected not to use the available capabilities of the technology.

Similarly, use the appropriate monitor technology for the situation. I again suggest that you go back and do more reading, specifically the linked tftcentral article, to learn when and how to use the technology in front of you. Going back to SLI, if Battlefield is your yardstick, I can hardly see the value of an investment in SLI. I just don't see the value of a $600 investment so I can go from 106 fps to 182 fps, especially when a 144 Hz monitor can't display 182 fps, and with one card I have the option of running that 144 Hz monitor at 100 Hz in ULMB mode. I can do that with one card just as well as with two.
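The arithmetic behind that paragraph, as a rough sketch. It assumes the figures quoted in this post (106 fps on one card, 182 fps with SLI, a $600 second card, a 144 Hz panel, 100 Hz in ULMB mode), treats the refresh rate as a hard cap on frames actually displayed, and assumes ULMB works best when the frame rate at least matches the strobe rate. The helper names are hypothetical.

```python
def sli_scaling_pct(single_fps: float, sli_fps: float) -> float:
    """Percentage gain of the SLI result over a single card."""
    return (sli_fps / single_fps - 1.0) * 100.0


def displayed_fps(render_fps: float, refresh_hz: float) -> float:
    """Frames the panel can actually show (assumption: capped at refresh rate)."""
    return min(render_fps, refresh_hz)


SINGLE_FPS, SLI_FPS = 106.0, 182.0   # BF4 figures quoted in this post
SECOND_CARD_COST = 600.0             # cost of the second card, per the post
REFRESH_HZ, ULMB_HZ = 144.0, 100.0   # native refresh and the ULMB setting

scaling = sli_scaling_pct(SINGLE_FPS, SLI_FPS)
visible_gain = displayed_fps(SLI_FPS, REFRESH_HZ) - displayed_fps(SINGLE_FPS, REFRESH_HZ)

print(f"SLI scaling: {scaling:.1f}%")
print(f"Extra frames the 144 Hz panel can show: {visible_gain:.0f}")
print(f"Cost per visible extra frame: ${SECOND_CARD_COST / visible_gain:.2f}")
# Assumption: ULMB benefits most when fps >= strobe rate.
print(f"One card keeps up with 100 Hz ULMB: {SINGLE_FPS >= ULMB_HZ}")
```

Under these assumptions the ~71.7% scaling lines up with the 71% BF4 figure cited earlier, but only 38 of the extra 76 frames can reach the screen at 144 Hz, which works out to roughly $15.79 per visible frame.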