Well, I included cards up to the 6700 XT just for kicks, so that the list wasn't four super low-end GPUs and nothing else. It's good to see what you can get for more money. I could have cut it off at the 6600 and 3050, but that wouldn't change the performance offered.

As for integrated graphics, I

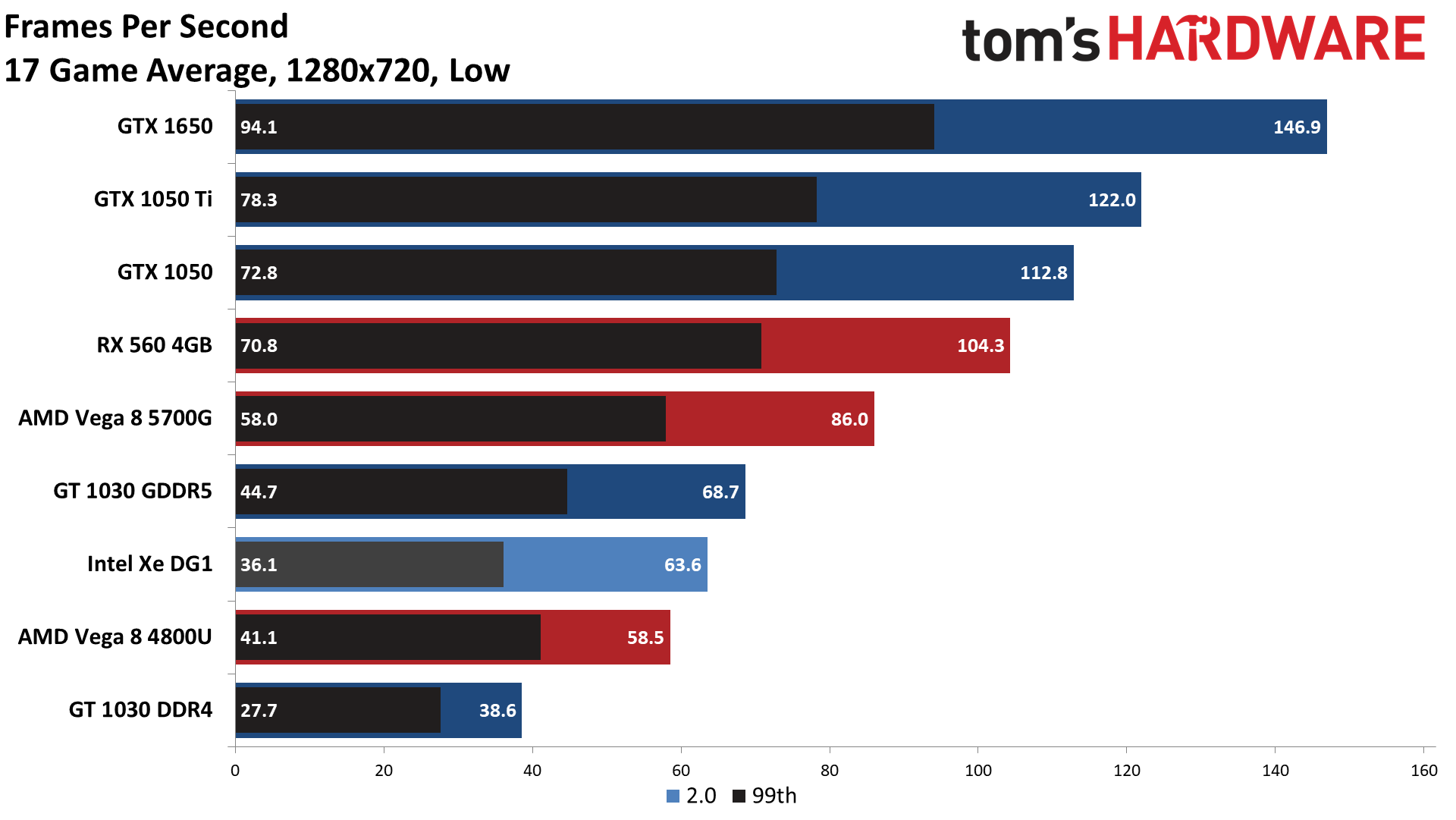

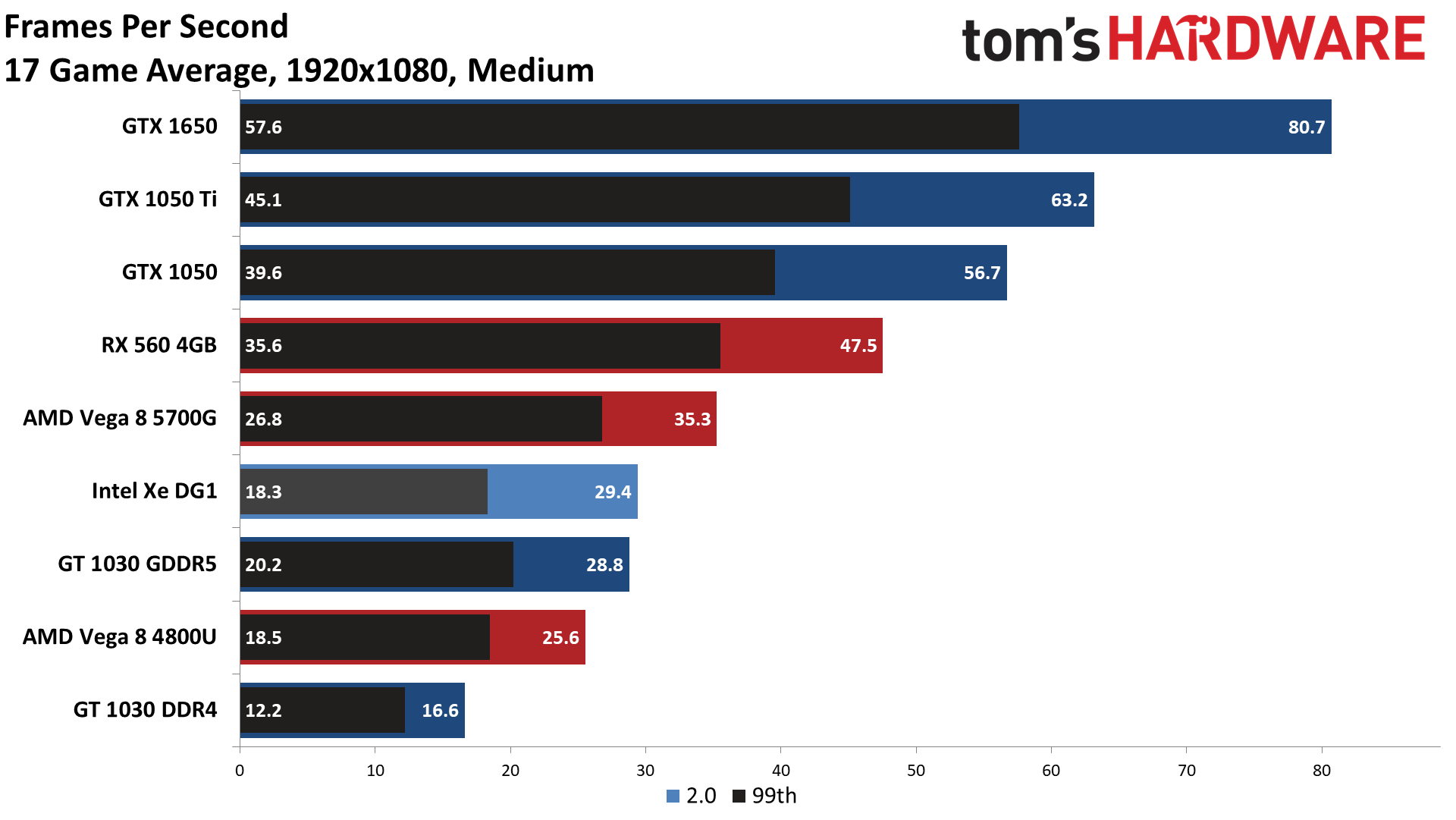

tested Intel's Xe DG1 last year and compared it to GTX 1650, 1050 Ti, 1050, RX 560, Ryzen 5700G, GT 1030, and a Ryzen 4800U. So up until Rembrandt and the Ryzen 6000-series mobile chips launched, that was the best we could get for integrated. Here are the results:

At 720p, the 5700G integrated graphics was 41% slower than a GTX 1650. At 1080p, it was 56% slower. Even the RX 6400 easily outclasses current integrated graphics. We'll see what AMD does with Ryzen 7000 RDNA2 graphics and Zen 4 later this year, but I suspect AMD probably won't put a ton of CUs into the desktop parts. If it does go as high as 12 CUs and a 105W TDP, that should be quite close to the GTX 1650 in performance, I think.
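
For anyone who wants to turn the "41% slower" and "56% slower" figures into ratios, here's a quick sketch. It assumes "X% slower" means the iGPU delivers (100 - X)% of the GTX 1650's average frame rate; the function names are just made up for illustration.

```python
def relative_performance(percent_slower: float) -> float:
    """Fraction of the baseline's performance, assuming 'X% slower'
    means the card delivers (100 - X)% of the baseline frame rate."""
    return 1.0 - percent_slower / 100.0


def baseline_speedup(percent_slower: float) -> float:
    """How many times as fast the baseline is versus the slower part."""
    return 1.0 / relative_performance(percent_slower)


# Figures from the post: 5700G integrated graphics vs. GTX 1650
for resolution, pct in (("720p", 41), ("1080p", 56)):
    rel = relative_performance(pct)
    speedup = baseline_speedup(pct)
    print(f"{resolution}: iGPU at {rel:.2f}x of the GTX 1650, "
          f"so the 1650 is about {speedup:.2f}x as fast")
```

Under that assumption, the GTX 1650 works out to roughly 1.7x the 5700G at 720p and about 2.3x at 1080p, which shows how much the gap widens at the higher resolution.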