AMD has expanded the ROCm GPGPU software stack to support consumer Radeon graphics cards and Windows.

AMD ROCm Comes To Windows On Consumer GPUs : Read more

> AMD introduced Radeon Open Compute Ecosystem (ROCm) in 2016 as an open-source alternative to Nvidia's CUDA platform.

CUDA is primarily an API. AMD has a clone called HIP (Heterogeneous-computing Interface for Portability), which runs atop multiple hardware/software platforms, including Nvidia GPUs, and there's allegedly even a port to Intel's oneAPI. HIP only supports AMD GPUs atop ROCm, which is why ROCm support for consumer GPUs is important.

I'd rather nobody use any API that is "Vendor Specific".

AMD's wish is that people would use HIP, instead of CUDA. Then, apps would seamlessly run on both Nvidia and AMD's GPUs. There are other GPU Compute APIs, such as OpenCL and WebGPU, although they lack some of CUDA's advanced features and ecosystem.

With DirectCompute, you're just trading GPU-specific for platform-specific. That's not much progress, IMO.

That's why OpenCL & DirectCompute exist.

There really isn't one Open-Source/Platform-Agnostic GPGPU Compute API that meets all those requirements, is there?

Also, from what I can tell, DirectCompute is merely using compute shaders within Direct3D. It doesn't appear to be its own API. I'd speculate they're not much better or more capable than OpenGL compute shaders.

You didn't mention Vulkan Compute, which is another whole can of worms. At least it's portable and probably more capable than compute shaders in either Direct3D or OpenGL. What it isn't, unlike OpenCL, is suitable for scientific-grade or probably even financial-grade accuracy.
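To put the accuracy point in concrete terms, here is a minimal sketch of how rounding error piles up in single precision compared with double precision. It is plain CPU-side Python and says nothing about what Vulkan or OpenCL actually guarantee; the helper names (`to_f32`, `accumulate`) and the step count are made up for illustration.

```python
import struct

def to_f32(x: float) -> float:
    """Round a Python float (binary64) to the nearest binary32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def accumulate(step: float, n: int, single: bool) -> float:
    """Add `step` to a running total n times, optionally rounding the
    step and every partial sum to single precision."""
    s = to_f32(step) if single else step
    total = 0.0
    for _ in range(n):
        total += s
        if single:
            total = to_f32(total)
    return total

N = 100_000            # arbitrary step count, chosen only for illustration
exact = N * 0.1        # reference value
err32 = abs(accumulate(0.1, N, single=True) - exact)
err64 = abs(accumulate(0.1, N, single=False) - exact)

print(f"float32 accumulated error: {err32:.6f}")
print(f"float64 accumulated error: {err64:.3e}")
```

The single-precision total drifts visibly while the double-precision one stays close to the reference, which is the sort of gap that makes precision guarantees matter for scientific or financial work.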

OpenCL has the precision and the potential, but big players like Nvidia and AMD no longer see it as central to their success in GPU Compute, the way they see D3D and Vulkan as essential to success in the gaming market. Intel is probably the biggest holdout in the OpenCL market. It forms the foundation of their oneAPI.

One of the upsides I see from the Chinese GPU market is that it's probably coalescing around OpenCL. We could suddenly see it re-invigorated. Or, maybe they'll turn their focus towards beefing up Vulkan Compute.

Oh, and WebGPU is another standard to keep an eye on. It's the web community's latest attempt at a GPU API for both graphics and compute workloads. Web no longer means slow - WebAssembly avoids the performance penalties associated with high-level languages like JavaScript. And you can even run WebAssembly apps outside of a browser.

#927 !

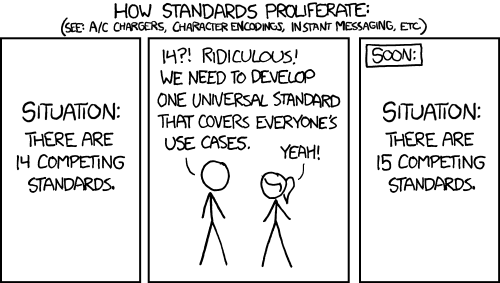

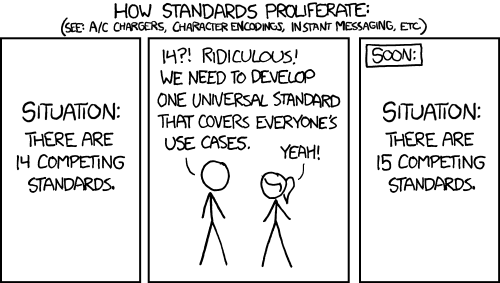

Why does this feel like another XKCD moment?

> Too little, too late? I've been waiting for ROCm on Windows since launch - it's been a mess. I've been wanting to play around with it first on my RX470 and now on my RX6800XT.
>
> Intel's OneAPI seems to be the best way forward right now as far as open solutions go.

It's never too late for competition.

I'm sure some asses have been getting kicked at AMD for missing out on so much of the AI boom. I hope this is finally resulting in them getting the needed resources for their compute stack.

To me the original HSA seemed the far more attractive way to go, which culminated in the (alleged?) ability to change control flow from the CPU part of an APU or SoC to GPU or xPU at the level of a function call, offering a really fine-grained, near-zero-overhead control transfer, instead of heavy APIs with bounce buffers or perhaps even cache flushes.

I don't understand how that would work. You don't do such things for multi-threaded programming on a CPU. Yeah, you could theoretically trigger an interrupt on the GPU and interrupt whatever it's doing to have it switch to running the new function you're calling, but what if it was already running a function called by another CPU thread?

> I even bought an AMD Kaveri APU to play with that

I had the same idea, though I was more interested in OpenCL than HSA. Fortunately, I never got around to ordering it, though I think I got as far as spec'ing out all the parts.

> drinking nearly 100 Watt for a job that an i5-6267 Iris 550 equipped SoC from Intel could do at exactly the same speed for 1/3 of the power budget (I benchmarked them extensively as I had both for years and it's quite fascinating how closely they were always matched in performance,

100 W at the wall, or CPU self-reported? I'm impressed it was able to match a Skylake, at all. The fact that you compared it against a mobile CPU meant both that the Skylake had an efficiency advantage (due to lower clocks), but also a decent performance penalty.

> In a way HSA is finding its way back via all those ML-inference driven ISA extensions,

Not really. CPU cores are never as efficient as GPU cores, for a whole host of reasons.

I'm fuzzy on the details, most likely because it never became a practical reality.

Just like we do for multi-threaded programming on CPUs, I think a GPU API will always be buffer-based. The key thing is that the GPU be cache-coherent, and that's what saves you having to do cache flushes. It lets you communicate through L3, just like you would between CPU threads.

Mostly self-reported but also checked at the wall. And yes, the 22nm Intel Skylake process using 2 cores and SMT at 3.1 GHz was able to match almost exactly 4 AMD Integer cores (with 2 shared FPUs) at 4 GHz on 28nm GF.

There are *so many* really interesting memory-mapped architectures out there, both historical and even relatively recent, which act like memory to ordinary CPUs whilst they compute internally. The first I remember were Weitek 1167 math co-processors, which were actually designed to be ISA-independent (and supposed to also work with SPARC CPUs, I believe). You'd fill the register file by writing to one 64K memory segment (typically on an 80386) and then give it instructions by writing bits to another segment. You could then get results by reading from the register file segment.
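For anyone who hasn't seen this style of interface, here is a toy model of the write-operands, write-command, read-result flow described above. Everything specific in it (the two address windows, the 16-register file, the command word layout) is an assumption made up for illustration, not the Weitek 1167's real memory map or encoding.

```python
class ToyMappedCoprocessor:
    """Toy coprocessor driven purely by loads and stores.

    The address windows and command encoding are hypothetical.
    """
    REG_BASE = 0x0000    # window A: register file, one value per address
    CMD_BASE = 0x1000    # window B: storing a word here issues a command
    NUM_REGS = 16
    OP_ADD, OP_MUL = 0, 1

    def __init__(self):
        self.regs = [0.0] * self.NUM_REGS

    @staticmethod
    def encode(op, dst, a, b):
        """Pack a command word: op | dst | src a | src b, 4 bits each."""
        return (op << 12) | (dst << 8) | (a << 4) | b

    def store(self, addr, value):
        """What the host CPU sees as an ordinary memory write."""
        if self.REG_BASE <= addr < self.REG_BASE + self.NUM_REGS:
            self.regs[addr - self.REG_BASE] = float(value)
        elif addr == self.CMD_BASE:
            self._execute(int(value))
        else:
            raise ValueError(f"unmapped address {addr:#x}")

    def load(self, addr):
        """What the host CPU sees as an ordinary memory read."""
        if self.REG_BASE <= addr < self.REG_BASE + self.NUM_REGS:
            return self.regs[addr - self.REG_BASE]
        raise ValueError(f"unmapped address {addr:#x}")

    def _execute(self, word):
        # Decode the four 4-bit fields of the (hypothetical) command word.
        op, dst = (word >> 12) & 0xF, (word >> 8) & 0xF
        a, b = (word >> 4) & 0xF, word & 0xF
        if op == self.OP_ADD:
            self.regs[dst] = self.regs[a] + self.regs[b]
        elif op == self.OP_MUL:
            self.regs[dst] = self.regs[a] * self.regs[b]
        else:
            raise ValueError(f"unknown opcode {op}")


fpu = ToyMappedCoprocessor()
fpu.store(fpu.REG_BASE + 0, 3.0)                    # fill operand registers
fpu.store(fpu.REG_BASE + 1, 4.0)
fpu.store(fpu.CMD_BASE, fpu.encode(fpu.OP_MUL, 2, 0, 1))  # issue r2 = r0 * r1
product = fpu.load(fpu.REG_BASE + 2)                # read the result back
print(product)
```

The point is that the host never executes a special instruction: every interaction is a plain store or load to an address the device happens to decode.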

AMX is an interesting case, but that's really just a special-purpose matrix-multiply engine that Intel bolted on. Oh, and the contents of its tile registers can't be accessed directly by the CPU - they go to/from memory. So, even with AMX, you're still communicating data via memory, even if the actual commands take the form of CPU instructions.
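A rough sketch of that distinction, using a toy tile engine rather than real AMX: commands are method calls (standing in for CPU instructions), but the only way data gets into or out of a tile is via a memory address. The class, its method names and the 2x2 tile size are all hypothetical and are not Intel's instruction set.

```python
class ToyTileUnit:
    """Toy matrix engine whose tile registers are reachable only via memory.

    Names and sizes are invented for illustration; this is not AMX.
    """

    def __init__(self, memory, n=2, num_tiles=3):
        self.mem = memory          # flat list standing in for RAM/cache
        self.n = n                 # tiles are n x n
        self._tiles = [[[0] * n for _ in range(n)] for _ in range(num_tiles)]

    def tile_load(self, t, addr):
        """Fill tile t from n*n consecutive memory elements (row-major)."""
        for r in range(self.n):
            base = addr + r * self.n
            self._tiles[t][r] = list(self.mem[base:base + self.n])

    def tile_zero(self, t):
        self._tiles[t] = [[0] * self.n for _ in range(self.n)]

    def tile_mac(self, dst, a, b):
        """dst += a @ b, computed entirely inside the unit."""
        A, B, D = self._tiles[a], self._tiles[b], self._tiles[dst]
        for i in range(self.n):
            for j in range(self.n):
                D[i][j] += sum(A[i][k] * B[k][j] for k in range(self.n))

    def tile_store(self, t, addr):
        """Write tile t back to memory; the only way results leave the unit."""
        for r in range(self.n):
            base = addr + r * self.n
            self.mem[base:base + self.n] = self._tiles[t][r]


mem = [1, 2, 3, 4,      # A at address 0
       5, 6, 7, 8,      # B at address 4
       0, 0, 0, 0]      # C at address 8
unit = ToyTileUnit(mem)
unit.tile_load(0, 0)
unit.tile_load(1, 4)
unit.tile_zero(2)
unit.tile_mac(2, 0, 1)
unit.tile_store(2, 8)
print(mem[8:12])
```

Note that the host code never reads a tile directly; it only sees the result after `tile_store` puts it at an address.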

> my understanding was that quite literally it worked via an alternate interpretation of all instruction encodings and the APU would flip between an x86 and a "GPGPU" ISA on calls, jumps or returns.

No, absolutely not. There's no way a GPU was executing x86. That'd be insane, on so many levels.

> the overhead of switching was absolutely negligible, nothing nearly as expensive as an interrupt,

Interrupts are traditionally pretty cheap. According to this, they're only a couple microseconds:

> it became quite obvious that it wouldn't do much good with discrete GPUs, even if both were to come from AMD, as they rarely shared the same memory space and even with an Infinity Fabric HBM/GDDRx/DRAM bandwidth and latency gaps would quickly destroy the value of such a super-tight HSA.

I think the reason HSA fizzled is just for lack of interest, within the industry.

> Buffer based, yes, but generally without having to copy and flush

Probably because of HSA, AMD GPUs have supported cache-coherency with the host CPU, since Vega, if not before.

All Skylakes were 14 nm. It's Haswell (2013) that was 22 nm.

> That and a Phenom II x6 threw me off AMD after a long love affair that had started with a 100MHz Am486 DX4-100, I think, and lasted many generations (I came back for Zen3).

I had a Phenom II x2 that served me well. Never had any issues with it, actually. It was a Rev. B, however. In fact, I recently bought a Zen 3 to replace it.

> There are *so many* really interesting memory mapped architectures out there,

No, read the ISA for it. It's only 8 instructions. The way tiles are read & written is by loading or saving them from/to a memory address. That's an example of communicating through memory, not memory-mapped hardware.

Here is the report I still had in my mind from AnandTech: https://www.anandtech.com/show/7677/amd-kaveri-review-a8-7600-a10-7850k/6

HSA defined a portable assembly language which is compiled to native GPU code at runtime. Calling that function should then package up your arguments, ship them over to the GPU block, and tell the GPU to invoke its version of the function.

Once complete, it packages up the results and ships them back, likely with an interrupt to let you know it's done. If they're smart, they won't send an interrupt unless you requested it.
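The package, ship, invoke, notify flow described above can be sketched roughly like this. A worker thread stands in for the GPU block and a Python callable stands in for the finalized native kernel; `ToyDevice`, the packet fields and the `saxpy` kernel are all invented for illustration and are not the HSA runtime API.

```python
import queue
import threading

class ToyDevice:
    """Toy stand-in for a GPU block fed through a dispatch queue."""

    def __init__(self, kernels):
        self.kernels = kernels              # name -> callable ("native code")
        self._queue = queue.Queue()
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def dispatch(self, name, *args, on_complete=None):
        """Package the arguments into a packet and ship it to the device."""
        packet = {
            "kernel": name,
            "args": args,
            "result": None,
            "done": threading.Event(),      # completion signal the host can wait on
            "on_complete": on_complete,     # optional "interrupt"-style callback
        }
        self._queue.put(packet)
        return packet

    def _run(self):
        while True:
            packet = self._queue.get()
            if packet is None:              # shutdown marker
                break
            packet["result"] = self.kernels[packet["kernel"]](*packet["args"])
            packet["done"].set()
            # Only notify the host if it asked to be notified.
            if packet["on_complete"] is not None:
                packet["on_complete"](packet["result"])

    def shutdown(self):
        self._queue.put(None)
        self._worker.join()


def saxpy(a, x, y):
    return [a * xi + yi for xi, yi in zip(x, y)]

device = ToyDevice({"saxpy": saxpy})
notified = []

p1 = device.dispatch("saxpy", 2.0, [1.0, 2.0], [10.0, 20.0])
p2 = device.dispatch("saxpy", 1.0, [1.0], [1.0], on_complete=notified.append)

p1["done"].wait()       # host waits on the signal instead of taking a callback
p2["done"].wait()
device.shutdown()       # after this, all callbacks have run

print(p1["result"], notified)
```

The first dispatch is completed by waiting on its signal, the second by the optional callback, which mirrors the "no interrupt unless you requested it" idea.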

Yes, yes, WoSch: around 1989 he officially supervised my diploma thesis at TU-Berlin/GMD-First (I ported X11R4 to AX on a TMS32016 and a Motorola 68030).

> If you're making enough calls for that overhead to be significant, then you're probably doing it wrong.

With Bulldozer the idea was that AMD could let go of their 8087/MMX type FPUs, because they had many more GPU cores capable of way bigger floating point compute. But that only works when the applications are converted to do all of their floating point stuff via HSA, and there the tight integration really allowed doing that at the level of a single loop: you weren't restricted to an entire ML kernel, which is the level of granularity you have with CUDA these days.

Software ecosystems, how they come about and why they flounder, are incredibly interesting to study, but unfortunately that doesn't mean anyone gets better at creating successful ones 🙂

You caught me there, I *was* going to put 14nm but fell for not checking my sources first (stupid me!)

I think Intel supported it since Broadwell, which was the first to support OpenCL's Shared Virtual Memory.

The Micron AP processor was meant to achieve an order-of-magnitude improvement in how much computing you could do given the memory barrier of general purpose compute. The idea was to insert a small bit of logic into what were essentially RAM chips, and to avoid spending all of the DRAM chip's budget on transferring gigabytes of data, when only relatively light processing was required on it.

I was very careful to say "memory address", because AMX obviously goes through the same cache hierarchy as the rest of the CPU. So, you're not necessarily bottlenecked by DRAM, since the data could still be in cache.

Please zoom out to 10,000 ft above ground ;-)

The way AMX is probably used, in practice, is to process a chunk of data where the output is still small enough to remain in L2 cache, then they use either AMX or AVX-512 to do the next processing stage.

Doesn't really have anything to do with AMX, but still an interesting post. Thanks for taking the time to type it out.

> Lots of caches have allowed returning to a partial Harvard (RISC) architecture, which separated code from data at least within them.

It took me a while to notice that, but separate L1 caches for code & data really are a partial form of Harvard Architecture.