Review AMD Ryzen 9 7950X3D Review: AMD Retakes Gaming Crown with 3D V-Cache

- Status

- Not open for further replies.

Maebius

Splendid

mdbrotha03

Distinguished

I also did the same upgrade and noticed better performance in games. The SAME can be said about going from an Intel DDR4-based computer to an Intel DDR5-based computer, as far as RAM is concerned.

Just did a quick price check with a 7950X, an Asus Strix X670E-E Gaming, a 13900K, an Asus Strix Z790-E Gaming, and the same Corsair 5600 MHz CL36 RAM (could use faster RAM, but still).

RAM: same price. Face it, very few would buy a new system and use DDR4 on the Intel system. Boards: for this board, the AM5 version was the SAME price as the Intel version, so that argument is FALSE. CPUs: the 7950X is $150 more, so that is true. So based on regular prices, you are HALF right.

BUT currently everything I have listed is on sale, and taking that into account the AMD system is less expensive by 10 bucks. This isn't factoring in anything else in the system, like cooling for example.

And that's half of his argument, it seems. I doubt many would buy a 13900K and pair it with the DDR4 Intel platform; that is just asking for another upgrade in 1-2 years for just the board and CPU, and at that time, may as well just upgrade the CPU as well.

Heh, I went from a 3900X to a 5900X about a year after getting the 3900X, and saw quite the performance bump in transcoding with HandBrake: a Blu-ray went from about 60 minutes, I think it was, to about 30 minutes.

You are forgetting the cost of pci-e Gen 5, on top of the ridiculous inflation we are experiencing.

No, I don't see that as relevant. Don't people know that PCIe is backwards compatible? Just because it supports PCIe5 doesn't mean you are required to run PCIe5 NVMe's etc. I have a PCIe4-supporting mboard and I am running a PCIe3 and a PCIe4 NVMe drive simultaneously. It's just like the PCIe mode for the GPU is PCIe 4--but it'll run PCIe3 GPUs just fine. Every motherboard autoconfigures to the PCIe mode of the device inserted into the slot--user intervention is not required (you don't have to set it in the BIOS--it configures itself automatically.)

I've seen this strange notion in other places and found it bizarre...where do people get these ideas? Supporting PCIe5 doesn't mean you are restricted to PCIe5--or PCIe4, either. The PCIe standard is backwards compatible. It's like system RAM--if DDR5 6000 is supported, that doesn't mean you are required to run DDR5 RAM rated at that speed.
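As a rough illustration of the backwards compatibility described above, the link a slot and a device end up with can be modeled as the lower of the two supported generations and lane counts. This is a simplified sketch, not how link training is actually implemented; `negotiated_link` is a hypothetical helper, and the per-lane figures are approximate usable bandwidth per direction.

```python
# Approximate usable bandwidth per lane, per direction, in GB/s.
GBPS_PER_LANE = {1: 0.25, 2: 0.5, 3: 0.985, 4: 1.969, 5: 3.938}


def negotiated_link(slot_gen, device_gen, slot_lanes, device_lanes):
    """Simplified model: both ends fall back to what the weaker side supports."""
    gen = min(slot_gen, device_gen)
    lanes = min(slot_lanes, device_lanes)
    bandwidth = GBPS_PER_LANE[gen] * lanes
    return gen, lanes, bandwidth


# A PCIe 3.0 x4 NVMe drive in a PCIe 5.0 x4 slot runs as PCIe 3.0 x4.
gen, lanes, bandwidth = negotiated_link(5, 3, 4, 4)
print(f"PCIe {gen}.0 x{lanes}, about {bandwidth:.2f} GB/s")
```

In this model the slot's higher generation is simply unused headroom, which is the point being made about not needing PCIe5 devices on a PCIe5 board.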

But in the end the only way I found to make fast code was to assume x86 integer encoding, which probably doesn't run on ARM (most phones).

I assume you're talking about little endian (i.e. lowest-valued bytes at lowest address). Although I think ARM is bi-endian, Android on ARM runs it in little endian mode. Even iOS runs in little endian, and Apple had its roots in big endian Motorola 68k. Pretty much every Linux distro, even on IBM POWER processors (which are also bi-endian), runs in little endian.
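To make the byte-order point concrete, here is a small sketch that packs the same 32-bit integer in explicit little-endian and big-endian order and compares both with the host's native order. It is not the original poster's code; `read_u32_le` is a hypothetical helper showing one way to avoid depending on the host layout at all.

```python
import struct
import sys

value = 0x12345678

native = struct.pack("=I", value)  # host byte order, standard size
little = struct.pack("<I", value)  # lowest-valued byte at the lowest address
big = struct.pack(">I", value)     # highest-valued byte at the lowest address

print("host byte order:", sys.byteorder)
print("little endian:  ", little.hex())
print("big endian:     ", big.hex())


def read_u32_le(buf, offset=0):
    """Decode a little-endian u32 regardless of the host's byte order."""
    return int.from_bytes(buf[offset:offset + 4], "little")


print(hex(read_u32_le(little)))
```

Naming the byte order explicitly costs nothing on little-endian hosts such as x86 and typical ARM configurations, and keeps the data format well defined elsewhere.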

Just wanted to leave this here:

It's a Blender benchmark, showing the total amount of energy used to render the "BMW Car Demo" scene. Lower energy -> more efficient.
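For reference, the energy figure in a benchmark like this is average power multiplied by render time. A minimal sketch of that arithmetic follows; the wattages and times are hypothetical values chosen only to show that a slower chip can still use less total energy, and are not taken from the chart.

```python
def energy_wh(avg_power_w, seconds):
    """Energy in watt-hours for a run at a given average power draw."""
    return avg_power_w * seconds / 3600


# Hypothetical numbers, for illustration only.
fast_but_hungry = energy_wh(avg_power_w=200, seconds=90)
slower_but_frugal = energy_wh(avg_power_w=120, seconds=120)

print(f"fast chip:   {fast_but_hungry:.1f} Wh")
print(f"slower chip: {slower_but_frugal:.1f} Wh")
```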

1) The shader compile stuttering on PC needs to be solved. There is no point in a game running 200fps if it drops to 10fps each time a shader needs to be compiled. The proposed solution from Digital Foundry to do the shader compiles during loading and letting people wait 15 minutes is imo, ridiculous. My guess is that we will see a hardware solution to this problem, unless Nvidia and AMD agree on a unified driver architecture which is unlikely.

I've heard that GPU shaders get optimized with use. This suggests there's recompilation or iterative refinement going on. Otherwise, what I'd suggest is do the quickest compilation upon initial loading and then do a backgrounded optimization pass.

I think we probably can't overlook the responsibility of games developers, though. I wonder just how big their shaders are, and if they're using development tools which generate massive amounts of shader code. It could be that they either need to tamp down on the volume of the code they're generating, or somehow improve its structure so it doesn't bog down the optimizer with too much data & control-flow analysis.
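The "quick compile now, optimize in the background" idea suggested above could be sketched roughly as follows. This is only an illustration of the scheduling pattern: `compile_fast`, `compile_optimized`, and `ShaderCache` are hypothetical stand-ins, not any real driver or graphics API.

```python
from concurrent.futures import ThreadPoolExecutor


def compile_fast(source):
    # Stand-in for a quick, unoptimized compile done at load time.
    return ("fast", source)


def compile_optimized(source):
    # Stand-in for a slow, optimizing compile done off the main thread.
    return ("optimized", source)


class ShaderCache:
    def __init__(self, workers=2):
        self._pool = ThreadPoolExecutor(max_workers=workers)
        self._binaries = {}
        self._pending = {}

    def load(self, name, source):
        # Make something usable available immediately...
        self._binaries[name] = compile_fast(source)
        # ...and queue the expensive pass in the background.
        self._pending[name] = self._pool.submit(compile_optimized, source)

    def get(self, name):
        # Swap in the optimized variant once it is ready; never block here.
        future = self._pending.get(name)
        if future is not None and future.done():
            self._binaries[name] = future.result()
            del self._pending[name]
        return self._binaries[name]

    def wait_all(self):
        for future in list(self._pending.values()):
            future.result()
        self._pool.shutdown(wait=True)


cache = ShaderCache()
cache.load("water", "shader source text")
print(cache.get("water")[0])  # may be "fast" or "optimized", depending on timing
cache.wait_all()
print(cache.get("water")[0])
```

The design choice is that `get` never waits: the renderer always has a usable variant, and the better one is picked up on a later call.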

Can we get some 4K (UHD) gaming benches for MSFS that showed massive FPS improvement over Intel with this CPU between HD (1080p) and QHD (1440p)?

FWIW, @JarredWaltonGPU recently mentioned that this involves a semi-manual benchmark. Maybe that's why it doesn't feature in more reviews?

I know some in the MSFS sim community who are looking at upgrading their older hardware,

Perhaps they could lobby Microsoft to make it easier to benchmark?

I would like to see some new tests included using AI engines; You could run something like REALESRgen to upscale some anime videos or something; Granted, if you have a 4090 you are using the GPU; but there are CPU options as well;

I think that's going to be almost 100% GPU-limited. Maybe there are some CPU layers or some CPU-intensive preprocessing or postprocessing, but AI is usually 100% GPU-limited.

No, I don't see that as relevant. Don't people know that PCIe is backwards compatible? Just because it supports PCIe5 doesn't mean you are required to run PCIe5 NVMe's etc.

Adding PCIe 5.0 support to a motherboard adds cost, due to the signal integrity requirements. To satisfy those involves more PCB layers, more expensive materials, retimers, etc. I think that's what the point was about.

No, I don't see that as relevant. Don't people know that PCIe is backwards compatible? Just because it supports PCIe5 doesn't mean you are required to run PCIe5 NVMe's etc.

Gen 5 costs more to produce. Backwards compatibility has nothing to do with it. Just like how X570 and B550 boards were more expensive, than X470 and B450, in part due to the gen 4 interface. This isn't a new development.

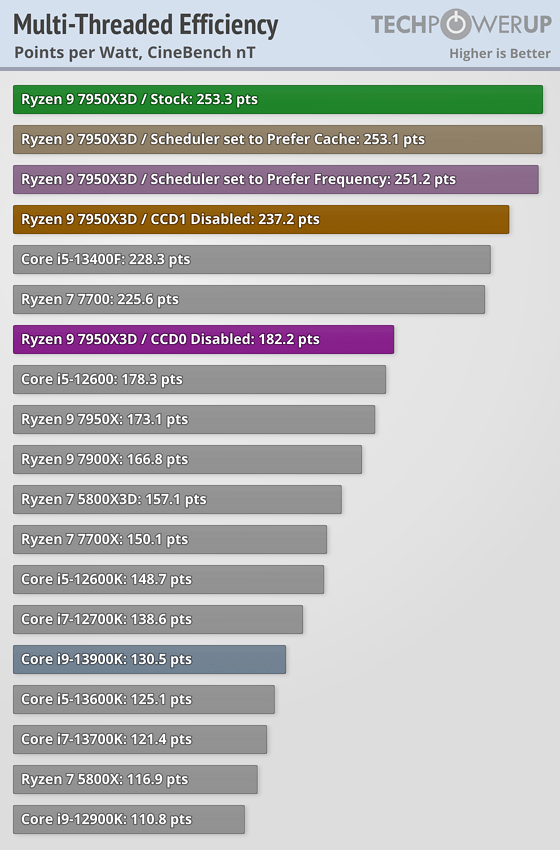

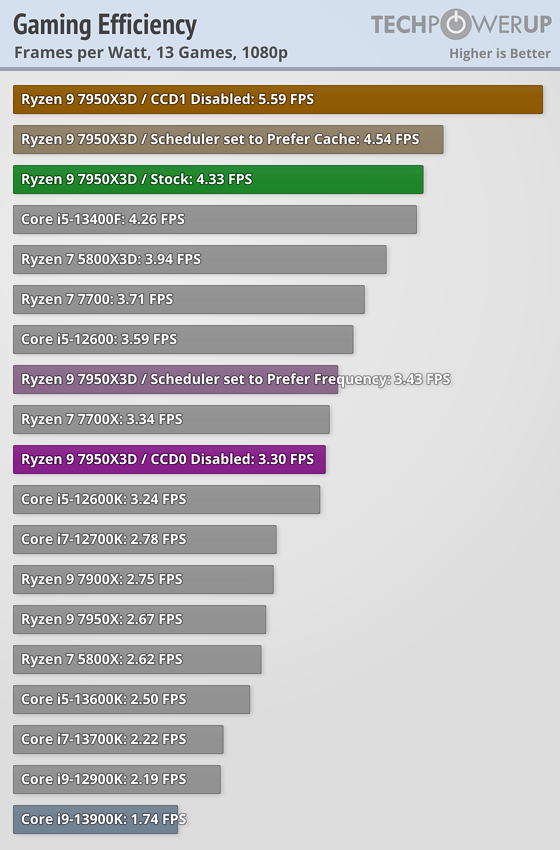

More efficiency data. This time, from TechPowerUp's review where they tried disabling each CCD, but it also includes similar scheduler tweaks. Note that 0 is the 3D cache die, so look for "CCD1 Disabled" (the brown bars) to see their simulated 7800X3D results.

-Fran-

Illustrious

More efficiency data. This time, from TechPowerUp's review where they tried disabling each CCD, but it also includes similar scheduler tweaks. Note that 0 is the 3D cache die, so look for "CCD1 Disabled" (the brown bars) to see their simulated 7800X3D results.

Just to add. In their simulated 7800X3D, they found it used 44W to play games. That is absolutely Laptop territory.

I wonder if AMD will deliver on the laptop front and bury Intel in the sand for a good while.

Regards.

dalauder

Splendid

The cons just weigh too heavy for me to even consider the CPU or the AM5 platform.

I already have DDR4. AMD expects me to just throw this into the trash and buy expensive new DDR5 for a 1% difference in performance. It even bothers me from an e-waste perspective, let alone the financial cost.

The fact that the performance of the X3D CPU is all over the place bothers me too. Yes, it's fast in -some- games, but then you get less overall performance in several important applications. If I just used my PC for gaming, I would have bought a console. I don't like the idea of having to make a trade-off with an X3D CPU.

Another thing, and this is not AMD specific but PC specific: the biggest issue with gaming on PC has been stuttering, because shaders need to be compiled at runtime on PC. This CPU will not solve this. When PC gaming becomes a meme, #stutterstruggle, I don't think many people are going to be willing to invest in a $600+ CPU. Solve this problem; PC gaming is currently in shambles.

I'm not sure what point you're trying to make, considering that you're not upgrading an Intel build anyways. Their sockets are NEVER compatible. You'd be doing a brand new Intel build from scratch and spend $1500, but you're decrying an extra $60 on RAM that will work on any system in the next six years?

If you want your PC for productivity, AMD wins. If you want your PC for gaming, AMD wins. If you want to future-proof so that you can upgrade in two or three years, AMD wins. If you care about the cost of electricity, AMD wins. But $60 extra on RAM is your line in the sand?

Side note. I don't see you wanting to spout how badly Intel loses in performance metrics when paired with DDR4, despite stating it as the defining issue.

dalauder

Splendid

I'll be going with AM5 after the 7800X3D is released in another month. Haven't decided which one to buy, however. The advantage of AM5 is that it has years of CPU & system RAM & BIOS development ahead of it, whereas the AM4 platform I'm currently using is at an end in terms of further development. I'm not in a huge hurry, though. I may wait a year for AM5 BIOS maturity and some new motherboard offerings.

What I don't care for with AM5 is the current crop of motherboards--for instance, my X570 Aorus Master is a much better motherboard than the X670E Aorus Master--which doesn't support nearly as many features as the X570 Master (like a back-panel CMOS clear button (!) and it has no hardware DAC or earphone amp & doesn't offer dual, manually switched BIOSes!) Adding insult to injury, it costs ~$140 more while delivering much less! The X670E supports more NVMe drives, and it's 8 layers instead of 6, but that doesn't make up for the other deficits. To get what I had in my current mboard I'd have to go to a $699 Xtreme! Ridiculous. No way am I reverting to opening up the case to set a Clear CMOS jumper, and I'm not spending ~$700+ on a motherboard! The $360 for the X570 Master was a lot, too, but it was justified by the hardware that appealed to me. So I'll see what happens on the motherboard front.

Buy before inflation price hikes!

simfreak101

Honorable

I think that's going to be almost 100% GPU-limited. Maybe there are some CPU layers or some CPU-intensive preprocessing or postprocessing, but AI is usually 100% GPU-limited.

That's why I said they could use the CPU profile, which does not use the GPU at all. From my experience, when you use the CPU profile, it pretty much maxes out all your cores. Since CPUs will start coming with AI accelerators built in, I think it would be a valid test.

10tacle

Splendid

FWIW, @JarredWaltonGPU recently mentioned that this involves a semi-manual benchmark. Maybe that's why it doesn't feature in more reviews?

Not sure I understand. He's already got MSFS in this CPU review at 1080p and 1440p, and he's been using up to 4K resolution in GPU tests for at least a year now....

Vanderlindemedia

Prominent

A CPU of the above caliber will set you up well for the next 3 to 5 years. The price asked for it is a bargain.

That's why I said they could use the CPU profile, which does not use the GPU at all. From my experience, when you use the CPU profile, it pretty much maxes out all your cores. Since CPUs will start coming with AI accelerators built in, I think it would be a valid test.

Sorry, I missed that.

Well, I've seen AI benchmarks on a couple other sites and what I can tell you is that the 3D cache won't help when the model is small enough to fit in the standard 7950X's L3 cache. It's only for that narrow range of models that are too big for the non-3D version but not the 3D version where you're going to see a difference. And a lot of the models that people use for CPU-based inferencing are so small, they even fit in L2 cache!
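The "narrow range" argument above can be sketched as a simple size check. The cache sizes used here are the per-CCD L3 of a standard Zen 4 CCD (32 MB), the L3 of the 3D V-Cache CCD (96 MB), and 1 MB of L2 per core. Treating a model as a single block that either fits or does not is a deliberate simplification (it ignores activations, other data competing for cache, and spreading work across CCDs), and `where_it_fits` is a hypothetical helper.

```python
MB = 1024 * 1024

L2_PER_CORE = 1 * MB        # Zen 4: 1 MB of L2 per core
L3_STANDARD_CCD = 32 * MB   # L3 on a standard Zen 4 CCD
L3_VCACHE_CCD = 96 * MB     # L3 on the 3D V-Cache CCD


def where_it_fits(model_bytes):
    """Rough classification of where a model's weights could stay resident."""
    if model_bytes <= L2_PER_CORE:
        return "fits in L2: 3D cache irrelevant"
    if model_bytes <= L3_STANDARD_CCD:
        return "fits in standard L3: little or no 3D cache benefit"
    if model_bytes <= L3_VCACHE_CCD:
        return "fits only in 3D cache: the range where X3D can help"
    return "spills to DRAM on both: little or no 3D cache benefit"


# Hypothetical model sizes, for illustration only.
for size_mb in (0.5, 20, 60, 400):
    print(f"{size_mb:>6} MB -> {where_it_fits(int(size_mb * MB))}")
```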

a.henriquedsj

Commendable

saunupe1911

Distinguished

You guys could have at least attempted a little 4K gaming. We at least want to see what it can do at that resolution. Very few are upgrading from a 3000 series to game at 2K.

There are other benchmark sites that have run 4K tests, and with many other games as well. They are all fairly consistent from what I have seen. Except for something like Cyberpunk, where all CPUs get about the same number, the gains at 4K pretty much follow the gains you see at 1080p. The order of the CPUs doesn't change much.

The problem is that the gains are very small, and in some cases they are so small that you can't really be sure one CPU beats the other, because of the margin of error in the testing. Other than some games like Tomb Raider, where the 3D cache makes much more of a difference, many games differ by a single-digit number of frames at 4K.

In many cases a much cheaper CPU can come within a few frames of whichever CPU is on top when you are looking at 4K results. Although there are people who will spend an extra $200 or $300 to get 1 extra fps, I am not one of them.

This also makes it very likely that the 7800X3D is going to be the best value choice for gaming at 4K........but it still may not be enough for someone who has a system that is only 1 or so generations old, and it might make even less difference if they also do not have a top-end GPU.
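The margin-of-error point above can be illustrated with a rough check on repeated benchmark runs: treat a gap between two CPUs as meaningful only if it is larger than about twice the combined standard error of the two averages. This is a heuristic sketch and not a formal significance test; the fps values are hypothetical and `distinguishable` is an illustrative helper.

```python
from math import sqrt
from statistics import mean, stdev


def standard_error(runs):
    return stdev(runs) / sqrt(len(runs))


def distinguishable(runs_a, runs_b, factor=2.0):
    """True if the gap in mean fps exceeds `factor` combined standard errors."""
    gap = abs(mean(runs_a) - mean(runs_b))
    noise = sqrt(standard_error(runs_a) ** 2 + standard_error(runs_b) ** 2)
    return gap > factor * noise


# Hypothetical 4K results (average fps over three runs each).
cpu_a = [142.1, 143.0, 141.6]
cpu_b = [141.8, 142.7, 142.3]
cpu_c = [150.2, 150.9, 150.5]

print("A vs B:", distinguishable(cpu_a, cpu_b))
print("A vs C:", distinguishable(cpu_a, cpu_c))
```

With only a handful of runs per CPU, a gap of a frame or less is well inside the run-to-run spread in this example, which is the situation described for most 4K results.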

FWIW, @JarredWaltonGPU recently mentioned that this involves a semi-manual benchmark. Maybe that's why it doesn't feature in more reviews?

Paul and I use different test scenes for MSFS, but these days I use the landing challenge for the Iceland airport. The plane pulls to the right, so I start by turning to the left, drop the engine power to 20%, and then just let the plane "coast" for about 30 seconds before I stop and restart the test sequence. I also have the online (Bing maps) integration on, but with live weather and traffic off, as that seems to improve the fidelity and also makes things a bit more demanding. But MSFS does take longer to load than a lot of other games, and there's no specific built-in benchmark. Also, installing the game initially takes an eternity, relatively speaking. Even with gigabit internet, it only averages maybe 200 Mbps download speeds and takes a few hours to do a clean install. That's because MS keeps most of the data separate from the executable, so you get a ~2GB game download, and then 100+ GB of updates within the game.

Tom's Hardware is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.