The myth lives on, doesn't it?

Restricted to 125W it has similar efficiency to the 7950X3D and far better than the 7950X. Please, the data is there.

It's also much slower in that configuration.

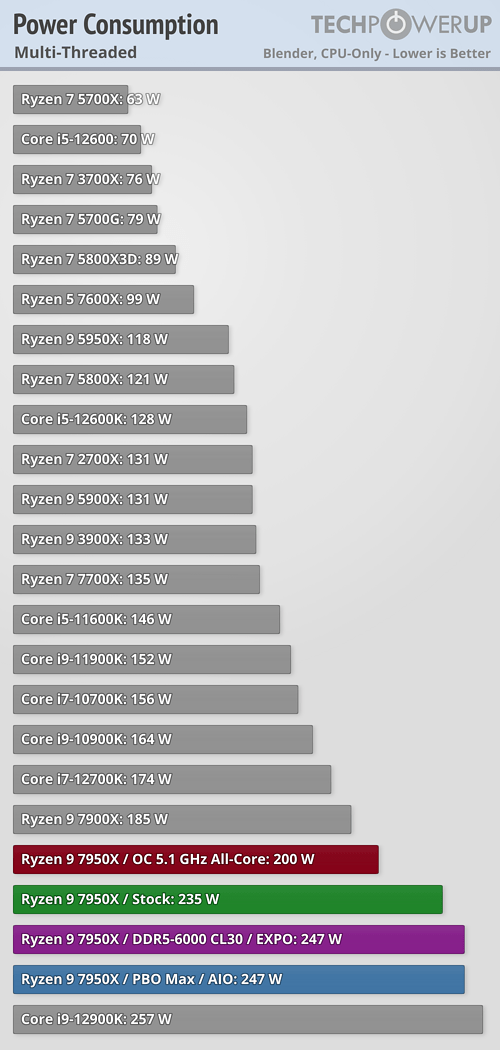

A stock 7950X3D consumes 140W in MT (source: https://tpucdn.com/review/amd-ryzen-9-7950x3d/images/power-multithread.png). If both chips had the same points per watt and the 14900K is at 125W, the 7950X3D would finish the task faster by ~140/125 = 1.12, i.e. 12%.

To be clear, at approximately ISO efficiency:

7950X3D @ 140W * 253.3 points/W = 35462

14900K @ 125W * 250.9 points/W = 31363

35462 / 31363 ≈ 1.1307, i.e. 13.1% faster for the 7950X3D.
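The ISO-efficiency numbers above all come from score = power × points per watt. Here is a minimal Python sketch using only the figures quoted in this post (140W and 253.3 points/W for the stock 7950X3D, 125W and 250.9 points/W for the power-limited 14900K); the constant and function names are just illustrative:

```python
# Figures quoted in this post; "score" is the benchmark's total points.
X3D_WATTS, X3D_PTS_PER_W = 140, 253.3   # stock 7950X3D, MT load
I9_WATTS, I9_PTS_PER_W = 125, 250.9     # 14900K limited to 125W


def score(watts: float, pts_per_watt: float) -> float:
    """Total score implied by a power draw and an efficiency figure."""
    return watts * pts_per_watt


x3d = score(X3D_WATTS, X3D_PTS_PER_W)   # ~35462
i9 = score(I9_WATTS, I9_PTS_PER_W)      # ~31363

# With exactly equal efficiency, the lead would simply be the power ratio.
naive_lead = X3D_WATTS / I9_WATTS - 1
# With the measured (slightly different) efficiencies:
actual_lead = x3d / i9 - 1

print(f"7950X3D: {x3d:.0f} points, 14900K: {i9:.0f} points")
print(f"equal-efficiency lead: {naive_lead:.1%}, measured-efficiency lead: {actual_lead:.1%}")
```

The small gap between the 12% and ~13.1% leads is just the 253.3 vs 250.9 points/W difference.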

With a bit of clever math (and MyCurveFit, source: https://mycurvefit.com/, reference of that particular curve: https://imgur.com/6HcwGWe) we can interpolate the 14900K's performance at 140W from the 253W, 200W, 125W, 95W, 65W and 35W data points in TPU's results, which lands around 31882 points.
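The 31882 figure comes from a curve fitted in MyCurveFit to TPU's six data points, which aren't reproduced here. As a rough cross-check, the sketch below swaps in a simpler technique: plain linear interpolation between the only two 14900K points stated in this post (125W ≈ 31363 points, and 253W ≈ 35000 points from the ISO-performance comparison further down, itself an approximate figure). Because a power/performance curve typically flattens as power rises, a straight line between those two points should land somewhat below the fitted value, so treat this as a sanity check under those assumptions, not as the author's model:

```python
def lerp_score(watts, p0, p1):
    """Linearly interpolate a score between two (watts, score) points."""
    (w0, s0), (w1, s1) = p0, p1
    if not (min(w0, w1) <= watts <= max(w0, w1)):
        # Only interpolation is meaningful here, not extrapolation.
        raise ValueError("watts lies outside the two known points")
    t = (watts - w0) / (w1 - w0)
    return s0 + t * (s1 - s0)


# 14900K points as stated in this post; the 253W score is the approximate "~35000".
low = (125, 31363)
high = (253, 35000)

estimate = lerp_score(140, low, high)
print(f"linear estimate at 140W: {estimate:.0f} points (curve fit in the post: 31882)")
```

The straight-line estimate comes out roughly 90 points under the curve fit, consistent with a curve that bends downward.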

So at ISO power:

7950X3D @ 140W = 35462

14900K @ 140W = 31882

35462 / 31882 ≈ 1.112, i.e. 11.2% faster for the 7950X3D.

With that data we can also, for fun, derive the 14900K's points per watt at 140W (31882 / 140 ≈ 227.7), giving:

7950X3D = 253.3 Points per Watt

14900K = 227.7 Points per Watt
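The ISO-power lead and the points-per-watt figures are simple ratios of the two 140W scores. A short sketch with the post's numbers (note the 14900K's 31882 is the curve-fit estimate, not a direct measurement):

```python
POWER_W = 140
X3D_SCORE = 35462   # stock 7950X3D at ~140W
I9_SCORE = 31882    # 14900K at 140W, curve-fit estimate from the post

lead = X3D_SCORE / I9_SCORE - 1   # relative score advantage at equal power
x3d_ppw = X3D_SCORE / POWER_W     # points per watt, 7950X3D
i9_ppw = I9_SCORE / POWER_W       # points per watt, 14900K

print(f"7950X3D lead at {POWER_W}W: {lead:.1%}")
print(f"points per watt: 7950X3D {x3d_ppw:.1f}, 14900K {i9_ppw:.1f}")
```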

And at ISO performance (around 35000 points for both processors, give or take a few tenths of a percent) we arrive at:

7950X3D = 140W

14900K = 253W

253 / 140 ≈ 1.807, i.e. 80.7% higher power consumption for the 14900K.
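"X% more" and "Y% less" describe the same power gap measured from opposite baselines, which is why the two percentages differ. A quick sketch with the ISO-performance wattages from the post:

```python
X3D_WATTS = 140   # 7950X3D at ~35000 points
I9_WATTS = 253    # 14900K at ~35000 points

more = I9_WATTS / X3D_WATTS - 1   # extra power the 14900K draws, relative to the 7950X3D
less = 1 - X3D_WATTS / I9_WATTS   # power the 7950X3D saves, relative to the 14900K

print(f"14900K draws {more:.1%} more; 7950X3D draws {less:.1%} less")
```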

In conclusion:

- At ISO efficiency the 7950X3D is faster by ~13%.

- At ISO power the 7950X3D scores ~11% higher.

- At ISO performance the 7950X3D consumes ~44.7% less power (or the 14900K consumes 80.7% more, same thing).

You can dance around reality all you want and try to distort the facts to suit your argument; it doesn't matter.

Whether or not you dispute these proven, indisputable facts, confirmed over and over again by the whole professional reviewing community and beyond, is irrelevant.

BTW, are you related to the guy running UserBenchmark? I'm getting similar vibes here. I just hope this demonstration gets you back in touch with reality, unlike that guy, who seems pretty hopeless.

I rest my case. It's ironclad, I'm done here, and there's nothing left to say.

Note: Sorry abufrejoval, I misquoted, my bad.

225w max