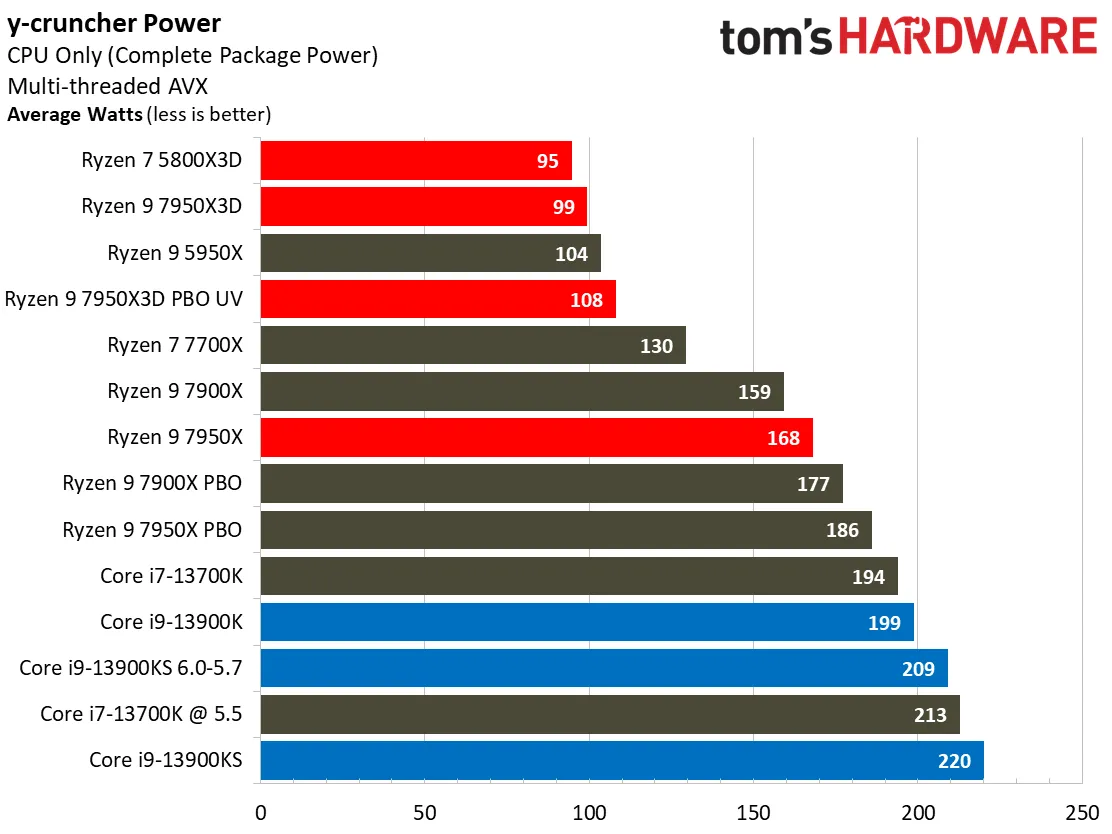

The electric bill thing might need some context, because I hear this a lot but nobody seems to put any real numbers behind it.

So let's compare the i5-13600K to the Ryzen 7800X3D in terms of power consumption. Going by TechPowerUp's numbers, the average power consumption across 12 games was 49W for the 7800X3D and 89W for the i5-13600K. Let's just look at the CPUs in isolation for now, because the motherboard is a factor that can't be the same between them. So using just these numbers, the Intel chip draws about 1.816x the power (89 / 49).

Let's say in a given week, you play games for about 30 hours. And then just say there's 4 weeks in a month, so that's 120 hours of gaming a month.

- If we went by the most expensive electricity rate in the US, that would be Hawaii at $0.49 per kilowatt-hour. So it would cost you $2.88/mo to run the AMD CPU, $5.23/mo to run the Intel CPU. That's a $2.35/mo difference, so it would take about 55 months, or 4.58 years, for the power savings to cover the price difference between the chips.

- If we went by the average electricity rate in the US, that would be $0.1372 per kilowatt-hour. So it would cost you $0.81/mo to run the AMD CPU, $1.47/mo to run the Intel CPU. It would take you about 197 months, or 16.4 years to break even.

- If you happen to live in Nebraska with the cheapest rate of about $0.0935 per kilowatt-hour, well, I'm pretty sure you'd have long forgotten about your PC before it had a chance to break even.
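For anyone who wants to redo the math above with their own numbers, here's a rough Python sketch. The wattages, rates, and 30 hours x 4 weeks usage are the ones from this post. The `PRICE_GAP` of $130 is an assumption on my part: the post never states the price difference, and that's just roughly what the break-even figures work out to. The function names are made up for this example.

```python
def monthly_cost(watts, rate_per_kwh, hours_per_week=30, weeks_per_month=4):
    """Monthly electricity cost in dollars for a CPU drawing `watts` while gaming."""
    kwh_per_month = watts / 1000 * hours_per_week * weeks_per_month
    return kwh_per_month * rate_per_kwh


def break_even_months(price_gap, low_watts, high_watts, rate_per_kwh):
    """Months until the lower-power chip's savings cover `price_gap` dollars."""
    savings = monthly_cost(high_watts, rate_per_kwh) - monthly_cost(low_watts, rate_per_kwh)
    return price_gap / savings


AMD_WATTS = 49     # 7800X3D, TechPowerUp 12-game average
INTEL_WATTS = 89   # i5-13600K, TechPowerUp 12-game average
PRICE_GAP = 130.0  # ASSUMPTION: not stated in the post, plug in real prices

RATES = {"Hawaii": 0.49, "US average": 0.1372, "Nebraska": 0.0935}  # $/kWh

if __name__ == "__main__":
    for place, rate in RATES.items():
        amd = monthly_cost(AMD_WATTS, rate)
        intel = monthly_cost(INTEL_WATTS, rate)
        months = break_even_months(PRICE_GAP, AMD_WATTS, INTEL_WATTS, rate)
        print(f"{place}: AMD ${amd:.2f}/mo, Intel ${intel:.2f}/mo, "
              f"break even in {months:.0f} months ({months / 12:.1f} years)")
```

Swap in your own hours, rate, and the actual prices you'd pay, and the break-even time falls out of the same two lines of arithmetic.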

The only thing that this may negatively impact is the temperature of your room, but I doubt 89W is really going to make that much of a difference vs 49W. And if you have to run the AC longer... well if you're in Hawaii, you're probably running the thing most of the day anyway.

Also I think the funny thing here is this is the same argument that people made for buying Intel over the AMD FX chips.

EDIT: Before anyone wants to go "well ackshually" on my numbers, this is a simplified example and I can't be bothered to account for how and when you actually use your computer, or for things like electric rates that change depending on the time of day.

If you want to crunch your own numbers, go right ahead.