Hi,

Is AMD taking a page from NVIDIA's book, in direct response to the DLSS upscaling tech used in games? According to a recent leak, AMD's next-generation RDNA 3 'GFX11' GPUs could feature hardware-accelerated FSR 3.0, as suggested by new instructions recently added to LLVM.

As you may already know, one of the key advantages of FSR 1.0 and FSR 2.0 (FidelityFX™ Super Resolution) over NVIDIA's DLSS has been that they do not rely on any hardware assistance such as dedicated Machine Learning (ML) blocks, but that may soon change.

AMD has done a great job with FSR, offering visual quality on par with NVIDIA's solution and making it open source. In the coming generation, though, it looks like AMD might go one step further and use dedicated machine learning blocks to boost FSR's performance and visual quality. This remains to be seen, and AMD has not confirmed it yet.

Since AMD has only just made its FSR 2 tech open source, the chances of it moving to dedicated machine learning hardware are slim, but anything can change at the last moment. We still don't have a proper block diagram of the RDNA 3 GPU architecture.

This would not be the first AMD architecture to support matrix operations, though, because the CDNA architecture already does. So I'm not sure whether consumer gaming GPUs will feature them as well.

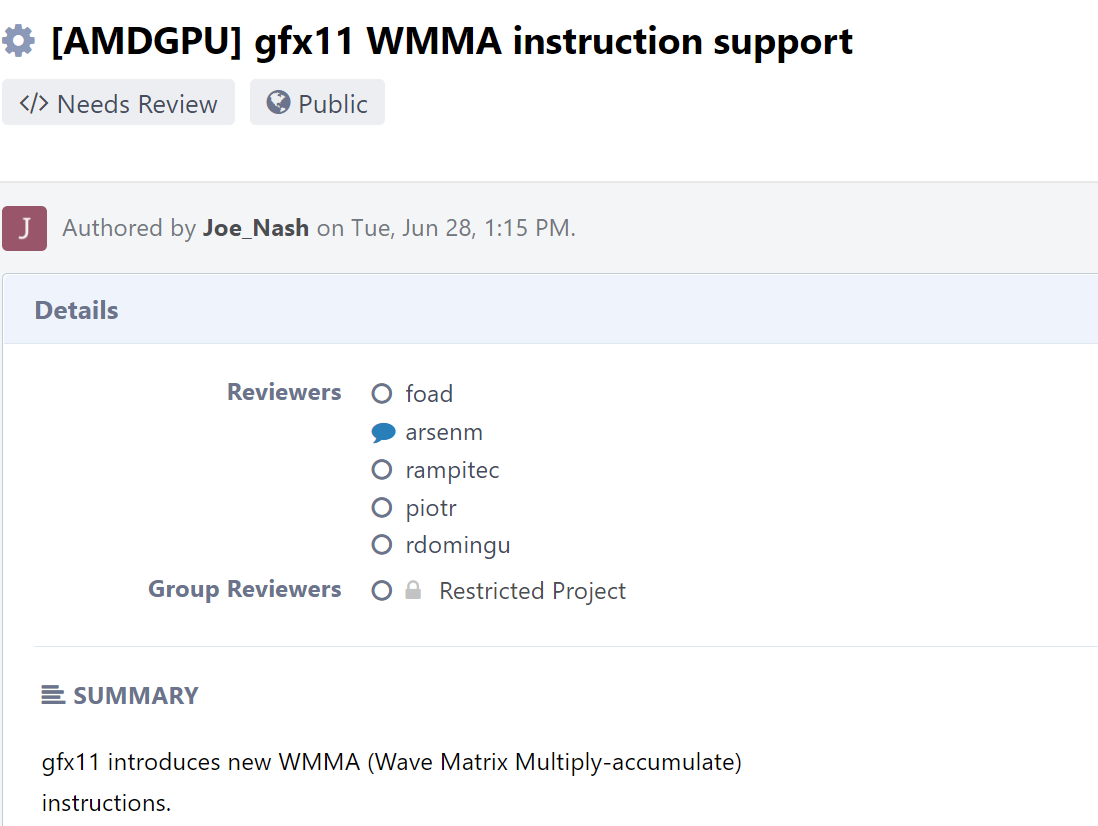

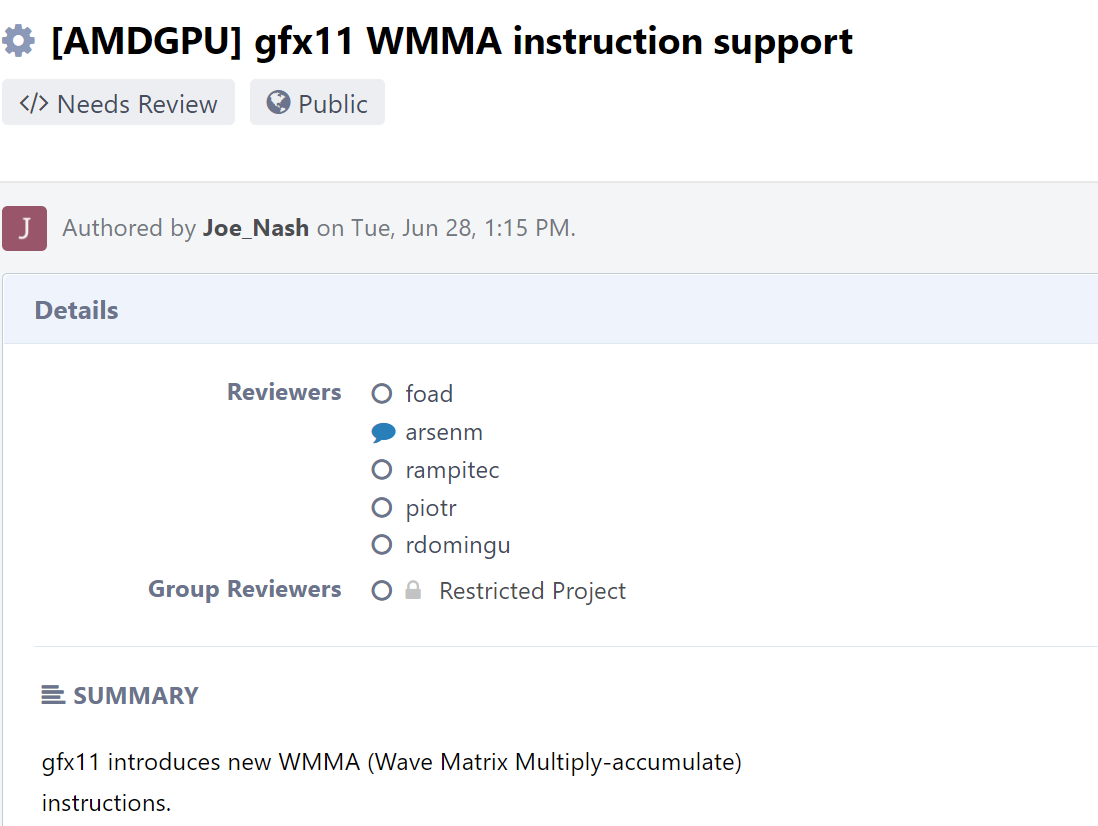

As spotted by @0x22h, a recent commit to the LLVM repository introduces WMMA (Wave Matrix Multiply-Accumulate) instructions for GFX11 hardware. GFX11 is AMD's internal codename for the RDNA 3 GPU family, which will be featured in the next-generation Radeon RX 7000 and Radeon Pro graphics cards. The code posted for AMDGPU suggests that WMMA only supports 16x16x16 matrices and that it can output the FP16 and BF16 data formats.

Similar to how NVIDIA uses matrix multiplications for deep learning operations through its latest Tensor Core architecture, AMD's WMMA instructions will be handled at the hardware level to speed up Machine Learning or DNN operations. There aren't a lot of details yet, but this recent LLVM update could hint at a major graphics pipeline overhaul in the RDNA 3 GPUs.

In its first year, FSR has already seen roughly double the adoption rate of its competitor, with over 113 games getting FidelityFX Super Resolution support in just 1 year, compared to 180+ DLSS titles in 3.4 years. Making the technology open source for both PCs and consoles (Microsoft Xbox) will open up room for further adoption. If AMD were to rely on hardware acceleration for FSR going forward, that would also suggest NVIDIA was right to put tensor cores on gaming hardware as early as its Turing generation of GPUs.
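As a quick sanity check on the "double the adoption rate" figure, the per-year rates can be worked out from the numbers quoted above. This is only a rough sketch: it treats "180+" as exactly 180 and "over 113" as exactly 113, and the variable names are my own.

```python
# Rough per-year adoption rates from the figures quoted in the post.
# Assumption: "180+" is treated as exactly 180 and "over 113" as exactly 113.
fsr_titles, fsr_years = 113, 1.0
dlss_titles, dlss_years = 180, 3.4

fsr_rate = fsr_titles / fsr_years      # titles per year
dlss_rate = dlss_titles / dlss_years   # titles per year
ratio = fsr_rate / dlss_rate

print(f"FSR: {fsr_rate:.0f}/yr, DLSS: {dlss_rate:.0f}/yr, ratio: {ratio:.2f}x")
# prints "FSR: 113/yr, DLSS: 53/yr, ratio: 2.13x"
```

So on these numbers the ratio comes out slightly above 2x, which matches the "double" claim.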

That said, NVIDIA is expected to bring an even more optimized "Tensor Core" architecture to its next-gen GeForce RTX 40 series "Ada" graphics cards for DLSS 3.0, and it will be interesting to see how that compares with FSR 3.0.

    // WMMA (Wave Matrix Multiply-Accumulate) intrinsics
    //
    // These operations perform a matrix multiplication and accumulation of
    // the form: D = A * B + C .
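To make the D = A * B + C form from the LLVM comment concrete, here is a minimal CPU-side sketch in plain Python for a single 16x16x16 tile, the only shape the leak mentions. It is not AMD's implementation and says nothing about how the hardware splits the work across a wave; `wmma_reference`, `identity` and `filled` are hypothetical helper names, and ordinary Python floats stand in for the FP16/BF16 formats.

```python
# Reference sketch of one matrix multiply-accumulate tile: D = A * B + C.
# Assumption: this only illustrates the arithmetic, not the GFX11 hardware.

TILE = 16  # M = N = K = 16, the shape mentioned in the leak


def wmma_reference(a, b, c):
    """Return D = A * B + C for TILE x TILE matrices stored as lists of rows."""
    d = [[0.0] * TILE for _ in range(TILE)]
    for i in range(TILE):
        for j in range(TILE):
            acc = c[i][j]  # start from the accumulator input C
            for k in range(TILE):
                acc += a[i][k] * b[k][j]
            d[i][j] = acc
    return d


def identity():
    """TILE x TILE identity matrix."""
    return [[1.0 if i == j else 0.0 for j in range(TILE)] for i in range(TILE)]


def filled(value):
    """TILE x TILE matrix with every element set to value."""
    return [[value] * TILE for _ in range(TILE)]


if __name__ == "__main__":
    # Identity * (all 2.0) + (all 0.5) gives 2.5 everywhere.
    d = wmma_reference(identity(), filled(2.0), filled(0.5))
    print(d[0][0])  # prints 2.5
```

The point of a dedicated instruction is that the three nested loops above would be handled as one operation instead of many separate multiply and add steps.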

News Source:

https://www.coelacanth-dream.com/posts/2022/06/29/gfx11-wmma-inst/

View: https://twitter.com/greymon55/status/1541950168324403200

View: https://twitter.com/Kepler_L2/status/1541905092395388933
