When in doubt, push more power!

> When in doubt, push more power!

Yeah, why am I getting Tim Taylor from Home Improvement vibes from this article? LOL.

> There's still hope for AMD: if Nvidia, in its hubris, goes back to Samsung to save some dollars, maybe they'll manage to compete again next time. Or the time after that. Never lose hope, Lisa!

As long as Sasa Marinkovic is the director of gaming marketing, there is no hope. The man's a complete tool.

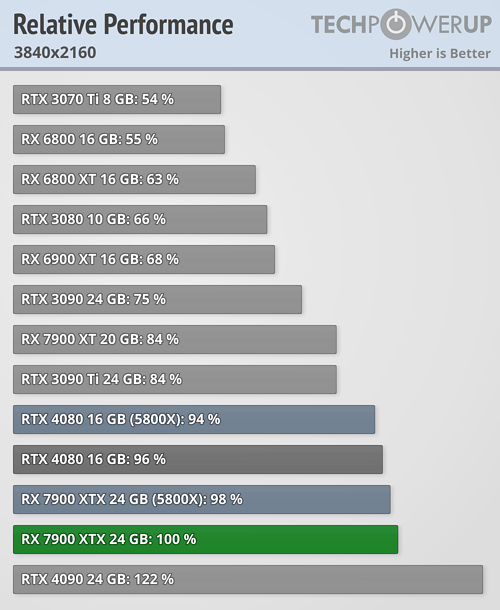

> Yeah, but the article says that the 7900 XTX scores 10% higher than some 4090 models, so if we decrease clocks by 10% (from 3.3 GHz to 3.0 GHz), the voltage needed would decrease significantly, because the GPU core moves down the voltage-frequency curve toward its efficient region. I would guesstimate that the 7900 XTX would need 500-550 watts in this case, which is still worse than the 4090 but not outrageous like 700 watts.

You make a good point. Power use scales exponentially, not linearly. That's why running Zen4 CPUs in Eco Mode significantly reduces power use, but not performance.
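As a rough sanity check on the guesstimate above, here is a minimal Python sketch. It assumes the common first-order model where dynamic power goes as V² × f (so polynomial rather than strictly exponential), and that voltage scales as a power of frequency near the top of the curve. The function name `estimate_power` and the `voltage_exponent` parameter are hypothetical, the 700 W and 3.3 GHz figures come from the posts above, and none of this is a measured RDNA3 voltage-frequency curve. Leakage power is ignored.

```python
def estimate_power(base_watts, base_ghz, target_ghz, voltage_exponent=1.0):
    """Estimate power draw after a clock change.

    Assumes dynamic power P ~ C * V^2 * f and that voltage scales as
    V ~ f**voltage_exponent near the top of the curve. Both are
    simplifying assumptions, not measured data. Static (leakage)
    power is ignored.
    """
    if base_ghz <= 0 or target_ghz <= 0:
        raise ValueError("clock speeds must be positive")
    ratio = target_ghz / base_ghz
    # P ~ V^2 * f with V ~ f^k gives P ~ f^(1 + 2k).
    return base_watts * ratio ** (1.0 + 2.0 * voltage_exponent)


if __name__ == "__main__":
    # k = 0: voltage unchanged (purely linear scaling with clock).
    # k = 1: voltage drops in proportion to clock (cubic scaling).
    for k in (0.0, 0.5, 1.0):
        watts = estimate_power(700.0, 3.3, 3.0, voltage_exponent=k)
        print(f"voltage_exponent={k}: ~{watts:.0f} W")
```

Under the linear-voltage assumption (`voltage_exponent=1.0`), dropping from 3.3 GHz to 3.0 GHz takes 700 W down to roughly 526 W, which lands inside the 500-550 W guess. If the voltage could not drop at all, the same clock cut would only get to about 636 W.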

> I know that MLID guy gets a fair bit of hate, but what about Jim at AdoredTV?

I've had the good fortune of getting to know Jim personally. He is, in my opinion, the greatest investigative tech journalist since Charlie Demerjian. Jim knows his stuff and often uses historical trends to demonstrate how and why he makes his predictions. Also, when he's wrong (which is almost never), he's the first one to admit it. That man earned my respect years ago and that respect has never been shaken by anything that he has done. If anything, it has only deepened.

View: https://www.youtube.com/watch?v=RGulh89U4ow

> They could've done a 4090 competitor, but chose not to? 🤔

At 700W, now we know why. 😕

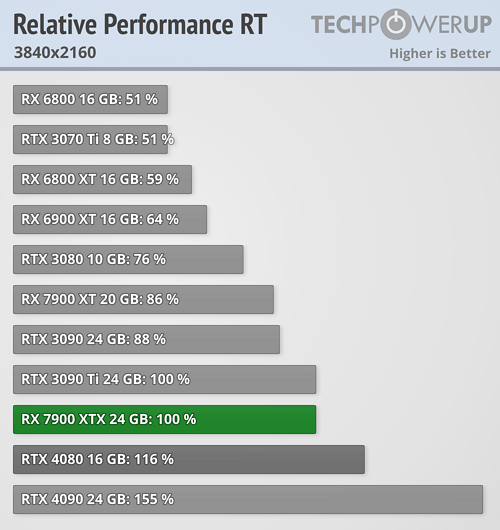

> Yeah, it's more like showcasing it's doable, not a real retail card.
>
> I guess it could work with some garden-hose-sized watercooling setup.
>
> But that's likely the reason why AMD did not go for 4090 competitors.
>
> At full unlocked die, it would likely eat ~550W, and still get slapped in RT.

I don't think that it would get slapped in RT. I think that it would lose in RT to the RTX 4090, but that doesn't mean that it would get "slapped". People seem to forget that the Radeon RX 7900 XTX has about the same RT performance as the RTX 3090 Ti, not exactly a slouch when it comes to RT performance.

> I don't like where GPU technology is heading: every generation is becoming more and more power hungry. They need to focus their research on low power. Steve Jobs once opened Intel's eyes when he made the first MacBook Air and asked Intel to produce a special CPU for him, and that's the reason Apple today left Intel and made their own CPU. I predict that in time Apple will start making their own dedicated GPU for their high-end workstations, and they will crush Nvidia and AMD. I give it 10 years from now.

Sure, maybe, but you'll have to buy into that whole ecosystem, shift all your software that isn't compatible, shift any procedures that worked in Windows or Linux, and never be able to upgrade or truly own the hardware. They could totally beat both of them in the future, but it will come with a ton of strings, likely won't be faster in everything because Apple likes to arbitrarily cut support for things, and many companies likely won't think those changes are worth it. Unless Apple decides to do an about-face on many of their policies and stances, neither Nvidia nor AMD is going anywhere, and I don't know why you would ever want to live in an Apple-only world. It would look very much like their 1984 commercial, but Apple would be the overlord.

> Sure, maybe, but you'll have to buy into that whole ecosystem, shift all your software that isn't compatible, shift any procedures that worked in Windows or Linux, and never be able to upgrade or truly own the hardware. They could totally beat both of them in the future, but it will come with a ton of strings, likely won't be faster in everything because Apple likes to arbitrarily cut support for things, and many companies likely won't think those changes are worth it. Unless Apple decides to do an about-face on many of their policies and stances, neither Nvidia nor AMD is going anywhere, and I don't know why you would ever want to live in an Apple-only world. It would look very much like their 1984 commercial, but Apple would be the overlord.

In my opinion, the true flex play for Apple would be to buy Nvidia and use them to design their idea of a GPU.

> Neat. How long can it run at that power level? I mean, I can run my RTX 4090 at RTX 4090 performance levels all day long.

Well, they said it was a custom liquid-cooling loop, not LN2. In theory, it could keep going, but I doubt its VRM can handle it long term. Still, I bet they could make one robust enough to make a 7950 XTX. I assume AMD didn't, though, in the first place, because of reliability.

> I don't like where GPU technology is heading: every generation is becoming more and more power hungry. They need to focus their research on low power. Steve Jobs once opened Intel's eyes when he made the first MacBook Air and asked Intel to produce a special CPU for him, and that's the reason Apple today left Intel and made their own CPU. I predict that in time Apple will start making their own dedicated GPU for their high-end workstations, and they will crush Nvidia and AMD. I give it 10 years from now.

While I don't agree with the Apple statements...

> There's still hope for AMD: if Nvidia, in its hubris, goes back to Samsung to save some dollars, maybe they'll manage to compete again next time. Or the time after that. Never lose hope, Lisa!

"Still hope"? Nobody is selling a graphics card I'd consider purchasing anyway, so it's all a pretty moot point. It's like if all GM sold was Corvettes and Hummers.

> Yup, been old for 20 years now and lost its funny a long time ago.
>
> now comes across as try hard

Humor is subjective. I personally laugh every time I see it.

> Well, "Humor is subjective" and depends on the crowd too. There's plenty of stuff that would be funny with some groups of friends, but not with others, or not with my parents. If something is clever enough, it can usually be funny while still being very offensive. But if it's just lazy and offensive, then that's probably less about humor and more about exclusivity.

Absolutely it's subjective, and we should remember to remain tolerant of those who find things funny that we do not. For instance, farts are still amusing at 53.

That said, I now find myself curious how this thing does with Crysis. I kind of wish it were still used as a benchmark for DX9 Raster performance.

Sidenote: These "Reaction Score" and "Points" on the profile really bug me. I'd probably have 1000+ Reaction and 60,000 points if they did them and best answers the same in 2010.

> Yeah, but the article says that the 7900 XTX scores 10% higher than some 4090 models, so if we decrease clocks by 10% (from 3.3 GHz to 3.0 GHz), the voltage needed would decrease significantly, because the GPU core moves down the voltage-frequency curve toward its efficient region. I would guesstimate that the 7900 XTX would need 500-550 watts in this case, which is still worse than the 4090 but not outrageous like 700 watts.

And Nvidia could reply with something like the rumoured 4090 Ti, regaining the performance crown at the same or a similar power envelope. Honestly, I don't see the point. RDNA3 just isn't as efficient as Ada Lovelace. AMD accepted that when they released their flagship, but some fans still cannot.

Yup, been old for 20 years now and lost its funny a long time ago.

now comes across as try hard

> Crysis 1 came out in fall 2007. The original Far Cry came out in the first half of 2004 and could actually run fairly well on newer GPUs of the time.

And Nvidia brought forth the first $700-class video card that began the climb toward $1,800 GPUs, and I was fortunate enough to be able to grab one of them, the EVGA 8800GTX ACS3 768MB GPU! I was able to proudly play Crysis and smirk when I saw that ever-famous "but can it play Crysis" comment, lol! Later I grabbed 2 more and set up a 3-way SLI and played until the sweat running down my right calf, where the PC sat, distracted me. Those were the days...

> It will never get old! lmao

The Nintendo Switch can run Crysis (and actually run it, not through some cloud service). So unless we're talking about a Raspberry Pi 3 here, it's not really an amusing question anymore.

> The Nintendo Switch can run Crysis (and actually run it, not through some cloud service). So unless we're talking about a Raspberry Pi 3 here, it's not really an amusing question anymore.

They did remaster Crysis recently and made it nearly impossible to run at 60 fps again. Though it is not the original '07 version of the game, it's still "Crysis."

> The Nintendo Switch can run Crysis (and actually run it, not through some cloud service). So unless we're talking about a Raspberry Pi 3 here, it's not really an amusing question anymore.

To you, apparently. Humor is subjective. Not everyone finds the same thing funny.