This looks doable. http://www.nordichardware.com/news,7946.html Currently, the 4870 is 256mm^2, so it's possible, bringing the die size to 330mm^2? I also remember them saying somewhere that with the 4xxx series they wouldn't be ramping the shaders, or running a separate shader clock from the core clock, so maybe that will change also.
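A back-of-the-envelope check of the 330mm^2 guess, as a sketch under two loud assumptions: an ideal optical shrink (both die edges scale with the node ratio) and a die whose whole area grows in proportion to shader count. Neither holds exactly, since I/O pads, memory interfaces and analog blocks don't shrink or scale with shaders. The 256mm^2 figure is from the post; the RV770's 800-shader count is added here.

```python
def ideal_shrink_area(area_mm2: float, old_node_nm: float, new_node_nm: float) -> float:
    """Die area after an ideal optical shrink (both edges scale by new/old)."""
    return area_mm2 * (new_node_nm / old_node_nm) ** 2


def area_for_shaders(area_mm2: float, old_shaders: int, new_shaders: int) -> float:
    """Crude assumption: the entire die scales linearly with shader count."""
    return area_mm2 * new_shaders / old_shaders


RV770_AREA_MM2 = 256.0  # HD 4870 die on 55nm, as quoted in the post
RV770_SHADERS = 800     # stream processors on RV770
TARGET_SHADERS = 2000   # rumoured figure from the thread title

for node_nm in (45.0, 40.0):
    shrunk = ideal_shrink_area(RV770_AREA_MM2, 55.0, node_nm)
    estimate = area_for_shaders(shrunk, RV770_SHADERS, TARGET_SHADERS)
    print(f"{node_nm:.0f}nm: RV770 ~{shrunk:.0f} mm^2, 2000 shaders ~{estimate:.0f} mm^2")
```

Under these assumptions the 40nm case lands in the same ballpark as 330mm^2, while the 45nm case comes out well above it.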

ATI 40/45nm Q1 09 2000 shaders?

- Thread starter JAYDEEJOHN

Yeah, the G280 and the 4870 run hot; this may run even hotter, though it may not. I'm thinking thermals in the 3xxx-series range.

More transistors per mm^2 is going to catch up with them at some point, just like with the CPUs. Maybe after this they'll have to go High-K.

ovaltineplease

Distinguished

Well, if the RV800 series is the tock to the R700's tick, you could almost expect a shrunk die with higher clocks and lower temperature/power requirements (overclocking! d o_o b). I do find the idea of adding that many shaders a bit hard to swallow, though.

Maybe (hunch alert) they will actually have better CrossFire tech by 2009 that delivers a lot more performance scaling from GPU to GPU, like they initially intended?

mactronix

Judicious

When they get the CrossFire/interconnect working somewhere near 100% and the die size down enough, then we will start seeing modular cards for even greater value. It's been heading this way since the 3870 X2.

2000 shaders would be mad!! Then what happens when things get coded to actually run well on the architecture?

Mactronix

TheGreatGrapeApe

Champion

IMO you're going to have trouble achieving higher clocks.

Think about the design: an incredible number of transistors in a tiny space, with smaller gate spacing and increased cross-talk. Less surface area to dissipate the heat per transistor (not necessarily overall).

I have little doubt that if they keep the speeds near the same as now, heat production will increase and the TDP will definitely be higher. If they could make a super-efficient cooler they could reduce temps, but more heat will definitely be produced; they just need to get rid of it efficiently.

And of course power consumption goes hand-in-hand with that: lower speed means lower power, but increased speed, along with the extra voltage needed to overcome the heat and stabilize that speed, equals more power, which translates into more heat, and so on.
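That loop lines up with the usual first-order approximation for CMOS switching power, P ≈ α·C·V²·f (activity factor, switched capacitance, supply voltage, clock). A minimal sketch, with hypothetical scaling factors rather than measured HD 4870 numbers, showing why a clock bump that also needs a voltage bump costs more than the clock bump alone. It ignores leakage, which also rises with temperature.

```python
def dynamic_power(alpha: float, capacitance_f: float, voltage_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power in watts: P = alpha * C * V^2 * f."""
    return alpha * capacitance_f * voltage_v ** 2 * freq_hz


def relative_power(freq_scale: float, voltage_scale: float) -> float:
    """Switching power relative to a baseline, with alpha and C held constant."""
    baseline = dynamic_power(1.0, 1.0, 1.0, 1.0)
    return dynamic_power(1.0, 1.0, voltage_scale, freq_scale) / baseline


# Hypothetical: +25% clock at the same voltage, versus +25% clock needing +10% voltage.
print(f"clock only:        x{relative_power(1.25, 1.00):.3f}")
print(f"clock and voltage: x{relative_power(1.25, 1.10):.3f}")
```

With these made-up factors the second case comes out at about 1.51x versus 1.25x, because voltage enters squared.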

Wasn't there talk this past year of a new heatsink material being researched that would allow for recovery of waste heat? If GPUs keep going in this direction, we'll need something like that.

If Q1 is right for the next card, I might just skip the 4870x2. After all, the 3870x2 is "only" 6 months old and still runs current games well. By the first quarter of next year, I'll have an AM3 board, DDR3 and Deneb.

How often do you guys upgrade? I used to upgrade my GPU once every 2 years, but the performance boost of the 4xxx series over the 3xxx is amazing. If the RV870 scales up in performance, then ATI should have a very good year for both holiday games and next spring's.

hannibal

Distinguished

Well, the 740 seems more interesting. It should reduce GPU prices somewhat.

The RV870 GPU should be out at about the same time as the rumored first DX10.1 Nvidia card. Maybe then we'll see a card that can run Crysis at a reasonable speed on very high settings... well, at least at a resolution of 1024x768...

These are of course just rumours, but it's quite near the time when ATI said they'd be getting back to the high end... (Yeah, the 4870 is great, but I think even ATI was surprised by the speed of that card.) So this RV870 may be the first high-end ATI card in years. I just hope it doesn't meet the problems of the 2900 series and Nvidia's G200 series. High-end chips are a somewhat risky business. Just like TheGreatGrapeApe said, the heat can be a really big problem.

outlw6669

Splendid

2000 shaders

That would be awesome!

I would like to see them try to stick a single-slot cooler on that.

@ hannibal

According to Fudzilla...

Nvidia will never do DX10.1.

They are going to jump directly to DX11.

@ yipsl

I think you were referring to this article.

It has definite potential. With the current 25% efficiency, however, I doubt it will be viable for some time. Definitely one to keep my eyes open for, though.

outlw6669

Splendid

I hate to quote Fudzilla again, but according to them DX11 will not support ray tracing. Hopefully DX11 is picked up faster than DX10 *glares at nVidia*, or we will be stuck with DX9 for quite a while to come.

In any case, I doubt ATI's refresh will support DX11. It is the "Tock" in their current strategy: new arch, Tick; shrink and optimisation, Tock. Wait for the R9xx to support DX11 (or DX11.1 if nVidia holds their course).

The_Abyss

Distinguished

I tend to upgrade whenever a new generation comes out. My recent history goes:

- Ti4200

- FX5900 (last 2 weeks until...)

- 9800 Pro

- 6800GT

- 7800GT

- 7900GT (freebie)

- 8800 GTS 640

I didn't see any of the G92 series as worth upgrading to, as:

a) I don't need an e-penis extension, and

b) the GTS played everything at 1920 x 1200 (sometimes with AA) adequately, other than the worst-coded game in the world, Crapsis.

I'll definitely be moving up again on the current generation. I just want to see if the 4870X2 and its drivers are all they are cracked up to be; less than a month to go now.

Otherwise, it will probably be just a single 4870 for me, as aside from the aforementioned badly coded piece of AIDS-infected cat sick, there is still nothing new out that I'm likely to play that needs all the extra power.

Back on the original topic, this seems very much to be an evolution, moving to yet more efficient silicon and using the opportunity to squeeze a bit more on the die.

Both ATI and Nvidia really need to increase their R&D into cooling solutions IMO. The amount they spend on researching the actual chips dwarfs what is actually required to make it run comfortably for the end user. How some small companies can come up with relatively cheap 3rd party solutions so quickly and so efficiently should frankly embarrass and shame ATI and Nvidia far more than it does.

If used, High-K metal gate (HKMG) offers better efficiency, meaning more of the electrons are actually used rather than bled off, or in some cases leaked right through the chip. This would bring heat issues down significantly. This is what I'm wondering: because GPUs have a higher tolerance for heat before failure, they can probably go to this process (40/45nm) without HKMG, but after that, wouldn't it be required? @ Ape, I agree 100%. Lower clock speeds, but the rumored shader speeds over core speeds would help a lot with this.

TheGreatGrapeApe

Champion

mactronix :

I am wondering what the factor is you would need between the shrink and the increase in transistors to offset the power/heat increase?

Well, JDJ already mentioned the High-K method that Intel is already using. Unlike the previous Low-K dielectric material alone, you would have Low-K material but with High-K gates, which should reduce leakage at the gates. This would help efficiency greatly, but it might still only yield a fractional benefit, because if you take out the leakage part of the equation, would you not still have a very power-hungry, high number of transistors? To me, the doped gates are usually an effort to keep things from increasing at a geometric rate, but they still increase, more in line with transistor count alone and not greatly affected by gate and channel proximity. The biggest problem IMO: TSMC doesn't currently offer High-K gate doping, nor is it on their 40nm roadmap... currently. So far it seems that, like IBM, TSMC will enter the High-K game with the 32nm process next year. I haven't heard any positive updates on the TSMC front about adding it before then; though there was talk of adding it in the 45nm or even 40nm timeframe, nothing has materialized yet that I've seen.
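The leakage argument can be sketched as a toy model in which each transistor contributes a switching term plus a leakage term. The 75/25 split and the per-transistor values are hypothetical, in normalized units, and are not TSMC or Intel data; the point is only that zeroing the leakage term still leaves a total that scales with transistor count.

```python
def total_power(transistors: float, dynamic_per_t: float, leakage_per_t: float) -> float:
    """Toy model: every transistor adds a switching term and a leakage term."""
    return transistors * (dynamic_per_t + leakage_per_t)


# Normalized, hypothetical units: today's chip draws 1.0, split 75% switching / 25% leakage.
today = total_power(1.0, 0.75, 0.25)

# Next chip: twice the transistors, gate leakage eliminated entirely
# (an optimistic best case for High-K), and no per-transistor switching savings.
next_no_leak = total_power(2.0, 0.75, 0.0)

# Per-transistor switching power the next chip would need to match today's total.
breakeven_dynamic = today / 2.0

print(f"today: {today:.2f}, next (no leakage): {next_no_leak:.2f}")
print(f"switching power per transistor must fall to {breakeven_dynamic:.3f} (from 0.75)")
```

Even with leakage gone, the doubled chip draws 1.5x in this model unless the shrink also cuts per-transistor switching power by a third.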

As for changing some of the clock properties, things like clock gating (for idle power) and separate clock domains for specific areas like the shaders would help somewhat, although with ATi's current design they would likely help less, since the TMU is very closely tied to the long shader cluster. It's not the ROPs or ancillary parts that are generating the most heat; it's highly likely the shaders, which compose most of the chip, so making them faster while keeping everything else at 500MHz likely wouldn't achieve much, power- and heat-wise. If anything, you'd once again only benefit at less than max load, where you could turn off shader clusters etc. However, they kinda already do that a bit as it is; you would just increase fractional efficiency, where instead of 150W at 50% load it's 125W, but when maxed you're still at 200+W or whatever. I think yes, you do want to do it to add some additional efficiency, and hope that all these little things add up to bigger savings.
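A toy model of the gating argument: a static share that is always drawn (minus whatever idle clusters can be gated off) plus a dynamic share proportional to load. The 100W/100W split and the 50% gateable fraction are hypothetical, picked only so the model reproduces the illustrative 150W to 125W at 50% load and 200W at full load figures in the paragraph above.

```python
def board_power(load: float, static_w: float = 100.0, dynamic_w: float = 100.0,
                gateable_fraction: float = 0.0) -> float:
    """Toy power model for a load in [0, 1].

    Static power is always drawn, except the gateable part belonging to idle
    clusters; dynamic power scales linearly with load.
    """
    if not 0.0 <= load <= 1.0:
        raise ValueError("load must be between 0 and 1")
    idle_share = 1.0 - load
    static = static_w * (1.0 - gateable_fraction * idle_share)
    return static + dynamic_w * load


print(board_power(0.5))                          # 150.0, no gating
print(board_power(0.5, gateable_fraction=0.5))   # 125.0, idle clusters gated
print(board_power(1.0, gateable_fraction=0.5))   # 200.0, nothing idle to gate
```

Gating only ever removes power from idle clusters, so at full load the saving in this model is exactly zero.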

You can get very efficient with your design, but remember we're talking about 2 billion transistors in a die that will be almost as small as the current one (18x18mm vs 16x16mm), that's still a very small surface area to dissipate heat, and that to me is going to require attention as well. Both chip and HSF designs need to be improved IMO.
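The surface-area concern can be put in terms of transistor density. The 16x16mm and 18x18mm edges and the 2 billion figure are from the post; the RV770's roughly 956 million transistors is added here. If each transistor dissipated the same power, heat per mm^2 would rise by the density ratio, so this sketch also prints how far per-transistor power would have to fall to hold W/mm^2 flat.

```python
RV770_TRANSISTORS = 956e6   # RV770 (HD 4870), approximate
NEXT_TRANSISTORS = 2.0e9    # figure used in the post
RV770_EDGE_MM = 16.0
NEXT_EDGE_MM = 18.0


def density_per_mm2(transistors: float, edge_mm: float) -> float:
    """Transistors per mm^2 for a square die with the given edge length."""
    return transistors / (edge_mm * edge_mm)


current = density_per_mm2(RV770_TRANSISTORS, RV770_EDGE_MM)
future = density_per_mm2(NEXT_TRANSISTORS, NEXT_EDGE_MM)
ratio = future / current

print(f"die area: {RV770_EDGE_MM ** 2:.0f} -> {NEXT_EDGE_MM ** 2:.0f} mm^2")
print(f"density: {current / 1e6:.2f}M -> {future / 1e6:.2f}M transistors/mm^2 (x{ratio:.2f})")
# Same W/mm^2 requires per-transistor power to drop to 1/ratio of today's.
print(f"per-transistor power must fall to {1.0 / ratio:.0%} of today's")
```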

TheGreatGrapeApe

Champion

Hey, just re-read the second part of Nordic's post (following their link): that's going to be 2000 shaders on 2 chips, so only 1000 per chip, which isn't as much of an issue (I'm almost surprised it would involve a die size increase for just a 12.5% shader increase, given a full node jump).

This definitely opens up the situation a little more. Also means significantly less performance per chip, but definitely not the heat/power nightmare I was thinking before.

mactronix

Judicious

Thanks a lot TGGA, that helps me get my head around it a lot. I think.

So pretty much what it boils down to is that increasing the transistor count significantly can't really be offset to any beneficial degree by die shrinking.

Turning down the MHz wouldn't really help either.

Is it possible that we will get to the stage where water cooling becomes standard?

I recently read an article about stacking CPU chips and honeycombing them with tiny hair-width water channels for cooling. Could something like that work here, say, laying cooling channels on die alongside the shaders, filled with a suitable conductive liquid that wouldn't evaporate away? Or would that be a double-jeopardy kind of situation, where the very thing you are cooling with is actually keeping the heat in? My reasoning is that it would spread the heat out and therefore reduce it, while also presenting a bigger actual surface area to the heatsink and fan.

Mactronix

TheGreatGrapeApe

Champion

Well yeah, I think it's IBM using those micro-channels; they were the first to present the micro-pump idea about 5+ years ago. However, I still think it's very early for that type of cooling. I think they're reserving that type of thing as a nano-tech solution for when optical lithography reaches its limits. In the meantime it's likely still easier and cheaper to run bigger, lower-speed chips than to move to such exotic solutions, regardless of how attractive they are outside of the cost/benefit aspect, which is still the weightiest factor.

mactronix

Judicious

Yes, I realised the impact on the production process about 10 mins after I posted.

Is there a reason why (apart from keeping the cost down) they don't just shrink the transistors but space them out more on the die? Would the extra distance the current would have to travel increase the heat and potential leakage?

Thanks for answering all these, by the way. I'm learning some things today.

Mactronix

TheGreatGrapeApe

Champion

I don't know what the impact on greater die spacing would be.

Some things are a specific size, of course, but you could increase the distance between those components and space them out more. However, I think that would become a yield issue, where you wind up with a noticeably bigger die.

You have to consider that drawback in terms of how many offspring you can get from a single chip and which SKUs remain profitable. I think that is the reason they focus more on die size than on transistor/component spacing. If you clock an RV770 at 400MHz, you probably still have a reasonable shot at making what used to be called an SE/LE/etc. part, selling your binned components on cards at $100-150 and still making a profit. Make the die bigger, and each chip costs more, so you would need a higher failure rate to make such a part attractive (since you're selling something that would otherwise be discarded).
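The die-size/cost tradeoff can be sketched with two common first-order approximations: gross dies per wafer with an edge-loss correction, and a Poisson yield model Y = e^(-A·D0). The 300mm wafer, defect density and wafer cost are hypothetical placeholders, not TSMC figures; the 256mm^2 and 324mm^2 areas correspond to the 16x16mm and 18x18mm dies discussed in the thread. Binning, as described above, is what recovers some value from the defective dies this model simply throws away.

```python
import math

WAFER_DIAMETER_MM = 300.0  # assumption


def dies_per_wafer(die_area_mm2: float, wafer_d_mm: float = WAFER_DIAMETER_MM) -> int:
    """Gross dies per wafer, minus an approximate edge-loss term."""
    radius = wafer_d_mm / 2.0
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_d_mm / math.sqrt(2.0 * die_area_mm2)
    return int(gross - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies with zero defects under a Poisson defect model."""
    return math.exp(-die_area_mm2 * defects_per_mm2)


def cost_per_good_die(die_area_mm2: float, wafer_cost: float, defects_per_mm2: float) -> float:
    good_dies = dies_per_wafer(die_area_mm2) * poisson_yield(die_area_mm2, defects_per_mm2)
    return wafer_cost / good_dies


D0 = 0.004           # hypothetical defects per mm^2 (0.4 per cm^2)
WAFER_COST = 5000.0  # hypothetical wafer cost in dollars

for area in (256.0, 324.0):
    print(f"{area:.0f} mm^2: {dies_per_wafer(area)} dies, "
          f"yield {poisson_yield(area, D0):.0%}, "
          f"${cost_per_good_die(area, WAFER_COST, D0):.0f} per good die")
```

With these placeholder numbers, a die about 27% larger costs roughly 1.7x as much per fully working chip, because it loses on both dies per wafer and yield.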

ATi was pretty impressed with their ability to pack everything tightly on this HD4K die without much wasted space. They implemented a few changes to make things even more tightly packed, and started the X-junction fab changes in either the 110nm or 90nm TSMC process, which saved them a whole layer. It seems their focus is primarily on die size and density over heat/power concerns. Whether that will remain the case is a different question, and I'm sure the reaction to both the single- and dual-chip HD4K models will influence their pursuits on the R8xx generation.

IMO, they need to strike a better balance of heat & power consumption vs performance and transistor density, because a modular design is a great idea, but you lose a lot of the benefit when your single smaller modular die is hotter and more power-hungry than the single mega die it competes with.

It's all about the tradeoffs, but IMO if the GTX280 comes close to the R700 with significantly less power and heat, it'll really make me wonder about ATi's ability to move to a modular future with their current architecture.

Don't get me wrong, the HD4K is a great chip, but it's definitely not displaying the attributes I would expect from what we expected to be a multi-GPU revolution building block.

They added that about the X2 later. This is how I see it, then. I'm thinking they'll still go for a higher shader clock, and didn't we see better power/performance going from the 2xxx to the 3xxx series? I'm thinking we'll see a repeat of this again. The speed of the shaders will cause some heat issues, but since it isn't a huge increase in shaders like we saw going from 2xxx to 3xxx, it'll be more manageable. Besides, it gets to a certain point where you're flogging a dead horse using the same arch. Just like we saw very little improvement with the G280, I'm thinking the same for the 5xxx series, other than the shader clocks.

Guest

ATI is coming out with 45nm while nVIDIA is still on 65nm?

45nm will bring great OC levels.

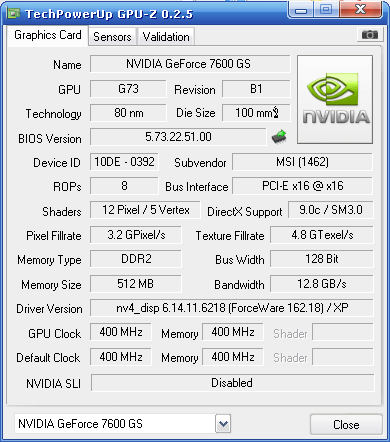

I have an MSI NX 7600GS and I can't OC it beyond 433MHz (stock = 400MHz)

because it's an 80nm GPU!

Even the GeForce 8600 still uses 80nm (article).

TheGreatGrapeApe

Champion

Just finishing up here at work so this'll be my last post for a bit, check in later.

JAYDEEJOHN :

They added that about the X2 later. This is how I see it, then. I'm thinking they'll still go for a higher shader clock, and didn't we see better power/performance going from the 2xxx to the 3xxx series?

Yes, that's true, but they also went from a problematic TSMC 80nm HS fab to the luckily very stable 55nm fab, which jumped a full node in the process (a node move and optical shrink, bypassing 65nm), and this time around you'd be going from a stable 55nm process to an unknown 45/40nm fab. It may be better, the same, or worse. I wouldn't count on the same power/heat reductions we got going from HD2K to HD3K.

I'm thinking we'll see a repeat of this again. The speed of the shaders will cause some heat issues, but since it isn't a huge increase in shaders like we saw going from 2xxx to 3xxx, it'll be more manageable.

I agree somewhat; it's definitely less than I originally thought (+12.5% vs +150%), but I'd say adding 12.5% and then increasing speed by at least that amount (going from 800MHz to 1GHz) would be quite a burden.
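The compounding in this reply can be made explicit. A minimal sketch, assuming shader throughput and shader power both scale linearly with unit count and clock at constant voltage (a simplification); any voltage increase needed to hold 1GHz would add to the power side, roughly with the square of the voltage.

```python
def combined_scale(unit_increase: float, old_clock_mhz: float, new_clock_mhz: float) -> float:
    """Relative throughput (and, at constant voltage, roughly relative power)
    from adding units and raising the clock together."""
    return (1.0 + unit_increase) * (new_clock_mhz / old_clock_mhz)


# +12.5% shaders and 800MHz -> 1GHz, the figures from the post.
print(combined_scale(0.125, 800.0, 1000.0))  # 1.40625
```

Two individually modest-looking increases compound to about 41% more.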

Besides, it gets to a certain point where you're flogging a dead horse using the same arch. Just like we saw very little improvement with the G280, I'm thinking the same for the 5xxx series, other than the shader clocks.

Definitely, but the question is how you change. I'm curious about their scaling if they're still resorting to amplification of components and not exploiting raw efficiencies.

I think the HD4K is a very weird beast, though, because as a single chip it's very cost-focused, but heat- and temp-wise it's not better than the GTX, which is that big single-chip solution. When it came out we were all thinking, yeah, nV's in big trouble, because you'd never put that much heat & power draw on a single card, but oie, it's actually nearly the same as the HD4K, and it's not the power/heat that's keeping them from making a GX2, it's the yields & cost (especially of a complex board).

I don't know, I guess I'm a little bitter that this loses them the modular option for laptop solutions, where there's equally no freakin' way they're plopping an HD48xx into a laptop in anywhere near its current form. To me, that was where another benefit of the modular design lay, and it's lost due to the power/heat issues.

There's no arguing that increasing so many components by 2.5X without increasing power consumption by much more than 25%, while still consuming less than the HD2900XT, is an impressive feat. However, it does limit modular designs greatly: there's little benefit to an X2 when running two of them in QuadFire mode yields over 500W of actual draw from the cards alone, versus less from 2 GTXs in SLI, and with Tri & Quad scaling still to be refined, I'm not sure QuadFire would be as attractive as GTX SLI in many cases. Anywhoo, it's still early for any of this; it's just my major reservation so far, which may admittedly be jumping the gun a bit.

ATI is coming out with 45nm while nVIDIA is still on 65nm?

nV will be 55nm by the fall with the GTX refresh.

I have an MSI NX 7600GS and I can't OC it beyond 433MHz (stock = 400MHz)

because it's an 80nm GPU!

Even the GeForce 8600 still uses 80nm (article).

I thought the GF7600GS was 90nm and only the GF7650 was to be 80nm, but then again there's lotsa naming monkey business.

Anywhoo, 45nm doesn't guarantee higher OCs any more than TSMC's overheating 80nmHS did for the R600. One would hope it offers some more headroom, but that depends on other factors like power & heat, as well as a stable process node.

Guest

TheGreatGrapeApe :

I thought the GF7600GS was 90nm and only the GF7650 was to be 80nm, but then again there's lotsa naming monkey business.

But GPU-Z tells me my GPU is 80nm!

TheGreatGrapeApe :

Anywhoo, 45nm doesn't guarantee higher OCs any more than TSMC's overheating 80nmHS did for the R600. One would hope it offers some more headroom, but that depends on other factors like power & heat, as well as a stable process node.

That makes it different from CPU chips, because when a CPU is made smaller it becomes more powerful, like the difference between the E7200 at 45nm and the E6850 at 65nm!

Tom's Hardware is part of Future plc, an international media group and leading digital publisher.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.