Niche idea.

If I design the card to fit in a single PCIe slot, would I encounter thermal dissipation issues?

PCI-E 3.0 drives operate at ~55°C. So if you group several together, you'd be feeding heat from one drive to another. You'd also be heat-soaking the PCB, which in turn raises temps further. Moreover, I'm concerned about the rightmost drive slot, since it is way too close to the 12VHPWR power socket. That socket can deliver up to 600 W, so its power pins would also get very hot, probably feeding heat to the drive right next to it. Heck, many 12VHPWR connectors have already melted on RTX 4090 GPUs.

Given this, active cooling for the drives would be necessary, along with far more space between the 12VHPWR connector and the rightmost drive slot.

With PCIe 3.0 speeds, would x8 or x16 configurations be sufficient to meet current market demands?

No.

When all 8 drives are populated, that leaves 2 lanes per drive (in x16 mode) or only 1 lane per drive (in x8 mode).

Nearly all consumer PCI-E 3.0 NVMe drives operate in x4 mode, e.g. my Samsung 970 Evo Plus 2TB. When such a drive has to operate in x2 mode, its peak read/write speed is cut roughly in half. And in x1 mode, only about a quarter would remain.

The 970 Evo Plus 2TB is rated at 3500 MB/s read and 3300 MB/s write. In x2 mode, it's half: 1750 MB/s read and 1650 MB/s write. In x1 mode, it's a quarter: 875 MB/s read and 825 MB/s write. That is only modestly faster than SATA3, which tops out around 550 MB/s.
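To make the arithmetic above easy to re-run for other drives or card widths, here is a rough Python sketch. It assumes throughput scales linearly with link width (a simplification; real-world results also depend on the controller and protocol overhead), and the function names are just illustrative.

```python
# Rated sequential speeds of the Samsung 970 Evo Plus 2TB, as quoted above (MB/s).
RATED_READ_MBPS = 3500
RATED_WRITE_MBPS = 3300
NATIVE_LANES = 4  # the drive's native link width (x4)


def lanes_per_drive(slot_lanes: int, drives: int) -> int:
    """Split the card's lanes evenly across the populated drives."""
    return slot_lanes // drives


def scaled_speed(rated_mbps: float, lanes: int) -> float:
    """Assumption: speed scales linearly with lanes, capped at the rated speed."""
    return rated_mbps * min(lanes, NATIVE_LANES) / NATIVE_LANES


for slot_lanes in (16, 8):
    lanes = lanes_per_drive(slot_lanes, drives=8)
    read = scaled_speed(RATED_READ_MBPS, lanes)
    write = scaled_speed(RATED_WRITE_MBPS, lanes)
    print(f"x{slot_lanes} card, 8 drives: x{lanes} per drive, "
          f"~{read:.0f} MB/s read, ~{write:.0f} MB/s write")
```

Swapping in the rated speeds of a different drive, or a different drive count, shows how quickly the per-drive bandwidth shrinks.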

I can't see anyone buying a drive and being happy about not getting the advertised speeds, with only half or a quarter left available.

Would it be a viable product on the market?

No.

Many people are currently buying PCI-E 4.0 drives and some are already buying PCI-E 5.0 drives. Hardly anyone buys PCI-E 3.0 drives anymore. If you had come up with your idea 5 years ago, when PCI-E 3.0 drives were the fastest, then maybe.

Also, you can't configure the add-on card to run in x16 mode that easily, since you have to consider the MoBo as well. No consumer MoBo that I know of is able to allocate 16 lanes to two PCI-E x16 slots at once. Usually it is either:

- 1st PCI-E x16 slot - in x16 mode - where the GPU goes
- 2nd PCI-E x16 slot - in x4 mode - for add-on cards (usually using chipset lanes)

Lane splitting can also be done, but it would put the PCI-E slots into:

- 1st PCI-E x16 slot - x8 mode
- 2nd PCI-E x16 slot - x8 mode

e.g. when running a dual-GPU setup.

So the only way your add-on card can utilize 16 lanes is when the person doesn't have a dedicated GPU in their system and puts the add-on card in place of the GPU. But hardly anyone would do that.

So x8 mode is more viable for your add-on card, but it then limits drive speeds to a quarter, since each drive would have only 1 PCI-E lane to operate with.

Another issue is that many GPUs are dual- or triple-slot designs, blocking off the 2nd PCI-E x16 slot. And even when there is enough space to fit the add-on card right next to the GPU, it will block the GPU fans and GPU airflow.
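The slot cases above boil down to a small decision rule. The sketch below encodes it in Python for a typical consumer board as described in this post; the function name and its parameters are made up for illustration, and actual lane allocation varies by motherboard and CPU.

```python
def card_link_width(has_dedicated_gpu: bool, slots_split_x8_x8: bool) -> int:
    """Link width the add-on card would get, following the cases described above."""
    if not has_dedicated_gpu:
        return 16  # card sits in the 1st x16 slot in place of the GPU
    if slots_split_x8_x8:
        return 8   # lanes split x8/x8 between the GPU and the card
    return 4       # 2nd x16 slot runs in x4 mode (usually chipset lanes)


for has_gpu, split in ((False, False), (True, True), (True, False)):
    width = card_link_width(has_gpu, split)
    print(f"GPU installed: {has_gpu}, x8/x8 split: {split} -> card runs at x{width}")
```

Only the no-GPU case gives the card all 16 lanes, which is why x8 is the realistic target.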

So, here is what would be viable, considering the MoBo limitations and current-day drives:

- add-on card that runs in x8 mode
- add-on card that supports PCI-E 4.0 (at minimum), or preferably PCI-E 5.0
- add-on card that has active cooling for PCI-E 4.0/5.0 drives
- add-on card that has 2x M.2 slots, so that both drives can be utilized at their full speed

This is actually what you can see currently on the market, where M.2 add-on cards have only two M.2 slots.

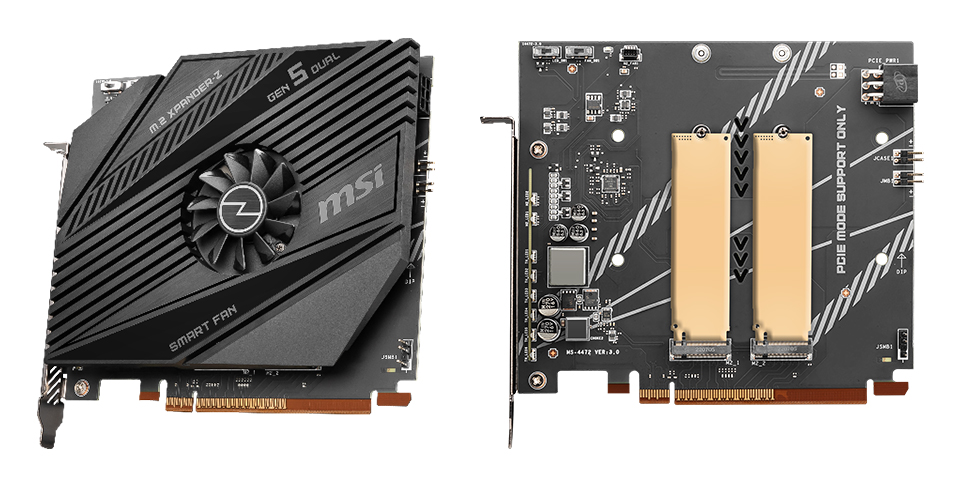

E.g like this MSI X-panderZ Dual Card add-on card, that supports 2x M.2 PCI-E 4.0 drives and runs in x8 mode;
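The two-slot layout follows from simple lane math: an x8 card only has enough lanes for two drives at a full x4 each. A quick Python sketch of that, assuming every drive needs a dedicated x4 link and the card does no lane sharing (the helper name is hypothetical):

```python
NATIVE_LANES = 4  # lanes one NVMe drive needs to run at its rated speed


def full_speed_slots(card_lanes: int) -> int:
    """Number of M.2 slots that can each get a dedicated x4 link."""
    return card_lanes // NATIVE_LANES


for card_lanes in (4, 8, 16):
    print(f"x{card_lanes} card: {full_speed_slots(card_lanes)} M.2 slot(s) at full x4")
```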

It is not viable to cut drive read/write speeds in half (or worse yet, to only a quarter) just to have more drives. Not for consumer use, and especially not for enterprise (server farm) use.