I recently decided to go with a 6-core processor because I was interested in learning some parallel programming.

But I did some benchmarks and saw things I didn't expect.

I had read that multiple cores only benefit you when you are running something that utilizes more than one core.

But that's not what my tests are showing.

Also, if I turn off 5 cores, leaving only one, the difference shows up in the results even for a program that runs on only one core.

I also found something else I didn't expect.

I had assumed that if you plot benchmark times while increasing both the number of threads and the number of iterations, you would see a drop-off in how much each additional thread helps once you exceed the number of threads the processor has.
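
To make that expectation concrete, here is a minimal Python sketch of the idealized model it implies. It assumes that each thread does the same fixed number of iterations and that the OS shares the cores perfectly with no overhead. Under those assumptions, wall time stays flat at one task's duration up to the core count and then grows in proportion to threads / cores. The function names and the `task_time` unit are hypothetical, and real measurements will deviate from this model.

```python
def ideal_parallel_time(threads: int, cores: int, task_time: float) -> float:
    """Idealized wall-clock time for `threads` equal CPU-bound tasks, one per thread.

    Assumes perfect time-slicing across `cores` cores and zero scheduling or
    synchronization overhead (a simplification, not a measured result).
    """
    if threads < 1 or cores < 1:
        raise ValueError("threads and cores must be positive")
    # Up to `cores` threads run side by side, so the time stays at one task's length;
    # beyond that, the total work (threads * task_time) is shared by `cores` cores.
    return max(1.0, threads / cores) * task_time


def ideal_sequential_time(threads: int, task_time: float) -> float:
    """The same amount of work run back to back on a single core."""
    return threads * task_time


if __name__ == "__main__":
    cores = 6          # the 6-core processor from the question
    task_time = 1.0    # hypothetical time for one thread's iterations, arbitrary units
    for n in (1, 3, 6, 7, 12, 24, 50):
        par = ideal_parallel_time(n, cores, task_time)
        seq = ideal_sequential_time(n, task_time)
        print(f"{n:2d} threads: parallel {par:5.2f}, sequential {seq:5.2f}")
```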

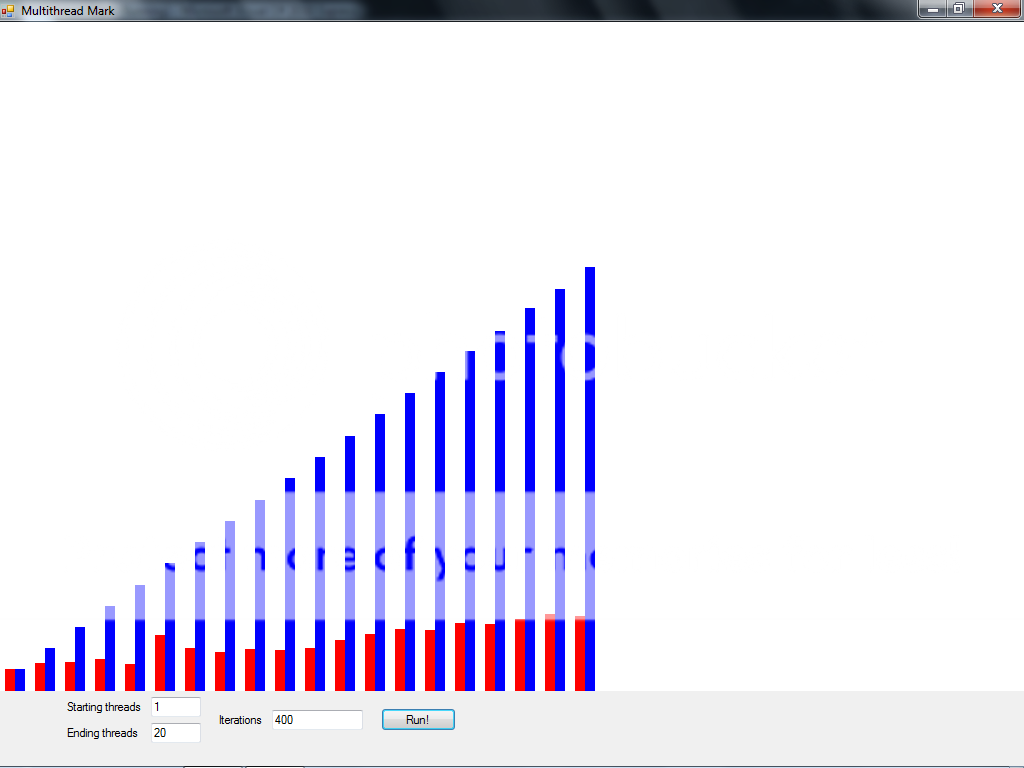

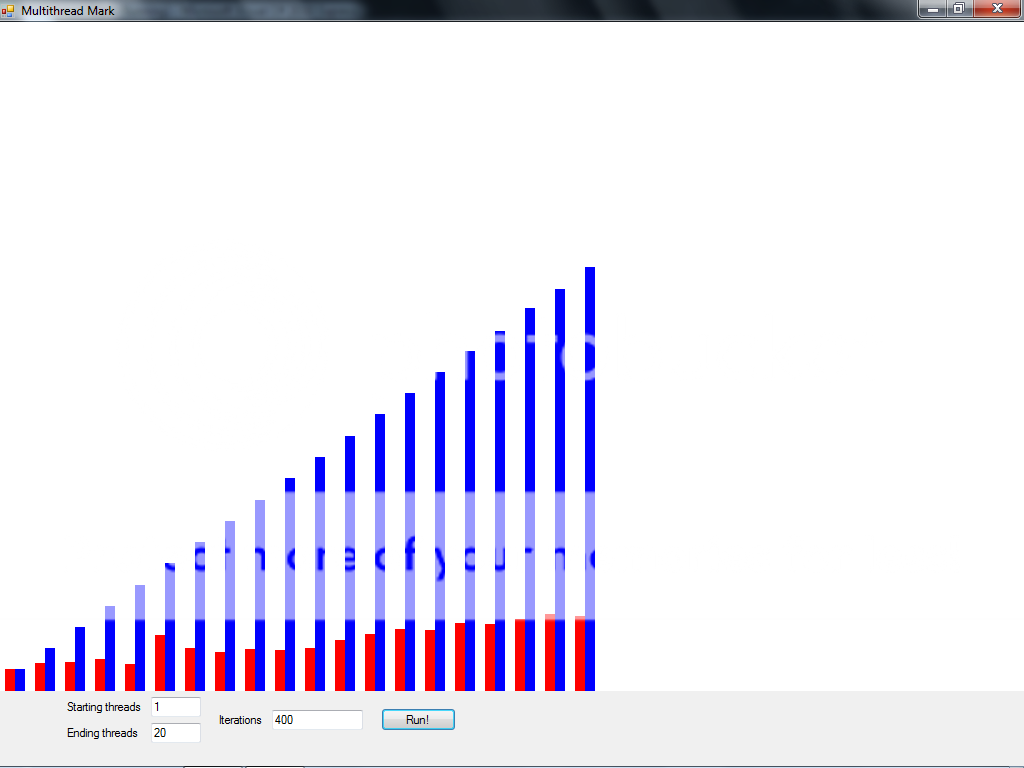

Here is what I got -

Red represents the time it takes to execute on multiple cores, and blue represents the time it takes on a single core.

The blue line increases linearly, as expected, but the red line always seems to rise in a "wave" pattern, and it doesn't spike up after all 6 cores are in use.

I've even tested with 50+ threads, and the times keep increasing at nearly the same rate.
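
For anyone who wants to reproduce this kind of comparison, below is a minimal Python sketch of such a benchmark. It is a stand-in built on assumptions, not the code behind the graphs above. `busy_work` is a hypothetical CPU-bound task. Because CPython's global interpreter lock prevents threads from running Python bytecode in parallel, the sketch uses worker processes from `concurrent.futures.ProcessPoolExecutor` in place of threads. Each task performs the same number of iterations, so the total work grows with the task count, as in the tests described above. The parallel timing also includes process start-up cost.

```python
import os
import time
from concurrent.futures import ProcessPoolExecutor


def busy_work(iterations: int) -> int:
    """Hypothetical CPU-bound task: a running sum of squares kept small with a modulus."""
    total = 0
    for i in range(iterations):
        total = (total + i * i) % 1_000_003
    return total


def time_sequential(tasks: int, iterations: int) -> float:
    """Run every task back to back in this process (the 'single core' line)."""
    start = time.perf_counter()
    for _ in range(tasks):
        busy_work(iterations)
    return time.perf_counter() - start


def time_parallel(tasks: int, iterations: int) -> float:
    """Run one worker process per task and let the OS spread them over the cores."""
    start = time.perf_counter()
    with ProcessPoolExecutor(max_workers=tasks) as pool:
        # list() forces all results to be collected before the clock stops.
        list(pool.map(busy_work, [iterations] * tasks))
    return time.perf_counter() - start


if __name__ == "__main__":
    cores = os.cpu_count() or 1  # logical CPUs visible to the OS
    iterations = 100_000         # hypothetical per-task workload; raise for steadier timings
    # Sweep past the core count so the region beyond it is visible; capped to keep runs short.
    for tasks in range(1, min(2 * cores, 12) + 1):
        seq = time_sequential(tasks, iterations)
        par = time_parallel(tasks, iterations)
        print(f"{tasks:2d} tasks: sequential {seq:.3f}s, parallel {par:.3f}s")
```

Run it as a script and compare the two columns. Raising the cap toward 50 tasks mirrors the 50+ thread test.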

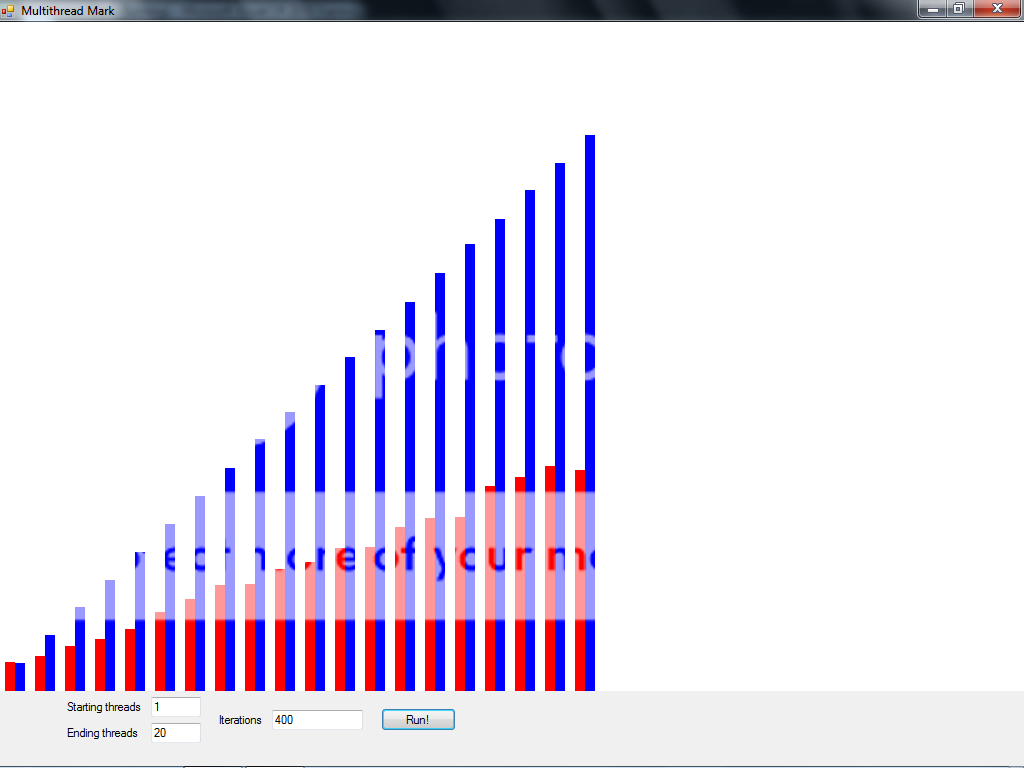

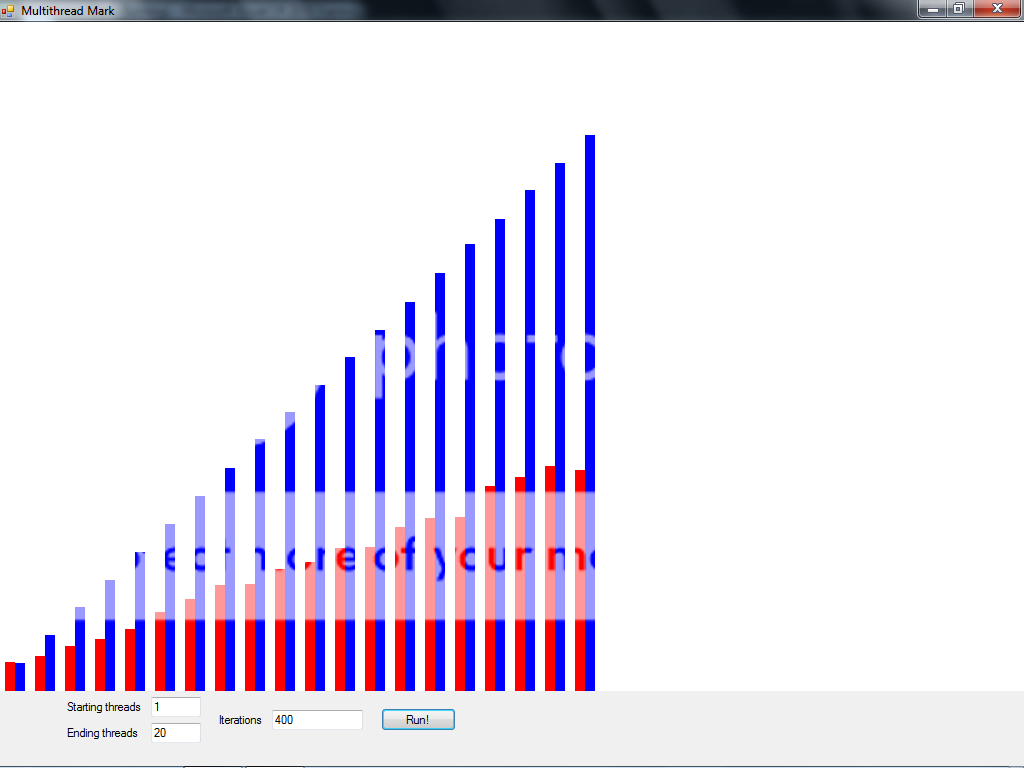

I did the test again after shutting off 3 cores and here is what I got -

The time increased even on the single-core tests.

After some other tests I confirmed this wasn't a fluke, so now I'm wondering whether this is supposed to happen, since I always hear that applications won't get faster unless they're designed to use multiple cores.

Or am I understanding this wrong?
