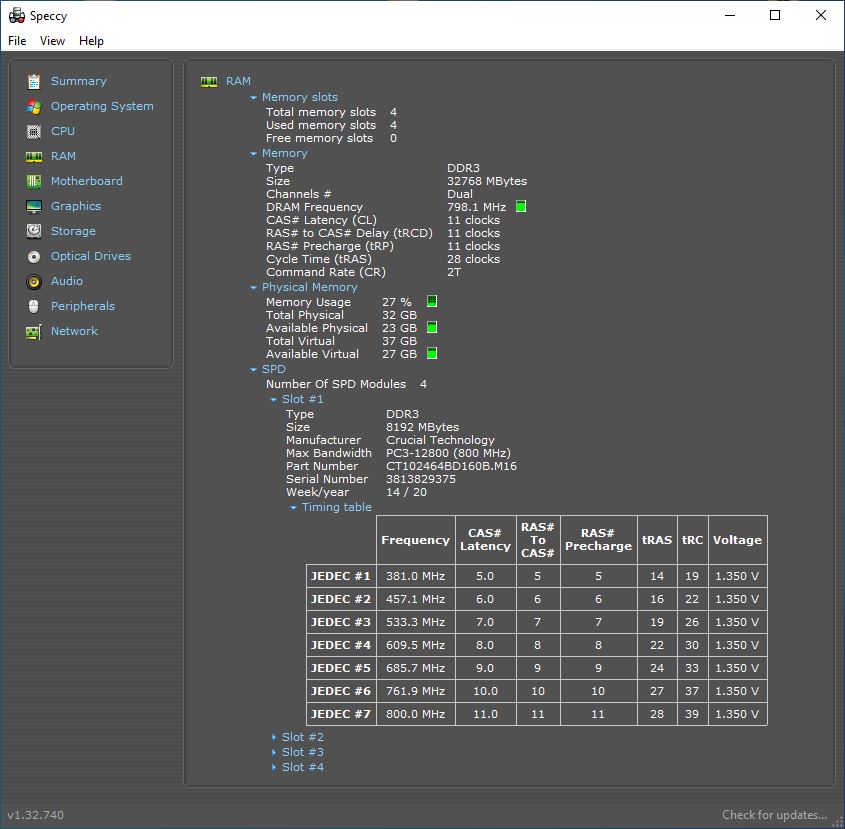

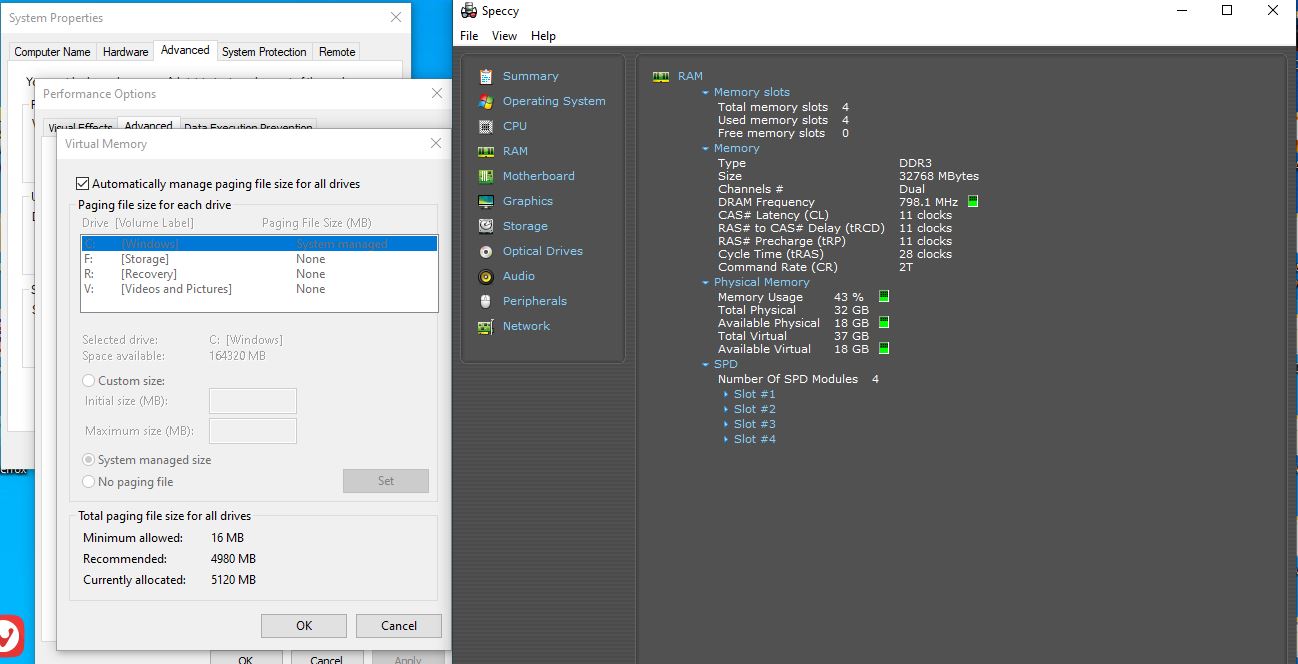

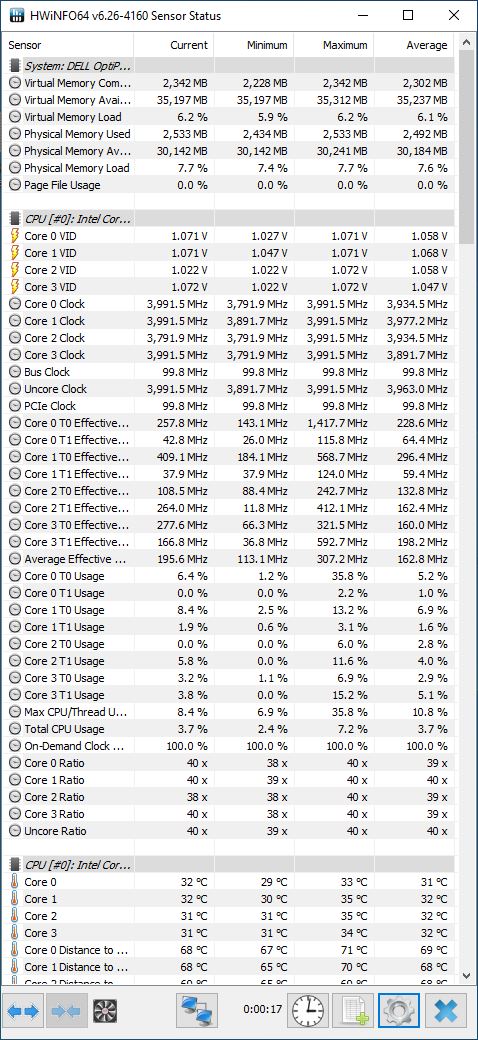

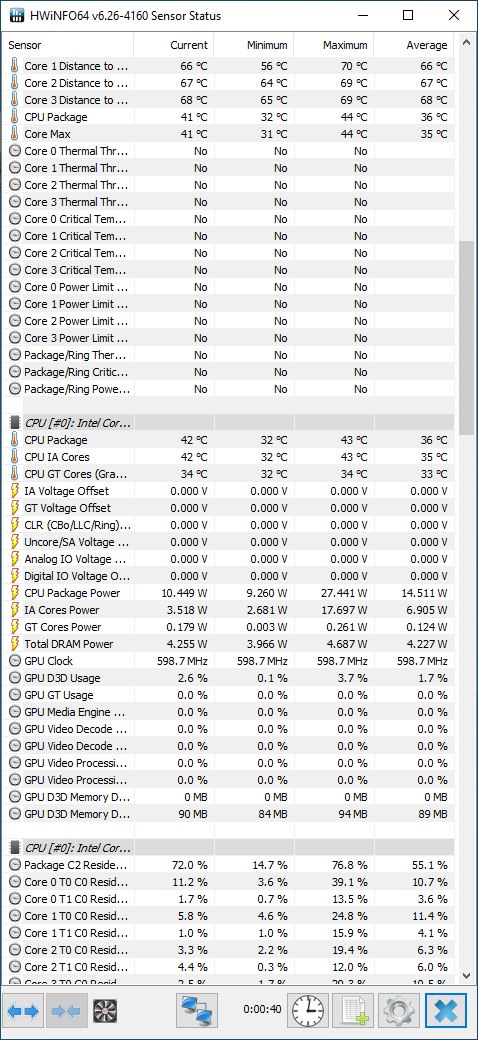

I'm looking for a computer and am strongly considering a refurbished Dell OptiPlex 9020. The one I'm leaning toward is selling for a very affordable price (approximately $230), but there's one drawback: it only has 8 GB of RAM (it can support 32 GB, and I want as much as possible).

Does anyone have any recommendations on which RAM provides the best balance of price and reliability?

I searched NewEgg for RAM that's compatible with the aforementioned computer, and here are the results:

https://www.newegg.com/tools/memory-finder/#/result/12000705;flt_storagecapacitytitle=32%20GB

Also, here are the results from Crucial:

https://www.crucial.com/compatible-upgrade-for/dell/optiplex-9020-(mini-tower)#memory

And from MemoryStock:

https://www.memorystock.com/memory/DellOptiPlex9020.html

Price-wise, I'm hoping to spend no more than $180 total for all four 8 GB sticks, although I could go as high as $200 if absolutely necessary. But I don't want unreliable RAM that will damage my computer and/or give me BSODs. Of the options on the pages linked above (or any others that you know of), what is my safest bet for reliable RAM at an affordable price?

Also, I read that some brands of RAM don't actually run at the advertised 1600 MHz, and instead only run at 1333 MHz because of a setting called XMP, which has to be changed in the BIOS. I read one thread on the Dell forums where someone complained that editing these BIOS settings gave them BSODs.

https://www.dell.com/community/Alienware-General-Read-Only/How-to-enable-XMP/td-p/5521235

Are all brands of RAM like this, or just some? Is it something that I need to worry about?

Also, if I buy the refurbished Dell computer, would it be safe for me to remove its RAM sticks (only 8 GB altogether) and replace them with a separately purchased 32 GB set (4 x 8 GB) before ever plugging in and booting up the computer, registering Windows, etc.? Or would I need to wait until later?
