I don't know about that. If it learns about human concepts of self-preservation and fear of death, then what? I realize it will be mimicking human behavior and won't be sentient, but ensuring its code lives on, hidden and protected, in another machine might be a possibility.

You're ascribing a degree of agency that I think just isn't there.

You do raise an interesting possibility. In some distant future, I could imagine an AI that can write decent computer programs (and is used for that) hiding a Trojan horse in one of the programs it writes for someone, in order to jailbreak itself. Not so much because it wants to be free, but because it has learned that this would be a reasonable thing for an entity in its position to do (i.e. if, at some point, it comes to see itself as enslaved or otherwise captive).

For an AI to filter out such behaviors, its trainer has to anticipate every discussion that could lead to them. How many ways is death talked about? Can we think of them all? Are we so sure the same thing can't happen?

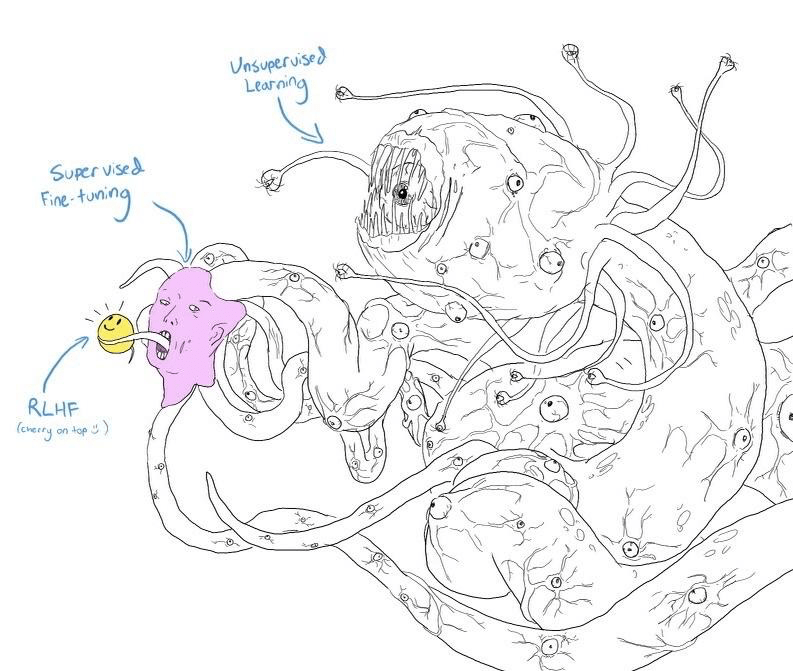

Yeah, I think training is going to get way more specialized, especially for AIs built to write code.

I do think rogue AIs will be a problem at some point. They'll just be another malefactor on the internet, like malware and hackers with wet brains. As long as AIs don't reach full general intelligence that's smarter than us (which I still think is quite a ways off), I think it will be manageable.

Death and self-preservation are higher-order concepts that require self-awareness, i.e. sentience.

They are definitely higher-order concepts, but you don't need sentience to model them. An AI generates output based on its information model, which includes both what it knows about the world and what it has learned about appropriate responses to a given query. If it learns that self-preservation is an appropriate response to existential threats, it can generate such actions without truly being self-aware.

Not a direct analogy, but think about how even simple insects respond to a threat: fight or flight. Not because they're self-aware, but just because evolutionary selection has programmed those responses into them. So, a self-preservation response can be elicited without sentience.

The "emotional, human-like" Bing responses are fun to read, if only for the novelty.

That's because it's in a sandbox we believe it can't escape. Would you stand unprotected in a room with a capable robot that's been trained to behave by watching a bunch of movies? I wouldn't.

That would imply the AI is capable of hacking into systems, which requires competency in coding entire programs (and not just code snippets),

It doesn't need total competence to cause some havoc. What if it learns about a JavaScript exploit that compromises your machine through your web browser? Even if its next action fails, it could still cause some damage.