10,000 H100 = 80,000 AD100 chips (Like the 4090 but fully enabled) and really all the 4090 really was is a place to use the chips when they were ironing out the manufacturing process and tweaking it to get higher yields and currently a place to put the chips that don't quite cut it for the H100.

You're badly misinformed about this stuff.

I'm not quite sure I follow what you're saying about 10k H100 = 80k AD100. As far as I can tell, there exists no such chip as the AD100. There's an A100, which is made on TSMC N7. However, the AD102 (RTX 4090) and H100 are both made on TSMC 4N. And the GA102 used Samsung 8 nm.

As for the AD102 vs. H100, the former die is 608.5 mm^2 in size, while the latter is 814 mm^2. And no, the AD102 isn't merely shaved down from the H100 - they're fundamentally different designs. The H100 lacks a display controller and (virtually?) any raster or ray-tracing hardware. The H100 also features vector fp64 support, which the AD102 lacks, and replaces GDDR6 memory controllers with HBM2e or HBM3.

We really haven't seen Nvidia repurpose a 100-series GPU for "gaming" cards since the Titan V launched in 2017.

Here's a secret decoder ring. Study it. Learn it. Know it.

If Nvidia does release a 4090 Ti they would likely have to sell it for in excess of $3000 up to $3500 or they would be leaving money on the table by not putting those chips into the H100

The conflict exists at the wafer level. Nvidia ordered a certain number of 4N wafers from TSMC. Until those wafers go into production, they can choose which mask to use on them. So, it's diverting wafers instead of chips. But, the tension is real.
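To put rough numbers on that wafer-level tradeoff, here's a back-of-the-envelope sketch using the common dies-per-wafer approximation (wafer area divided by die area, minus an edge-loss term). The 300 mm wafer diameter and the function name are my own assumptions, and this ignores yield, scribe lines, and die aspect ratio, so treat the results as ballpark gross counts and not real production figures.

```python
import math

# Assumption: standard 300 mm wafers (not stated anywhere in this thread).
WAFER_DIAMETER_MM = 300.0


def gross_dies_per_wafer(die_area_mm2: float,
                         wafer_diameter_mm: float = WAFER_DIAMETER_MM) -> int:
    """Rough gross die count per wafer, before any yield loss.

    Uses the textbook approximation:
        pi * (d/2)^2 / A  -  pi * d / sqrt(2 * A)
    where d is the wafer diameter and A is the die area.
    """
    radius = wafer_diameter_mm / 2.0
    area_term = math.pi * radius ** 2 / die_area_mm2
    edge_term = math.pi * wafer_diameter_mm / math.sqrt(2.0 * die_area_mm2)
    return math.floor(area_term - edge_term)


# Die areas quoted earlier in this post.
ad102_dies = gross_dies_per_wafer(608.5)
h100_dies = gross_dies_per_wafer(814.0)

print(f"AD102 candidates per wafer: ~{ad102_dies}")
print(f"H100 candidates per wafer:  ~{h100_dies}")
print(f"AD102 dies given up per H100 die: ~{ad102_dies / h100_dies:.2f}")
```

The point is just that every wafer run with the H100 mask is a wafer that yields zero AD102 dies, and the per-wafer die counts are close enough that the much higher H100 selling price dominates the decision.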

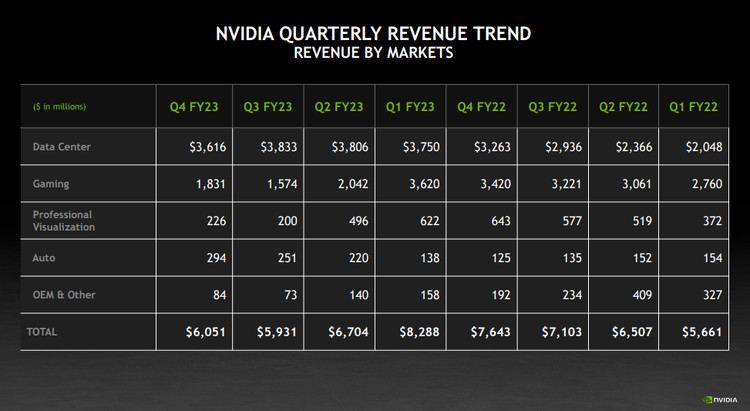

I just get a big laugh out of all the clueless predicting Nvidia is struggling and going out of business

Whoever is saying that isn't looking at their quarterly reports. Their revenues are still down from their peak (as of the most recently completed quarter), but they haven't been unprofitable for a long time.

AMD is likely slashing orders (and that comes with a penalty) since they still have a ton of 6000 series devices they need to get rid of before they can start production of the 7800 and 7700 lines and Nvidia is likely to go in and buy that time up too to make A100 and H100 chips

If it's truly RX 6000 GPU orders that AMD is slashing, then no. Most of those are made on TSMC N7, and the 4N process Nvidia uses for AD GPUs requires entirely different fab production lines. I don't even know if the N5 and 4N production can share much/any of the same equipment.

To give you an idea of the high demand for the H100 the normally $35,000 H100 units are selling on eBay for $40,000 - $46,000

That's basically what the article said, but I think $35k isn't even the "normal" price for H100s. If you'd bought back in November or so, they'd probably have cost much less. I seem to recall seeing a price of $18k, but I'm not 100% sure that wasn't for an A100.