News Far Cry 6 Proves Consoles Aren't Powerful Enough for Ray Tracing

RT feels a bit like 3D TVs: Expensive hardware for little benefit. I wonder if interest will wane just as dramatically.

> Far Cry 6 Proves Consoles Aren't Powerful Enough for Ray Tracing

What?

I guess Spider-Man: Miles Morales, Ratchet & Clank: Rift Apart and Resident Evil Village with ray tracing on consoles don't exist...

Sure, the consoles are not as powerful as a top-end PC for ray tracing, though even the mighty 3090 chokes on some games with RT, so that's not powerful enough either. But come on, with that big title statement you just shot yourself in the foot.

This site is going more and more downhill...

You are taking one of the laziest third-party dev studios as the rule, and because they can't get RT working in their game on consoles, when others have already proved it works, you generalize and shout it from the rooftops with a clickbait title. Amazing.

> That being said, even my 6900XT, factory overclocked, chokes on CP2077 at 4k native with raytracing. To be fair, AMD didn't design these GPUs with RT specifically in mind, like NVidia did with its tensor cores, and the 6900XT kicks butt in non-RT situations.

If you want to be fair, you would also say that the 3090 chokes on CP2077 at 4K native with RT on.

Actually, it does not even hold a steady 60fps at 4K with RT on and DLSS on... That's the sad truth about both how underpowered even the best GPUs of today still are for RT and how badly optimized CP2077 is...

> Stupid article…
>
> Look at Spider-Man and Ratchet and Clank. Those look more realistic and have raytracing.
>
> This is just bad game design.

Yes, very stupid article. Also, so many ignorant people agree with it...

david germain

Distinguished

It would be nice to get a plug-in external RT module, or maybe have the SSD upgrade slot repurposed for an RT module, even if it just helps offload some of the non-critical stuff from the CPU/GPU, like audio, menus, and some of the network stuff.

Heat_Fan89

Reputable

> RT feels a bit like 3D TVs: Expensive hardware for little benefit. I wonder if interest will wane just as dramatically.

Yes to this. Those have been my thoughts for quite some time now. Games already look great without RT. To me it doesn’t improve the gameplay; it’s just another way to sell a game in an overly saturated market. I have seen screenshots of games with and without RT and my initial response has been, "So?"

If the game is good, RT won’t really matter that much. I have an RTX 3080 and I have not enabled RT in any of the games that use it like Control.

And damn, I can’t wait for Far Cry 6. I’ve been playing Far Cry 5 and it is crazy fun, and the story is pretty good. On the Xbox One the game looks good enough that I don’t feel I’m missing out if there’s no RT in the game.

Heat_Fan89

Reputable

> What?
>
> I guess Spider-Man: Miles Morales, Ratchet & Clank: Rift Apart and Resident Evil Village with ray tracing on consoles don't exist...
>
> Yes, very stupid article. Also so many ignorants that agree with it...

Wow, it sounds like you took this a bit personally, mate, like the writer insulted your mom. No one said RT can't be done on a console. The question becomes how much performance you want to trade for it and what level of RT the game will introduce. That's what Ubisoft was trying to say. They came to the conclusion that it wasn't worth it.

While you called the developer lazy, you fail to understand that Spider-Man: Miles Morales was built around the PS5. The RT screenshots I saw of Spider-Man and RE:V were not earth-shattering. RT in MMS or RE:V, no big deal.

The Far Cry series is one of Ubisoft's top IPs and they have deadlines and budgets to meet, something you apparently don't realize. If they had the time and resources, sure, anything is possible.

Soaptrail

Distinguished

> Ubisoft is right to make the Far Cry 6 ray tracing effects run on PCs only.
>
> Far Cry 6 Proves Consoles Aren't Powerful Enough for Ray Tracing : Read more

Is this part of the article a typo?

> RT might look better in a lot of cases, but the visual improvements aren't large enough that turning off RT and getting a boost in framerates isn't generally the better choice.

Shouldn't it say "visual improvements aren't large enough that turning off RT and getting a boost in framerates IS generally the better choice"?

hotaru.hino

Glorious

> RT feels a bit like 3D TVs: Expensive hardware for little benefit. I wonder if interest will wane just as dramatically.

Likely no. But since my morning coffee hasn't kicked in, I'll just summarize my thoughts in bullet point form:

- The same lighting effects using rasterization already take up a significant amount of computational power. It's just that we've had 10+ years of experience and optimizations on top of that to make them run as well as they do now. I would argue in some cases we've actually regressed a little. For transparency, for instance, many games have resorted to dithering because it's cheaper than trying to do some order-independent transparency algorithm. Or maybe it's because the engine uses a deferred shading renderer, which doesn't work well with transparency.

- From what I've seen/read/etc. in articles or videos about ray tracing from the developer side, or at least from people putting together the levels and such, RT is literally a "turn a switch on and boom, everything looks correct." There is a video floating around from 4A Games about Metro Exodus where they described having to place what are essentially non-existent lights to get a scene to look just right, but they didn't have to do the same thing with RT.

- Every time a major hardware feature milestone, software overhaul, or graphical feature comes out (e.g., Hardware T&L, fully programmable shaders, DX10, tessellation, SSAO), using it at the time of release either brought no appreciable improvement or tanked performance. It took years before people took said features for granted.

- The portion of the die that implements the RT as far as I can tell is pretty tiny compared to the rest of the die, so it's not really making GPUs more expensive and even if we repurposed that area for shaders, you're not going to get much out of it.

- People want to bemoan how expensive GPUs are getting, even before taking into account the scalpers and the pandemic shortage issue. Well...

  - It's getting more expensive to build tinier transistors. The cost per transistor has basically flat-lined since 16nm.

  - I remember spending around $600 for a GeForce 7800 GTX back in 2005. That's about $840 today (well, if you want to take out the pandemic-induced inflation, we can call this $800). Outside of the RTX 2080, no other vanilla 80-class NVIDIA GPU has exceeded the cost of a 7800 GTX with inflation accounted for since Kepler.
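For anyone who wants to redo the inflation comparison in the last bullet, here is a minimal sketch of the arithmetic. The function name and the two index values are made up for illustration: they are chosen only so that their ratio matches what the post's own figures imply ($840 / $600 = 1.4), and they are not official CPI numbers.

```python
def adjust_for_inflation(price, index_then, index_now):
    """Scale a historical price by the ratio of two price-index values."""
    return price * (index_now / index_then)

# Price quoted in the post above.
PRICE_2005 = 600.0

# Hypothetical index values, picked so the ratio is the 1.4 implied by
# the post's "$600 then, about $840 today". Not real CPI data.
INDEX_2005 = 100.0
INDEX_TODAY = 140.0

adjusted = adjust_for_inflation(PRICE_2005, INDEX_2005, INDEX_TODAY)
print(f"7800 GTX in today's dollars: ${adjusted:.0f}")
```

Swapping in real index values for the years you care about gives the same kind of comparison for any other card's launch price.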

> It would be nice to get a plugin external RT module. OR maybe have the SSD upgrade slot re-proposed for RT module. Even if it just helps off load some of the non critical stuff from the CPU/GPU. like Audio, and menus, and some of the network stuff.

The problems with this approach are:

- For a fixed configuration system like consoles, having an optional component is a great way to kill the feature the component offers because few devs are going to target that specifically.

- You'll likely have worse performance because of the increased latency needed to pass stuff back and forth between the GPU and the offloading module. If you're doing RT rendering, RT is the first thing that happens and nothing else can be done until the RT stuff is resolved.

Elterrible

Distinguished

No, what it means is that AMD’s ray tracing isn’t good enough on the lower-end GPUs these consoles have installed.

This is a real problem, because you’re now saying that the PS4 Pro might be good enough for several years to come.

Elterrible

Distinguished

Yeah, except ray tracing does make for a competitive disadvantage in multiplayer games where render time is a factor.

Elterrible

Distinguished

> Yes, considering Nvidia's RTX cards actually need DLSS for raytracing to perform well enough and are way faster at raytracing than even the fastest AMD Radeon card.
>
> Not only do the current gen consoles not use discrete GPUs (limiting how much heat they can generate before they need throttling), they don't have DLSS to improve performance, and their graphics are based on the RDNA architecture, as opposed to RDNA 2 used in the RX 6900 XT.
>
> To be honest, I'm surprised they even support raytracing at all.

The discrete GPU isn’t as big a factor when cache is increased and the system RAM is sufficiently fast GDDR6. I think we will see a lot of people using computers without discrete GPUs when the next-generation AMD Ryzen drops using GDDR5 RAM and RDNA 2 GPU cores. People will forgo ray tracing for lower-cost gaming at high FPS.

hotaru.hino

Glorious

> Yeah, except ray tracing does make for a competitive disadvantage in multiplayer games where render time is a factor.

So does every other graphical feature. I'm pretty sure all the eSports pros run their games on the lowest possible settings, save for resolution.

But they're not the target market for this sort of thing anyway.

cryoburner

Judicious

> Stupid article…
>
> Look at Spider-Man and Ratchet and Clank. Those look more realistic and have raytracing.
>
> This is just bad game design.

Except when Spider-Man originally launched, you could only get RT in a 30fps mode, and had to give up the 60fps of the performance mode, something that can be rather noticeable in a game with fast movement. They later added a 60fps "Performance RT" mode, but with the caveats that you will have reduced "scene resolution, reflection quality, and pedestrian density". And really, based on comparisons I've seen, the RT effects typically don't look much better than the standard non-RT effects in that game, and in some ways they can be "worse" at times, with certain objects not casting reflections with RT enabled, and the reflections themselves being a lower resolution than the rest of the scene. Enabling RT means making tradeoffs in other areas of the game's visuals, and while the RT effects might look "good" in many cases, the game arguably looks good even without them. Most scenes in the game look nearly identical with or without RT enabled, with the differences in resolution and framerate potentially being more noticeable than any improvements RT brings to the table.

And that's what this article is saying. They can add RT effects in some limited capacity, sure, but due to the big hit to performance that results, compromises will need to be made in other areas to make that happen. I wouldn't necessarily say the consoles are "not powerful enough for RT", as I'm sure there are areas where RT effects can improve visuals in many titles, but it's certainly not something that's going to be the best option in all cases.

Coming back to Far Cry 6, I'm not so sure it's even a game that would benefit all that much from RT, or at least the limited RT effects that the consoles (and most PCs) can handle. For a game that takes place in a modern or futuristic setting with lots of shiny, reflective surfaces, something like raytraced reflections can potentially add noticeable improvements to visuals, but this game seems to take place in mostly dusty, dirty, war-torn outdoor environments. Even on PC, I suspect the improvements brought by raytracing will be subtle, and probably not worth enabling unless one's graphics card is overpowered relative to the resolution they will be running the game at.

> From what I've seen/read/etc from articles or videos about ray tracing from the developer side, or at least people putting together the levels and such, is that RT is literally a "turn a switch on and boom, everything looks correct." There is a video floating around from 4A Games about Metro Exodus where they were describing having to place what are essentially non-existent lights to get a scene to look just right, but they didn't have to do the same thing with RT

I think that might have been an Nvidia-sponsored video. : P In reality, the implementation of RT effects is not likely to be that smooth. If it were, we would have likely seen rapid implementation of RT in games, and games with RT available at the 20-series launch, rather than having only a handful of limited implementations trickle out over the first year the cards were on the market.

Another thing to consider is that until the developer's entire market is on RT-capable hardware, they will need to implement, test and debug two different lighting systems. So adding RT is going to create more work for development for a number of years to come. And again, in the case of consoles especially, the big performance hit from RT is often going to require the complexity and detail of scenes to be reduced in other areas in order to maintain performance targets.

And while RT can potentially allow for scenes to be lit more realistically, that can create other problems. For example, a certain room might not have adequate lighting, and you have to figure out how to add proper light sources in ways that fit with the intended look of the scene. More realistic lighting is not always going to light the scene in a way that looks better from an artistic standpoint, so it could actually create additional work figuring out how to add believable light sources rather than just adding some "non-existent" lights to brighten those areas. Raytraced lighting effects might have the potential to be "easier" to implement, though I suspect the development process will often just be "different", rather than "easier".

hotaru.hino

Glorious

> I think that might have been an Nvidia-sponsored video. : P

I ended up finding the video; it was from Digital Foundry.

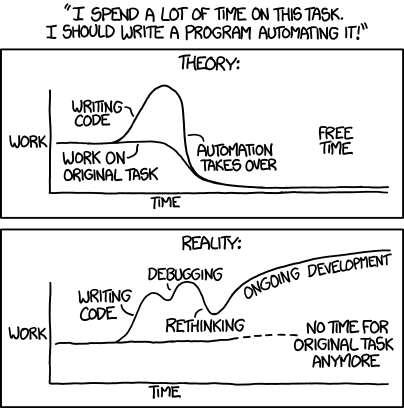

> In reality, the implementation of RT effects is not likely to be that smooth. If it were, we would have likely seen rapid implementation of RT in games, and games with RT available at the 20-series launch, rather than having only a handful of limited implementations trickle out over the first year the cards were on the market.

And what you're describing here is how long it took to get the tools set up to get development going, not how these tools will help developers do their work. While this is intended to be satire, it's still more or less true:

Even in my line of work, I may not be developing tools, but I do keep in mind when something makes my life easier. For example, I would rather spend an hour making a generic iterator over a series of well-defined limits than copy and paste the same code and change only what that code acts upon, even though copying and pasting is supposedly "faster".
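To make the iterator-versus-copy-paste point concrete, here is a small sketch of what such a generic iterator could look like. The post doesn't say what the "limits" actually are, so the parameter names and ranges below are purely hypothetical, and `iterate_limits` is an illustrative name rather than anything from a real codebase.

```python
from itertools import product

def iterate_limits(limits):
    """Yield one dict per combination of values across the named ranges.

    `limits` maps a name to an inclusive (low, high) integer pair.
    """
    names = list(limits)
    ranges = [range(low, high + 1) for low, high in limits.values()]
    for combo in product(*ranges):
        yield dict(zip(names, combo))

# Hypothetical limits; adding another parameter means adding one entry
# here instead of pasting another copy of the loop body.
limits = {"voltage": (1, 3), "channel": (0, 1)}

for setting in iterate_limits(limits):
    print(setting)
```

The up-front cost is the one function; after that, each new set of limits is a data change rather than another pasted block.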

> Another thing to consider is that until the developer's entire market is on RT-capable hardware, they will need to implement, test and debug two different lighting systems. So adding RT is going to create more work for development for a number of years to come. And again, in the case of consoles especially, the big performance hit from RT is often going to require the complexity and detail of scenes to be reduced in other areas in order to maintain performance targets.

Which is only a problem for developers making the engines themselves. If I choose Unreal or Unity, I don't have to implement two different lighting systems. I don't even have to make two different versions of a map/level/whatever. Assuming we're going with a realistic representation and RT can give me just that, the RT version becomes the baseline. I just make touch-ups for the rasterized-only render path if I really want to.

> And while RT can potentially allow for scenes to be lit more realistically, that can create other problems. For example, not having adequate lighting in a certain room, and having to figure out how to add proper light-sources in ways that fit with the intended look of the scene. Not always is the more-realistic lighting going to light the scene in a way that looks better from an artistic standpoint, so it could actually create additional work figuring out how to add believable light sources rather than just adding some "non-existent" lights to brighten those areas. Raytraced lighting effects might have the potential to be "easier" to implement, though I suspect the development process will often just be "different", rather than "easier".

You're describing a generic problem with level design.

The whole point of the lighting techniques developers made up to this point was to get as close to RT quality as possible. RT is the ground truth because it, more or less, simulates how light actually works. If you were to make a "realistic rendering engine" from scratch, using RT would get you there much faster than pure-rasterization. It's to a point where you can generate this image using code that will fit on a business card.
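As a rough illustration of how little code the core of a ray tracer needs, here is a minimal sketch: one ray per pixel, one sphere, and a hit-or-miss test printed as ASCII. This is not the business-card program mentioned above; the scene, resolution, and function names are all made up for the example, and there is no shading, shadows, or reflections.

```python
import math

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit in front of
    the origin, or None on a miss (or when the origin is inside the sphere)."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - c  # discriminant of t^2 + 2bt + c = 0
    if disc < 0:
        return None
    t = -b - math.sqrt(disc)
    return t if t > 0 else None

def render(width=32, height=16):
    """Shoot one ray per character cell at a sphere placed at z = -3."""
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the cell centre onto a view plane at z = -1.
            u = (x + 0.5) / width * 2 - 1
            v = 1 - (y + 0.5) / height * 2
            length = math.sqrt(u * u + v * v + 1)
            direction = (u / length, v / length, -1 / length)
            hit = hit_sphere((0, 0, 0), direction, (0, 0, -3), 1.0)
            row += "#" if hit is not None else "."
        rows.append(row)
    return "\n".join(rows)

print(render())
```

Everything a real renderer adds (materials, light bounces, acceleration structures) builds on this same "follow a ray and see what it hits" step, which is where the performance cost discussed in this thread comes from.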

Tom's Hardware is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.