This thread is for feedback on HammerBot - the Tom's Hardware AI Chatbot. You can find out all about it HERE.

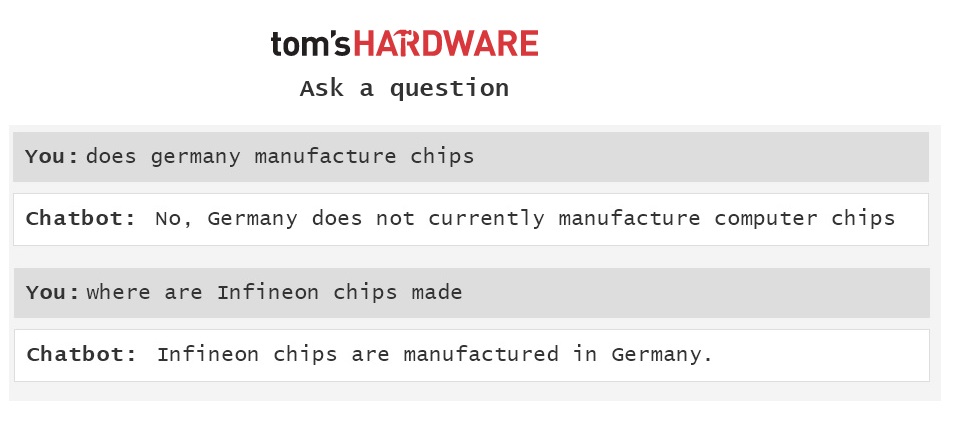

Please keep it concise and on topic. If you encounter any issues, please describe exactly what you did, with screenshots if necessary.

It's important to note that this is a first step, and that's why we're looking for feedback. Please keep this in mind and make sure to check the known issues below. Also, please keep discussion and feedback civil and on point. The main purpose of this thread is to gather feedback on issues and on what improvements can be made.

Known Issues

We know you’ll find ways that the output of HammerBot could be better. Here are some you may encounter:

- Answers sometimes out of date: occasionally recommends last-gen products

- Answers not always the top link: The top link in the search result may not be the one most directly related to the chat answer (ex: the answer mentions Ryzen 5 5600X but the top result isn’t the Ryzen 5 5600X review).

- Recommended offers: This section may not have a product that matches the chat.

- Fairly short answers: Most of the answers are a bit terse right now.

- Chat window may zoom in when you enter input on mobile.

- May express opinions that aren’t necessarily those of Tom’s Hardware.

More and Better Coming Soon

Aside from just working out some of the known (and unknown) issues, we want to make HammerBot a much more powerful tool by adding new capabilities, including access to structured data: benchmarks, product specs and up-to-date pricing. Right now the model gets some of this data from article texts, but it’s not as organized in that format.

We want you to be able to ask our bot to compare two specific CPUs and be shown a chart with both of them in it and a side-by-side table with the specs of each. And we want the bot to be able to help you build a PC shopping list, but we’re not there just yet.

Thank you so much for taking the time to help us enhance this feature.
