An older CPU like the FX-8370 shows lower frame time variance or a higher frame rate than the i7-5960X in some games.

Project Cars At 1080p

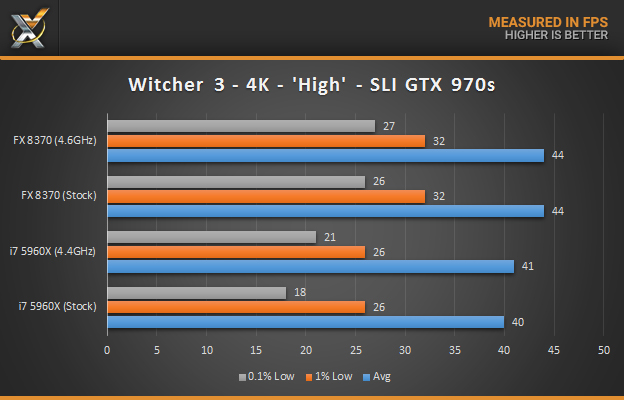

The Witcher 3 4K result is shocking.

http://www.technologyx.com/featured/amd-vs-intel-our-8-core-cpu-gaming-performance-showdown/?hootPostID=282b782e2a8d67e68bbb50aeea079e96

The FX-8350 also performs better than the i7 4930K at 4K.

http://www.tweaktown.com/tweakipedia/56/amd-fx-8350-powering-gtx-780-sli-vs-gtx-980-sli-at-4k/index.html

With Win10 and modern games, the 8-core FX chips have a new lease on life. I don't know why everyone suggests picking an i3/i5 over an 8-core FX.

Why are the 8-core FX chips so underestimated by the community?

Project Cars At 1080p

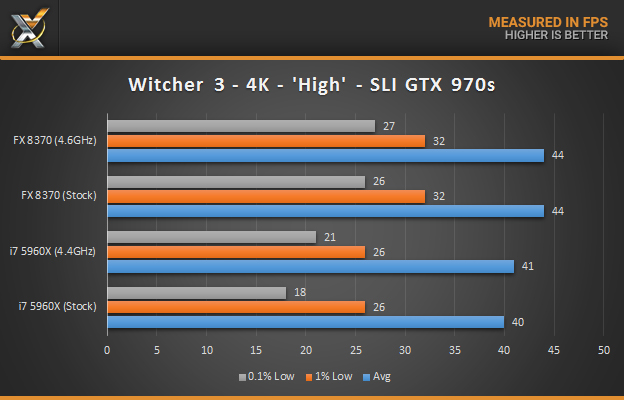
