Still watching it. They really pulled out all the stops and spent some money on this one. Kudos Steve!

View: https://www.youtube.com/watch?v=ig2px7ofKhQ

Discussion Gamers Nexus 12VHPWR melting analysis is in!

- Thread starter alceryes

Dirt (carbon) in the cable from manufacturing.

User error. Plugging that thing in correctly must be a real PITA. If it didn't click, best to assume it's not in all the way.

User error - dirt. Not plugging it in correctly can cause some shavings to fall further in and start heating up.

No cable is truly safe, not even the aftermarket ones.

There is a point at which a connection is so poorly designed that it lends itself to being used improperly on a regular basis, and the design itself is at fault.

We may be at that point.

Same. I can do naught but laugh and shake my head. Crap like this is just further proof not to early adopt, yet thank the early adopters at the same time...

Changing gears, I was actually considering buying into AM5 at launch. Glad I didn't. Those early BIOSs were horrible.

I'm hoping to save at least $250 on a motherboard/CPU package by waiting till BF/CM. The hardest part will be to tell myself "no" if the savings aren't where I want them to be.

I feel I'm overdue for an upgrade, but the system still does what I want it to do...

This is just a hobby. I'm not chasing the latest and greatest.

Viking2121

Splendid

Same, other than being overdue for an upgrade. In fact, I plan to skip the next couple of gens unless I find a deal; I'm a sucker for that.

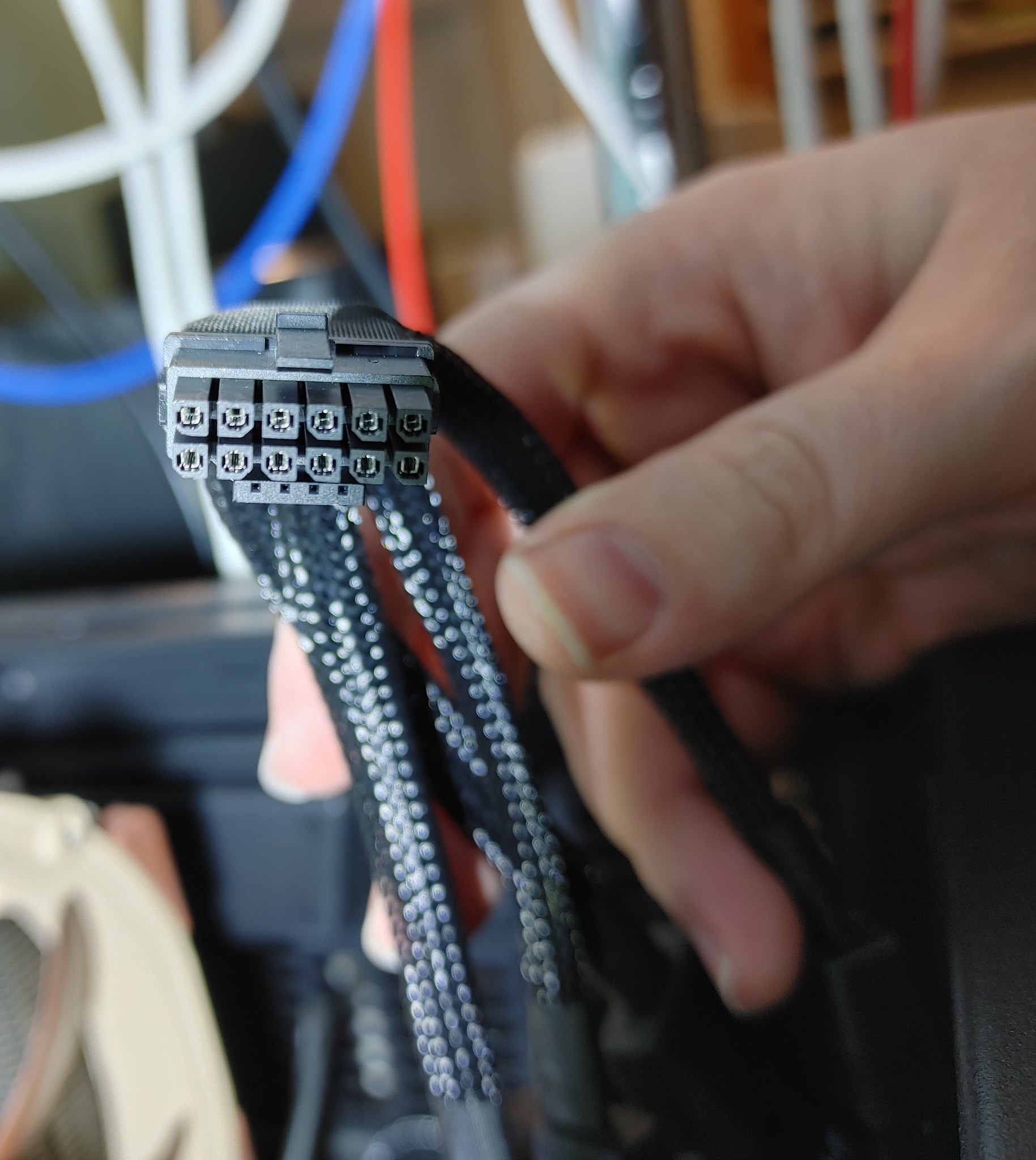

As for the whole plug melting: even if it comes from users not plugging the connector in all the way, it's still a problem Nvidia needs to address. In GN's video, you can clearly see how much wiggling and pressure it needs to be fully inserted, and it still may not be fully inserted. He also states you can't always feel or hear it clip. I guess the best thing to do for the moment is to yank on the cable and wiggle it while pulling to make sure it won't come out of its socket.

The plug itself, in my opinion, isn't great because of its size, which makes it harder to make sure everything is the way it's supposed to be for the amount of power draw. Something like a modified 8-pin PCIe cable isn't hard to plug in; most make a clip sound or feel, and it's visually easier to see if it's clipped in.

RodroX

Honorable

So basically Jonny Guru was correct that the connector not being fully inserted can cause the issue.

Yet that might not be the end of the story, because of the debris that can come from manufacturing and/or use. All of this could have been avoided with a better design. And for a piece of tech that costs $1600 (or more), they (Nvidia) should have paid more attention to detail.

If the connector is really hard to get fully inserted, and it's just as hard to know whether it clipped or not (mainly because most users are working inside a case, in an awkward position), then blaming the user alone is not the right call.

Time will tell if this is all there is, and we shall wait until Nvidia says something about it (someday before 2023, perhaps?)

The problem is two-fold. It's a $1600 GPU, and people are somewhat sensitive about having to forcefully jam in the connector and possibly break the solder on the PCB. But at the same time, it's a $1600 GPU: the connector should have been engineered in such a way that all that force isn't required in the first place.

When dealing with that kind of power through a single small connection, the little locking clip on one long side is pathetically inadequate. There should be two clips on the short sides, so that the connector cannot pivot when subjected to sideways force, and having a clip on each side gives clear evidence of whether the connector is fully seated or not.

I will be swapping my 3070 Ti for a 4080 soon, within 48 hours unless delivery is delayed. If I run into any problems I will post here in this very thread.

I don't know if 4080s will have fewer problems overall. But I feel like the same user errors or defects would still melt the cable/GPU even with 300 watts going through it. I expect minor mitigation, but it will still likely burn the cable, because I believe in the video it reached 300 degrees. So instead it may just reach 150-250 degrees. lol

But even between 4090s and 4080s we are still dealing with a small sample size. We need hundreds of thousands of people to use these parts to get a full picture. It seems like if you avoid user error, you cut the risk down to razor-thin margins. However, I want the margin to be virtually zero, which maybe it is without user error. Who knows. Wish me luck. I will wish you all luck.

But if anything happens I will post the full story. I will tell you how firmly I connected it, that I did not over-bend it, and all sorts of other information: exact parts, and I may provide pictures. So we can at least learn from my suffering.
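For a rough feel of how much "minor mitigation" lower wattage might buy, here is a minimal sketch. It assumes the rail voltage is fixed and that the resistance of a bad contact stays the same at every load, so heat in the contact scales with the square of the current (P = I²R). Both are simplifications, since real contact resistance changes as things heat up, and the 600 W reference figure and the function name are just illustrative choices.

```python
def relative_contact_heating(power_watts: float, reference_watts: float) -> float:
    """Heat dissipated in the same contact resistance, relative to a reference load.

    Assumes a fixed rail voltage, so current is proportional to power,
    and a fixed contact resistance, so heat goes with current squared.
    """
    if power_watts < 0 or reference_watts <= 0:
        raise ValueError("power must be non-negative and reference must be positive")
    return (power_watts / reference_watts) ** 2


# Illustrative loads only; 600 W is an assumed reference, not a measured figure.
for watts in (600, 450, 300):
    ratio = relative_contact_heating(watts, 600)
    print(f"{watts} W: {ratio:.2f}x the contact heating of 600 W")
```

Under those assumptions, halving the power cuts the heat in the bad contact to a quarter, which is a bigger drop than the wattage alone suggests, though it says nothing about whether that is enough to stay below melting.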

Elusive Ruse

Commendable

Big ups to GN though, even if Nvidia themselves went to the trouble of performing similar lab studies they'd never publish the results to the public.

So, the one issue I have with Steve's conclusion is that he proposes that the connector not being inserted all the way is a prerequisite for the melting issue. However, as far as I can tell, PCI-SIG's testing conclusion (and subsequent warning) was just testing the connector, not the 'human factor'.

IOW, PCI-SIG had the connector fully inserted and it still melted under certain conditions.

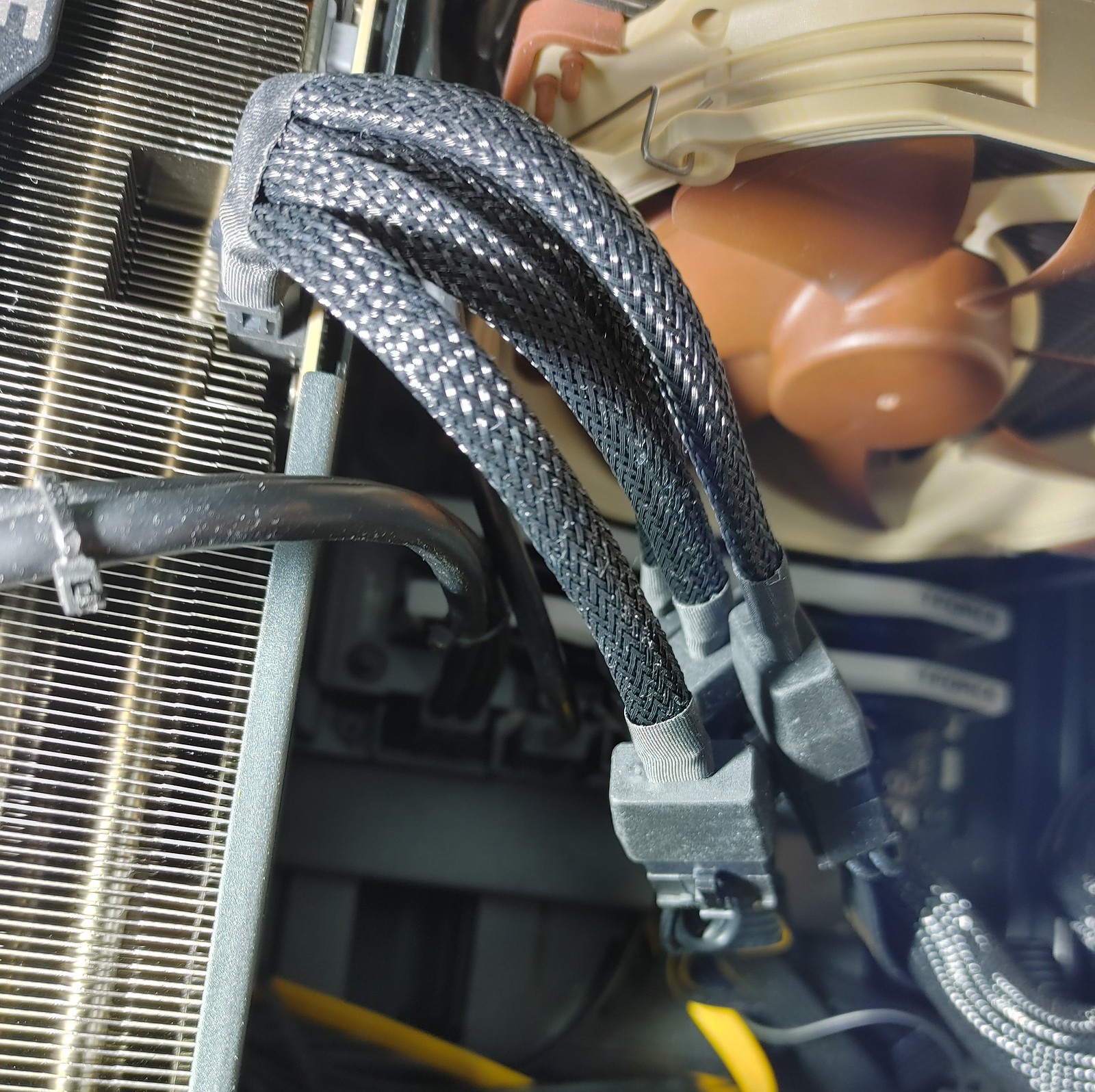

Have my Gigabyte 4090 OC since October, the cable was bent at the plug. Checked it today, no issues, no signs of burns. It was fully inserted though.

Be careful. Don't keep on taking it out to check. You may actually be causing the 'foreign debris' buildup that's been cited as a catalyst for the overheating.

While more testing might need to be done to find all the possible ways the connector can melt, this at least shows that in most cases more than one thing needs to go wrong for the melting to be reproducible.

1. People are blaming the connector and its lack of safety margin for current.

Steve cut 4 out of 6 12V pins and pushed 600W through the remaining TWO. Properly seated, the connector did not heat up much past normal temperatures and stayed FAR below melting.

2. Possible foreign objects. Steve found traces of them and said that they could cause issues in the long run; current research results point towards FOD increasing with connection cycles, which raises the risk.

3. An improperly attached adapter ALONE does not melt easily.

4. A bent adapter ALONE does not melt easily.

5. Photos online show that melted cables were most likely not fully inserted. (This does not mean it is the only way for them to melt, just the current main suspect.)

6. You practically need to have improper insertion AND a bent cable for melting to happen. Other ways have not been reproducible so far.

7. Improper insertion is seemingly easy to do if the connector doesn't click into place AND you do cable management. The few seconds it takes them (GN) to disconnect the cable by wiggling the other end of the adapter are a good indication of this.

So while the GN video isn't a definitive "here are the only ways to melt the cable", it does a pretty good job of debunking quite a few of the ideas that have surfaced so far and, unlike earlier ones, can reproduce melting based on their theory.

If wiggling the connector loose while doing cable management is too easy, that points towards design flaws in the connector. This is likely under secret testing somewhere.

FOD and oxidation are possible reasons for melting down the line, once enough time has passed; they are unlikely culprits now.

So... yeah, Nvidia can tell their version, but so far Steve's reasoning sounds correct, at least in the sense that it can be reproduced.
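To put point 1 in numbers, here is a quick back-of-the-envelope sketch of the current each 12V pin carries. It assumes a nominal 12 V rail and a perfectly even split across the live pins, which a real cable won't achieve exactly; the function name is made up for illustration.

```python
RAIL_VOLTAGE = 12.0  # volts, nominal; the real rail will sag a little under load


def current_per_pin(power_watts: float, live_pins: int,
                    voltage: float = RAIL_VOLTAGE) -> float:
    """Amps through each 12 V pin, assuming the load splits evenly."""
    if live_pins <= 0:
        raise ValueError("need at least one live pin")
    return power_watts / voltage / live_pins


# All six pins intact versus the two-pin test described above.
for pins in (6, 2):
    amps = current_per_pin(600, pins)
    print(f"{pins} pins at 600 W: {amps:.1f} A per pin")
```

With these assumptions, the two surviving pins each carry three times the current they would with all six intact, which is why that test is a fairly strong argument against the "no safety margin" theory for a properly seated connector.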

Elusive Ruse

Commendable

The way I see it, the safest way to make sure the connector is securely in place, considering there's no click, is to insert it before mounting the card on the mainboard. The only reason I can fathom for users not inserting the connector all the way is fear of damaging the card or the motherboard. Maybe Nvidia should put a "Safe connection" LED indicator right on top of the card? EVGA's prototype had a few LEDs IIRC.

A simple LED would not know the difference between a "safe" connection and an "unsafe" one.

It would need separate sensors to check that yes, the connector is pushed in far enough and the locking clips have locked.

That, or a fancy measuring system to measure the resistance across the connector, which would need separate wires from the PSU to know the PSU's 12V rail voltage and compare it correctly.

You could in theory get that from the motherboard's sensors too, but if the PSU is multi-rail, the rails could run at slightly different voltages.

How to design it would need some thinking and foolproofing for sure. Possible? Definitely. Easy? Possibly.

You could make the sense pins short enough that an unlocked connector would not make contact on them, causing the card to assume no power is coming from it.

But tolerances would make that iffy, since a practically seated connector that has just not clicked can be wiggled loose.

That in itself is not a real issue; it just means the GPU loses power and shuts down. The user will wonder what is wrong, see the "not seated" LED, and re-plug before the connector melts.

Edit:

But that assumes the only way to melt the connector is to have it improperly seated (through not pushing it in, or it getting loose due to wiggling during cable management).

This is most likely NOT the only way to melt the connector, just the only one proven to work (to melt it) so far.
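The resistance-measuring idea above boils down to Ohm's law, and could look something like this rough sketch. It assumes the card can somehow read the PSU-side rail voltage, its own input voltage, and its current draw. The function name and the 5 milliohm warning threshold are made up for illustration and don't come from any spec, and the measured drop would include the cable's own resistance, not just the connector's.

```python
def connector_check(v_psu: float, v_gpu: float, current_amps: float,
                    max_resistance_ohms: float = 0.005):
    """Estimate series resistance and heat between the PSU and the card.

    Returns (resistance in ohms, heat in watts, ok flag).
    The threshold is a hypothetical value chosen for this example.
    """
    if current_amps <= 0:
        raise ValueError("need a positive load current to estimate resistance")
    drop = v_psu - v_gpu              # volts lost along the cable and contacts
    resistance = drop / current_amps  # Ohm's law: R = V / I
    heat = drop * current_amps        # watts dissipated along the path: P = V * I
    return resistance, heat, resistance <= max_resistance_ohms


# Made-up readings: 12.10 V at the PSU, 11.95 V at the card, 40 A load.
resistance, heat, ok = connector_check(12.10, 11.95, 40.0)
print(f"{resistance * 1000:.2f} milliohm, {heat:.1f} W lost, ok={ok}")
```

The multi-rail caveat still applies: if the PSU-side reading comes from a different rail than the one feeding the card, the computed drop is meaningless.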
