Yup. That's the XTXH core on that ASRock RX 6900 XT Formula. Although AMD gave the core a physically different name they, in their infinite wisdom, decided that cards with the XTXH core will still be the RX 6900 XT, causing endless confusion. These 'special' cores are heavily binned and provide extra voltage and power ceilings at stock for both the core and memory.

On my lowly reference/Zotac 6900 XT I'm hitting a 2600MHz peak core and 2100MHz VRAM clock, all while undervolting and keeping it cool and quiet. No XTXH core needed here.

Zotac boosted the power limit and GPU clocks with the XTXH core, but it's still running tame 16Gbps GDDR6 memory and not the 18Gbps stuff used in the 6900 XT liquid cooled edition.

GPU Performance Hierarchy 2024: Video Cards Ranked


alceryes

Splendid

Yup. Same memory chips but I thought it was higher voltage, power, and frequency ceiling(?)

Mine is the actual reference (XTX) AMD card, bought direct from AMD (didn't mean to confuse, sorry). I believe these are made by PC Partner Group, which is the same company who makes Zotac cards, but it's not an actual Zotac-branded GPU.


Is it by any chance possible to put the old tiered legacy table back in? Or have it somewhere, at least?

It seemed reasonably useful for having the cards from Pascal and Polaris era, and earlier, to compare to each other. I'm a little unsure GFLOPs in the New Legacy Table (yeah, that phrase sounds weird in my head) is accurate enough, or if it distorts the picture.


Honestly, I hated that table, because I didn't think it conveyed anything useful. It was literally just a list of GPUs with no real sorting that I could discern. I'm very sad you don't appreciate my new GFLOPS list, because that took literally an entire day to put together!

Unfortunately, I assumed people who were interested in the "legacy" table could just pull it up in the Wayback Machine... but now I have discovered that the URL is specifically excluded from the archive. <Sigh> And our CMS has no history of previous versions of a document, so... I apparently killed it. <Double sigh> But all is not lost, as someone (well, multiple someones) cloned the Hierarchy over the past couple of years. So here's the last version... I'm not sure how this will look when I paste it here, but hopefully this will suffice.

Also useful:

https://en.wikipedia.org/wiki/List_of_AMD_graphics_processing_units

https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units

https://en.wikipedia.org/wiki/List_of_Intel_graphics_processing_units

Legacy GPU Hierarchy

Below is our legacy desktop GPU hierarchy with historical comparisons dating back to the 1990s. We have not tested most of these cards in many years, driver support has ended on lots of models, and relative rankings are relatively coarse. We group cards into performance tiers, pairing disparate generations where overlap occurs.

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| Nvidia Titan Xp | |
| Titan X (Pascal) | |
| GTX 1080 Ti | |
| GTX 1080 | RX Vega 64 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX Titan X (Maxwell) | R9 295X2 |
| GTX 1070 Ti | |
| GTX 1070 | RX Vega 56 |
| GTX 980 Ti | R9 Fury X |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX Titan Black | |
| GTX 980 | R9 Fury |
| GTX 690 | R9 Fury Nano |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX 1060 6GB | RX 580 8GB |
| | RX 480 8GB |
| GTX Titan | RX 570 4G |
| GTX 1060 3GB | RX 470 4GB |
| | R9 390X |
| GTX 970 | R9 390 |
| GTX 780 Ti | R9 290X |
| GTX 780 | R9 290 |
| | HD 7990 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | R9 380X |
| GTX 770 | R9 380 |
| GTX 680 | R9 280X |
| GTX 590 | HD 7970 GHz Edition |
| | HD 6990 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX 1050 Ti | R9 285 |
| GTX 960 | R9 280 |
| GTX 670 | HD 7950 |
| GTX 580 | HD 7870 LE (XT) |
| | HD 5970 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX 1050 | RX 560 4G |
| GTX 950 | RX 460 |
| GTX 760 | R7 370 |
| GTX 660 Ti | R9 270X |
| | R9 270 |
| | HD 7870 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX 660 | R7 265 |
| GTX 570 | HD 7850 |
| GTX 480 | HD 6970 |
| GTX 295 | HD 4870 X2 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX 750 Ti | |
| GTX 650 Ti Boost | R7 260X |
| GTX 560 Ti (448 Core) | HD 6950 |
| GTX 560 Ti | HD 5870 |
| GTX 470 | HD 4850 X2 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTX 750 | HD 7790 |
| GTX 650 Ti | HD 6870 |
| GTX 560 | HD 5850 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 1030 | RX 550 |
| GTX 465 | R7 360 |
| GTX 460 (256-bit) | R7 260 |
| GTX 285 | HD 7770 |
| 9800 GX2 | HD 6850 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 740 GDDR5 | R7 250E |
| GT 650 | R7 250 (GDDR5) |
| GTX 560 SE | HD 7750 (GDDR5) |
| GTX 550 Ti | HD 6790 |
| GTX 460 SE | HD 6770 |
| GTX 460 (192-bit) | HD 5830 |
| GTX 280 | HD 5770 |
| GTX 275 | HD 4890 |
| GTX 260 | HD 4870 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GTS 450 | R7 250 (DDR3) |
| GTS 250 | HD 7750 (DDR3) |
| 9800 GTX+ | HD 6750 |
| 9800 GTX | HD 5750 |
| 8800 Ultra | HD 4850 |
| | HD 3870 X2 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 730 (64-bit, GDDR5) | |
| GT 545 (GDDR5) | HD 4770 |
| 8800 GTS (512MB) | |
| 8800 GTX | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 740 DDR3 | HD 7730 (GDDR5) |
| GT 640 (DDR3) | HD 6670 (GDDR5) |
| GT 545 (DDR3) | HD 5670 |
| 9800 GT | HD 4830 |
| 8800 GT (512MB) | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 240 (GDDR5) | HD 6570 (GDDR5) |
| 9600 GT | HD 5570 (GDDR5) |
| 8800 GTS (640MB) | HD 3870 |
| | HD 2900 XT |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | R7 240 |
| GT 240 (DDR3) | HD 7730 (DDR3) |
| 9600 GSO | HD 6670 (DDR3) |
| 8800 GS | HD 6570 (DDR3) |
| | HD 5570 (DDR3) |
| | HD 4670 |
| | HD 3850 (512MB) |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 730 (128-bit, GDDR5) | |
| GT 630 (GDDR5) | HD 5550 (GDDR5) |
| GT 440 (GDDR5) | HD 3850 (256MB) |
| 8800 GTS (320MB) | HD 2900 Pro |
| 8800 GT (256MB) | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 730 (128-bit, DDR3) | HD 7660D (integrated) |
| GT 630 (DDR3) | HD 5550 (DDR3) |
| GT 440 (DDR3) | HD 4650 (DDR3) |
| 7950 GX2 | X1950 XTX |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 530 | |
| GT 430 | |
| 7900 GTX | X1900 XTX |
| 7900 GTO | X1950 XT |
| 7800 GTX 512 | X1900 XT |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | HD 7560D (integrated) |
| GT 220 (DDR3) | HD 5550 (DDR2) |
| 7950 G | HD 2900 GT |
| 7900 GT | X1950 Pro |
| 7800 GTX | X1900 GT |
| | X1900 AIW |
| | X1800 XT |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | HD 7540D (integrated) |
| GT 220 (DDR2) | HD 6550D (integrated) |
| 9500 GT (GDDR3) | HD 6620G (integrated) |
| 8600 GTS | R5 230 |
| 7900 GS | HD 6450 |
| 7800 GT | HD 4650 (DDR2) |
| | X1950 GT |
| | X1800 XL |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | 7480D (integrated) |
| | 6530D (integrated) |
| 9500 GT (DDR2) | 6520G (integrated) |
| 8600 GT (GDDR3) | HD 3670 |
| 8600 GS | HD 3650 (DDR3) |
| 7800 GS | HD 2600 XT |
| 7600 GT | X1800 GTO |
| 6800 Ultra | X1650 XT |
| | X850 XT PE |
| | X800 XT PE |
| | X850 XT |
| | X800 XT |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| GT 520 | 6480G (integrated) |
| 8600 GT (DDR2) | 6410D (integrated) |
| 6800 GS (PCIe) | HD 3650 (DDR2) |
| 6800 GT | HD 2600 Pro |
| | X800 GTO2/GTO16 |
| | X800 XL |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | 6380G (integrated) |
| | 6370D (integrated) |
| 6800 GS (AGP) | X1650 GT |
| | X850 Pro |
| | X800 Pro |
| | X800 GTO (256MB) |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| 8600M GS | X1650 Pro |
| 7600 GS | X1600 XT |
| 7300 GT (GDDR3) | X800 GTO (128MB) |
| 6800 | X800 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | HD 6320 (integrated) |
| | HD 6310 (integrated) |
| | HD 5450 |
| 9400 GT | HD 4550 |
| 8500 GT | HD 4350 |
| 7300 GT (DDR2) | HD 2400 XT |
| 6800 XT | X1600 Pro |
| 6800LE | X1300 XT |
| 6600 GT | X800 SE |
| | X800 GT |
| | X700 Pro |
| | 9800 XT |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | HD 6290 (integrated) |
| | HD 6250 (integrated) |
| 9400 (integrated) | HD 4290 (integrated) |
| 9300 (integrated) | HD 4250 (integrated) |
| 6600 (128-bit) | HD 4200 (integrated) |
| FX 5950 Ultra | HD 3300 (integrated) |
| FX 5900 Ultra | HD 3200 (integrated) |
| FX 5900 | HD 2400 Pro |
| | X1550 |
| | X1300 Pro |
| | X700 |
| | 9800 Pro |
| | 9800 |
| | 9700 Pro |
| | 9700 |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| | X1050 (128-bit) |
| FX 5900 XT | X600 XT |
| FX 5800 Ultra | 9800 Pro (128-bit) |
| | 9600 XT |
| | 9500 Pro |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| G 310 | |
| G 210 | |
| 8400 G | Xpress 1250 (integrated) |
| 8300 | HD 2300 |
| 6200 | X600 Pro |
| FX 5700 Ultra | 9800 LE |
| 4 Ti 4800 | 9600 Pro |
| 4 Ti 4600 | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| 9300M GS | |
| 9300M G | |
| 8400M GS | X1050 (64-bit) |
| 7300 GS | X300 |
| FX 5700, 6600 (64-bit) | 9600 |
| FX 5600 Ultra | 9550 |
| 4 Ti4800 SE | 9500 |
| 4 Ti4400 | |
| 4 Ti4200 | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| 8300 (integrated) | |
| 8200 (integrated) | |
| 7300 LE | X1150 |
| 7200 GS | X300 SE |
| 6600 LE | 9600 LE |
| 6200 TC | 9100 |
| FX 5700 LE | 8500 |
| FX 5600 | |
| FX 5200 Ultra | |
| 3 Ti500 | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| FX 5500 | 9250 |
| FX 5200 (128-bit) | 9200 |
| 3 Ti200 | 9000 |
| 3 | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| FX 7050 (integrated) | Xpress 1150 (integrated) |
| FX 7025 (integrated) | Xpress 1000 (integrated) |
| FX 6150 (integrated) | Xpress 200M (integrated) |
| FX 6100 (integrated) | 9200 SE |
| FX 5200 (64-bit) | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| 2 Ti 200 | |
| 2 Ti | 7500 |
| 2 Ultra | |
| 4 MX 440 | |
| 2 GTS | |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| 2 MX 400 | 7200 |
| 4 MX 420 | 7000 |
| 2 MX 200 | DDR |
| 256 | LE |
| | SDR |

| Nvidia GeForce | AMD Radeon |
| --- | --- |
| Nvidia TNT | Rage 128 |

Not to say that the new one's not useful... but the coarser clusters in the old one kept similar performance together.

There was a sorting of a kind, but looking it over and recalling it is out of the question at the moment as I'm on mobile.

That said, thanks for bringing it up, even if just as a historic reference. Or maybe I just got comfortable with it.

It really is surprising, though, that the CMS doesn't keep previous versions of documents. That seems bad. Like, really bad.


Can't you see my table above? That's a copy/paste of the old Legacy collection of GPUs. Like I said, though, I don't find it particularly useful. Looking at Wiki pages for release dates and specs is about as meaningful, plus it's missing quite a few GPUs and it's not at all clear what the sort order is supposed to be. Near the top of the list, it's "higher is better," but later on things get very nebulous and there's not even a list of specs or release dates to help out. Plus, there's just a whole bunch of groupings with no indication of the difference between them. Anyway, if you like the above table instead of the new list, it's here in the comments.

alceryes

Splendid

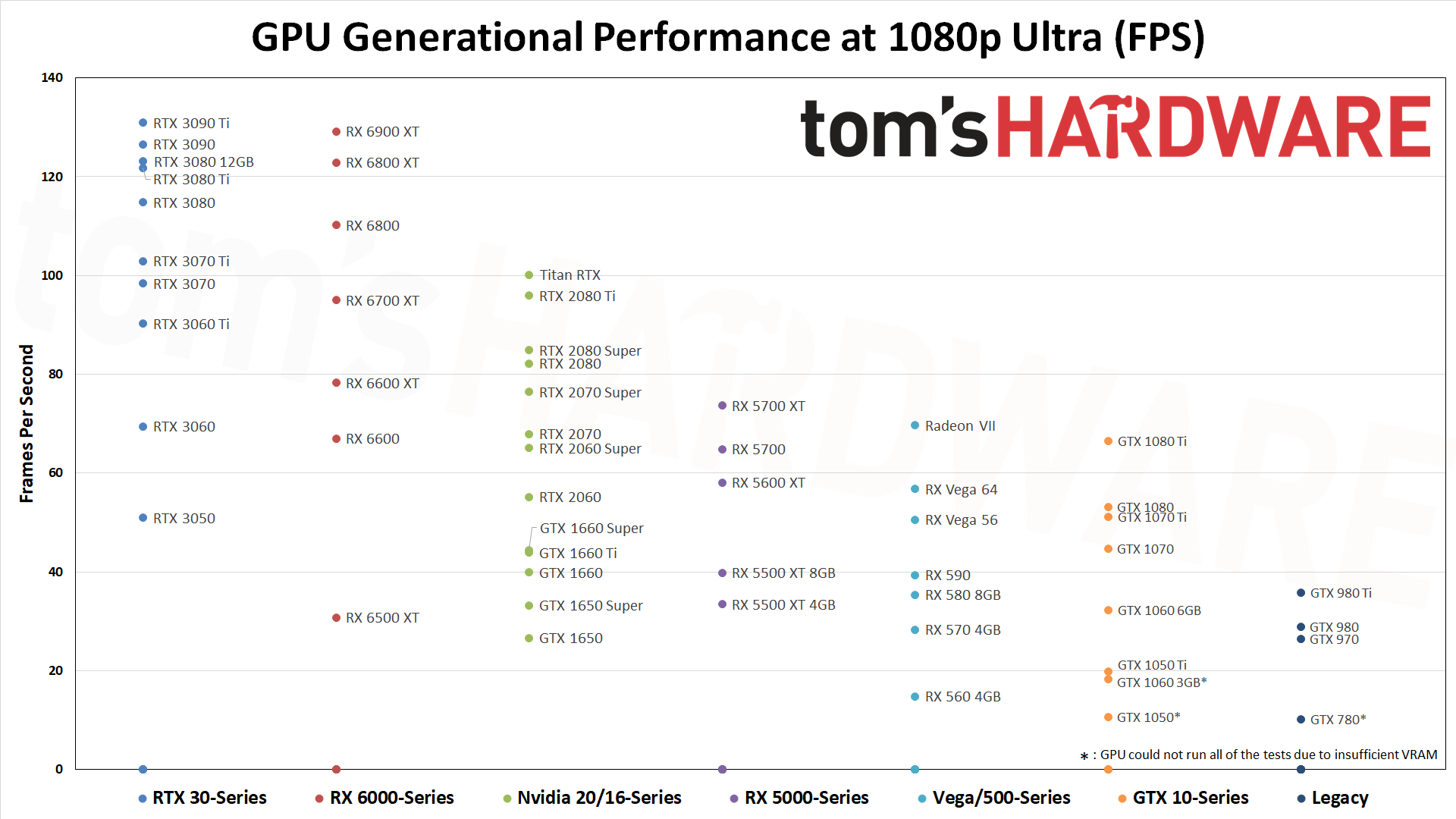

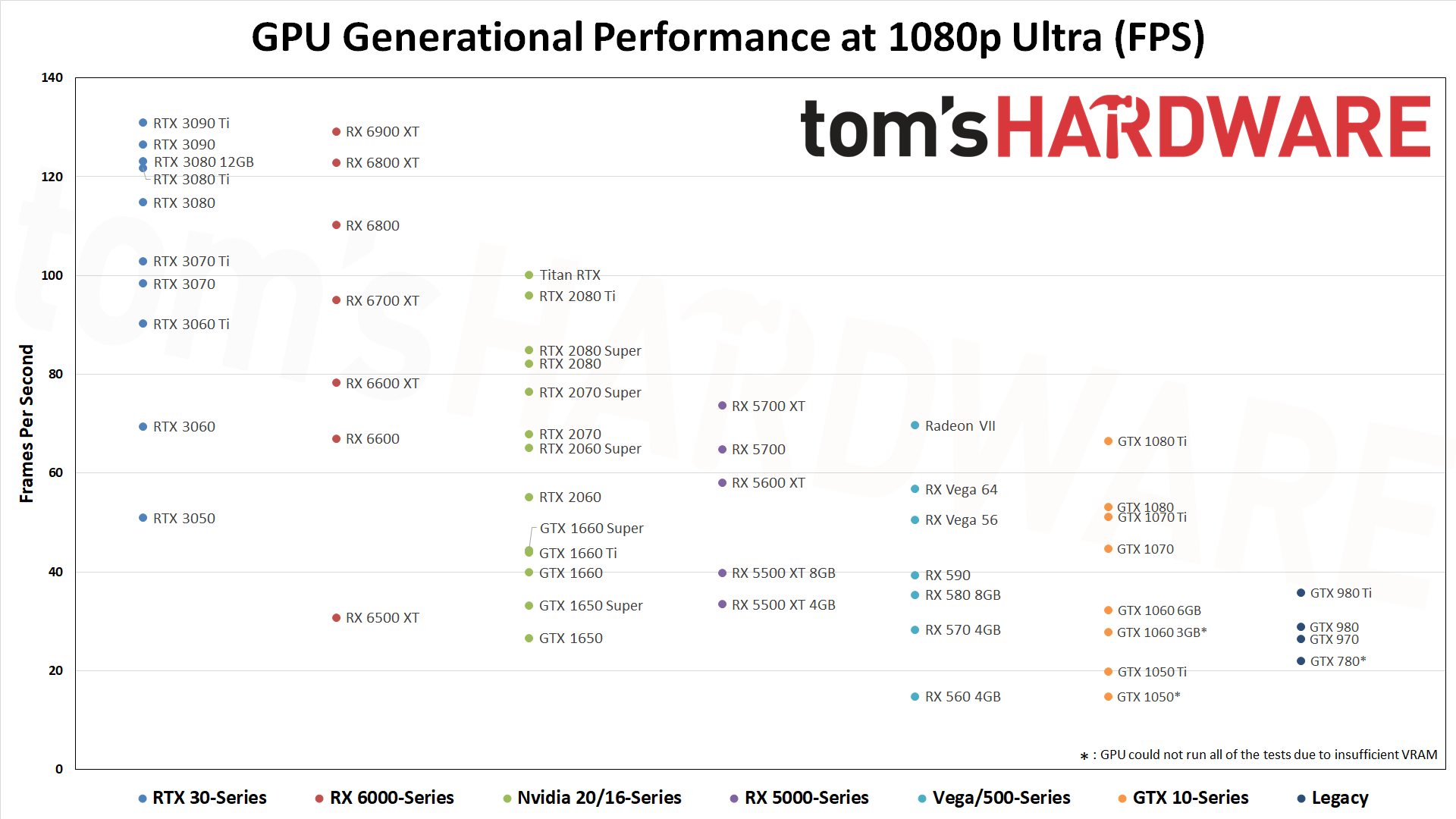

The new chart is great.

In some instances, the GFLOPS sorting isn't the best way to show expected 'gaming performance'. For instance, I've had both a Vega 64 and a Titan Xp. In all the games I played, AAA games used by TH during reviews, the Titan Xp beat the Vega 64 at 1440p. Every. Single. One. The rest of the hardware was identical. You do a good job explaining the GFLOPS limitation though so no biggie.

For 'gaming GPU ranking' it's best to do exactly what you're doing in the newest list. One request though. Could you list all the games that are used to come up with this ranking?
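To illustrate why a GFLOPS sort can put the Vega 64 ahead of the Titan Xp, here is a minimal sketch of the usual theoretical FP32 calculation (two operations per shader per clock, counting a fused multiply-add as two). The shader counts and reference boost clocks below are assumptions pulled from public spec sheets, not figures from the article, and the function name is made up for this example.

```python
def fp32_gflops(shader_count: int, boost_clock_mhz: float) -> float:
    """Theoretical FP32 throughput: 2 ops (one FMA) per shader per clock."""
    return 2 * shader_count * boost_clock_mhz / 1000.0


# Assumed reference specs: (shader count, boost clock in MHz).
cards = {
    "RX Vega 64": (4096, 1546),
    "Titan Xp": (3840, 1582),
}

# Sort by theoretical GFLOPS, highest first.
ranked = sorted(cards, key=lambda name: fp32_gflops(*cards[name]), reverse=True)

for name in ranked:
    print(f"{name}: {fp32_gflops(*cards[name]):.0f} GFLOPS")
```

On paper the Vega 64 comes out slightly ahead, which says nothing about how well each architecture turns those theoretical operations into frames in a real game.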


I think I just forgot to add the list of games. Here it is, and I'll add it to the article tomorrow sometime:

Standard Benchmarks:

Borderlands 3

Far Cry 6

Flight Simulator

Forza Horizon 5

Horizon Zero Dawn

Red Dead Redemption 2

Total War Warhammer 3

Watch Dogs Legion

DXR Benchmarks:

Bright Memory Infinite

Control

Cyberpunk 2077

Fortnite

Metro Exodus Enhanced

Minecraft

As someone that is now getting serious about getting a new graphics card, it is just like a techtuber video.

All of the information is accurate, but none of it is actually useful for making buying decisions. I am seeing a best case scenario, which isn't useful. What is actually useful is real world scenarios.

1080 Med, 1080 Ultra, 1440p Ultra, 4K Ultra isn't actually useful.

I recommend adding 1440p High; and 4K High. You know, resolutions people actually use.


alceryes

Splendid

At some point it's just going to be too much data to replicate every single time a new GPU comes out. I think the 1440p ultra and 4K ultra are close enough for you to gauge relative performance.

@JarredWaltonGPU, could you give a 1-2 liner detailing your testing methodology? Something like, "run built-in benchmark 10 times, drop the lowest and highest scores and averaged the rest."

Game version numbers would also be useful as games are rarely optimized right out of the gate and regularly receive one or two big performance boost updates within the first 6 months of release.

Oh, also, is the 1080 Ti going to make an appearance on the new chart? I think it would be good to show the performance of some of the newest 'money-grubber' GPU releases compared to a golden oldie that is still very relevant today. Maybe cut it off at the top performing card from 3 generations ago...? (not counting exotics like the Titan series or dual-GPU on single PCB)


I run one pass of each benchmark to "warm up" the GPU after launching the game, then I run at least two passes at each setting/resolution combination. Some games don't require a restart between settings changes, many do. If the two runs are basically identical (within 0.5% or less difference), I use the faster of the two runs. If there's more than a small difference, I run the test twice more and basically try to figure out what "normal" performance is supposed to be.

While the above might seem like it would be potentially prone to mistakes, I've been doing this for years and the first two runs (plus warm up) are usually a good indication of performance. I also look at all the data, so when I test for example RTX 3070 and RTX 3060 Ti and RTX 3070 Ti, I know they're all generally going to perform within a narrow range: the 3070 Ti is about 5% (give or take) faster than the 3070, which is about 5% (again, give or take) faster than the 3060 Ti. If, after I put all the data together, I see games where there are clear outliers (i.e. performance is more than 10% higher for the cards I just mentioned), I'll go back and retest whatever cards are showing the anomaly and confirm that it is either correct due to some other factor, or that my earlier test results are no longer valid.

Given each card requires about eight hours to run all the tests (at least for a card where I run all four resolutions/settings), there's obviously going to be lag between the latest drivers, game patches, and when a card gets tested. I've started the new hierarchy tests with the current generation cards, and I'm now going through previous generation cards. Some cards were tested with 511.65 and 22.2.1, others were tested with 511.79 and 22.2.2 — I updated drivers mostly because I added Total War: Warhammer 3 to the suite. When I've finished all the testing of previous generation GPUs in the next month or so, I'll go back and retest probably RTX 3080 and RX 6800 XT on the latest drivers and game patches and check for any major differences in performance. If there's clearly a change in performance, for the better, I'll start running through the potentially impacted GPUs again. This is usually limited to one or two games that improve, though, so I don't have to retest everything every couple of months.
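The warm-up-plus-two-passes routine described above could be sketched roughly like this. It is only an illustration: `run_pass` is a hypothetical callable that runs one benchmark pass and returns average fps, and falling back to the median of four runs is an assumption standing in for the manual "figure out what normal is" step, not the actual procedure.

```python
import statistics


def relative_spread(a: float, b: float) -> float:
    """Difference between two results as a fraction of the larger one."""
    return abs(a - b) / max(a, b)


def pick_result(run_pass, tolerance: float = 0.005) -> float:
    """Choose a score for one game at one setting/resolution combination."""
    run_pass()  # warm-up pass, result discarded
    first, second = run_pass(), run_pass()
    if relative_spread(first, second) <= tolerance:
        # Runs agree to within 0.5%: keep the faster of the two.
        return max(first, second)
    # Runs disagree: test twice more. Taking the median here is an
    # assumption, a stand-in for judging "normal" performance by eye.
    extra = [run_pass(), run_pass()]
    return statistics.median([first, second, *extra])
```

Something like `pick_result(lambda: run_benchmark("Far Cry 6", "1440p ultra"))` would be the intended usage, where `run_benchmark` is again a made-up helper.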

alceryes

Splendid

How do you handle ambient temp and heat saturation of mainboard components between different GPU tests?

I'd imagine that the first card to get tested, after the system's been off, will have a slight advantage over the second, third, etc. card in line on the same motherboard.

This could actually be a separate article. A thorough test of the effects of motherboard heat saturation on benchmarking results. (don't know that this has been done before)

The system needs to be shut down to swap GPUs, and that's generally more than enough time for everything to cool off. Plus, I'm not running the CPU overclocked, so it shouldn't be taxed too much. There's potential variation in ambient temperatures, though I keep my office generally in the 70-75F range. The periodic retesting of GPUs also looks for anomalies, and they're pretty rare thankfully.

alceryes

Splendid

Do you have any plans to put the GTX 1080 Ti in your new GPU hierarchy chart?

Yeah, I actually have a bunch of the previous gen cards now tested and just need to update the article charts, tables, etc. with the data. Actually, I want to finish testing the Vega/Polaris generation of AMD GPUs before I push the next update live, which should be in the next day or two hopefully.

For those sticking with this thread (since the other got vaporized), I've just done another major update. New stuff this round:

- RTX 3090 Ti retakes the crown

- Lots of older GPUs now tested and included

I'm nearly finished with all previous generation cards that I'm going to retest. The only GPUs that are still missing:


- GTX 1650 GDDR6

- RX 550 4GB (yeah, I've got one...)

- GTX 1060 3GB

- GT 1030 2GB (ugh...)

- Titan V

- Titan Xp

- Titan X (Maxwell)

- R9 Fury X

- R9 390

Oh, you know how it is... if there's a "What idiot would want to see THAT card on the list??!" then it's probably me!

But nothing that occurs to me offhand. Yet. (insert foreboding music here)

EDIT: wait, GTX 1060 3GB!

It's coming! It will fail on RDR2 at 1080p ultra, but I think it will at least try to run everything else. LOL. The lack of an RDR2 score will probably push it below the GTX 1050 Ti, though.

hotaru.hino

Glorious

Looking at the Steam Hardware Survey as of March 2022, 68% of users are using 1080p resolution. The second most common resolution used is 1440p at ~10%. But third place is 1366x768 at 6%.

So given what people "actually use", 1366x768 should be tested too. 😉

Screw you!

It's because Steam includes a lot of older laptops running crappy displays with integrated graphics. I would wager 99% of the 1366x768 results are from such laptops, and probably a huge chunk of the 1080p stuff is laptops as well.

I do test 720p on super low-end GPUs (like Intel and AMD integrated), though I haven't done that for the new hierarchy yet. Those chips are like my lowest concern right now, and they're probably not even going to manage to run 1080p ultra, which means I'll test 720p medium and 1080p medium and put them at the very bottom of the list.

hotaru.hino

Glorious

It would be interesting to see how iGPUs and budget video cards (whatever that means today) fare, especially if devices similar to the Steam Deck pick up... well, steam.

But yeah, I'm in no rush to see that data. Notebookcheck fills that niche if I really want to see some data.

@King_V will be pleased to see the GTX 1060 3GB is now in the charts. As I sort of suspected, at 1080p ultra it ends up below the GTX 1050 Ti, mostly because of the "zero" result in RDR2. (Technically I use a "1.0" result for benchmarks that fail to run, because I used the geometric mean of the scores and zeroes don't work with that.)
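For anyone wondering why a single failed game hurts so much, here is a minimal sketch of a geometric mean with a 1.0 placeholder for a benchmark that would not run. The fps numbers are invented purely for illustration and are not results from the charts; `FAILED` and `geomean` are names made up for this example.

```python
import math

FAILED = 1.0  # placeholder score; a true 0 would make the logarithm undefined


def geomean(scores):
    """Geometric mean: the exponential of the average of the logs."""
    return math.exp(sum(math.log(s) for s in scores) / len(scores))


# Hypothetical per-game fps for two cards across the same four games.
card_with_failure = [60.0, 50.0, FAILED, 70.0]  # faster, but one game fails
slower_card = [45.0, 40.0, 30.0, 50.0]          # slower, runs everything

print(f"with failure: {geomean(card_with_failure):.1f}")
print(f"slower card:  {geomean(slower_card):.1f}")
```

With these made-up numbers the card that is faster in every game it can run still lands well below the slower card, because the geometric mean multiplies the scores together and one near-zero entry drags the whole product down.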

And the irony is, until now, I've only ever owned a GTX 1080 from Nvidia.

I mean, well, and a Riva 128.

Bummer, though, that there's no easy way to compensate for a "can't run this particular game, but otherwise would be higher in the charts" situation.

Are there other cards that got hit with a similar issue (unable to run at least one game in the suite)? For a moment I wondered if it was a "minimum VRAM requirement" kind of thing, but then if it was, for RDR2 at least, I figured the 1050 and 780 would've failed as well.


Have you not noticed the image?

So yeah, anything with less than 4GB fails at 1080p ultra on RDR2. And the GTX 780 failed to run Far Cry 6 as well — the game says it needs GTX 900-series, not sure exactly why.

Actually, I've updated the charts to just remove the "could not run" results rather than counting them as a "0" (one), which gives much improved standings that aren't entirely accurate, but whatever. It's as good as I'll get without testing more games and/or using different settings. 🤷
