GTX 970 for 1080p

- Thread starter chrisp99

- Start date


Solution

FO4 http://www.techspot.com/review/1089-fallout-4-benchmarks/page2.html

Witcher 3 strains them a little http://www.techspot.com/review/1006-the-witcher-3-benchmarks/page3.html


ComputerSecurityGuy

Admirable

Yep. If you are planning on upgrading resolution in the future then get an R9 390 or better, but the 970 is the ultimate 1080p GPU.

loki1944

Distinguished

chrisp99 :

Hi guys im lookin to upgrade from my gtx 760 would a gtx 970 max out every game at 1080p?

970 will be alright for 1080p; even my 780Tis which only have 3GB of VRAM are good enough for 1080p most of the time. Only thing that will crush it would be Shadow of Mordor and GTA V completely maxed out at 1080p http://www.tweaktown.com/tweakipedia/90/much-vram-need-1080p-1440p-4k-aa-enabled/index.html.

rolli59

Titan

FO4 http://www.techspot.com/review/1089-fallout-4-benchmarks/page2.html

Witcher 3 strains them a little http://www.techspot.com/review/1006-the-witcher-3-benchmarks/page3.html


loki1944

Distinguished

chrisp99 :

An gta v is one of my games i wanna max

Maxed out with AA and advanced settings? Then you'll want to look at a 390 or 980Ti for the VRAM, GTA V is one VRAM hungry port maxed out. If you're talking just Very High settings then a GTX 980 will get you 30FPS+ @1080p http://uk.hardware.info/reviews/6140/3/gta-v-performance-review-tested-with-23-gpus-test-results-full-hd-1920x1080. If you want 60FPS completely maxed out with advanced settings at 1080p you'll need SLI or Crossfire.

rolli59

Titan

chrisp99 :

So do you guys think if i get the 970

i can run gta v maxed out with my fx 6300 clocked at 4.4 ghz and 12 gigs of ram?


FO4 is one of those games where CPU matters http://www.techspot.com/review/1089-fallout-4-benchmarks/page5.html

FX6350 gets lower score with GTX980Ti than 6700K with GTX970 so hard to say.

loki1944 :

970 will be alright for 1080p; even my 780Tis which only have 3GB of VRAM are good enough for 1080p most of the time. Only thing that will crush it would be Shadow of Mordor and GTA V completely maxed out at 1080p http://www.tweaktown.com/tweakipedia/90/much-vram-need-1080p-1440p-4k-aa-enabled/index.html.

AFAIK, there is no utility capable of measuring VRAM usage, and as long as that remains true, I can't put much faith in tweaktown's results.

Most reviewers are using GPU-Z or some other utility which, in reality, does not measure VRAM usage but VRAM allocation. The distinction is important. The tweaktown folks say that they recorded VRAM usage but they don't say with what. They also don't say what GFX card(s) was (were) used. All I was able to fathom from their articles was:

CPU: Intel Core i7 5820K processor w/Corsair H110 cooler

Motherboard: GIGABYTE X99 Gaming G1 Wi-Fi

RAM: 16GB Corsair Vengeance 2666MHz DDR4

Storage: 240GB SanDisk Extreme II and 480GB SanDisk Extreme II

Chassis: Lian Li T60 Pit Stop

PSU: Corsair AX1200i digital PSU

Software: Windows 7 Ultimate x64

This VRAM debate has been going on for quite some time, and one of the most definitive articles on the subject has been lost as the web site is gone:

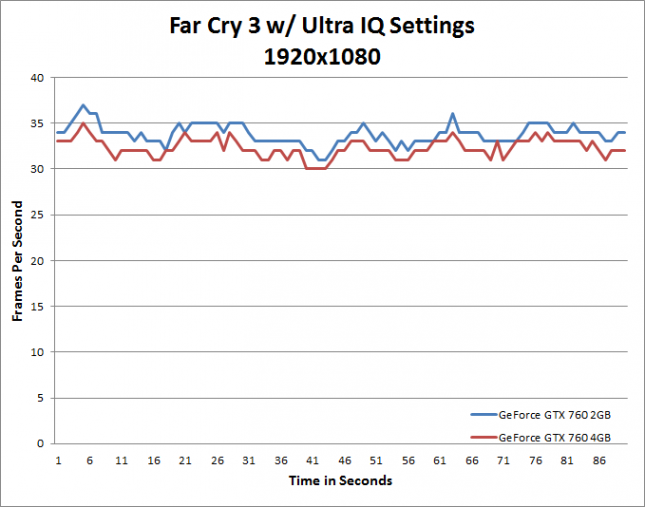

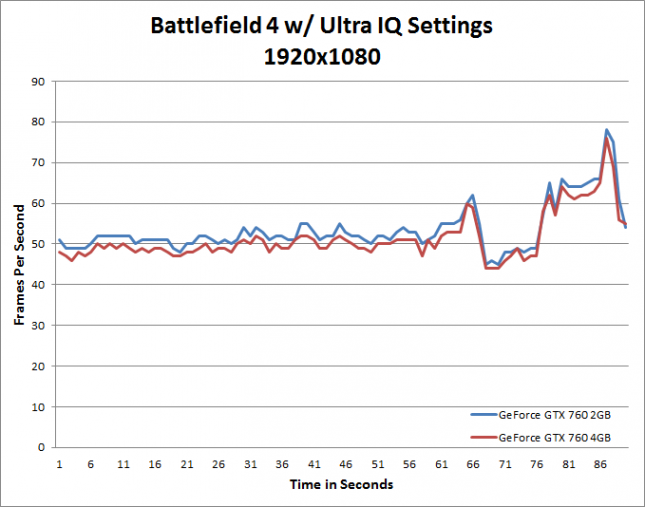

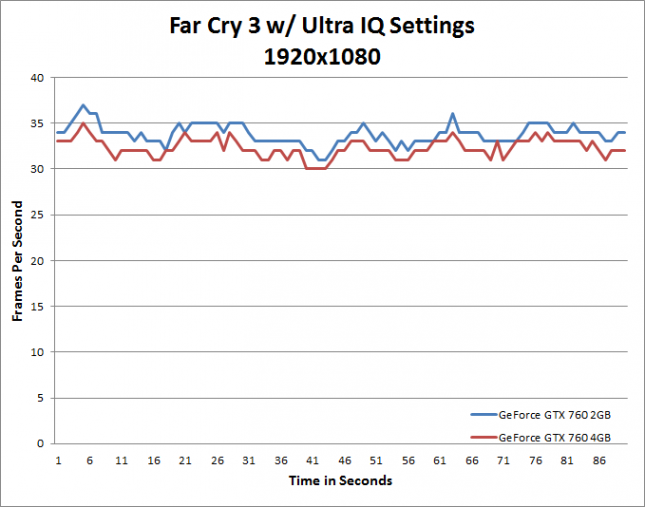

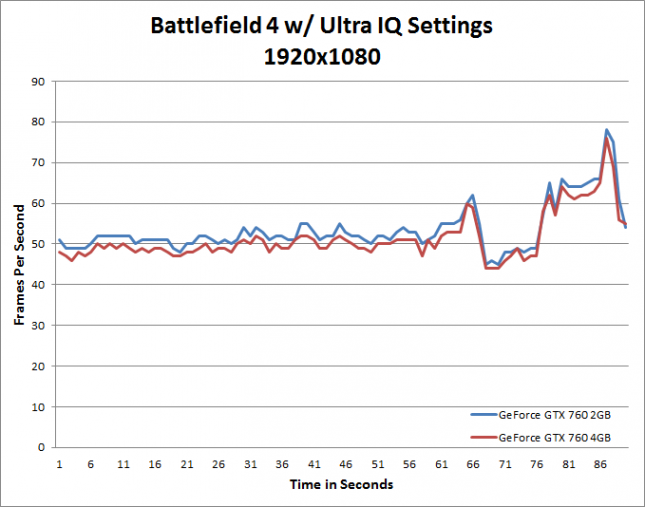

http://alienbabeltech.com/main/gtx-770-4gb-vs-2gb-tested/3/

They compared the 2 GB and 4 GB 770s and found virtually no difference in performance between the cards. In some cases, the 2 GB model was faster, but most often the differences either way were in tenths of an fps. The far more interesting point was during Max Payne, when the game would not allow one to set a res of 5760 x 1080 with the 2 GB card installed. However, after installing the 4 GB card and selecting that setting, switching back to the 2 GB card produced virtually the same results with no drop in performance, image quality or playability.

Again, the original article is gone, but you can find their results copied on youtube and numerous forums:

https://www.youtube.com/watch?v=o_fBCvFXi0g

http://www.legitreviews.com/gigabyte-geforce-gtx-760-4gb-video-card-review-2gb-4gb_129062/4

https://www.pugetsystems.com/labs/articles/Video-Card-Performance-2GB-vs-4GB-Memory-154/

http://www.guru3d.com/articles-pages/gigabyte-geforce-gtx-960-g1-gaming-4gb-review,13.html

While all of these tests show marginal performance differences, many times with 2 GB getting better fps, none really explained why they were showing > 2 GB VRAM usage on the 4 GB cards. But extremetech does:

http://www.extremetech.com/gaming/213069-is-4gb-of-vram-enough-amds-fury-x-faces-off-with-nvidias-gtx-980-ti-titan-x

The problem, as mentioned above, is that there is no way to measure VRAM req'd or VRAM used, only VRAM allocated. It's kinda like a credit card limit. Utilities like GPU-Z are looking at your credit limit, not your balance. Just because I have, say, a $16k credit limit does not mean I owe anyone $16k. The card vendor looks at my assets and makes a decision how much to allocate for my credit line.... that doesn't mean I am using it. Same thing.

When a game is installed / run, it looks at my assets (VRAM installed) and says "This dude has 8 GB, so let's set aside 3/4 of that for our game to use if needed". So if you have 8 GB, it might "allocate" 6 GB.... but if you have, say, 4 GB, it would allocate 3 GB ... this is what GPU-Z reports: the allocation, not the usage.
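To put rough numbers on the allocation-vs-usage point, here is a minimal Python sketch. Everything in it is an assumption made up for illustration: the function names, the 3/4 reserve fraction (taken from the example above, not from any real game) and the 2.5 GB working set. It models nothing about how a real driver or GPU-Z works; it only shows why the reported figure grows with the card while the actual requirement stays put.

```python
# Hypothetical illustration only: all names and numbers here are invented.

def allocated_vram_gb(installed_gb, reserve_fraction=0.75):
    """What a monitoring tool would show: the amount the game set aside."""
    return installed_gb * reserve_fraction


def fits_in_vram(working_set_gb, installed_gb):
    """What actually matters: does the data the game touches fit on the card?"""
    return working_set_gb <= installed_gb


# Assumed real requirement of the game; no monitoring tool reports this directly.
working_set_gb = 2.5

for installed_gb in (4, 8):
    reported = allocated_vram_gb(installed_gb)
    fits = fits_in_vram(working_set_gb, installed_gb)
    print(f"{installed_gb} GB card: tool reports {reported:.1f} GB, game fits: {fits}")
```

The reported number doubles when the card doubles, yet the answer to "does the game fit" is the same for both cards, which is the credit limit vs balance distinction.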

From the above link:

Some games won’t use much VRAM, no matter how much you offer them, while others are more opportunistic. This is critically important for our purposes, because there’s not an automatic link between the amount of VRAM a game is using [according to your measuring tool] and the amount of VRAM it actually requires to run.

GPU-Z claims to report how much VRAM the GPU actually uses, but there’s a significant caveat to this metric. GPU-Z doesn’t actually report how much VRAM the GPU is actually using — instead, it reports the amount of VRAM that a game has requested. We spoke to Nvidia’s Brandon Bell on this topic, who told us the following: “None of the GPU tools on the market report memory usage correctly, whether it’s GPU-Z, Afterburner, Precision, etc. They all report the amount of memory requested by the GPU, not the actual memory usage. Cards will larger memory will request more memory, but that doesn’t mean that they actually use it. They simply request it because the memory is available.”

You can look at all the graphs in the article, which show absolutely no advantage from having > 4GB at any resolution besides 4k, and at that resolution the game was unplayable.

First, there’s the fact that out of the fifteen games we tested, only four of them could be forced to consume more than the 4GB of RAM. In every case, we had to use high-end settings at 4K to accomplish this.....

While we do see some evidence of a 4GB barrier on AMD cards that the NV hardware does not experience, provoking this problem in current-generation titles required us to use settings that rendered the games unplayable on any current GPU. ...

... If you’re a gamer who wants 4K and ultra-high quality visual settings, none of the current GPUs on the market are going to suit you.

loki1944

Distinguished


Well, actually HardOCP also noted the VRAM impact: "GeForce GTX 980 Ti SLI also costs $1300, and it allows you to go just a step above in Grand Theft Auto V. We were able to play at all the same settings as the AMD Radeon R9 Fury X CrossFire configuration. In addition we were able to raise the view distance slider and shadow distance slider to half of the slider position. This extends the view distance of the game and how far out detailed shadows are rendered.

With GeForce GTX TITAN X SLI we could extend this out another notch all the way up to full extended view and shadows in Grand Theft Auto V. This shows that the higher framebuffer of these GPUs is allowing this setting, and below you will see what happens when we tried it on Fury X CrossFire.

Turning the distance sliders to maximum just kills performance on the AMD Radeon R9 Fury X CrossFire at 4K. Without these options enabled we were averaging above 60 FPS. Just turning them on to the full distance cuts performance in half and makes Grand Theft Auto V very unplayable. This does not happen on the TITAN X SLI and GTX 980 Ti SLI configuration."

And it's pretty obvious that even if GPU-Z isn't perfect it can still tell the story of too little VRAM: when it's showing over 3GB being used while you're getting stutter, the issue is VRAM. I tested this with my 780Tis; certain games would stutter and the GPU-Z log would show the demand going above 3GB. Some of those games were OK on my 290Xs with the full 4GB; others needed the 980Ti's 6GB of VRAM for smooth gameplay.

loki1944 :

And it's pretty obvious that even if GPU-Z isn't perfect it can still tell the story of too little VRAM: when it's showing over 3GB being used while you're getting stutter, the issue is VRAM. I tested this with my 780Tis; certain games would stutter and the GPU-Z log would show the demand going above 3GB. Some of those games were OK on my 290Xs with the full 4GB; others needed the 980Ti's 6GB of VRAM for smooth gameplay.

In the future, I would ask that ...

1. When quoting a long post, edit out the parts you are not responding to, to make it easier on folks following the thread.

2. Please include the link you are quoting so we can get additional information and context and use the forum's quote function for the actual quote.

As to your response:

a) The OP stated:

Hi guys im lookin to upgrade from my gtx 760 would a gtx 970 max out every game at 1080p?

So whether there is a memory issue at 4k in SLI / CF really has no bearing on the topic of a single card at 1080p.

b) I tracked down your link and it's entitled "AMD Radeon R9 Fury X CrossFire at 4K Review"

http://www.hardocp.com/article/2015/10/06/amd_radeon_r9_fury_x_crossfire_at_4k_review/5#.Vlx8ZL8l8Qk

The OP does not have CF or SLI, nor is he using 4k.

c) I and the linked authors only mention 4k in passing in response to the question for clarification ... "no, there is no issue at 1080p (or 1440p), but you can **create** one at 4k". And by create one, I mean using settings that make the game unplayable.

As for 4k, I must start off by saying that, IMO, no 2 GPUs made today are capable of delivering what I would call a "satisfactory experience" at 4k. I don't think we will see a CF / SLI pairing that can for another 12-16 months. And until DisplayPort 1.3 comes along, I can't recommend 4k anyway.

As to HardOCP's conclusions, I don't think their results lead to a definitive conclusion.... they certainly suggest one, but again ....

1. Is there a problem using settings that people would actually use? As it said in most of the other links ... what's the problem with not having enough RAM for settings that render the game unplayable anyway?

2. There's also the noted issue of AMD / HBM having a problem at the 4 GB barrier where nVidia does not. So is the "performance dropped like a rock" observation due to the amount of RAM or to the AMD / HBM implementation, as noted by extremetech?

As for your GPU-Z results, again, since GPU-Z does not measure VRAM usage, there is no way to determine whether you are overextending the GPU or the VRAM. As all the other links have shown, switching from a GPU with "X" RAM to the same GPU with "2X" RAM did not change anything in test after test after test. Using a different GPU with more RAM does not show that the bottleneck was the RAM.

loki1944

Distinguished


Yeah, I know he doesn't have crossfire or SLI; it was to the point of VRAM not being a big deal, because it is, and it is documented by Tweaktown that the usage pegs around 4GB maxed out at 1080p http://www.tweaktown.com/tweakipedia/90/much-vram-need-1080p-1440p-4k-aa-enabled/index.html. And with over 4GB, or near it, being used, what happens when you run out is relevant regardless of what resolution you are at.

It just amazes me that people would prefer stuttering to not stuttering by insisting that less VRAM is the way to go if you have a choice; like I stated it's obvious what happens when you run out of VRAM; I've seen it at 1080p with 3GB GPUs; it's a stutter-fest. And there are already games like SoM and GTA V pushing that limit at 1080p when they are completely maxed out. If VRAM didn't matter at 1080p we'd still be on 512MB cards.

You may not agree with HardOCP, but I do. As for GPUs with more memory not changing anything, you can already see it in the HardOCP article: higher settings are possible at playable frame rates with the 980Ti/Titan X than with the Fury X, and AnandTech noted the same:

"Unfortunately for AMD, the minimum framerate situation isn’t quite as good as the averages. These framerates aren’t bad – the R9 Fury X is always over 30fps – but even accounting for the higher variability of minimum framerates, they’re trailing the GTX 980 Ti by 13-15% with Ultra quality settings. Interestingly at 4K with Very High quality settings the minimum framerate gap is just 3%, in which case what we are most likely seeing is the impact of running Ultra settings with only 4GB of VRAM. The 4GB cards don’t get punished too much for it, but for R9 Fury X and its 4GB of HBM, it is beginning to crack under the pressure of what is admittedly one of our more VRAM-demanding games." http://www.anandtech.com/show/9390/the-amd-radeon-r9-fury-x-review/15

Now, can you get by on 2-4GB with modern titles at 1080p? Sure you can, but for some, which will only increase in number, you will need more VRAM to completely max them out and not have stuttering.

Again, when quoting a post,

1. please edit the posts you are quoting to include ONLY relevant sections

2. Also eliminate any quotes within the quote

3. Eliminate any images contained in what you're quoting

As for agreeing with HardOCP it is not about agreeing or disagreeing with a supposition, it's about proving your case. They didn't do that.

1. To prove a "cause and effect" you have to eliminate all other variables. HardOCP did not do that. Example:

"My PC wasn't working so I replaced my RAM, MoBo and CPU .... my conclusion is that the RAM was faulty."..... the only way to prove that the RAM was faulty would be to replace the RAM ONLY, since you have more than 1 thing that is different, you can conclude nothing. You can't compare a FuryX and 980 Ti and conclude that the amount of VRAM was the cause for the performance "dropping like a rock" as you have:

a) Different Amounts of RAM

b) Different types of RAM

c) Different GPUs

It's not a matter of you agreeing with their hypothesis; it's still an unproven hypothesis because there is more than 1 variable.

2. It's not a matter of stuttering / not stuttering if you are not seeing ANY stuttering.

a) Watercooled 4770k (CPU, GPUs, MoBo) build (4.6 GHz), twin 780s w/ 26% OC @ 144 Hz / 1080p .... never saw stuttering

b) Watercooled (CPU only) 4690k build (4.5 GHz), twin 970s w/ 18% OC @ 144 Hz / 1440p .... never saw stuttering

-Could I **create** a situation where I could make stuttering happen? Yes.

-Has it ever happened in any circumstance in which I or anyone normally uses the PC to play games? No.

-Have allegations of stuttering caused by insufficient VRAM ever been proved out by testing with the same GPU at above and below 4 GB? No.

-Have allegations of stuttering caused by having < 4 GB at 1080p been borne out via testing under "normal usage"? No.

3. The deficiencies of Tweaktown's efforts have already been addressed.

a) They are using a tool which is incapable of measuring the amount of VRAM used. The fact that they do not recognize nor even mention this, means they are not equipped to make a determination. They have shown that if present, the system will ***allocate*** more than 4 GB of VRAM, they have not shown in any way, shape or form, that having less VRAM had a negative effect on performance because they did not test this scenario.

Again, they have done nothing to show that it is needed; they don't even list how they measured usage or what card(s) were used in the test. Using, or better said "allocating", is no evidence of "need". Again, the Max Payne test should have put this to bed once and for all. The game would not even let you install and use 5760 x 1080 because it said "you do not have all the RAM you need". When they did set it up, it showed that the game **needed** 2750 MB. Yet, what happened when the authors swapped the 4 GB card back out for the 2 GB one? Did their / your hypothesis bear out? If you think it did, you're going to have to explain to me how a game which ***needs*** 2750 MB of VRAM:

a) was able to run at the same fps with 2 GB as it did at 4 GB

b) was able to run at no observable decrease in image quality

c) was able to run with no stuttering

So how did the 2000 MB card manage to accomplish this feat if it **needs** 2750 MB ?

The HardOCP reference didn't do this, the Tweaktown reference did not do this and the Anandtech reference didn't do this.... none of them showed that having less than what GPU-Z ***says*** it is allocating when a card w/ more VRAM is present has any impact on performance whatsoever. There is one and only one way to show that insufficient VRAM causes a problem. Guru3D did this, Legitreviews did this, Puget Systems did this and all came to the same conclusion: under "normal" usage, you do not have a problem.

You must compare a GPU with, say, "X" GB of RAM and the same GPU with "Y" GB of RAM. Using different GPUs, different types of RAM and different amounts of RAM is not an apples-to-apples comparison; it's a whole fruit salad.
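The one-variable rule can be written down as a quick check. This is just a sketch in Python: the helper names and the dictionary layout are made up for illustration, and the card entries list only the three variables discussed in this thread, nothing else about the test rigs.

```python
# Hypothetical helpers: a comparison only isolates VRAM amount when
# VRAM amount is the single thing that differs between the two setups.

def differing_variables(config_a, config_b):
    """Return the sorted names of the settings that differ between two setups."""
    keys = config_a.keys() | config_b.keys()
    return sorted(k for k in keys if config_a.get(k) != config_b.get(k))


def isolates_vram_amount(config_a, config_b):
    """True only if the VRAM amount is the one and only difference."""
    return differing_variables(config_a, config_b) == ["vram_gb"]


gtx770_2gb = {"gpu": "GTX 770", "vram_gb": 2, "vram_type": "GDDR5"}
gtx770_4gb = {"gpu": "GTX 770", "vram_gb": 4, "vram_type": "GDDR5"}
fury_x = {"gpu": "R9 Fury X", "vram_gb": 4, "vram_type": "HBM"}
gtx980ti = {"gpu": "GTX 980 Ti", "vram_gb": 6, "vram_type": "GDDR5"}

print(differing_variables(gtx770_2gb, gtx770_4gb))  # one difference
print(differing_variables(fury_x, gtx980ti))        # three differences
```

The 2 GB vs 4 GB 770 pair passes the check; the Fury X vs 980 Ti pair differs in GPU, amount of RAM and type of RAM all at once, so a performance gap between those two can't be pinned on any one of them.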

Using GPU-Z to measure RAM usage is like looking at a circuit breaker to determine how many amps a circuit is using. Just because the breaker can carry 20 amps doesn't mean it is actually carrying 20 amps. If I put a current meter on the line I might determine, say, that it carries 6 amps. Switching the 20 amp breaker out for a 30 amp won't change the fact that it will still only carry 6 amps, and dropping the breaker to 15 amps also won't change a thing.

My 3 sons play GTAV on 3 different boxes w/o issue at 120 / 144 Hz. With the settings they play at (that being what's required to get a decent frame rate for a 120 / 144 Hz screen), RAM is not an issue.... and as you will see below.... it doesn't even "allocate" 4 GB at 1080p / 1440p.

Knowledgeable authors like Guru3D killed the SoM claim

http://www.guru3d.com/news-story/middle-earth-shadow-of-mordor-geforce-gtx-970-vram-stress-test.html

No one is saying that VRAM doesn't matter ... what everyone is saying is the amount must be appropriate to what your GPU can do. Like ya don't put 8GB on a GTX 960. And, as the dozens of knowledgeable authors referenced above found, no one's been able to duplicate these stuttering problems w/o doing something freaky.

What all these sites are saying is that in order to create this stuttering, you have to up the settings to a point where the game is unplayable. OK, let's follow your scenario ... you have the new nVidia whoopdeedoo 1000 series card in the 4GB version, and if you take a certain game to the highest possible settings you exceed the frame buffer and are playing at 22 fps. So you trade it in for the 8GB version and eliminate the stuttering .... but you are still playing at 22 fps. Happy? Not me; if it can't stay above 60 on the 144 Hz screens, I'm not playing.

Show me. Show me a series of tests .... one not using a bastardized port from a console game (i.e. Assassin's Creed).... that shows an impact. Why have none of these authors been able to show this stuttering problem w/ 4 GB at 1080 p .... without doing something freaky ?

Here's a good 40 or 50 that don't ... again, that's no make-believe * GPU-Z measurement; that's swapping a 2 GB card for a 4 GB card with the same GPU and measuring fps and examining quality ... at resolutions up to 5760 x 1080, no significant impact. The 770 is last generation, but again we are talking just 2 GB and, more importantly, no impact at 3 x 1080p!

https://www.youtube.com/watch?v=o_fBCvFXi0g

* BTW, it's not that GPU-Z and all the others are inaccurate; it's just that they do not measure VRAM usage. If I get "allocated" 5 sick and 5 personal days a year ... that doesn't mean I use them.

Here's more ... actual tests with 2 GB and 4 GB cards .... no stuttering

Here ya go .. and with Shadow of Mordor.. no stuttering

And Grand Theft Auto V ... doesn't break 4GB at 1440p ... and again, that's allocated, not used....

http://www.legitreviews.com/gigabyte-geforce-gtx-760-4gb-video-card-review-2gb-4gb_129062/6

That's it in a nutshell.... by the time you see an advantage from the extra memory, the GPU's capabilities have ya coming up short of an enjoyable playing experience.

960 matches well enough with 2 GB

970 matches well with 4 GB

980 Ti matches well with 6 GB tho at present, you're not going to see any real benefit from it ... at least not at 1080 / 1440p.

Again, if you are going to quote the post, please edit out the images and irrelevant text.

1. please edit the posts you are quoting to include ONLY relevant sections

2. Also eliminate any quotes within the quote

3. Eliminate any images contained in what you're quoting

As for agreeing with HardOCP it is not about agreeing or disagreeing with a supposition, it's about proving your case. They didn't do that.

1. To prove a "cause and effect" you have to eliminate all other variables. HardOCP did not do that. Example:

"My PC wasn't working so I replaced my RAM, MoBo and CPU .... my conclusion is that the RAM was faulty."..... the only way to prove that the RAM was faulty would be to replace the RAM ONLY, since you have more than 1 thing that is different, you can conclude nothing. You can't compare a FuryX and 980 Ti and conclude that the amount of VRAM was the cause for the performance "dropping like a rock" as you have:

a) Different Amounts of RAM

b) Different types of RAM

c) Different GPUs

It's not a matter of you agreeing with their hypothesis, it's still a unproven hypothesis because there is more than 1 variable.

2. It's not a matter of stuttering / not stuttering if you are not seeing ANY stuttering.

a) Watercooled 4770k (CPU, GPUs, MoBo) build (4.6 HGhz), twin 780s w/ 26% OC @ 144 Hz / 1080p .... never saw stuttering

a) Watercooled (CPU Only) 4690k build (4.5 HGhz), twin 970s w/ 18% OC @ 144 Hz / 1440p .... never saw stuttering

-Could I **create** a situation where i could make stuttering happen ? Yes.

-Has it ever happen in any circumstance in which I or anyone normally uses the PC to play games ? No.

-Have allegations of stuttering caused by insufficient VRAM even been proved out by testing with same GPU at above and below 4 GB ? No.

-Have allegations of stuttering caused by having < 4k at 1080p been borne out via testing under "normal usage" ? No.

3. The deficiencies of Tweaktown's efforts have already been addressed.

a) They are using a tool which is incapable of measuring the amount of VRAM used. The fact that they do not recognize nor even mention this, means they are not equipped to make a determination. They have shown that if present, the system will ***allocate*** more than 4 GB of VRAM, they have not shown in any way, shape or form, that having less VRAM had a negative effect on performance because they did not test this scenario.

Our first article really gave us a great look at just how much VRAM you need in games

Again, they have done nothing to show that it is needed; they don't even list how they measured usage or what card(s) were used in the test. Using or better said "allocating" is no evidence of "need". Again, the Max Payne test should have put this to bed once and for all. The game would not even let you install and use 5760 x 1080 because it said "you do not have all the RAM you need'. When they did set it up, it showed that the game **needed** 2750 MB. Yet, what happened when the authors swapped the 2GB card for the 4GB ? Did their / your hypothesis bear out ? If you think it did, you're going to have to explain to me why how a game which ***needs*** 2750 MB of VRAM"

a) was able to run at the same fps with 2 GB as it did at 4 GB

b) was able to run at no observable decrease in image quality

c) was able to run with no stuttering

So how did the 2000 MB card manage to accomplish this feat if it **needs** 2750 MB ?

The HardOCP reference didn't do this, the Tweaktown reference did not do this and the Anandtech reference didn't do this.... none of them showed that having less than what GPU-Z ***says*** it is allocating when a card w/ more VRAM is present has any impact on performance whatsoever. There is one and only one way to show that insufficient VRAM causes a problem. Guru3D did this, Legitreviews did this, Puget Sound did this and all came to the same conclusion. Under "normal" usage, you do not have a problem.

You must compare a GPU with say "X" GB of RAM and the same GPU with "Y" GB of RAM. Using different GPUs, different types of RAM and different amounts of RAM is not an apples to apples comparison, it's a whole fruit salad.

Using GPUz to measure RAM usage is like looking at a circuit breaker to determine how many amps a circuit is using. Just because the breaker can carry 20 amps, doesn't mean it is actually carrying 20 amps. If I put a current meter on the line I might determine say that it carries 6 amps . Switching the 20 amp breaker out for a 30 amp won't change the fact that it will still only carry 6 amps and dropping the breaker to 15 amps also won't change a thing.
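To put the breaker analogy in concrete terms, here is a toy sketch of "allocated" vs "needed". It is not how any particular game engine or GPU-Z works; the function names and every number in it (working set, cacheable extras, card sizes) are made up purely for illustration. The assumption is simply that a game keeps what it needs resident and opportunistically caches extras in whatever VRAM is left over.

```python
def allocated_mb(capacity_mb, working_set_mb, cacheable_mb):
    """Toy model: keep the working set resident, then fill spare VRAM
    with optional cached assets. All inputs are hypothetical."""
    resident = min(working_set_mb, capacity_mb)
    spare = capacity_mb - resident
    return resident + min(spare, cacheable_mb)


def overflow_mb(capacity_mb, working_set_mb):
    """Only this portion would have to spill out of VRAM."""
    return max(0, working_set_mb - capacity_mb)


if __name__ == "__main__":
    working_set = 1800  # hypothetical MB the scene actually needs
    cacheable = 3000    # hypothetical MB of nice-to-have cached assets
    for capacity in (2048, 4096, 6144):
        print(capacity,
              allocated_mb(capacity, working_set, cacheable),
              overflow_mb(capacity, working_set))
```

In this toy model the "allocated" figure climbs with the size of the card while the overflow stays at zero on all three, which is the breaker vs. current meter point: the reading tracks what is available, not what is required.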

And there are already games like SoM and GTA V pushing that limit at 1080p when they are completely maxed out. If VRAM didn't matter at 1080p we'd still be on 512MB cards.

My 3 sons play GTAV on 3 different boxes w/o issue at 120 / 144 Hz. With the settings they play at (that being what's required to get a decent frame rate for a 120 / 144 Hz screen), RAM is not an issue....and as you will see below.... it doesn't even "allocate" 4 GB at 1080p / 1440p.

Knowledgeable authors like Guru3D killed the SoM claim

http://www.guru3d.com/news-story/middle-earth-shadow-of-mordor-geforce-gtx-970-vram-stress-test.html

Thing is, the quantifying fact is that nobody really has massive issues, dozens and dozens of media have tested the [GTX 970] card with in-depth reviews like the ones here on my site. Replicating the stutters and stuff you see in some of the video's, well to date I have not been able to reproduce them unless you do crazy stuff, and I've been on this all weekend.....

Overall you will have a hard time pushing any card over 3.5 GB of graphics memory usage with any game unless you do some freaky stuff. The ones that do pass 3.5 GB mostly are poor console ports or situations where you game in Ultra HD or DSR Ultra HD rendering. In that situation I cannot guarantee that your overall experience will be trouble free, however we have a hard time detecting and replicating the stuttering issues some people have mentioned.

No one is saying that VRAM doesn't matter ... what everyone is saying is the amount must be appropriate to what your GPU can do. Like ya don't put 8GB on a GTX 960. And, like the dozens of knowledgeable authors referenced above, no one's been able to duplicate these stuttering problems w/o doing something freaky.

What all these sites are saying is that in order to create this stuttering, you have to up the settings to a point where the game is unplayable. OK, let's follow your scenario ... you have the new nVidia whoopdeedoo 1000 series card in the 4GB version and if you take a certain game to the highest possible settings you exceed the frame buffer and are playing at 22 fps. So you trade it in for the 8GB version and eliminate the stuttering .... but you are still playing at 22 fps. Happy ? Not me, if it can't stay above 60 on the 144 Hz screens, I'm not playing.
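For anyone who wants the 22 fps scenario in frame-time terms, here is a small sketch of the arithmetic. The helper name is mine, and the only inputs are the frame rates mentioned in this post (22, 60 and 144).

```python
def frame_time_ms(fps):
    """Average time per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


if __name__ == "__main__":
    for fps in (22, 60, 144):
        print(f"{fps:>3} fps -> {frame_time_ms(fps):.2f} ms per frame")
```

At 22 fps each frame takes roughly 45 ms on average, against a budget of about 16.7 ms for 60 fps and about 6.9 ms to keep a 144 Hz screen fed. Removing hitches with more VRAM does nothing to that average.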

Now, can you get by on 2-4GB with modern titles at 1080p? Sure you can, but for some, which will only increase in number, you will need more VRAM to completely max them out and not have stuttering.

Show me. Show me a series of tests .... one not using a bastardized port from a console game (i.e. Assassin's Creed).... that shows an impact. Why have none of these authors been able to show this stuttering problem w/ 4 GB at 1080 p .... without doing something freaky ?

Here's a good 40 or 50 that don't ... again that's no make believe * GPU-Z measurement, that's swapping a 2 GB card for a 4 GB card with the same GPU and measuring fps and examining quality ... at resolutions up to 5760 x 1080, no significant impact. The 770 is last generation, but again we are talking just 2 GB and more importantly no impact at 3 x 1080p !

https://www.youtube.com/watch?v=o_fBCvFXi0g

* BTW, it's not that GPU-Z and all the others are inaccurate, it's just that they do not measure VRAM usage. If I get "allocated" 5 sick and 5 personal days a year ... that doesn't mean I use them.

Here's more ... actual tests with 2 GB and 4 GB cards .... no stuttering

Here ya go .. and with Shadow of Mordor.. no stuttering

And Grand Theft Auto V ... doesn't break 4GB at 1440p ... and again, that's allocated, not used....

http://www.legitreviews.com/gigabyte-geforce-gtx-760-4gb-video-card-review-2gb-4gb_129062/6

Legit Bottom Line: The Gigabyte GeForce GTX 760 4GB video card is a very nice card, but by the time we see the performance advantages of having 4GB of memory it doesn’t matter as the overall GPU performance is too low to have an enjoyable gaming experience.

That's it in a nutshell.... by the time you see an advantage, from the extra memory, the GPUs capabilities have ya coming up short of an enjoyable playing experience.

960 matches well enough with 2 GB

970 matches well with 4 GB

980 Ti matches well with 6 GB tho at present, you're not going to see any real benefit from it ... at least not at 1080 / 1440p.

Again, if you are going to quote the post, please edit out the images and irrelevant text.

loki1944

Distinguished

And again, it does matter if the card is fast enough, which is what you need to max it. To me it's simple: i7 4770K + GTX 780Ti, Shadow of Mordor with 6GB textures = stutter; i7 4770K + 980Ti with 6GB textures = no stutter. It doesn't matter to me if you haven't cranked up graphics options to the point where they breach VRAM at 1080p, I know it happens because I've seen it happen, I've given you multiple examples of it happening at 4K from tech sites, if you don't like it well sorry, but I'll agree with them and my own eyes before your claims that less VRAM is better. And also whether it's a port or not is completely irrelevant, since port or not it's being played on the PC in this case. But yeah, if you want to play on reduced settings and be unable to use the maximum resolution textures then by all means go with less VRAM.

"Using a single GTX 780 with resolution set to 1080p with the high preset, the game does a good job of hitting 60fps at nearly all times but it's not 100 per cent stable. Using the game's internal scaler to lower resolution by 10 per cent, however, was enough to eliminate the dips we encountered, producing a constant 60fps throughout testing." http://www.eurogamer.net/articles/digitalfoundry-2014-ryse-pc-face-off. Same deal there with Ryse, "VRAM bottleneck is seen when system RAM usage as well as the pagefile start spiking when at or near the graphic card’s VRAM limitation. Using MSI Afterburner OSD or an equivalent program you can see the Vram, system memory as well as pagefile resources. You should feel a slight stutter when the latter start increasing." http://www.hardwarepal.com/ryse-son-rome-benchmark/

And again, it does matter if the card is fast enough, which is what you need to max it.

Huh ? Did you read any of those articles ? In every case the authors have stated that the only time they could create a problem by exceeding the 4GB barrier was when they were already at unplayable fps because the GPU couldn't keep up.

from the above extremetech link

Some games won’t use much VRAM, no matter how much you offer them, while others are more opportunistic. This is critically important for our purposes, because there’s not an automatic link between the amount of VRAM a game is using and the amount of VRAM it actually requires to run. Our first article on the Fury X showed how Shadow of Mordor actually used dramatically more VRAM on the GTX Titan X as compared with the GTX 980 Ti, without offering a higher frame rate. Until we hit 8K, there was no performance advantage to the huge memory buffer in the GTX Titan X — and the game ran so slowly at that resolution, it was impossible to play on any card.

So what can you take away from that ?

1. There was no performance, quality or other improvement gained from doubling VRAM from 6 to 12 GB when the game was playable.

2. There was a performance advantage when they got to 8k but at the settings required to see that VRAM advantage the game is not playable.

Every site I quoted says the same thing.... by the time you get the settings high enough where the extra VRAM matters, the GPU is overburdened by those settings and can not deliver a satisfactory experience.

.At 4K, [in FC4] there’s evidence of a repetitive pattern in AMD’s results that doesn’t appear in Nvidia’s, and that may well be evidence of a 4GB RAM hit — but once again, we have to come back to the fact that none of the GPUs in this comparison are delivering playable frame rates at the usage levels that make it an issue in the first place

We began this article with a simple question: “Is 4GB of RAM enough for a high-end GPU?” The answer, after all, applies to more than just the Fury X — Nvidia’s GTX 970 and 980 both sell with 4GB of RAM, as do multiple AMD cards and the cheaper R9 Fury. Based our results, I would say that the answer is yes — but the situation is more complex than we first envisioned

Again, raising the settings to a point at which having more than 4 GB matters, the game is unplayable at those settings.

While we do see some evidence of a 4GB barrier on AMD cards that the NV hardware does not experience, provoking this problem in current-generation titles required us to use settings that rendered the games unplayable any current GPU.

So on every game tested, to see any advantage for more than 4 GB at any resolution, you have to use settings at which no GPU made today can deliver acceptable frame rates. I just don't see myself sitting at a PC with a smug smile on my face knowing that I can create a situation where my superior card does not have a VRAM issue .... I can't play any games because fps is unsuitable for 60 Hz let alone a 144 Hz monitor at these settings but I sure can stand tall knowing I don't have a VRAM issue.

As for the stuttering, I got you on one side and I got Guru3D and a dozen other sites saying they can't duplicate your stuttering problem without "doing something freaky".

Tho it's off topic since what we are talking about is your assertion that 4 GB isn't enough for 1080p, you haven't given a single example even that shows a 4GB card doing something that the same card at 2 GB can't do. None of the links you have provided show 4 GB being inadequate at 1080p. In fact your latest link argues exactly the opposite in this respect.

When you need to stoop to rephrasing my position, you weaken yours.... I never said less VRAM is "better". Just said that it's never been shown that outside of poor console ports and "freaky stuff" that having more than 4 GB provides any substantial benefit at 1080p (and even 1440p). To get a benefit from > 4 GB, you need a bigger GPU, otherwise the extra RAM is curtailed by the GPU's performance.

As for your new references:

1. You chose an XBox port as your example. These oft don't do well but I won't make an issue of it ... well why would I, they support everything I said

2. Your quote is taken from the methodology section where they are explaining how they test all cards .... not about the performance of the cards in this game.

3. Your quote is not about the amount of VRAM available, it's about having enough system RAM and page file to support the VRAM and the entire article contradicts your position....read again

VRAM bottleneck is seen when system RAM usage as well as the pagefile start spiking when at or near the graphic card’s VRAM limitation. Using MSI Afterburner OSD or an equivalent program you can see the Vram, system memory as well as pagefile resources. You should feel a slight stutter when the latter start increasing

The use of the word latter in a list of three things .... when the 3 things are VRAM, system memory as well as pagefile resources ... the "latter" would be "page file resources". This is not about having too little VRAM, it's clearly about not having enough system RAM or pagefile to support the VRAM.

Again, your new reference goes on to strongly contradict your insufficient VRAM conclusion

Even the older [2GB] GTX680 Kepler seems to run the game fine at 1080p with normal and high running just under 60fps. The R9-290 has a clear advantage on all the settings against the newer Maxwell GTX970. We see no strange frametime variations throughout the full hd resolution.

At 1440p our GTX680’s VRAM fills * completely from the low setting (our reference Asus is the 2GB version). Looking at frametimes, even though the game is utilizing all the 680s video memory, there are no major hiccups. For our strict standards the game isn’t playable on the older Kepler, even though the scene we benchmarked isn’t entirely taxing and any minor dip below 30 FPS in other scenarios would be visible lag. The GTX970 and R9-290 seem to handle even high pretty well at just below 60 FPS and again we see the R9-290 in the lead.

* By "fills" we now know, he means "allocates".

So your new reference shows the 2 GB card doing just fine at 1080p (seems 4 GB shud be just fine then, no ?) and the author goes on to state quite clearly that the 2 GB card shows no major hiccups even at 1440p. ....the VRAM clearly is not a problem.... but playability is at 30 fps....exactly what I have been saying. In this game, you run out of GPU before VRAM can matter.

At the 4K resolution none of our GPUs can handle even the lowest of settings. The R9-290 has 20-25% higher frame rates and lower frametimes than the GTX970. Strangely though we didn’t feel any excessive stutter other than in the GTX680’s case with the limited VRAM .

Here again, at 4k the 4 GB 290 / 970 had plenty of VRAM to support the game but the game remained at unacceptable frame rates. The 290 did better but they have the same amount of RAM so what's responsible ? Again, ran out of GPU before VRAM. Only way they could get stutter was with the 2 GB card at 4k. Their observations are exactly the opposite of what you are saying; I couldn't ask for a better link to support what I have been saying. The 4k 970 and 290 showed:

No stuttering at all at any resolution up to 4k with 4 GB of VRAM

No stuttering or other major hiccups at 1080p w/ 2 GB

In each instance, the game was limited by the GPU, not the VRAM.

Your dips in frame rate are a GPU limitation, not a VRAM limitation ... there was no "stuttering".
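Since "dips" and "stutter" keep getting used interchangeably, here is a rough sketch of how the two could be told apart from a frame-time log. This is only an illustration: the two logs are synthetic numbers I made up, not measurements from any of the linked reviews, and the "more than 2x the median" spike threshold is an arbitrary assumption, not a standard.

```python
from statistics import mean, median


def summarize(frame_times_ms, spike_factor=2.0):
    """Report average fps plus how many frames took far longer than typical.

    spike_factor is an arbitrary, hypothetical threshold for a 'hitch'.
    """
    typical = median(frame_times_ms)
    spikes = [t for t in frame_times_ms if t > spike_factor * typical]
    return {
        "avg_fps": 1000.0 / mean(frame_times_ms),
        "median_ms": typical,
        "spikes": len(spikes),
    }


# Synthetic logs, for illustration only.
steady_but_slow = [33.3] * 100                # even pacing at roughly 30 fps
fast_with_hitches = [16.7] * 95 + [80.0] * 5  # mostly ~60 fps with 5 hitches

if __name__ == "__main__":
    print(summarize(steady_but_slow))
    print(summarize(fast_with_hitches))
```

The first log is what running out of GPU looks like in this sketch: low but evenly paced, zero spikes. The second has the higher average but a handful of long frames, which is the pattern people mean by stutter.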

Consider the following:

Then there is a matter of textures. By default the game automatically determines the texture quality based on the available VRAM on your GPU. In the preview build, settings ranged from low to high but the final code has changed with settings ranging from low through to very high. GPUs sporting 3GB of memory or more default to the very high preset while 2GB cards are limited to high, 1.5GB cards limited to medium and 1GB cards access poverty-spec low-quality art. The game automatically selects the appropriate option and, by default, does not allow the user to adjust this setting.

Please explain why these two links help rather than hurt the "we need > 4 GB for 1080p" position when:

1. The game sets the texture quality to "highest available preset" when the card is equipped with 3 GB. Apparently the game developers don't think having > 4 GB is necessary at any resolution.

2. Why is it that they had no stuttering at any resolution, even 4k ?

3. Why were there no performance issues at 2 GB at 1080p ?

4. Why was the only problem with the game related to frame rates and running out of GPU rather than running out of VRAM ?

5. If you don't understand what impact a poor console port has on PC performance then you need to do more research before this conversation can be continued.

http://www.tomshardware.com/forum/111766-13-console-ports

http://www.pcgamesn.com/assassins-creed-unity/port-review-assassins-creed-unity

http://www.pcper.com/news/Graphics-Cards/Ubisoft-Responds-Low-Frame-Rates-Assassins-Creed-Unity

http://www.extremetech.com/gaming/194123-assassins-creed-unity-for-the-pc-benchmarks-and-analysis-of-its-poor-performance

Again, can you create problems ... of course. I saw a YouTube video about a guy who, if I am remembering correctly, set one resolution then scaled to another resolution, set max detail and distance and then zoomed in to look at a leaf. So do we put that in the realm of typical for how most people start their gaming sessions.... or do we put that in freaky stuff ?

Reminds me of when an employee wanted me to add an SSD to his computer and to "prove his point" he showed me a side by side comparison of windows booting and auto starting 25 programs.

1. He basically used three programs a day (spreadsheet 90% / word processor 5%, e-mail 5%)

2. He usually left his machine on when he went home

3. When he did shut it down his morning routine was take off jacket, start PC, make coffee, chit chat till it was done and then sit down.

So yes, he was able to create a situation or a "case" for an SSD... but a) it was not representative of his normal activities and b) even if it was, the payback period was in excess of 10 years based upon boot time saved.
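The payback math is simple enough to sketch. The inputs below (SSD cost, seconds saved per boot, boots per year, hourly rate) are placeholder values chosen only to show the calculation; they are not the actual figures from that case.

```python
def payback_years(cost, seconds_saved_per_boot, boots_per_year, hourly_rate):
    """Years until the time saved at boot is worth what the upgrade cost.

    Every argument is a hypothetical input supplied by the caller.
    """
    hours_saved_per_year = seconds_saved_per_boot * boots_per_year / 3600.0
    value_per_year = hours_saved_per_year * hourly_rate
    if value_per_year <= 0:
        return float("inf")  # nothing saved, never pays back
    return cost / value_per_year


if __name__ == "__main__":
    # Placeholder inputs: 100 cost, 30 s saved per boot, 50 boots a year, 20 per hour.
    print(round(payback_years(100, 30, 50, 20), 1))
```

With someone who rarely reboots and makes coffee while the machine starts, the hours saved per year stay tiny, so the payback period stretches out no matter how good the side by side video looks.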

Same thing here... you can create situations where 4GB does something for you, but as all the web authors have stated, ya gotta create some freaky scenarios.

Space.com is part of Future plc, an international media group and leading digital publisher. Visit our corporate site.

© Future Publishing Limited Quay House, The Ambury, Bath BA1 1UA. All rights reserved. England and Wales company registration number 2008885.