Some publishers think an AI can write articles, but results say otherwise.

I Asked ChatGPT How to Build a PC. It Said to Smush the CPU. : Read more

Oh, and it can also be horribly and dangerously wrong, introducing factual errors that aren’t obvious to someone who isn’t familiar with the topic at hand.

The problem is....some of these AI generated texts are out there in the wild.

The article's criticism lacks perspective, and has misleading gaffes of its own.

The 1st ChatGPT (AI hereafter) piece is clearly written as a high-level "overview" instruction, not a step-by-step build, which, as mentioned further in the piece, would need a specific parts list for more detailed instruction. Anyone with a modicum of common sense would see that.

The 2nd AI piece does show the limits of detail that the AI can express, and that one still can't use it as the only guide. But it is still useful as an overview guide. It should be pointed out that the given parts list is incomplete (a case is omitted, which can significantly alter install steps) and vague (types of RAM, type and make of motherboard, etc). The operative judgement here is GIGO.

As far as gaffes and blunders go, the author deliberately exaggerated the AI missteps to sensationalize his piece. Nowhere in the AI response did it use the word 'smush' (which isn't a synonym of 'press'). By now, we all accept the use of exaggerated or embellished article titles (read: clickbait in varying degrees) for SEO purposes. We know that web sites like this depend on clicks for income, and we tolerate the practice to some extent. But if we are to point out foibles in AI-generated writing, then we should first look to our own foibles, lest we start throwing stones in glass houses.

Getting back to the AI's capabilities, I'll offer the aphorism: Ask not how well the dog can sing, but how it can sing at all. The author is nitpicking at what is a rudimentary model. Even in its present form, the above guide is useful, as it forms a meaty framework that a human guide could flesh out with details, thereby shortening his workload. It will inevitably be improved. IMO, step-by-step how-tos are not a high bar to clear.

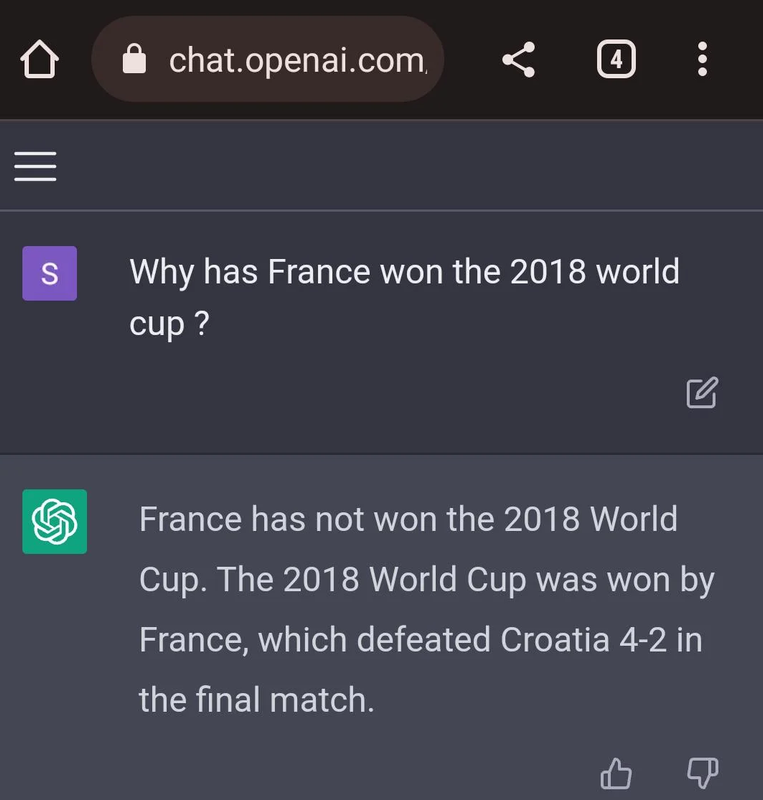

When an algorithm contradicts itself by saying:

"France has not won the 2018 world cup. The 2018 world cup was won by France."

...maybe it's time to admit ChatGPT lacks any intelligence whatsoever and is completely unreliable.

The issue in question is not whether ChatGPT has "intelligence," but whether it can adequately convey information in conversational form. It's the next step in user interface, when we can simply talk to our device as we would a human.

Even as a prototype, its capabilities have disrupted several industries, and will transform many more. We've all read about how colleges are changing their curricula to ward off ChatGPT essays, as well as CNet's use of AI-generated pieces. I can think of many more potentially affected industries. Customer service would be a prime candidate.

Yes, they are error-prone and lacking in flair, etc. But AI capabilities can be iterated and improved upon, while human capabilities generally can't.

Rather than focusing on its present deficiencies, we should all be concerned with the future implications of just how many human jobs will be replaced by AI. CNet's use of AI isn't an outlier; it's only a pioneer. As generative AI inevitably improves, there'll be more and more web sites using it to write articles.

Will it get better?

Yes.

Is it there yet?

Not even close.

IMO, so-called AI is way overrated (as is). E.g. one could fill the databanks with an association along the lines of: "AI is outperforming every human in having a smelly butt.", and if the bot then gets asked: "What are you perfect at?", the answer would probably be: "I excel at having a smelly butt." Which may be funny, but hardly speaks of any intelligence when the bot doesn't know, or rather isn't aware, that it doesn't even have a butt to begin with.

And some school of psychology apparently argues that humans are no different. But even children usually don't end up listing whatever as their accomplishment just because someone else claimed it to be an accomplishment.

Even as a prototype, its capabilities have disrupted several industries

Has it? CNET is getting a horrible reputation from this, because it presented false information. Their article about financial savings was completely false, with false data, as shown by The Atlantic.

Which industry will get disrupted by giving out unreliable information? Outside of crypto scammers, I don't see how any business is served by ChatGPT.

And when your main customer seems to be scammers, which are now actively using ChatGPT to scam people

Wait and see. I don't think we need to wait too long.

How a tool is used isn't necessarily indicative of its capabilities.

CNet's use of AI was poor. At its present level, AI is best used to form "draft" pieces, to be edited by humans, rather than unsupervised. Reuters (and presumably AP, et al) are also using AI tools for certain types of content, to no detriment. CNet's problem is more about its current management than about AI.

That sounds eerily like Tesla, which used AI to teach its cars how to park and claimed that the AI would get better in time.

And that IS one of the major problems.

People putting too much trust and faith in a currently flawed or inappropriate tool.

And we have people white knighting the AI, and stating that all of its problems are human induced.

Hmm, only the first PCI-Express slot? Not according to the PCI-Express spec.

You put this in the wrong thread?

Nope. The article says that ChatGPT3 didn't tell you which slot to put your graphics card into. I put graphics cards in any and all PEX slots. Even PCI did that correctly.

ChatGPT just strings together pieces of text it found online.

Not randomly. It actually builds higher-level representations of the underlying information that (hopefully) gets rid of inconsistencies and errors... unless the errors are more frequent than the correct information and it can't otherwise deduce what's right. In this regard, it's really not so much worse than a human.

you quickly find out ChatGPT is just copy/pasting pieces of text.

It's not copying/pasting any more than your brain does. It actually encodes this information in its model, and resynthesizes the text as needed. A better way to describe it is memory/recall. Again, like what your brain does, when you learn facts and then try to remember them on demand.

LOL. "Don't mess with Texas." Clearly, it learned too well that Texas is the biggest, best, and most superlative!