Has anyone looked at Tom's Hardware's little minute-and-a-half video on choosing the right CPU?

1. "As long as it's current-gen, the brand difference is a wash"

a) Tom's doesn't acknowledge the problem of all the Intel CPU bugs, many of which are unpatched; fixes for some have been tried and failed.

Intel recommends you disable Hyper-Threading if you care about security.

b) Tom's doesn't acknowledge that Intel CPUs draw double the power for the same work.

c) Tom's doesn't acknowledge that AMD CPUs deliver 95% of Intel's gaming performance while offering more than double the performance for everything else at almost every price point.

2. "Clock speed is more important than core count"

a) Coincidentally, that statement favours Intel CPUs.

b) That's not the case when a CPU does more work per clock cycle.

c) AMD's CPU cores are better: higher IPC, better L1 cache, etc.

d) Intel's ring bus latency is the only thing keeping AMD behind in gaming, not clock speed.

The last 3 actually seem legitimate...

Tom's, why don't you just come right out and say it: "We support Intel."

Just buy it!

Obvious trolling but I'll play along.

1a. Tom's has numerous articles on Intel CPU bugs:

https://www.tomshardware.com/news/i...ities-in-cascade-lake-chips-and-a-new-jcc-bug

Who has the most secure processors, AMD or Intel? (www.tomshardware.com)

AMD wins 5 out of 6 of the safety tests.

1b. This article and numerous other Tom's articles mention that Intel's 14nm process is not as power-efficient as AMD's 7nm.

"The Ryzen 7 3800X has a 105W TDP and complies with the rating, according to our testing from our Ryzen 7 3800X review. However, Intel's TDP criteria is a bit different. Intel might market the i7-10700KF with a 125W TDP even if it only adheres to that when running at base clock speeds. In reality, the chip's peak power consumption would probably be far greater when operating at the boost clock speed."

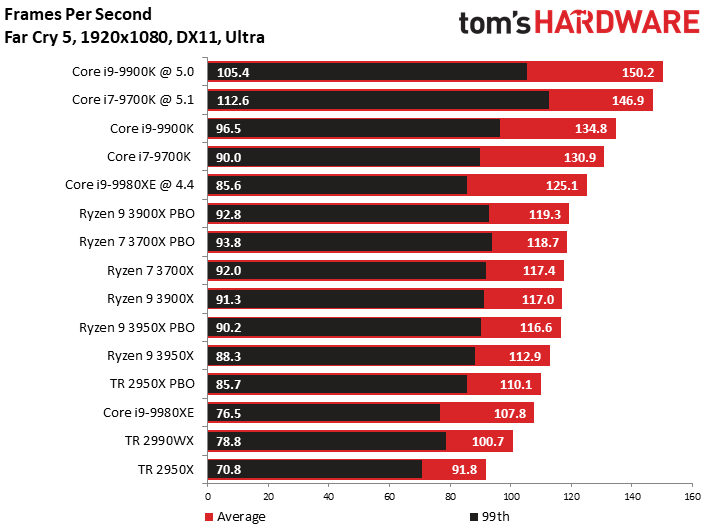
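To put the "double the power for the same work" point in numbers: energy for a job is average power multiplied by how long the job takes. Here's a minimal Python sketch with made-up wattages and run time (not figures from any review), assuming both chips finish the task in the same time:

```python
def energy_wh(avg_power_w: float, task_seconds: float) -> float:
    """Energy for one task in watt-hours: average power (W) x run time (s) / 3600."""
    return avg_power_w * task_seconds / 3600.0

# Hypothetical numbers for illustration only: the same 10-minute job on two chips.
TASK_SECONDS = 600.0
hungry_chip_wh = energy_wh(avg_power_w=200.0, task_seconds=TASK_SECONDS)    # boosting well past its TDP label
efficient_chip_wh = energy_wh(avg_power_w=100.0, task_seconds=TASK_SECONDS)  # staying near its rating

print(f"{hungry_chip_wh:.1f} Wh vs {efficient_chip_wh:.1f} Wh")
```

If one chip finishes faster, the time term shrinks too, so higher power draw doesn't automatically mean more energy per job. That's why measured power during a real workload tells you more than the TDP on the box.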

1c. Tom's benchmarks show exactly what you are saying for most games, with Far Cry 5 and a few others being Intel-dominated.

2a. For the most part, games favor clock speed, but it all depends on how a game is coded. As noted above, Far Cry 5 does appear to favor clock speed.

Having said that, most modern games are coded for at least 4 threads, so you need at least 4 threads as a bare minimum for decent performance.

2b. Architecture does matter, but at the end of the day, real-world benchmarks are how we judge how successful an architecture is.
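A rough way to see why clock speed alone doesn't settle it: single-thread throughput is roughly IPC (instructions per clock) times clock speed. A minimal Python sketch with hypothetical, normalized numbers (not measured IPC for any real chip):

```python
def per_core_throughput(ipc: float, clock_ghz: float) -> float:
    """Relative single-thread throughput: work per cycle x cycles per second (in GHz)."""
    return ipc * clock_ghz

# Hypothetical, normalized figures for illustration only (not benchmark data).
# "Chip A": higher clock, baseline IPC.
chip_a = per_core_throughput(ipc=1.00, clock_ghz=5.0)
# "Chip B": lower clock, 15% higher IPC.
chip_b = per_core_throughput(ipc=1.15, clock_ghz=4.4)

print(f"Chip A: {chip_a:.2f}, Chip B: {chip_b:.2f}")
```

Real games add memory and cache latency, thread scheduling and so on on top of this, which is why real-world benchmarks are still the final word.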

2c. That's opinion; I can't argue with it.

2d. Intel does need a solution for this.

"Intel is starting to reach the core-count barrier beyond which the ringbus has to be junked in favor of Mesh Interconnect tiles, or it will suffer the detrimental effects of ringbus latencies. "

https://www.techpowerup.com/review/intel-core-i9-9900k/3.html

I don't have a bias for Intel or AMD.

I choose the one that has the best performance per dollar for the games and other software I use.
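If you want to run the same performance-per-dollar comparison for your own games, it's just average FPS divided by price. A minimal Python sketch; the CPU names, FPS figures, and prices below are hypothetical placeholders, so plug in benchmark numbers for the games you actually play:

```python
from dataclasses import dataclass


@dataclass
class Cpu:
    name: str
    avg_fps: float    # average FPS across your own games (hypothetical here)
    price_usd: float  # street price (hypothetical here)

    def fps_per_dollar(self) -> float:
        return self.avg_fps / self.price_usd


def best_value(cpus: list[Cpu]) -> Cpu:
    """Pick the CPU with the highest FPS per dollar."""
    return max(cpus, key=lambda c: c.fps_per_dollar())


candidates = [
    Cpu("CPU X", avg_fps=140.0, price_usd=400.0),  # faster but pricier (hypothetical)
    Cpu("CPU Y", avg_fps=133.0, price_usd=330.0),  # ~95% of the FPS for less money (hypothetical)
]
pick = best_value(candidates)
print(pick.name, round(pick.fps_per_dollar(), 3))
```

Swap FPS for render time, compile time, or whatever metric matters for your other software, as long as higher means better.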