Keep in mind that a shared L3 cache on a CPU corresponds to the level of cache that's shared by and accessible to all CPU cores on the die

It's not such a strict definition, actually. For instance, AMD limits the scope of L3 to a single chiplet. That makes it a little tricky to compare raw L3 quantities between EPYC and Xeon.

Emerald Rapids' L3 cache improvement still managed to push Intel's Xeon CPUs into competition with EPYC Bergamo chips while technically being a refresh cycle, with solid wins in most AI workloads we tested, and at least comparable performance in most other benchmarks.

Eh, not really. The wins in the AI benchmarks were largely due to the presence of AMX in the Xeons. With that, even Sapphire Rapids could outperform Zen 4 EPYC (Genoa) on such tasks.

As for the other benchmarks you list, some exhibit poor multi-core scaling, which is why Phoronix excluded them from his test suite. If you check the geomeans, on the last page of this article, it's a bloodbath (and the blood is blue):

It's telling that AMD didn't even need raw core-count to win. Look at the EPYC 9554 vs. Xeon 8592+.

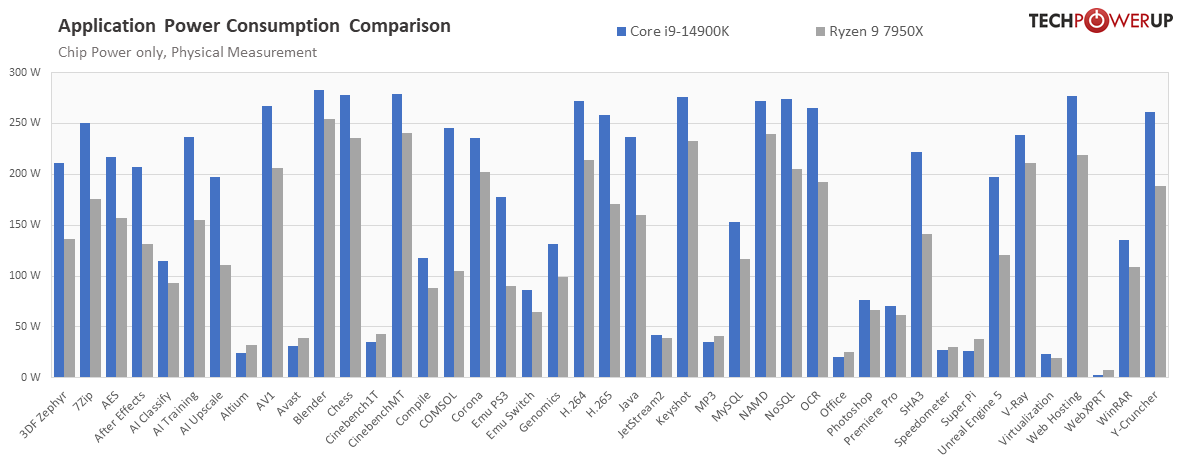

Both are 64-core CPUs, rated at 360 W (EPYC) and 350 W (Xeon). The EPYC beats the Xeon by 3.6% in a 2P configuration and loses by just 2.2% in a 1P setup. That speaks volumes to how well AMD executed on this generation. Also, the EPYC averaged just 227.12 and 377.42 W in 1P and 2P configurations, respectively, while the Xeon burned 289.52 and 556.83 W. So, that seeming 10 W TDP advantage for the EPYC isn't decisive, in actual practice.
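
If you want to put a rough number on that, here's a quick sketch that turns the figures above into a perf-per-watt ratio. It assumes "beats by 3.6%" means the EPYC scores 1.036x the Xeon and "loses by 2.2%" means it scores 0.978x; the function and dictionary names are just mine, for illustration.

```python
# Figures quoted in the post: geomean deltas and average power draw.
# Assumption: "beats by 3.6%" -> EPYC = 1.036 x Xeon,
#             "loses by 2.2%" -> EPYC = 0.978 x Xeon.
RESULTS = {
    "1P": {"epyc_rel_perf": 1 - 0.022, "epyc_watts": 227.12, "xeon_watts": 289.52},
    "2P": {"epyc_rel_perf": 1 + 0.036, "epyc_watts": 377.42, "xeon_watts": 556.83},
}


def perf_per_watt_ratio(epyc_rel_perf, epyc_watts, xeon_watts):
    """EPYC performance per watt divided by Xeon performance per watt."""
    power_ratio = epyc_watts / xeon_watts  # < 1 means the EPYC draws less
    return epyc_rel_perf / power_ratio


for config, r in RESULTS.items():
    power_ratio = r["epyc_watts"] / r["xeon_watts"]
    ratio = perf_per_watt_ratio(**r)
    print(f"{config}: EPYC draws {power_ratio:.0%} of the Xeon's power, "
          f"perf/W ratio {ratio:.2f}x")
```

Under those assumptions, the EPYC comes out roughly 1.25x (1P) and 1.5x (2P) ahead on performance per watt, which is a far bigger gap than the TDP ratings suggest.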

BTW, since this article is about cache, consider that the EPYC 9554 has just 256 MiB of L3 cache, while the Xeon 8592+ has 320 MiB. So, even a decisive cache advantage wasn't enough to put Emerald Rapids solidly ahead of Genoa, on a per-core basis.
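
To make the cache comparison concrete, here's a small sketch of the per-core arithmetic, plus the per-chiplet view mentioned earlier. The L3 totals and core counts are the ones quoted above; the 8-chiplet layout for the EPYC 9554 is my assumption and isn't stated anywhere in this thread, so treat that part as illustrative.

```python
CORES = 64  # both CPUs, per the post
L3_MIB = {"EPYC 9554": 256, "Xeon 8592+": 320}  # totals quoted in the post

# Assumption (not from the post): the EPYC 9554 spreads its L3 evenly
# over 8 chiplets, and a core only hits L3 on its own chiplet.
EPYC_CHIPLETS = 8


def l3_per_core(total_mib, cores):
    """Average L3 capacity per core, in MiB."""
    return total_mib / cores


def l3_visible_to_core(total_mib, l3_domains):
    """L3 a single core can reach, if L3 is split evenly across domains."""
    return total_mib / l3_domains


for name, total in L3_MIB.items():
    print(f"{name}: {l3_per_core(total, CORES):.1f} MiB of L3 per core")

print("EPYC 9554, L3 visible to one core:",
      l3_visible_to_core(L3_MIB["EPYC 9554"], EPYC_CHIPLETS), "MiB")
```

That works out to 4 MiB per core on the EPYC vs. 5 MiB per core on the Xeon, and under the chiplet assumption any single EPYC core only sees 32 MiB of the total, which is why comparing the raw L3 totals is tricky.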