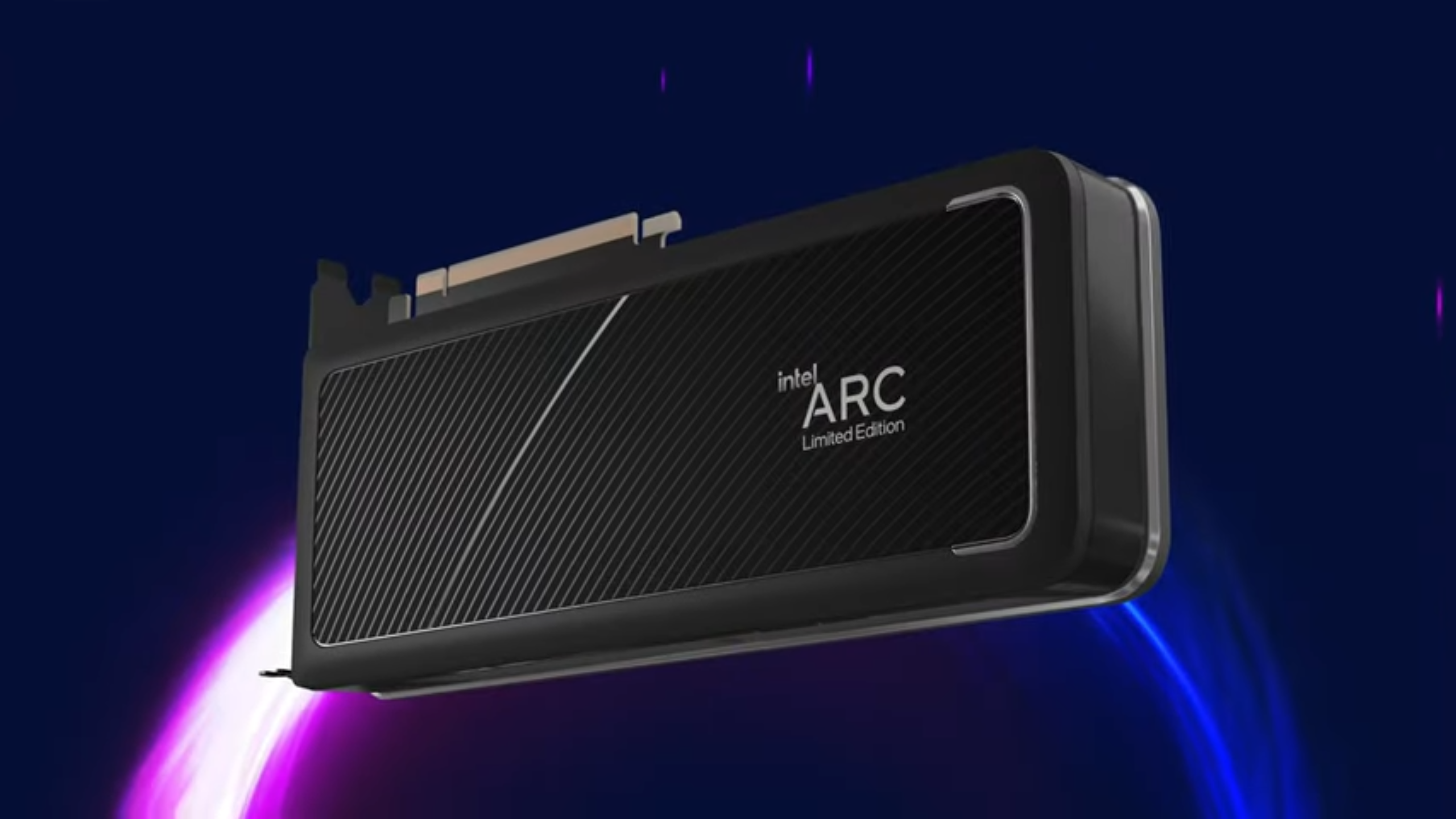

This weekend, Raja Koduri shrugged off claims that Intel is winding down development of its discrete Arc graphics cards. He admits difficulties with the ‘first gen’ but remains puzzled by the rumors.

Intel's Raja Koduri Shrugs Off Rumors of Arc Demise : Read more
