Is the RTX 2080 Ti Overkill for 2560 x 1440 at 144-165 Hz?

- Thread starter Alyus

- Start date

Solution

That flagship GPU is aimed at gamers who want a "close to reality" visual experience and at content creators who want faster results from a GPU-based compute machine; this market segment is relatively marginal.

It's not fair to say that software development simply follows hardware requirements. Nvidia's Tensor cores were their solution for "cheap" deep learning (convolutional neural networks), and real-time ray tracing was their solution for "less power consumption with better image fidelity" compared to long-used anti-aliasing sampling.

Time will tell whether AI-driven rendering and real-time ray tracing become the new standard in the gaming industry. It would be good to shift CPU work to the GPU, so that maybe a low-end CPU could be paired with a flagship GPU.

ThatVietGuy :

Maybe

That would be great if every game were maxed out in terms of frames on my 2560 x 1440 165 Hz monitor; I don't recall any single Ti card that could max out every game at 1440p @ 165 Hz.

The 1080 Ti can only play Shadow of the Tomb Raider on Ultra at around 79 FPS at 1440p; we'll just have to wait to see how the 2080 Ti performs in a few weeks.

cpmackenzi

Distinguished

Read any thread asking about how the 2080 will perform. We simply don't know yet. Wait a week for the independent benchmarks.

pete_101 :

Seems to me you've already answered your own question: if it can do 60fps at 4K then you'd expect about 135fps at 1440p, so no, it's not overkill.

That's what I thought; I wouldn't think any single card would be overkill, ever, especially at 1440p. 100 FPS is certainly better than 60 FPS by far. However, 144 or higher is even better.
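For anyone wondering where the "about 135fps" figure comes from, it looks like plain pixel-count scaling. Here is a rough sketch of that arithmetic, assuming "4K" means 3840 x 2160 and that frame rate is inversely proportional to the number of pixels rendered. That second assumption is a simplification (it ignores CPU limits and anything else that doesn't scale with resolution), and the function names are made up for illustration.

```python
def pixels(width, height):
    """Total pixels in one frame at the given resolution."""
    return width * height


def scaled_fps(fps, from_res, to_res):
    """Estimate fps at to_res from a known fps at from_res.

    Assumes frame rate is inversely proportional to pixel count,
    which is only a rough rule of thumb for GPU-bound games.
    """
    return fps * pixels(*from_res) / pixels(*to_res)


UHD_4K = (3840, 2160)     # assumed meaning of "4K" here
QHD_1440P = (2560, 1440)

estimate = scaled_fps(60, UHD_4K, QHD_1440P)
print(f"{estimate:.0f} fps")  # 135 fps
```

4K has 2.25 times the pixels of 1440p, so 60 x 2.25 = 135. Real results will usually land below that once the CPU or the game engine becomes the limit.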

Alyus :

ThatVietGuy :

Maybe

That would be great if every game were maxed out in terms of frames on my 2560 x 1440 165 Hz monitor; I don't recall any single Ti card that could max out every game at 1440p @ 165 Hz.

The 1080 Ti can only play Shadow of the Tomb Raider on Ultra at around 79 FPS at 1440p; we'll just have to wait to see how the 2080 Ti performs in a few weeks.

I get better than that with my GTX 1080Ti on Ultra settings: playing with everything maxed out, I see between 80 and 90 FPS at 1440P. It does dip into the 70s once in a while.

I could lower the anti-aliasing from SMAAT2X and the FPS would go up a lot. It would be over 100 FPS....

It would still be the Ultra preset too...

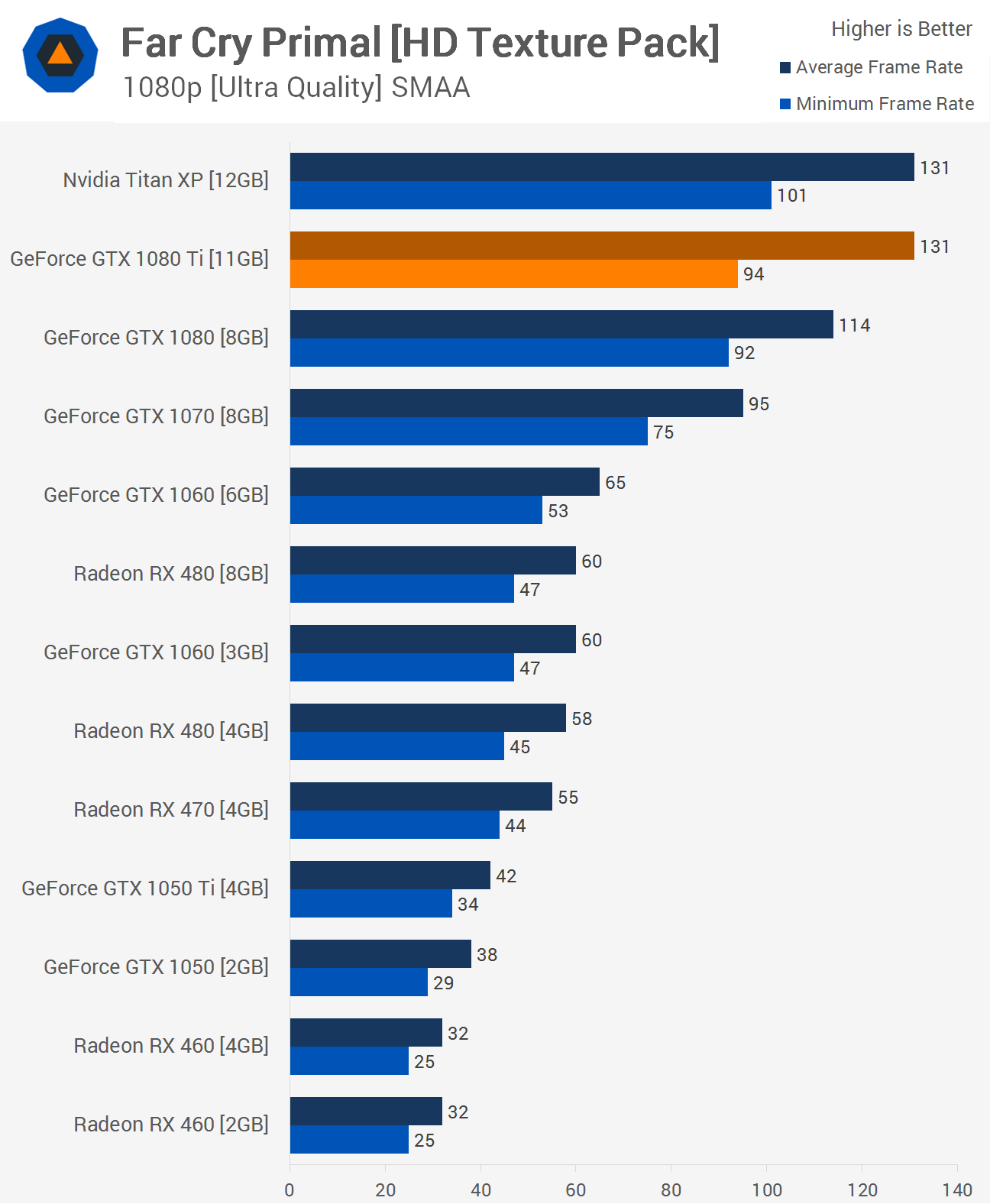

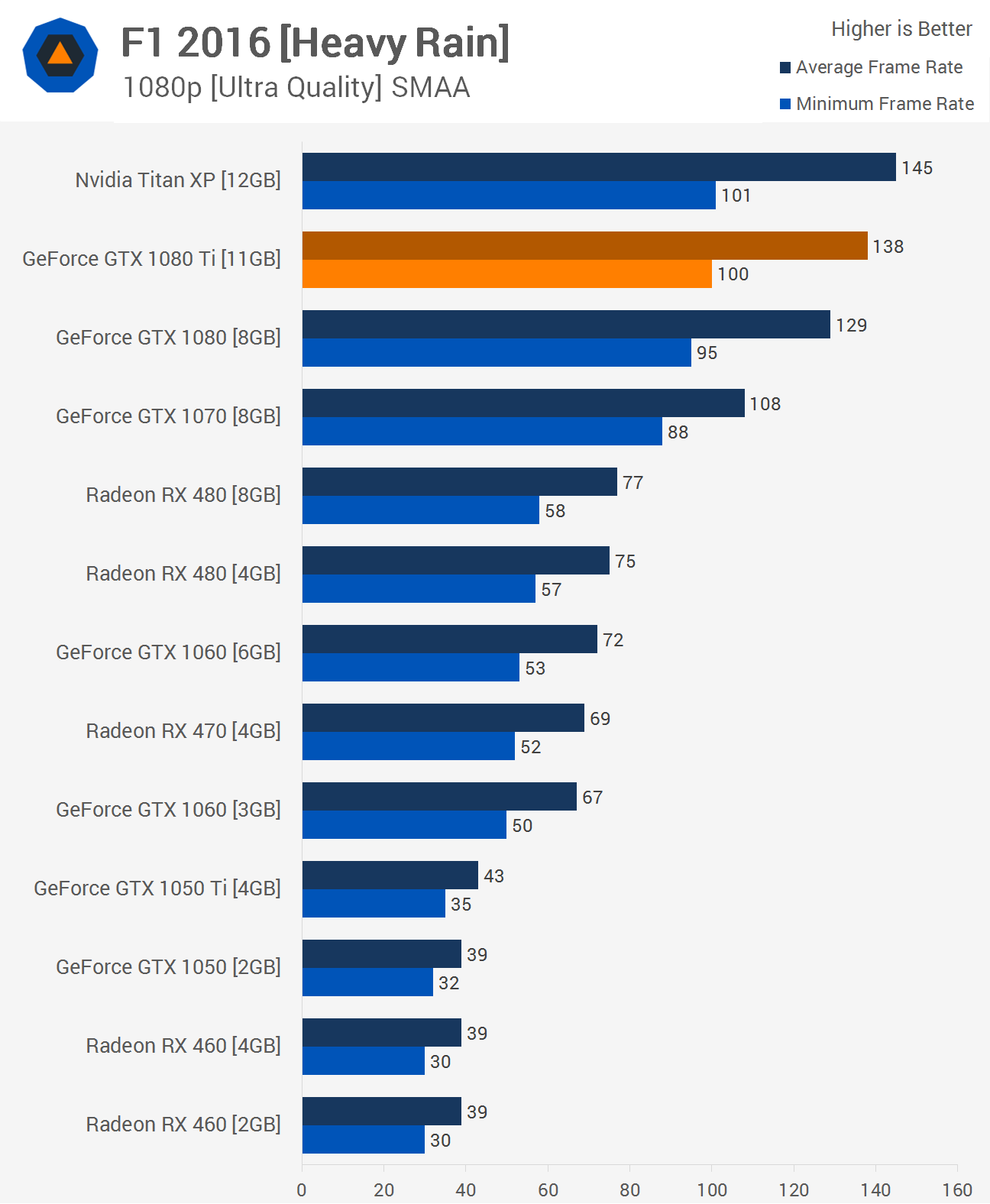

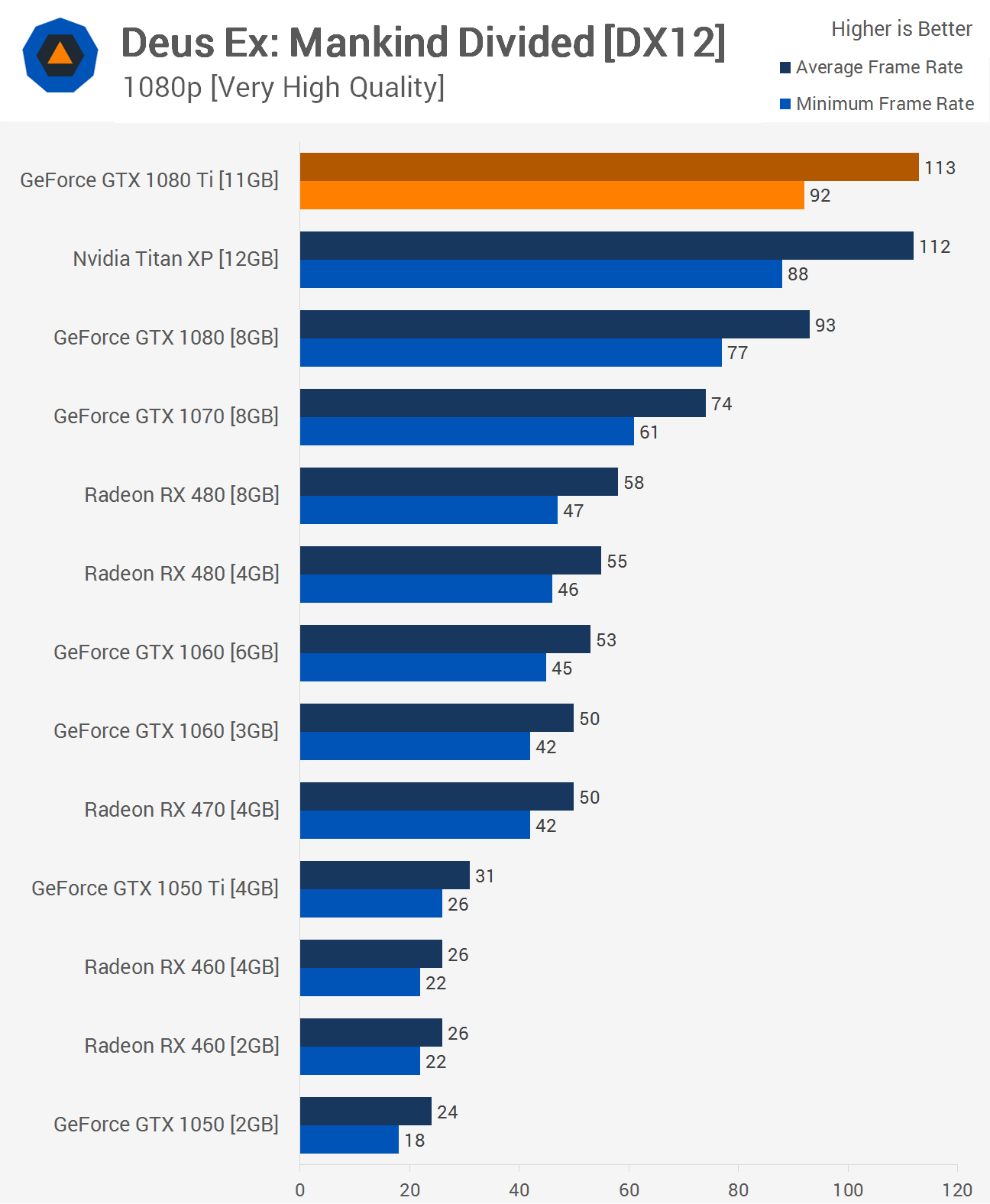

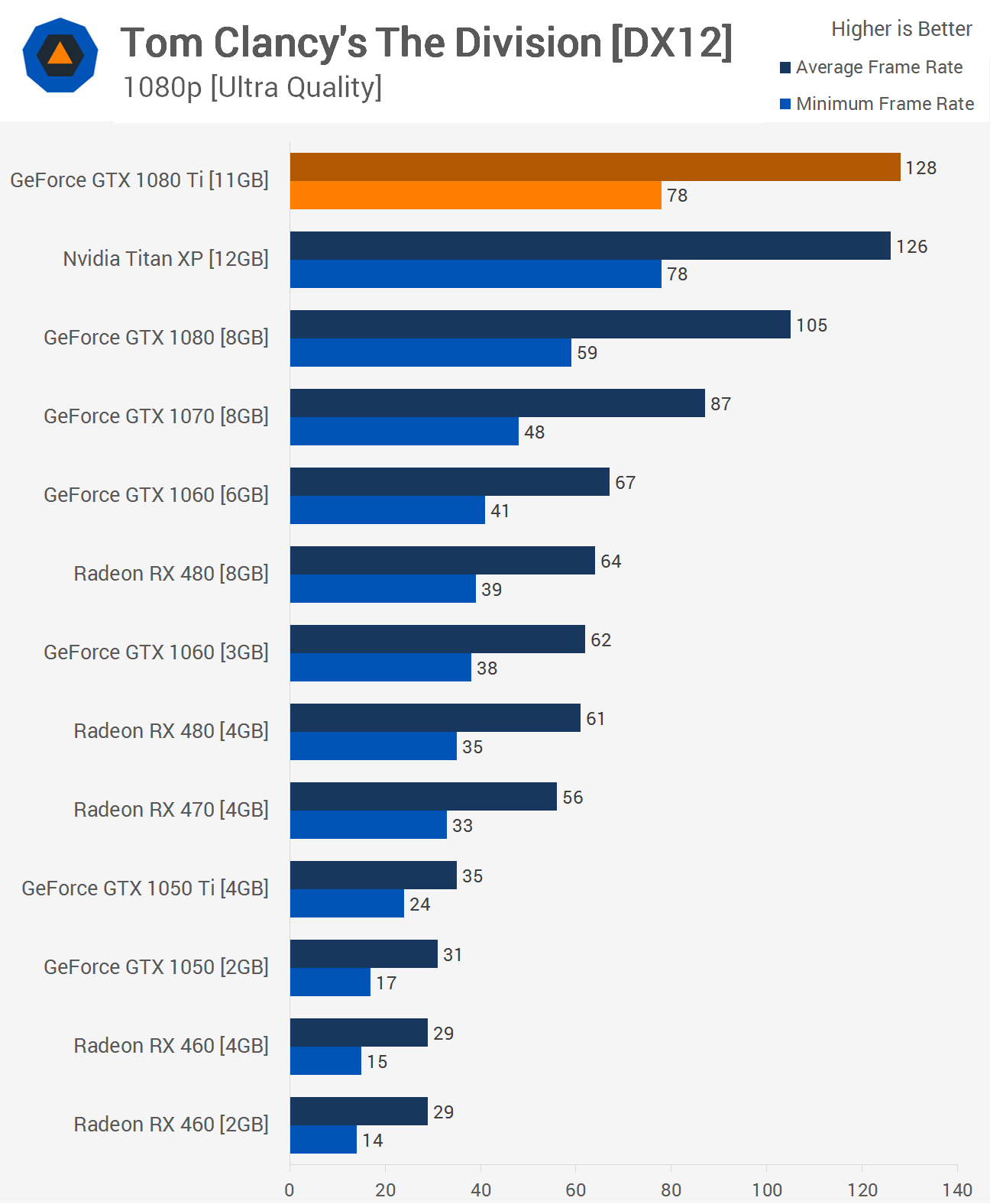

I would think it’s going to be a good pairing but wait for the reviews. There are a few games that a 1080Ti cannot average 144fps or above at 1080p ultra settings, so with that in mind that’s why I doubt a 2080Ti will be overkill for 1440p/144fps/ultra.

sizzling :

I would think it’s going to be a good pairing but wait for the reviews. There are a few games that a 1080Ti cannot average 144fps or above at 1080p ultra settings, so with that in mind that’s why I doubt a 2080Ti will be overkill for 1440p/144fps/ultra.

SOTR is a monster of a game with everything turned up to max.

And that is putting it lightly.

But the graphics are incredible.

No, I doubt a 2080Ti would be overkill at 1440P/Ultra....

I know, it all depends on the system and the games. 1080P leans a lot more on the CPU than 1440P, though; at 1440P the load shifts more to the GPU.

I play at both 1080P (GTX 1080 and 8700K) and 1440P (GTX 1080Ti and 8086K), yeah I have 2 machines.

I play with everything maxed out because I can. 😉

With every new generation of cards, people get excited that their new card can play at 4K without lowering many, if any, settings, and within a few months they are back in the same situation as always. The top-end cards are generally good to go at 2560x1440 with only some reduction of settings, and it will remain that way for generations to come.

The cycle is very consistent:

1) GPU manufacturers make a faster card

2) the game developers make more demanding games.

bystander :

With every new generation of cards, people get excited that their new card can play at 4K without lowering many, if any, settings, and within a few months they are back in the same situation as always. The top-end cards are generally good to go at 2560x1440 with only some reduction of settings, and it will remain that way for generations to come.

The cycle is very consistent:

1) GPU manufacturers make a faster card

2) the game developers make more demanding games.

Developers prioritize developing games for consoles such as the PS4 and XB1; they earn a living by selling games, and to maximize their sales they design games for the systems the majority of gamers possess. Those with above-average systems can increase the game settings.

Most PC gamers are casual, and the average casual gamer will not spend $1000 or more on a single GPU; my guess is that gaming enthusiasts (gamers who spend $2000+ on their gaming PC) are outnumbered 2/10. The data is easily found by looking at Steam's stats on its customers' hardware. Only 1.5% of Steam gamers possess the 1080 Ti, and 1080p is dominant.

Alyus :

bystander :

With every new generation of cards, people get excited that their new card can play at 4K without lowering many, if any, settings, and within a few months they are back in the same situation as always. The top-end cards are generally good to go at 2560x1440 with only some reduction of settings, and it will remain that way for generations to come.

The cycle is very consistent:

1) GPU manufacturers make a faster card

2) the game developers make more demanding games.

Developers prioritize developing games for consoles such as the PS4 and XB1; they earn a living by selling games, and to maximize their sales they design games for the systems the majority of gamers possess. Those with above-average systems can increase the game settings.

Most PC gamers are casual, and the average casual gamer will not spend $1000 or more on a single GPU; my guess is that gaming enthusiasts (gamers who spend $2000+ on their gaming PC) are outnumbered 3/10. The data is easily found by looking at Steam's stats on its customers' hardware.

Most people buy prebuilt machines and not exactly expensive ones at that.

As far as gaming PCs go, nothing much has changed over the years. MOST people buy on the cheap (the cheapest crap they can buy), so they end up with lower-midrange-or-worse machines, or old ones of the same class, that they then try to play games on.

Then they come to places like this and ask why their POS box won't play games worth a crap.

We get the old "I want to play (enter new AAA game here) at good frame rates and don't want to spend any money."

Then complain when people tell them what they really need.

It doesn't help that some morons with YT channels cater to those types of people and post BS like low-end machines playing the newest games. That, and those POS refurbished SFF systems that idiots seem to buy thinking they can turn them into gaming machines.

Then they come here and ask why THAT POS won't work the way the one in the BS YT video did?

And then complain when we tell them what reality is.

Realistically people don't need to spend $3,000 on a gaming machine, but they do need to be realistic based on what they want to do.

Alyus :

bystander :

With every new generation of cards, people get excited that their new card can play at 4K without lowering many, if any, settings, and within a few months they are back in the same situation as always. The top-end cards are generally good to go at 2560x1440 with only some reduction of settings, and it will remain that way for generations to come.

The cycle is very consistent:

1) GPU manufacturers make a faster card

2) the game developers make more demanding games.

Developers prioritize developing games for consoles such as the PS4 and XB1; they earn a living by selling games, and to maximize their sales they design games for the systems the majority of gamers possess. Those with above-average systems can increase the game settings.

Most PC gamers are casual, and the average casual gamer will not spend $1000 or more on a single GPU; my guess is that gaming enthusiasts (gamers who spend $2000+ on their gaming PC) are outnumbered 2/10. The data is easily found by looking at Steam's stats on its customers' hardware. Only 1.5% of Steam gamers possess the 1080 Ti, and 1080p is dominant.

It doesn't matter; the devs will still present the user with settings that give a good balance across the systems that are available. Lower-end systems play on lower settings, and the devs always adjust the high-end settings to challenge the high-end systems. Nothing ever changes. The 2080ti will handle new games like the 1080ti handled the new games during its time at the top.

bystander :

Alyus :

bystander :

With every new generation of cards, people get excited that their new card can play at 4K without lowering many, if any, settings, and within a few months they are back in the same situation as always. The top-end cards are generally good to go at 2560x1440 with only some reduction of settings, and it will remain that way for generations to come.

The cycle is very consistent:

1) GPU manufacturers make a faster card

2) the game developers make more demanding games.

Developers prioritize developing games for consoles such as the PS4 and XB1; they earn a living by selling games, and to maximize their sales they design games for the systems the majority of gamers possess. Those with above-average systems can increase the game settings.

Most PC gamers are casual, and the average casual gamer will not spend $1000 or more on a single GPU; my guess is that gaming enthusiasts (gamers who spend $2000+ on their gaming PC) are outnumbered 2/10. The data is easily found by looking at Steam's stats on its customers' hardware. Only 1.5% of Steam gamers possess the 1080 Ti, and 1080p is dominant.

It doesn't matter; the devs will still present the user with settings that give a good balance across the systems that are available. Lower-end systems play on lower settings, and the devs always adjust the high-end settings to challenge the high-end systems. Nothing ever changes. The 2080ti will handle new games like the 1080ti handled the new games during its time at the top.

Developers design for what the majority of systems can handle. The hardware people have dictates the direction game developers can go, not the other way around. Here's an example, though not a perfect one. Just prior to digital downloads, did you see cassettes and 8-track tapes being the major medium? No, only an idiot would have recorded his music on those media, because only a minuscule number of people in the world had players for them.

Alyus :

bystander :

Alyus :

bystander :

With every new generation of cards, people get excited that their new card can play at 4K without lowering many, if any, settings, and within a few months they are back in the same situation as always. The top-end cards are generally good to go at 2560x1440 with only some reduction of settings, and it will remain that way for generations to come.

The cycle is very consistent:

1) GPU manufacturers make a faster card

2) the game developers make more demanding games.

Developers prioritize developing games for consoles such as the PS4 and XB1; they earn a living by selling games, and to maximize their sales they design games for the systems the majority of gamers possess. Those with above-average systems can increase the game settings.

Most PC gamers are casual, and the average casual gamer will not spend $1000 or more on a single GPU; my guess is that gaming enthusiasts (gamers who spend $2000+ on their gaming PC) are outnumbered 2/10. The data is easily found by looking at Steam's stats on its customers' hardware. Only 1.5% of Steam gamers possess the 1080 Ti, and 1080p is dominant.

It doesn't matter; the devs will still present the user with settings that give a good balance across the systems that are available. Lower-end systems play on lower settings, and the devs always adjust the high-end settings to challenge the high-end systems. Nothing ever changes. The 2080ti will handle new games like the 1080ti handled the new games during its time at the top.

Developers design for what the majority of systems can handle. The hardware people have dictates the direction game developers can go, not the other way around. Here's an example, though not a perfect one. Just prior to digital downloads, did you see cassettes and 8-track tapes being the major medium? No, only an idiot would have recorded his music on those media, because only a minuscule number of people in the world had players for them.

small edit: Developers design for what the majority of the systems can handle, as well as for what the low end can handle, and what the high end can handle. Low is for low end systems, medium for average systems, high for high end, and Ultra for the highest end.

The result is still the same. The highest end, the average and low end, handle the latest games the same in every generation. It is directly related to the fact you just presented. The developers will design their games around the current hardware (they often design around what is expected to be released when their games are released, and they often tweak the available settings based on how things pan out at release).

dederedmi5plus

Prominent

That flagship GPU is aimed at gamers who want a "close to reality" visual experience and at content creators who want faster results from a GPU-based compute machine; this market segment is relatively marginal.

It's not fair to say that software development simply follows hardware requirements. Nvidia's Tensor cores were their solution for "cheap" deep learning (convolutional neural networks), and real-time ray tracing was their solution for "less power consumption with better image fidelity" compared to long-used anti-aliasing sampling.

Time will tell whether AI-driven rendering and real-time ray tracing become the new standard in the gaming industry. It would be good to shift CPU work to the GPU, so that maybe a low-end CPU could be paired with a flagship GPU.
