The difference in clock speed is 300MHz at both standard speed and Turbo Boost speed. At standard speed (2.7GHz vs 2.4GHz) the performance gain is 12.5%; at Turbo Boost speed (3.7GHz vs 3.4GHz) it is 8.8%.

However, the max Turbo Boost speed only applies when a single core is in use. For argument's sake, let's assume that with 2 cores in use the max Turbo Boost speed would be 3.4GHz vs 3.1GHz, and with all cores in use it would be 3.2GHz vs 2.9GHz. Those would likely be the clock speeds of the CPUs when playing games, and on average that works out to about a 10% increase in raw performance.
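For anyone who wants to check the percentages, here is a quick sketch of the arithmetic. The 2-core and all-core Turbo Boost clocks are the assumed values from the paragraph above, not published specs, and `pct_gain` is just a helper name made up for this example.

```python
def pct_gain(fast_ghz: float, slow_ghz: float) -> float:
    """Percentage clock speed advantage of the faster CPU over the slower one."""
    return (fast_ghz / slow_ghz - 1) * 100

# (faster GHz, slower GHz) pairs; the multi-core turbo values are assumptions
scenarios = {
    "standard": (2.7, 2.4),
    "turbo, 1 core": (3.7, 3.4),
    "turbo, 2 cores (assumed)": (3.4, 3.1),
    "turbo, all cores (assumed)": (3.2, 2.9),
}

gains = {name: pct_gain(fast, slow) for name, (fast, slow) in scenarios.items()}
for name, gain in gains.items():
    print(f"{name}: {gain:.1f}%")

# Average of the two multi-core scenarios, which are the likely gaming clocks
gaming_avg = (
    gains["turbo, 2 cores (assumed)"] + gains["turbo, all cores (assumed)"]
) / 2
print(f"gaming average: {gaming_avg:.1f}%")
```

The two assumed multi-core cases come out at roughly 9.7% and 10.3%, which is where the "about 10%" figure comes from.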

See the chart below for CPU scaling in Skyrim. Look at the performance of the i5-2500k and the i7-2600k, which differ by 100MHz.

The 100MHz difference represents a 3% increase in clock speed for the i7. The i5-2500k gets 67 FPS while the i7-2600k gets 70 FPS; that is a 4.5% increase in performance. I would assume that the additional increase in actual performance (beyond 3%) is due to some Windows processes taking advantage of Hyper-Threading on the i7. Therefore, you can see an increase in performance as long as the game is dependent on the CPU's performance. Most games are not.
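The same comparison as a small sketch. The 3.3GHz and 3.4GHz figures are the stock base clocks of the i5-2500k and i7-2600k, which the post implies with "100MHz difference" but does not state outright, so treat them as an assumption; the FPS numbers are the ones quoted above.

```python
def pct_gain(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1) * 100

# Assumed stock base clocks in GHz (i7-2600k vs i5-2500k)
clock_gain = pct_gain(3.4, 3.3)

# Skyrim FPS quoted in the post (i7-2600k vs i5-2500k)
fps_gain = pct_gain(70, 67)

# Whatever the clock difference alone does not account for
unexplained = fps_gain - clock_gain

print(f"clock gain: {clock_gain:.1f}%")
print(f"FPS gain:   {fps_gain:.1f}%")
print(f"beyond clock speed: {unexplained:.1f} percentage points")
```

The leftover of roughly 1.4 percentage points is the part I am guessing comes from Hyper-Threading; with only a 3 FPS gap, rounding in the chart could also account for some of it.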

It is interesting to see that the Core i3-2120's performance is lower than the Core i5-2500k. That indicates Skyrim can take advantage of more than 2 cores.

Below are the results of CPU benchmarks for Battlefield 3 (single player). The difference in performance between the i5 and i7 is 1 FPS, which represents only a 1.5% increase in performance.

Below is Crysis 2, also with a 1 FPS difference. The performance increase from 78 to 79 FPS is about 1.3%, so it is less sensitive to CPU speed than Battlefield 3.

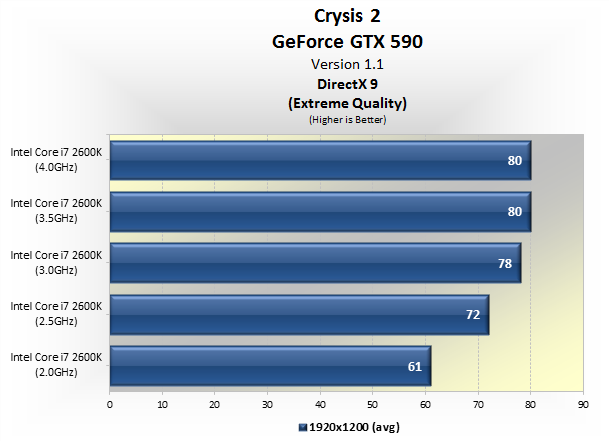

The next chart shows that the difference between an i7 clocked at 3.5GHz and at 4.0GHz is 0 FPS.

Lastly, below is a CPU performance chart for Crysis. In this case, let's look at the i7-3770k and the i5-3470; the difference in clock speed is 300MHz, or about 9.4%. Looking at the performance numbers, the i7-3770k gets 64 FPS while the i5-3470 gets 60 FPS, which is a difference of 6.7%. Note that the graphics are set to medium quality rather than max quality, so at max quality the performance gain could be smaller.
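One rough way to express how CPU-bound a game is: divide the FPS gain by the clock speed gain. This is only a back-of-the-envelope sketch; `scaling_ratio` is a made-up helper, and the 3.5GHz and 3.2GHz values are the stock base clocks of the i7-3770k and i5-3470, assumed here because the post only gives the 300MHz gap.

```python
def pct_gain(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new / old - 1) * 100

def scaling_ratio(fps_new: float, fps_old: float,
                  ghz_new: float, ghz_old: float) -> float:
    """FPS gain divided by clock gain; 1.0 would mean FPS scales 1:1 with clock."""
    return pct_gain(fps_new, fps_old) / pct_gain(ghz_new, ghz_old)

# Crysis at medium quality: i7-3770k (64 FPS) vs i5-3470 (60 FPS),
# with assumed base clocks of 3.5GHz and 3.2GHz
clock_gain = pct_gain(3.5, 3.2)
fps_gain = pct_gain(64, 60)
ratio = scaling_ratio(64, 60, 3.5, 3.2)

print(f"clock gain: {clock_gain:.1f}%")
print(f"FPS gain:   {fps_gain:.1f}%")
print(f"scaling ratio: {ratio:.2f}")
```

A ratio around 0.7 means only about 70% of the extra clock speed shows up as extra FPS here, and that share would likely shrink as the graphics settings go up and the GPU becomes the bottleneck.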

If squeezing out every last FPS is important to you, then paying an extra $150 for that 10% raw performance gain is not that bad. At the same time, it does not represent good value for gaming performance alone.

Personally, I would be willing to pay the extra $150 as long as the laptop is still within my budget, because I also encode video from time to time and the increase in performance matters more to me for that purpose than for gaming.