
Deleted member 2731765

Guest

It appears this announcement came out of nowhere, but this looks like a direct response to the recently announced AMD RSR tech, IMO.

NVIDIA has dropped some unexpected news: a new graphics-enhancing feature dubbed DLDSR (Deep Learning Dynamic Super Resolution) will go live with the next Game Ready driver. It's exactly what it sounds like: an AI-powered version of the Dynamic Super Resolution (DSR) feature that has been available in the NVIDIA Control Panel for several years.

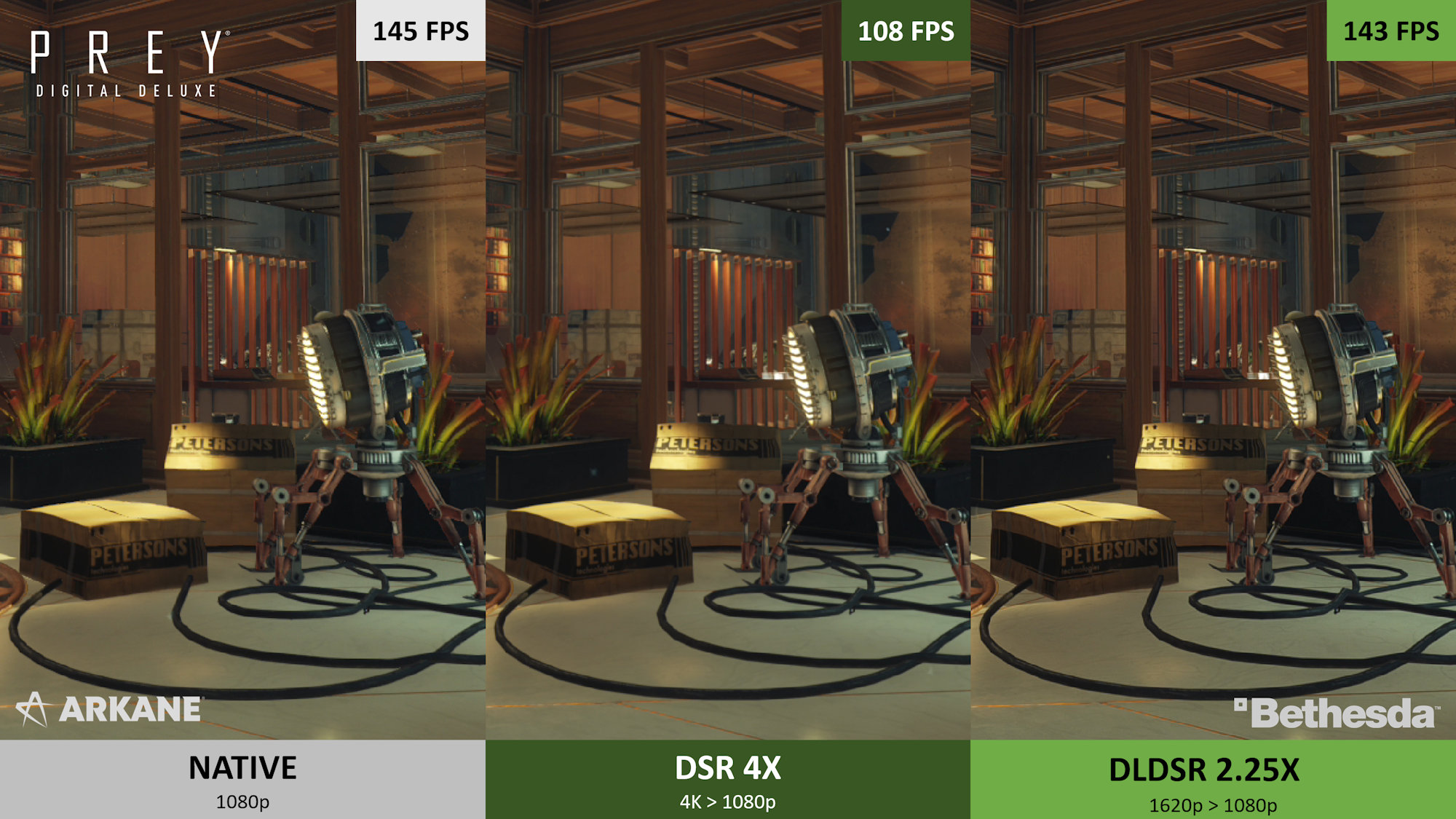

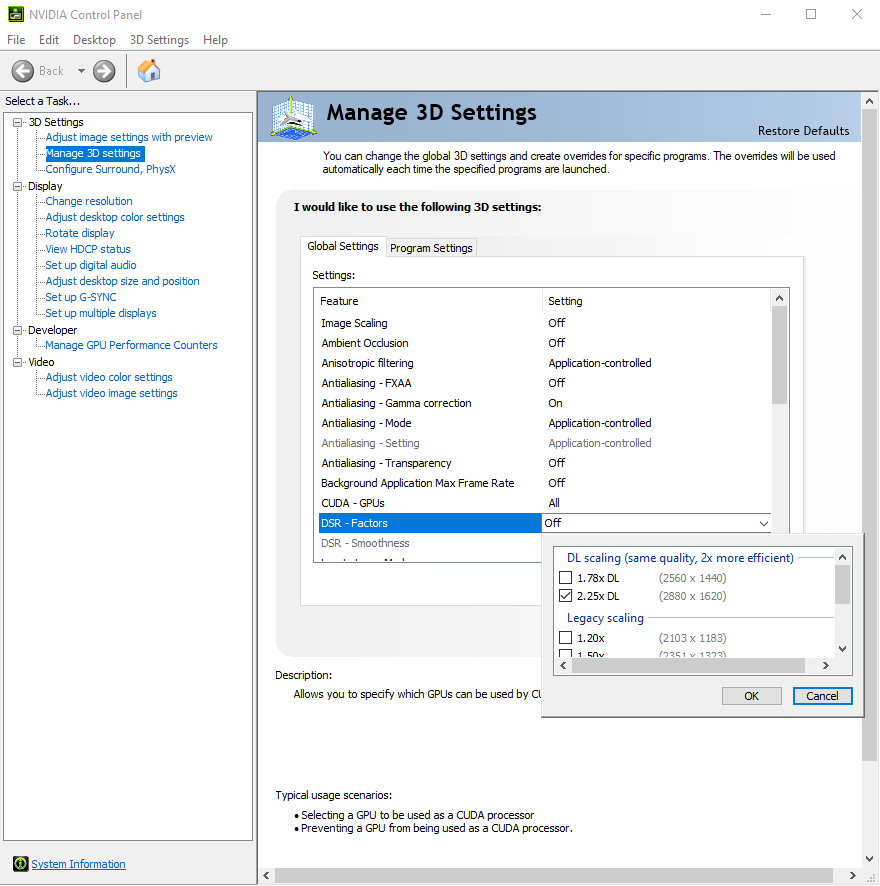

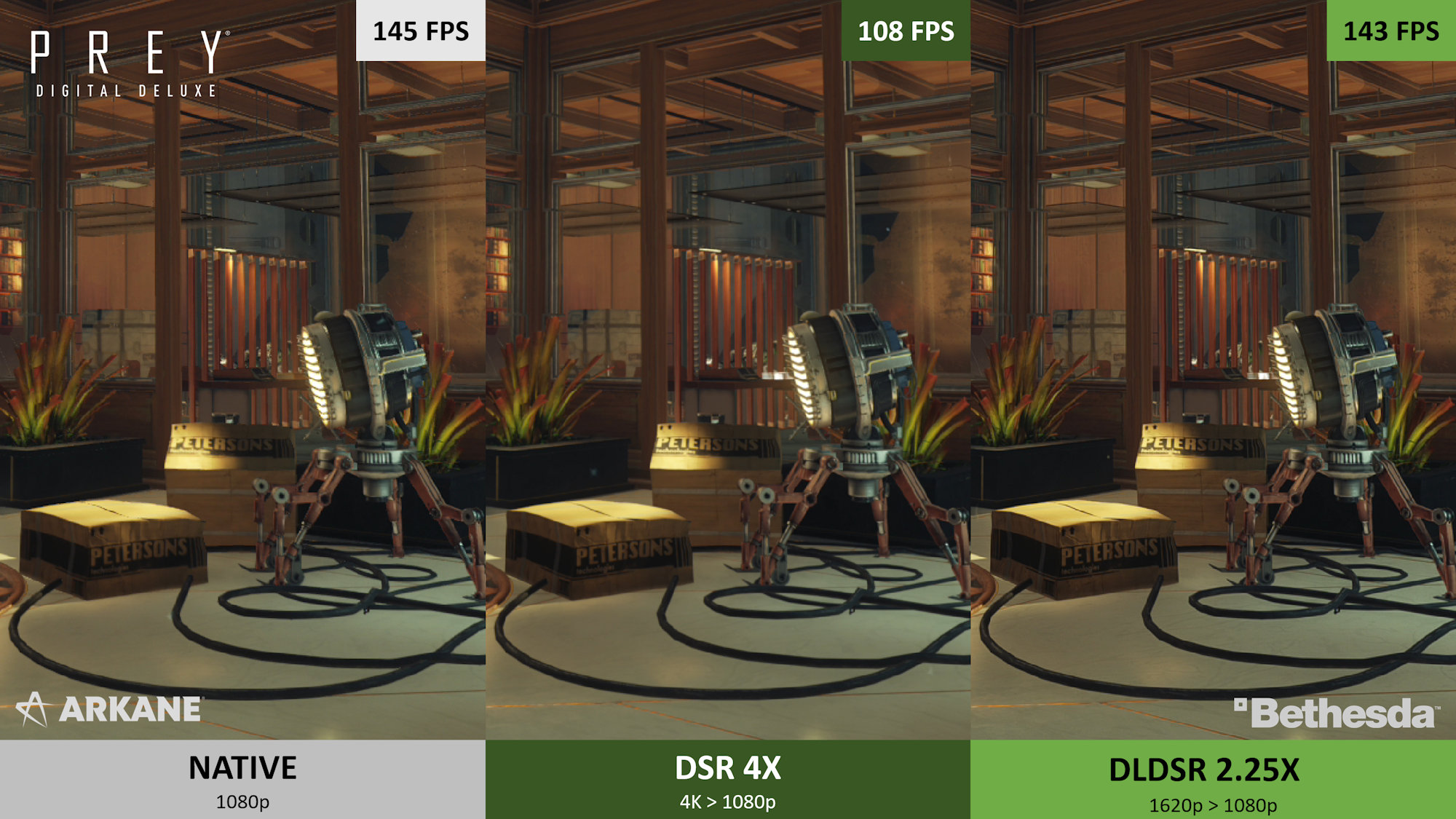

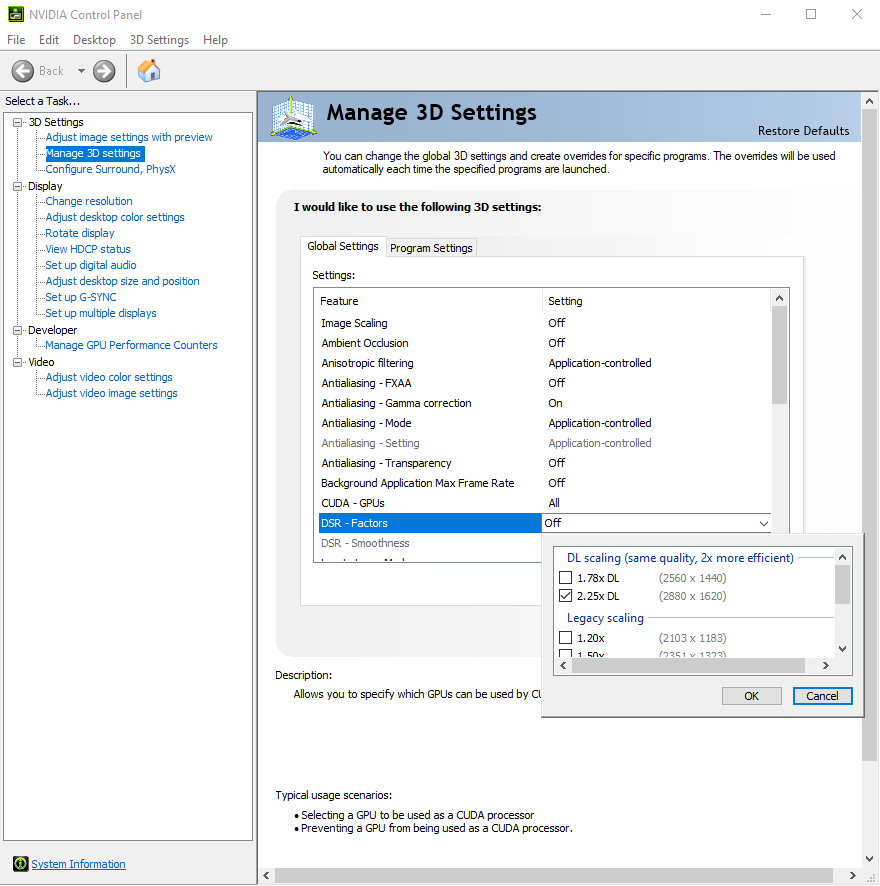

According to NVIDIA, DLDSR could be up to twice as efficient as DSR while maintaining similar quality. In the example image in this post, Prey runs at nearly the same frame rate as native 1080p while actually rendering at 1620p for crisper definition. DLDSR is powered by Tensor Cores, so it will be available to GeForce RTX owners, and it should work in 'most' games.
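For context, 1620p is exactly what a 2.25x factor gives on a 1080p display: DSR factors multiply the total pixel count, so each axis is scaled by the square root of the factor. Here is a quick Python sketch of that arithmetic, assuming a 1920x1080 native resolution (the post only says "1080p"). The helper name `dsr_render_resolution` is made up for illustration:

```python
import math


def dsr_render_resolution(width: int, height: int, factor: float) -> tuple[int, int]:
    """Internal render resolution for a DSR-style factor.

    Assumption for illustration: the factor multiplies the total pixel
    count, so each axis is scaled by sqrt(factor).
    """
    axis_scale = math.sqrt(factor)
    return round(width * axis_scale), round(height * axis_scale)


native = (1920, 1080)  # assumed 1080p monitor
for factor in (2.25, 4.0):
    w, h = dsr_render_resolution(*native, factor)
    ratio = (w * h) / (native[0] * native[1])
    print(f"{factor}x -> {w}x{h} ({ratio:.2f}x the pixels)")
# 2.25x -> 2880x1620 (2.25x the pixels)
# 4.0x -> 3840x2160 (4.00x the pixels)
```

The same math puts DSR 4X at 3840x2160, so if 2.25X really looks comparable, it is the much cheaper setting to render.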

Additionally, NVIDIA partnered with renowned ReShade modder Pascal Gilcher (also known as Marty McFly) to implement modified versions of his depth-based filters through GeForce Experience's Freestyle overlay. That includes the popular ray-traced global illumination shader.


Advanced Freestyle Filters:

- SSRTGI (Screen Space Ray Traced Global Illumination), commonly known as the “Ray Tracing ReShade Filter”, enhances lighting and shadows of your favorite titles to create a greater sense of depth and realism.

- SSAO (Screen Space Ambient Occlusion) emphasizes the appearance of shadows near the intersections of 3D objects, especially within dimly lit/indoor environments.

- Dynamic DOF (Depth of Field) applies bokeh-style blur based on the proximity of objects within the scene, giving your game a more cinematic, suspenseful feel.

Here's the press release statement, to quote NVIDIA:

"Our January 14th Game Ready Driver updates the NVIDIA DSR feature with AI. DLDSR (Deep Learning Dynamic Super Resolution) renders a game at higher, more detailed resolution before intelligently shrinking the result back down to the resolution of your monitor. This downsampling method improves image quality by enhancing detail, smoothing edges, and reducing shimmering.

DLDSR improves upon DSR by adding an AI network that requires fewer input pixels, making the image quality of DLDSR 2.25X comparable to that of DSR 4X, but with higher performance. DLDSR works in most games on GeForce RTX GPUs, thanks to their Tensor Cores."
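To see why rendering high and shrinking down smooths edges, here is a rough sketch of the conventional downsampling step, assuming a plain box filter that averages each 2x2 block (the per-axis factor for DSR 4X). This is not NVIDIA's actual DSR filter and does not model the DLDSR network at all; `box_downsample` is a hypothetical helper:

```python
def box_downsample(image: list[list[float]], k: int) -> list[list[float]]:
    """Shrink a grayscale image by an integer factor k per axis by
    averaging each k x k block.

    Illustrative only: a plain box filter, not NVIDIA's DSR filter
    or the DLDSR AI network.
    """
    h, w = len(image), len(image[0])
    if h % k or w % k:
        raise ValueError("image dimensions must be divisible by k")
    out = []
    for y in range(0, h, k):
        row = []
        for x in range(0, w, k):
            # Gather the k x k block of high-res samples for one output pixel.
            block = [image[y + dy][x + dx] for dy in range(k) for dx in range(k)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# A 4x4 "high-res" frame with a hard diagonal edge (1.0 = lit, 0.0 = dark).
hi_res = [
    [1.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
print(box_downsample(hi_res, 2))  # → [[1.0, 0.25], [0.25, 0.0]]
```

Output pixels that straddle the hard edge come out as 0.25 rather than a jagged 0 or 1, which is the edge smoothing the press release describes. DLDSR 2.25X scales each axis by 1.5, a non-integer factor that a simple block average can't handle; going by the press release, DLDSR uses an AI network for this shrinking step instead of a fixed filter. In raw pixel terms, 2.25X shades 2.25/4 ≈ 56% as many pixels as 4X.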