Depends on whether they gimp the bus again.

That's why the 4060 was barely better than the 3060, or even worse, even though it "should" have been better in every case.

Their choice of memory bus effectively downgraded it, so it didn't deliver the generational improvement people expected.
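To put rough numbers on the bus point: peak bandwidth is just bus width times per-pin data rate, divided by 8 bits per byte. Here's a quick Python sketch using the reference GDDR6 specs (RTX 3060 12GB: 192-bit at 15 Gbps; RTX 4060: 128-bit at 17 Gbps). It only looks at raw bandwidth and ignores the 4060's much larger L2 cache, which offsets part of the gap. The function name is just made up for illustration.

```python
def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Reference GDDR6 memory configs (raw bandwidth only, cache not modeled).
rtx_3060 = bandwidth_gbs(192, 15)  # 192-bit bus, 15 Gbps
rtx_4060 = bandwidth_gbs(128, 17)  # 128-bit bus, 17 Gbps

print(f"RTX 3060: {rtx_3060:.0f} GB/s")
print(f"RTX 4060: {rtx_4060:.0f} GB/s ({rtx_4060 / rtx_3060:.0%} of the 3060)")
```

So the newer card starts out with roughly three quarters of the older card's raw bandwidth, and has to make that up with cache and architecture.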

As noted in various articles on the subject, the narrower bus is less of a problem for bandwidth and more of a problem for VRAM capacity. I've actually become far less anti-4060 Ti 16GB over time, mostly because the price dropped enough to make it more viable. Paying $50 extra to double the VRAM of the base 4060 Ti, even with the same memory bandwidth, isn't actually a bad deal.

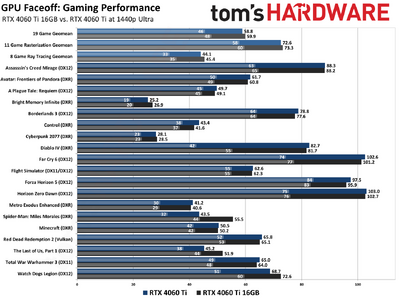

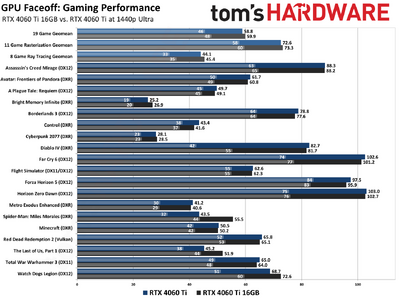

I've poked around at some more recent games, and the 16GB cards (7600 XT and 4060 Ti 16GB) just don't choke like the 8GB models. That isn't to say they're awesome, just that if we could have had 12GB with the same bandwidth as the 8GB models, that would have been more than sufficient. Instead, we ran into VRAM limits. The hard part is showing this clearly. For example:

Here the two cards are mostly evenly matched overall, with the 8GB model (which clocks higher than our 16GB card) winning most of the games. But Spider-Man, The Last of Us, and Watch Dogs Legion show a clear benefit for the 16GB card, and the minimum fps in Diablo IV also differ significantly: you'll get noticeable stuttering in Diablo IV with the RT Ultra settings on 8GB cards, to the point where it's not really playable. If I had $400 for a new graphics card right now and wanted to go with Nvidia, I would save up for the 4060 Ti 16GB. (Well, actually, I'd buy a 4070 off eBay, but that's a different story.) I wouldn't want the 8GB 4060 or 4060 Ti... though, if we're being honest, I wouldn't actually want anything less than about a 4070 Ti Super. LOL

Anyway, assuming we're correct and Nvidia moves to 24Gb (3GB) chips on the lower-tier parts, I will have no real concerns with future 128-bit and 192-bit configurations. Well, depending on price, obviously. A 5060 and 5060 Ti with a 128-bit interface and 12GB, priced at $300~$450, should be totally fine. Nvidia could use something like 32Gbps memory on the 5060 and 36Gbps on the 5060 Ti to differentiate them, or maybe 28Gbps and 32Gbps, or whatever.
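Capacity falls out of the bus width too: each GDDR chip sits on a 32-bit channel, so a 128-bit bus gets four chips, or eight in clamshell mode (which is how the 4060 Ti 16GB doubles up at the same bandwidth). Here's a rough sketch of current and hypothetical configs. The 24Gb chips and the 28/32/36 Gbps speeds are just the guesses from above, not announced specs, and the helper names are made up for illustration.

```python
def capacity_gb(bus_width_bits: int, chip_density_gbit: int, clamshell: bool = False) -> float:
    """VRAM in GB: one chip per 32-bit channel (two per channel in clamshell mode)."""
    chips = (bus_width_bits // 32) * (2 if clamshell else 1)
    return chips * chip_density_gbit / 8  # Gbit -> GB


def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s; clamshell doesn't change it since the bus width is the same."""
    return bus_width_bits * data_rate_gbps / 8


# Today's 4060 Ti: 128-bit GDDR6 with 16Gb (2GB) chips at 18 Gbps.
print(f"4060 Ti 8GB:  {capacity_gb(128, 16):.0f}GB, {bandwidth_gbs(128, 18):.0f} GB/s")
print(f"4060 Ti 16GB: {capacity_gb(128, 16, clamshell=True):.0f}GB, {bandwidth_gbs(128, 18):.0f} GB/s")

# Hypothetical GDDR7 parts with 24Gb (3GB) chips; speeds are guesses, not specs.
for bus in (128, 192):
    for speed in (28, 32, 36):
        print(f"{bus}-bit, {speed} Gbps: {capacity_gb(bus, 24):.0f}GB, "
              f"{bandwidth_gbs(bus, speed):.0f} GB/s")
```

A 128-bit card with 24Gb chips lands at 12GB no matter which speed Nvidia picks; only the bandwidth moves.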

The important thing is that GDDR7 could potentially give about a 50% boost to bandwidth and capacity at every bus width. As mentioned in the text, even a 96-bit interface with 9GB and 432 GB/s (or clocked at 30Gbps for 360 GB/s) should be viable for a "budget" card, particularly with a 32MB or larger L2/L3 cache. That's at the new "budget" price of around $250~$300, of course.
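Same arithmetic for the 96-bit case, as a sketch under the same assumptions (hypothetical 24Gb GDDR7 chips, made-up helper names). Interestingly, 96-bit at 30 Gbps ends up with exactly the RTX 3060's 360 GB/s from its 192-bit, 15 Gbps GDDR6. The 50% capacity figure is simply 24Gb versus 16Gb chips, which holds at any bus width.

```python
def capacity_gb(bus_width_bits: int, chip_density_gbit: int) -> float:
    """VRAM in GB with one chip per 32-bit channel."""
    return (bus_width_bits // 32) * chip_density_gbit / 8


def bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width x per-pin data rate / 8."""
    return bus_width_bits * data_rate_gbps / 8


budget_vram = capacity_gb(96, 24)    # three 3GB chips
budget_fast = bandwidth_gbs(96, 36)  # 36 Gbps GDDR7 (hypothetical)
budget_slow = bandwidth_gbs(96, 30)  # 30 Gbps GDDR7 (hypothetical)
rtx_3060 = bandwidth_gbs(192, 15)    # reference 192-bit GDDR6

# Capacity gain from 24Gb over 16Gb chips on the same bus.
gain = capacity_gb(96, 24) / capacity_gb(96, 16)

print(f"96-bit budget card: {budget_vram:.0f}GB, {budget_fast:.0f} GB/s (36 Gbps) "
      f"or {budget_slow:.0f} GB/s (30 Gbps)")
print(f"RTX 3060 for comparison: {rtx_3060:.0f} GB/s")
print(f"Capacity gain from 24Gb chips: {gain:.1f}x")
```

The bandwidth side of the "about 50%" depends on which speeds actually ship, so treat that part as a rough ballpark rather than a fixed ratio.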