Nvidia's market cap leaped over $1 trillion today, making it the first semiconductor maker in history to reach the mark.

Nvidia Breaks $1 Trillion Market Cap: Read more

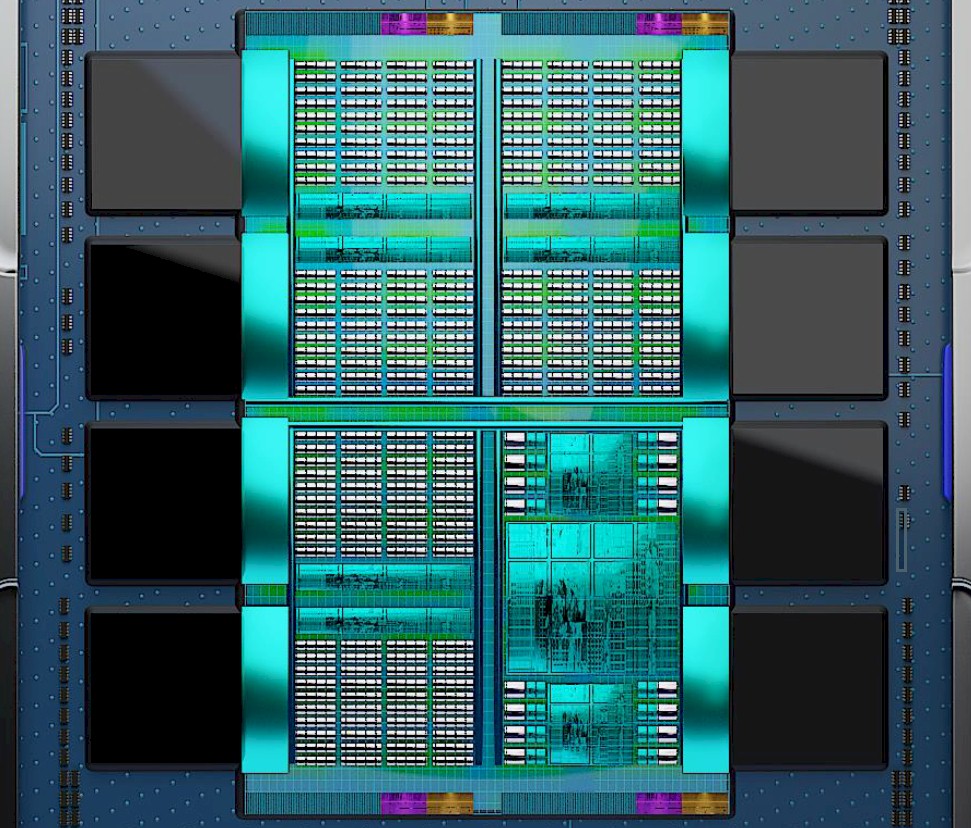

> Another thought I have is that Nvidia's Grace represents a real threat to AMD's server CPU revenue, as well. I'll bet quite a lot of servers hosting Nvidia GPUs have been EPYC-based. Grace looks set to change much of that.

Nvidia has its Grace Hoppers, AMD has its MI300, and Intel has its Data Center GPU Max. There's no shortage of large integrated CPU+GPU solutions in the DC space.

> Nvidia has its Grace Hoppers, AMD has its MI300 and Intel has its Data Center GPU Max. No shortage of large integrated CPU+GPU solutions in the DC space.

Grace is new and only now beginning to ship. We don't know how much of AMD's revenue projections for EPYC were based on the assumption that the AI buildout would continue on trend, but Grace might invalidate such assumptions.

www.nextplatform.com

> I hope this prompts AMD to triple-down on their investment in GPU compute, and finally support ROCm across all recent hardware models, including APUs.
>
> You can't hope to compete with Nvidia on compute if you don't have a vibrant ecosystem. And part of having a vibrant ecosystem is making it easy for people to develop on your hardware.
>
> Another thought I have is that Nvidia's Grace represents a real threat to AMD's server CPU revenue, as well. I'll bet quite a lot of servers hosting Nvidia GPUs have been EPYC-based. Grace looks set to change much of that.

I was thinking that GPUs would be replaced by a more AI-specific processor in the near future. Do you think GPUs will be the processor of choice going forward? Can Nvidia stay on top? Or is their stock insanely overrated?

> Jensen will be even more under pressure to deliver on growth and profits, which means bad news for gamers since our needs are now truly an afterthought.

That depends on how sustainable the AI gold rush will be. My gut feeling is that demand will level out within two years, so I expect about two years before AMD, Nvidia and Intel go "Oh crap! We need something else more sustainable to dump all of our excess wafers on. Maybe we shouldn't have golden-showered gamers two years ago."

> I was thinking that GPUs would be replaced by a more AI-specific processor in the near future. Do you think GPUs will be the processor of choice going forward?

I would love to see a perf/$ and perf/W comparison between their GH200 and Cerebras CS-2. Related:

www.nextplatform.com

> Can Nvidia stay on top?

Hard to say, but they have as many resources and as much expertise as anyone in the field. They won't be easy to unseat.

> Or is their stock insanely overrated?

Well, they have their eggs in several growing baskets:

> That depends on how sustainable the AI gold rush will be. My gut feeling is demand will level out within two years,

It will definitely be a fad, just like the Internet was. Trust me, boys: we're going back to sneakernet any day now!

> A couple years ago, I'd have thought Intel could do it, but I haven't heard a lot about Habana, and the failures & shortcomings of Xe are known all too well.

I still think Intel can do it, though not necessarily in time to achieve prominence, given the amount of stuff it had to scrap for being too little too late.

> It will definitely be a fad, just like the Internet was. Trust me boys: we're going back to sneakernet any day now! :D

I'm not saying that AI will disappear, only that demand scaling will hit a ceiling relatively quickly: how many people and companies can afford to throw 100+TB models at a problem? That is what Nvidia is pitching its new stuff at.

> I'm not saying that AI will disappear, only that demand scaling will hit a ceiling relatively quickly: how many people and companies can afford to throw 100+TB models at a problem? That is what Nvidia is pitching its new stuff at.
>
> And then you have the whole issue that since AI is just networks of weight matrices trained by throwing data at them, reinforcing correct answers and deterring incorrect ones until the desired accuracy is achieved, it can be quite difficult to figure out why it randomly gets some trivial stuff wrong.

Well, even Google gets 100k queries per second, 15% of which are entirely unique. AI getting trivial things wrong seems completely reasonable to me. You can basically never train AI enough to be perfect.

> And then you have the whole issue that since AI is just networks of weight matrices trained by throwing data at them, reinforcing correct answers and deterring incorrect ones until the desired accuracy is achieved, it can be quite difficult to figure out why it randomly gets some trivial stuff wrong.

It only has to be better than a human, and that often turns out not to be too hard. I see things that aren't there or misinterpret what I see with enough regularity that I could easily believe a good computer vision model would be more accurate than me.

> You can basically never train AI enough to be perfect.

It just has to be good enough.

Jensen will be even more under pressure to deliver on growth and profits, which means bad news for gamers since our needs are now truly an afterthought.

Nvidia, please don't forget PC gamers.

> I am not sure a lot of the commenters here understand the changes that AI will continue to make, especially given enough breathing room. People aren't seeing the massive jumps we are about to take in so many different directions, with AI finally starting to progress on its own path, and within two years AI will have changed every aspect of our lives. We have only been capable of iterative jumps in technology, whereas AI has the ability to take gulps instead of sips. Truly a little scared and a little excited.

From the bubble perspective, just because it has a chance to be impactful doesn't mean it's not a bubble. Computers were just as impactful in the 70s and 80s, as was the internet in the 90s, etc. All were bubbles in stock market land that eventually popped.

> I was thinking that GPUs would be replaced by a more AI-specific processor in the near future. Do you think GPUs will be the processor of choice going forward? Can Nvidia stay on top? Or is their stock insanely overrated?

There is a lot to unpack here, so let's start with the processor of choice going forward. AI-centric chips are in development, and some custom designs already ship with "neural engines/cores", but most are very specific to a use case. However, Google, for example, is getting ready to make its own for training Bard and other AI-centered services. The question for Nvidia isn't whether GPUs will be the AI choice in the future; it's whether they can make AI chips that are competitive and stay competitive.