Sorry, no benchmarks yet!

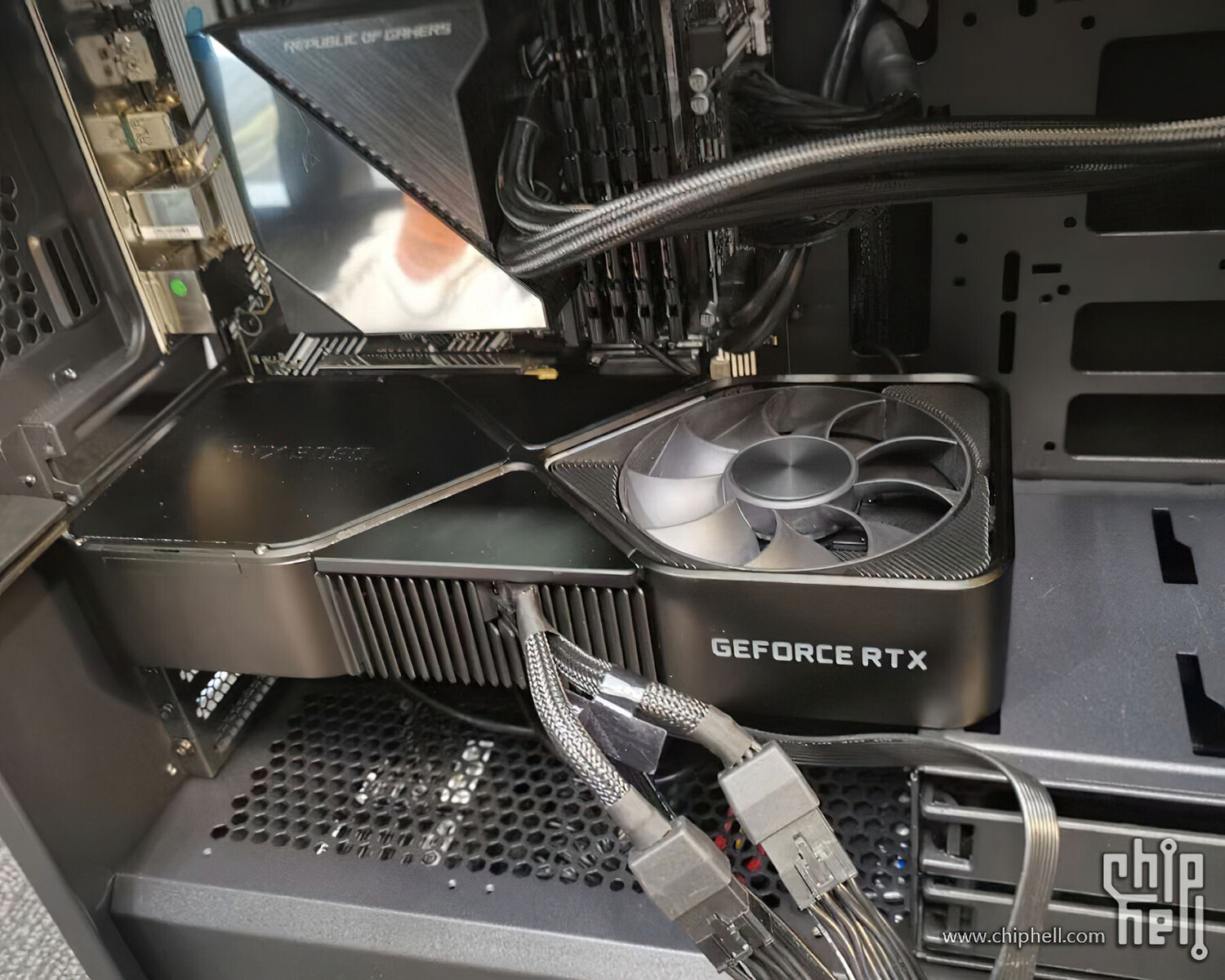

Nvidia GeForce RTX 3080 Founders Edition Hands On and Unboxing

> Are you even going to bother with 1080p benchmarks?

I am! But hopefully anyone buying this card isn't focused on 1080p gaming. Even the 2080 Ti was basically overkill at 1080p (unless you enable ray tracing). As for PCIe Gen3 vs. Gen4, I'm planning on testing with a Ryzen 9 3900X and Gen4, but that's a different platform and CPU than the i9-9900K and Gen3. It might make a difference at 4K, though. Maybe? When you're GPU limited at 4K, the added PCIe bandwidth might be useful. Next week... start the 7-day countdown to launch day! 😀

Would love to see PCIe 3.0 vs. PCIe 4.0 benchmarks.

> Looks mighty heavy. Looking forward to the review.

1355g, compared to 1260g for the RTX 2080, so roughly 100g more than the previous gen. I'm really curious to see how much the 3090 weighs, though! My case might need some reinforcing to hold that up over time. 😲

> Would love to see PCIe 3.0 vs. PCIe 4.0 benchmarks.

I'm not expecting 3.0 vs. 4.0 to make much of a difference until GPUs are pushed beyond their VRAM size, which may require 8K texture packs at 10GB.

> I'm not expecting 3.0 vs. 4.0 to make much of a difference until GPUs are pushed beyond their VRAM size, which may require 8K texture packs at 10GB.

If my memory serves me right, the RTX 2080 Ti already shows a difference of a couple percent.

> Fellas, no disrespect, but... hurry up and get the got damn benchmarks out!!!!!

There's an NDA on that information. Next week, by launch day for sure, is all we can say.

> There's an NDA on that information. Next week, by launch day for sure, is all we can say.

I found a video showing that you can gain an extra 5 fps in some games with the RX 5700 XT on PCIe 4.0 instead of 3.0.

> Fellas, no disrespect, but... hurry up and get the got damn benchmarks out!!!!!

They can't post performance numbers until Nvidia gives them the green light. That's the same for all reviewers.

> If my memory serves me right, the RTX 2080 Ti already shows a difference of a couple percent.

I didn't say NO difference, I said NOT MUCH difference. A couple percent is not much, barely above the margin of error.

> I didn't say NO difference, I said NOT MUCH difference. A couple percent is not much, barely above the margin of error.

I don't know if you saw my edit, but the RX 5700 XT can give you an extra 5 fps in some games with PCIe 4.0.

If you look at the 4GB RX5500, 3.0x8 vs 4.0x8 spells the difference between 20-30fps and 40-60fps in VRAM-constrained scenarios where the 8GB version hits 60-70fps regardless of PCIe version. A full x16 version could have made the 8GB version mostly unnecessary.

The most interesting 3.0 vs 4.0 differences will be when GPUs get pushed beyond their VRAM comfort zone.
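For a rough sense of the link bandwidth behind the 3.0 x8 vs. 4.0 x8 comparison, here is a minimal Python sketch. It assumes the nominal per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) with 128b/130b encoding and ignores protocol overhead, so real-world throughput will be somewhat lower. The helper name `link_bandwidth_gbps` is just for illustration.

```python
# Approximate one-direction PCIe link bandwidth per configuration.
# Assumption: nominal signaling rates and 128b/130b line encoding only;
# packet/protocol overhead is ignored, so these are upper bounds.

GT_PER_S = {3: 8.0, 4: 16.0}   # giga-transfers per second, per lane
ENCODING = 128 / 130            # fraction of transferred bits that carry data

def link_bandwidth_gbps(gen: int, lanes: int) -> float:
    """Return the approximate usable bandwidth in GB/s (one direction)."""
    data_bits_per_s = GT_PER_S[gen] * 1e9 * ENCODING * lanes
    return data_bits_per_s / 8 / 1e9  # bits -> bytes -> GB

for gen, lanes in [(3, 8), (4, 8), (3, 16), (4, 16)]:
    print(f"PCIe {gen}.0 x{lanes}: {link_bandwidth_gbps(gen, lanes):.2f} GB/s")
```

On paper, 4.0 x8 lands at the same bandwidth as 3.0 x16 (roughly 15.75 GB/s), which helps explain why a Gen4 slot matters far more for an x8 card like the 4GB RX 5500 XT when it spills out of VRAM than for an x16 card.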

> I don't know if you saw my edit, but the RX 5700 XT can give you an extra 5 fps in some games with PCIe 4.0.

That depends on what fps you're talking about.

> That depends on what fps you're talking about.

Yes, it's not massive, but the RTX 3080 could MAYBE give you 10 fps.

It's a nice boost, don't you think?

Some of the people who buy Intel will explain to you how important the difference between 150 and 155 fps is.

150 to 155 fps? Meh. 30 to 35 fps? That would be significant.
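To put numbers on that: the same 5 fps gain is roughly a 3% improvement at 150 fps but roughly 17% at 30 fps, and it shaves far more time off each frame at the low end. A quick sketch (the helper names are illustrative):

```python
# Compare the same absolute fps gain at two different baselines,
# both as a relative improvement and as frame time saved (1000 ms / fps).

def percent_gain(before: float, after: float) -> float:
    """Relative improvement in percent."""
    return (after - before) / before * 100

def frame_time_ms(fps: float) -> float:
    """Average time per frame in milliseconds."""
    return 1000.0 / fps

for before, after in [(150, 155), (30, 35)]:
    gain = percent_gain(before, after)
    saved = frame_time_ms(before) - frame_time_ms(after)
    print(f"{before} -> {after} fps: +{gain:.1f}%, {saved:.2f} ms less per frame")
```

Going from 150 to 155 fps saves about 0.22 ms per frame, while 30 to 35 fps saves about 4.76 ms, which is why the same "extra 5 fps" feels so different.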

As InvalidError points out, it's mostly the 4GB 5500 XT cards that saw large improvements in performance from PCIe Gen4, because the lack of VRAM leads to situations where more data has to go over the PCIe bus ... plus it's limited to an x8 link width. I don't think anyone has shown games where Gen3 vs. Gen4 x16 slots result in more than a 1-2% improvement, and even then that's only if you're not fully GPU bottlenecked.

> It's a nice boost, don't you think?

Depends on where you started from. Nowhere near as impressive as all the ground the 4GB RX5500 can gain on its 8GB big brother by going from 3.0 x8 to 4.0 x8. As a $150-200 GPU shopper, I am far more interested in 4.0 x16's potential to reduce the minimum amount of VRAM newer GPUs need to perform close to their full potential as games become more VRAM-intensive than in incremental performance gains at the high end.

> Are you even going to bother with 1080p benchmarks?

How else would we know if it's able to hit 360 fps for the Asus ROG Swift 360Hz? 😛

Would love to see PCIe 3.0 vs. PCIe 4.0 benchmarks.

> Depends on where you started from. Nowhere near as impressive as all the ground the 4GB RX5500 can gain on its 8GB big brother by going from 3.0 x8 to 4.0 x8. As a $150-200 GPU shopper, I am far more interested in 4.0 x16's potential to reduce the minimum amount of VRAM newer GPUs need to perform close to their full potential as games become more VRAM-intensive than in incremental performance gains at the high end.

It could also be very interesting. There is no doubt that PCIe 4.0 can give you extra fps in all kinds of scenarios. I understand that the GPU can interface with storage as well.

> They can't post performance numbers until Nvidia gives them the green light. That's the same for all reviewers.

Just change the names 😛

Despite the probable big jump in performance, (in my personal opinion) what a boring-looking card (and I'm not talking about RGB or any of that). It just looks like a dull enterprise product, not something that really catches the eye at first glance.

> The flashy RGB blink isn't always the first thing adults look at when buying hardware. And I know many who will not buy items for that reason.

I like my PCs to have as little bling as possible. My PC resides under my desk, so I am far more interested in dust mitigation, having as few holes as possible on the top and sides to keep things from dropping in, and resistance to accidental kicks than in looks. I also replaced the blue LED fans, which were starting to get noisy from worn bearings, with plain Sunon MagLev ones.

> 1355g, compared to 1260g for the RTX 2080, so roughly 100g more than the previous gen. I'm really curious to see how much the 3090 weighs, though! My case might need some reinforcing to hold that up over time. 😲

No worries! Just use the HDD cage!