VRAM "usage" is dominated by opportunistic texture caching. Any engine with a competent developer will load the textures (and geometry, and data for the other duties the GPU now performs) needed to render the current scene into VRAM, and then immediately afterwards start loading every texture for that level into VRAM until VRAM is full. There is zero penalty for doing so - the GPU can overwrite cached data with in-use data as rapidly as it can overwrite 'empty' VRAM - and it reduces the number of times the GPU needs to request data over the slow PCIe link. Any empty VRAM is wasted VRAM. But that VRAM 'in use' may never actually make it to your screen, e.g. if you never visit half of a level that has been opportunistically cached. This is why you see VRAM 'usage' barely change as resolution increases or decreases: VRAM will continue to be filled until either the available VRAM is full, or the engine runs out of data for that level/zone/block (depending on engine and how it partitions scenes) to load into it.
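To make the allocated-vs-needed distinction concrete, here is a rough toy model in Python. It is only a sketch: the class name `VramModel`, its methods, and all the sizes are made up for illustration and do not correspond to any real engine or driver API. The one assumption it encodes is the one described above: prefetched data never blocks a load the current scene actually needs, because it can simply be overwritten.

```python
class VramModel:
    """Toy model of VRAM (hypothetical, not a real engine API).

    'in_use' holds assets the current scene needs; 'cached' holds assets
    that were preloaded opportunistically and may be overwritten at any time.
    """

    def __init__(self, capacity_mb):
        self.capacity_mb = capacity_mb
        self.in_use = {}   # asset name -> size in MB
        self.cached = {}   # asset name -> size in MB

    def allocated_mb(self):
        # What a monitoring overlay would typically report as "usage".
        return sum(self.in_use.values()) + sum(self.cached.values())

    def prefetch(self, name, size_mb):
        """Cache an asset only if there is free space; never evict for it."""
        if name in self.in_use or name in self.cached:
            return True
        if self.allocated_mb() + size_mb > self.capacity_mb:
            return False
        self.cached[name] = size_mb
        return True

    def require(self, name, size_mb):
        """Load an asset the scene needs, overwriting cached data if necessary."""
        if name in self.in_use:
            return True
        if name in self.cached:
            # Already resident: promote it, no transfer over PCIe needed.
            self.in_use[name] = self.cached.pop(name)
            return True
        # Drop cached entries (oldest first) until the new asset fits.
        while self.cached and self.allocated_mb() + size_mb > self.capacity_mb:
            self.cached.pop(next(iter(self.cached)))
        if self.allocated_mb() + size_mb > self.capacity_mb:
            return False  # everything resident is in use: a genuine shortfall
        self.in_use[name] = size_mb
        return True


# Illustrative numbers only: an 8 GB card, 3 GB needed for the current scene.
vram = VramModel(capacity_mb=8192)
vram.require("current_scene", 3000)
for i in range(20):
    vram.prefetch(f"level_texture_{i}", 500)
print("allocated:", vram.allocated_mb(), "MB; needed:", sum(vram.in_use.values()), "MB")
```

In this model the reported allocation climbs to near capacity while the amount actually needed stays put, and `require` only fails once every resident byte is in use.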

An apples-to-apples test is going to be pretty much impossible without, at the very least, manufacturer assistance, as any card with multiple VRAM SKUs is also going to have multiple bus widths (e.g. 3080 10GB vs. 12GB), which will skew results more than available capacity. Without the ability to add an artificial cap on VRAM utilisation - one spread correctly across the memory dies to avoid incurring an unnecessary bandwidth limit, so something that has to occur at the driver or VBIOS level - there is no way to test the same model GPU with the same bandwidth with different VRAM capacities.

Max settings are basically worthless outside of YouTube videos bragging about your RGB PC you paid someone else to build but never actually use. They're just where the developer has taken every variable they have available and set it to the highest value regardless of function. Drop down one notch to the settings that have actually been optimised by the developer and are visually identical, and suddenly performance improves dramatically for the same output image.

Yes, I already have a basic understanding of how programs and games use VRAM. Your explanation is a bit simplistic, though, as it leaves out functions like texture compression and intelligently favoring the offloading (to RAM) or compressing of textures you are not currently looking at vs. the ones you are looking at. What I wanted was actual demonstrations that can be reviewed (like a YouTube video or whitepaper).

I do understand the point you're trying to make though. You are assuming that, when the card runs out of VRAM, it will start replacing those textures not in use with in-use ones. This is all well and good until all the textures, meshes, shaders, framebuffers, and other data in VRAM are considered in use. At that point we're back to the same issue of not enough VRAM. Also, the whole allocated vs. used argument is easily overcome with the latest MSI Afterburner or Special K DirectX 12 hook.

Your argument about VRAM limitation not being readily testable is false.

Please review this Reddit thread -

View: https://www.reddit.com/r/nvidia/comments/itx0pm/doom_eternal_confirmed_vram_limitation_with_8gb/

and this YouTube video (starting at the 6:30 mark) -

View: https://www.youtube.com/watch?v=k7FlXu9dAMU

Yes, there are other architectural differences between the cards in question (other than VRAM), but that baseline architectural performance difference is already known and factored in. When we make a change in the texture size, causing both cards to attempt to use more VRAM than one of the cards has, the results indicate a performance deficit due to the one setting changed.
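As a rough sketch of that reasoning in Python: take the known performance gap between the two cards at a texture setting that fits in both, then see how much further the smaller card falls behind once the texture setting exceeds its VRAM. The function name `excess_deficit` and the FPS figures in the comment are purely hypothetical placeholders for illustration, not measurements from the linked thread or video.

```python
def excess_deficit(fps_small_fit, fps_large_fit, fps_small_over, fps_large_over):
    """Extra slowdown of the smaller-VRAM card beyond the known baseline gap.

    'small'/'large' are the cards with less/more VRAM.
    'fit' is a texture setting that fits in both cards' VRAM;
    'over' is a setting that exceeds the smaller card's VRAM.
    Returns 0.0 when the gap is unchanged, 0.5 when the smaller card
    delivers half of what the baseline gap alone would predict.
    """
    baseline_ratio = fps_small_fit / fps_large_fit    # known architectural gap
    stressed_ratio = fps_small_over / fps_large_over  # gap with the one setting changed
    return 1.0 - stressed_ratio / baseline_ratio


# Made-up example: the smaller card is normally 10% behind (90 vs 100 fps),
# then drops to 60 fps while the larger card holds 98 fps.
print(round(excess_deficit(90, 100, 60, 98), 3))
```

A result near zero would mean the texture setting cost both cards about the same; a large positive value points at the one variable that differs, which is VRAM capacity.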

Ahhh, the ol' 'max settings are worthless' line. This is what's called a subjective statement.

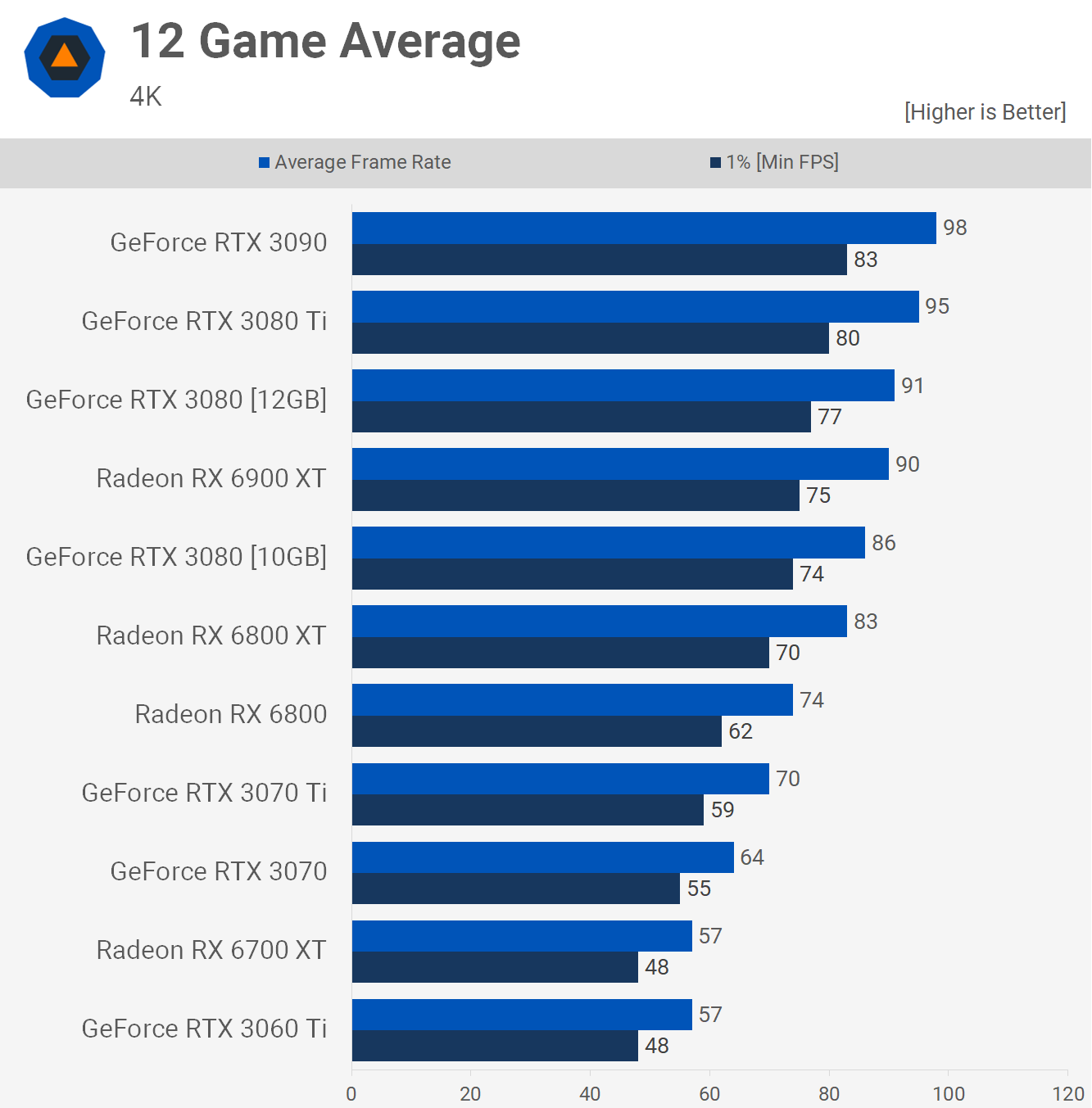

I purchased my 6900 XT for a couple of reasons. First - Longevity. I will have a great performing GPU 4+ years from now. Second - Performance today. Since this will be a great card years from now, it stands to reason that it is a superb card RIGHT NOW. This allows me to run today's AAA games at max settings. Which I very much enjoy doing.

Edit - Point of correction from an earlier post. DOOM Eternal shows the VRAM limitation of an 8GB VRAM card, not the 10GB RTX 3080.