I saw you comment on another thread that you didn't think AMD had a latency-reducing tech when using frame gen. As far as I'm aware, Anti-Lag works with FSR3 frame gen. If I were you, I'd do more investigating before you buy an $800+ GPU.

I did look into it. As I understand it, Anti-Lag is an entirely software solution and is inferior to Reflex, though it does have the advantage of working in all reasonably modern DirectX games.

Also, I just don't like FSR tech in general. In most respects it seems like a helter-skelter attempt by AMD to catch up to trends set by nVidia, rather than an attempt to come up with something of their own.

I'm not fanboying over nVidia; I have a lot of gripes with them, actually. But I want the best and I'm fine with paying more to get it. Yes, I'm the average victim of capitalism.

None of you are interested in buying AMD, no matter what they offer.

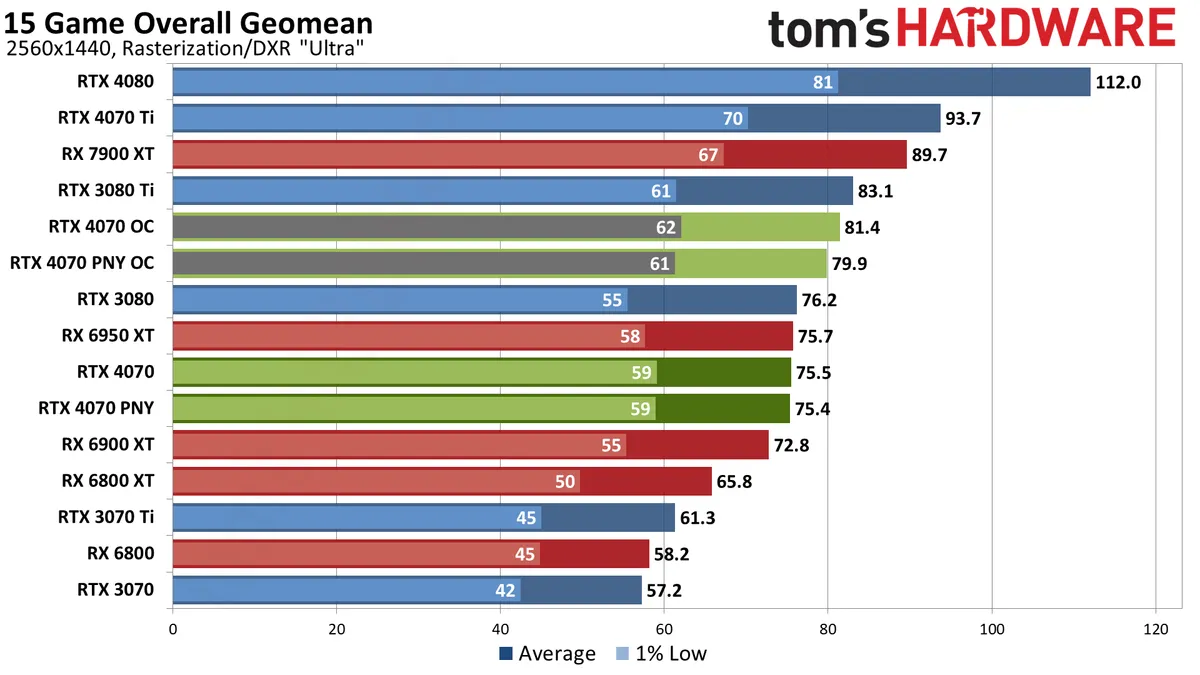

Well, so far they seem to offer a lot of VRAM and slightly better raw rasterization performance at resolutions below 4K. That's fine, just not something I'm personally interested in as a gamer, because I expect performance-taxing games to have at least some upscaling tech available, and maybe even framegen, and nVidia wins there hands down.

AMD might have shot itself in the foot by allowing nVidia cards to take advantage of AMD upscaling / framegen features, particularly when using a mod/patch that allows older RTX cards to combine DLSS upscaling and Reflex latency reduction with AMD framegen.

It was a noble move for sure, but for some nVidia users it means they have fewer reasons for upgrading from their older RTX cards [to newer AMD cards].

The sad reality is that AMD are behind nVidia in the GPU market, not just in raw performance, but in technological presence as well.

Have to give credit where it's due, AMD's 7800X3D looks great for gaming. Despite being an Intel user for over a decade, I'm considering switching to an AMD CPU.