I never said anything about 1440p performance. It's at 4K where the differences mount.

Hook up a 4K monitor or TV to your 6700XT and then put it on max settings. Run around Limsa and get back to me.

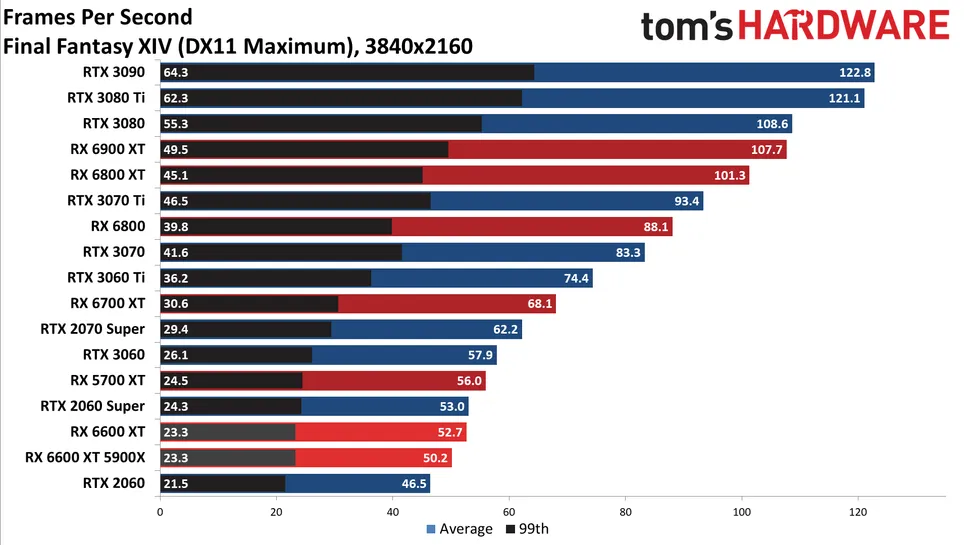

Even Tom's Hardware agrees with me:

The 99th percentile may not seem like much of a difference, but the microstutter on the 6700XT is bad at 4K, while on the 3060Ti it's much less frequent at 4K. It was originally going to be in my wife's 10850K rig, which had a new SSD put in, so it was a clean install. The 3060Ti went in without even running DDU and it was already smoother and faster.

I tried it on my 12900K, same thing. I tried it in my son's 5900X, same thing at 4K. Drop it to 1440p and it runs great.

I'm sure it's a combo of AMD not putting much effort into optimizing DX11 and Squeenix not optimizing their game for AMD either.