The details are (mostly) in the slides. And the devil is in the details. It shouldn't surprise you to hear that Nvidia loves to overhype its products, so expect them to highlight the best-case scenario rather than the typical speedup. Don't confuse the two!

They claimed a 2.3x speedup in (training?) a 65B-parameter large language model (the kind behind ChatGPT), a 5x speedup in a 500 GB Deep Learning Recommendation Model, and a 5.5x speedup in a 400 GB vector database workload (think lookups in a facial-recognition database covering a large population, among other examples that come to mind).

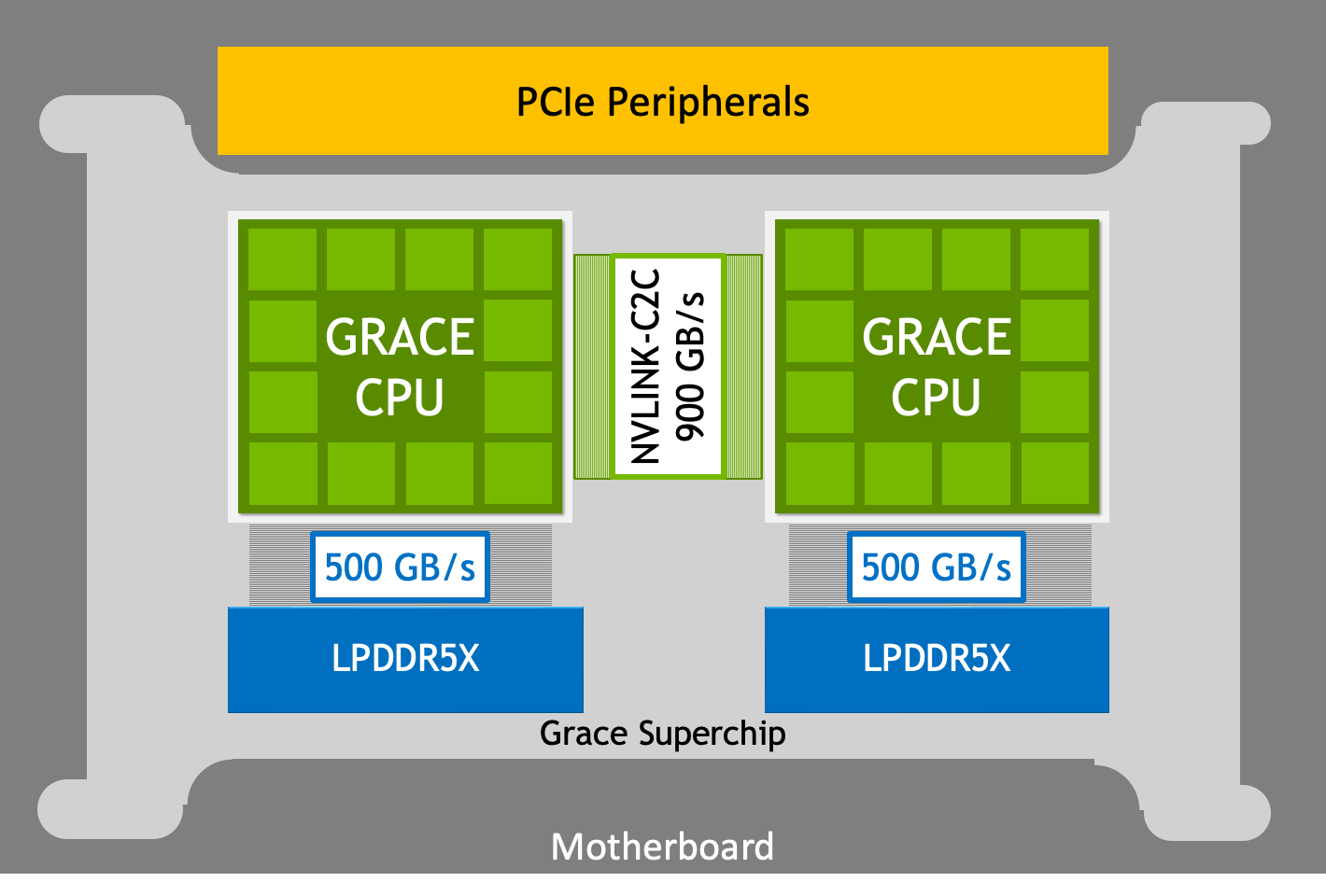

There's a common theme here: they're all big. That's because they didn't announce a new GPU! They announced a new system architecture built around their "superchip" modules and next-gen interconnect technology. So it's not the computation that got faster; what improved is data movement, locality, and latency.
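To see why big, data-hungry workloads would benefit most from a faster interconnect, here's a rough Amdahl's-law sketch. It assumes (my assumption, not anything from Nvidia's slides) that compute time stays fixed and only the data-movement share of runtime gets faster. The workload fractions and the 10x data-movement figure are made-up illustrative numbers.

```python
def amdahl_speedup(movement_fraction: float, movement_speedup: float) -> float:
    """Overall speedup when only the data-movement share of runtime gets faster.

    movement_fraction: share of the original runtime spent moving data (0..1).
    movement_speedup: how many times faster that share becomes.
    Compute time is assumed unchanged, since no new GPU was announced.
    """
    if not 0.0 <= movement_fraction <= 1.0:
        raise ValueError("movement_fraction must be between 0 and 1")
    if movement_speedup <= 0:
        raise ValueError("movement_speedup must be positive")
    compute_share = 1.0 - movement_fraction
    return 1.0 / (compute_share + movement_fraction / movement_speedup)


if __name__ == "__main__":
    # Hypothetical workloads: these fractions and the 10x figure are
    # illustrative guesses, not numbers from Nvidia's slides.
    workloads = [
        ("mostly compute", 0.3),
        ("balanced", 0.6),
        ("mostly data movement", 0.9),
    ]
    for name, frac in workloads:
        print(f"{name:>22}: {amdahl_speedup(frac, 10.0):.2f}x overall")
```

With those made-up inputs it prints roughly 1.37x, 2.17x and 5.26x: the more of a workload's time goes to shuffling data around, the closer the overall gain gets to the interconnect's raw improvement. That's one plausible reason the headline numbers vary so much between workloads, though the slides don't break it down that way.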

Depends on how you define it. If you just wanted VR without a headset, then the bottleneck would seem to be the brain-machine interface. Elon Musk's Neuralink just got approval to start human trials. I haven't followed their stuff, but I'm guessing it'd be several generations before they're ready to tap into the optic nerve. And it's anybody's guess if/when we can do that without having to sever the connection to your organic retinas.