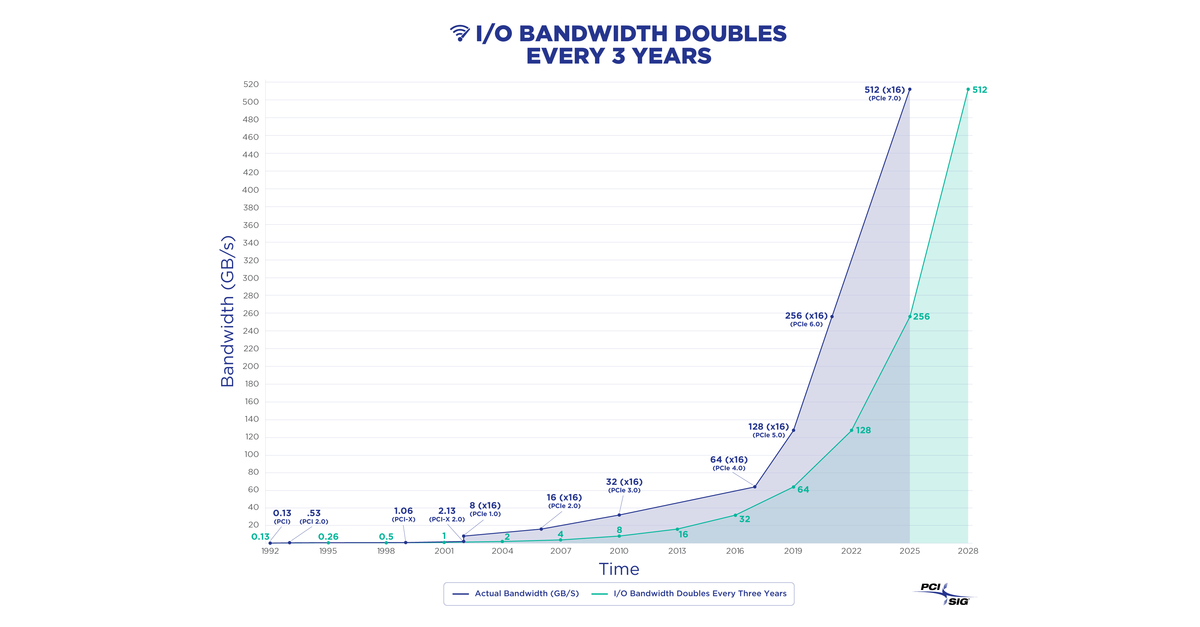

First draft specification of PCIe 7.0 is now available for members.

PCI Express 7.0 Draft Spec Released: 512 GB/s x16 Slot in 2027 : Read more

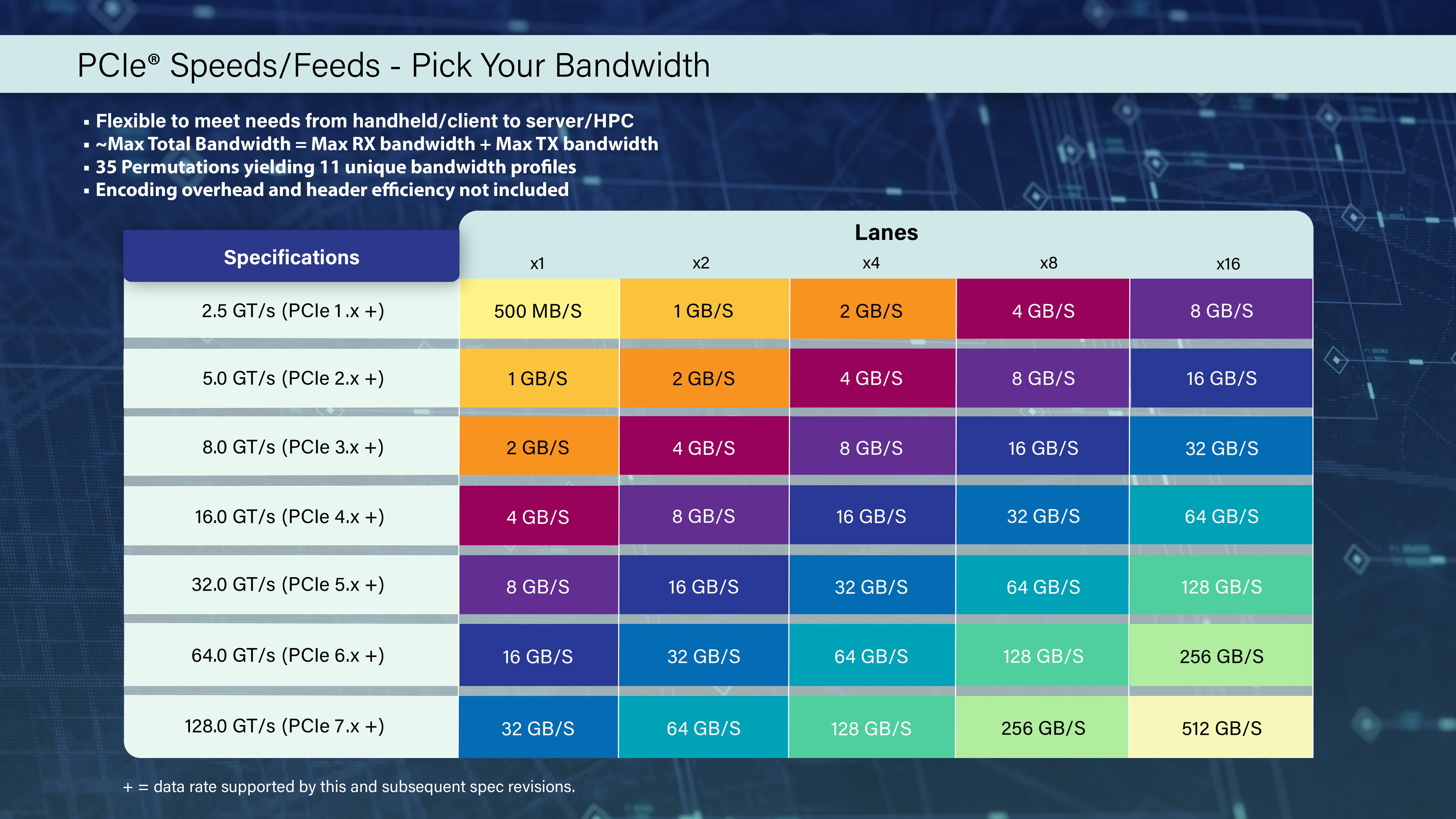

I doubt that stuff is going to make it to the consumer space, at least not in AIB format. 128Gbps per pin is going to take some deep voodoo to get to work through board-to-board/chip sockets. It may get into the prosumer space and possibly get used as the off-the-shelf link between chiplets/tiles/whatever on multi-chip packages in the consumer space.

So based on the paper specs, even x1 PCIe 7.0 lane will be as fast as PCIe 4.0 x16 speeds (32 GB/s), so that most of the storage and other devices could be smaller and hog fewer resources.

> So based on the paper specs, even x1 PCIe 7.0 lane will be as fast as PCIe 4.0 x16 speeds (32 GB/s), ...

No, it's just an 8:1 ratio (2^3). If you look closely, you can see that each generation roughly doubles the previous one.
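The 8:1 figure can be checked with a few lines of arithmetic. This is only a rough sketch: it uses the nominal per-lane transfer rates and, as a simplifying assumption, ignores encoding and protocol overhead entirely (which is also why the raw 5 to 8 GT/s step between 2.0 and 3.0 looks smaller than a doubling here; the switch to a more efficient line encoding made up most of the difference in practice). The function names are made up for illustration.

```python
# Nominal per-lane transfer rates in GT/s for each PCIe generation.
RAW_GT_PER_S = {1: 2.5, 2: 5.0, 3: 8.0, 4: 16.0, 5: 32.0, 6: 64.0, 7: 128.0}


def link_gb_per_s(gen, lanes, bidirectional=False):
    """Approximate link bandwidth in GB/s.

    Assumption: one transfer carries one bit per lane, and encoding and
    protocol overhead are ignored, so real-world numbers are a bit lower.
    """
    per_direction = RAW_GT_PER_S[gen] * lanes / 8  # bits -> bytes
    return per_direction * 2 if bidirectional else per_direction


def lane_ratio(new_gen, old_gen):
    """How many old-generation lanes one new-generation lane replaces."""
    return RAW_GT_PER_S[new_gen] / RAW_GT_PER_S[old_gen]


if __name__ == "__main__":
    print("7.0 vs 4.0 per lane:", lane_ratio(7, 4))
    print("PCIe 7.0 x1  (one direction):", link_gb_per_s(7, 1), "GB/s")
    print("PCIe 4.0 x8  (one direction):", link_gb_per_s(4, 8), "GB/s")
    print("PCIe 4.0 x16 (one direction):", link_gb_per_s(4, 16), "GB/s")
    print("PCIe 7.0 x16 (both directions):", link_gb_per_s(7, 16, True), "GB/s")
```

Under these assumptions a PCIe 7.0 x1 link lines up with PCIe 4.0 x8 rather than x16, and the 512 GB/s headline number for an x16 slot only appears when both directions are added together.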

> While we are sure that PCIe 7.0 will eventually end up in client PCs ...

I wouldn't be.

> Given that PCIe 5.0 is still overkill for desktop PCs, I can't imagine Intel or AMD would want to go through another round of such complaints, for a feature with virtually no practical benefits to end users.

There may be a benefit for the top-3%, though I doubt anything after 5.0 will go very far into the mainstream beyond the CPU-chipset link where the extra bandwidth could be necessary to feed downstream 5.0 devices with minimal uplink contention.

> There may be a benefit for the top-3%, ...

There are still no GPUs with PCIe 5.0 (not that I care - they don't need it).

> I doubt anything after 5.0 will go very far into the mainstream beyond the CPU-chipset link where the extra bandwidth could be necessary to feed downstream 5.0 devices with minimal uplink contention.

The irony of ironies is that one place where PCIe 5.0 could've actually delivered real value isn't something either Intel or AMD used it for!

> I'm just impressed that they've managed to push a ~25-year-old physical spec from 1.3GHz to 60+GHz and get that to work reliably enough to be practical.

Let's save the fanfare until PCIe 7.0 is finalized.

> tbh for normal users this seems like just to increase price of MB's.
>
> even best gpu/ssd cant saturate 5.0

You don't need to "saturate" it. All you need to have an IO bottleneck with macroscopically observable symptoms is a couple of things attempting to do large IO at the same time, causing latency spikes from queue length spikes. You may only need 1% of the bandwidth on average but you still feel the lag when everything happens everywhere all at once every few seconds. The more spare bandwidth you have, the less likely that worst-case scenario is to happen and the less severe it gets.
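The "low average use, high burst latency" argument can be put into numbers with a toy model. This is a sketch under loud assumptions: several transfers arrive at the same instant, the link serves them one after another, and the link is the only bottleneck (the device's own throughput and latency are ignored, which may well dominate in practice). The burst size, period and link speeds are invented for illustration.

```python
def burst_latencies_ms(num_transfers, transfer_mb, link_gb_per_s):
    """Completion time in ms of each transfer in a burst served first-come, first-served.

    MB divided by GB/s gives milliseconds (taking 1 GB = 1000 MB).
    """
    per_transfer_ms = transfer_mb / link_gb_per_s
    return [per_transfer_ms * (i + 1) for i in range(num_transfers)]


def average_utilization(num_transfers, transfer_mb, link_gb_per_s, period_s):
    """Fraction of link capacity used if the same burst repeats every period_s seconds."""
    moved_gb = num_transfers * transfer_mb / 1000
    return moved_gb / (link_gb_per_s * period_s)


if __name__ == "__main__":
    # Hypothetical workload: four 64 MB transfers landing together every 5 seconds.
    for link in (8.0, 16.0):  # roughly an x4 link at 4.0 and 5.0 nominal rates
        worst = burst_latencies_ms(4, 64, link)[-1]
        util = average_utilization(4, 64, link, 5.0)
        print(f"{link:>4} GB/s link: last transfer done after {worst} ms, "
              f"average utilization {util:.2%}")
```

In this toy case the link sits below 1% average utilization either way, yet the last transfer in the burst waits twice as long on the slower link. Whether that difference is perceivable depends on everything the model leaves out.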

> There are still no GPUs with PCIe 5.0 (not that I care - they don't need it).

To which I'll respond with the same thing as always: low-end GPUs stand to benefit the most from fast PCIe to give them faster access to system memory and offset their limited local memory pool. Ironically, GPU manufacturers won't give budget buyers that either.

> There are still no GPUs with PCIe 5.0 (not that I care - they don't need it).

I look forward to seeing optical lanes integrated into motherboards. There must be a point where it becomes cheaper than maintaining the integrity of so many tightly packed copper lanes.

Yes, we finally got some PCIe 5.0 SSDs, in recent months, but they're hot, have enormous heatsinks, and I remain to be convinced they deliver user-perceivable benefits over fast PCIe 4.0 drives.

The irony of ironies is that one place where PCIe 5.0 could've actually delivered real value isn't something either Intel or AMD used it for!

Let's save the fanfare until PCIe 7.0 is finalized.

Regardless, I think we're pretty close to the crossover point for optical, as a system interconnect medium*.

* For servers.

> To which I'll respond with the same thing as always: low-end GPUs stand to benefit the most from fast PCIe to give them faster access to system memory and offset their limited local memory pool. Ironically, GPU manufacturers won't give budget buyers that either.

But people in the market for lower-end GPUs don't usually have motherboards with bleeding edge I/O.

> But people in the market for lower-end GPUs don't usually have motherboards with bleeding edge I/O.

What is "bleeding-edge" IO today will be standard even on lower-end platforms and devices some number of years down the line. The biggest kicker for people on such older platforms is that all recent entry-level GPUs have only an x8 interface, making low-VRAM boards (ex.: 4GB RX5500) almost unworkable on them.

> What is "bleeding-edge" IO today will be standard even on lower-end platforms and devices some number of years down the line. The biggest kicker for people on such older platforms is that all recent entry-level GPUs have only an x8 interface, making low-VRAM boards (ex.: 4GB RX5500) almost unworkable on them.

x8 only and requiring the bleeding edge IO to not suffer that performance hit. Lower end hardware should stick with lower end requirements.

> You don't need to "saturate" it. All you need to have an IO bottleneck with macroscopically observable symptoms ...

I think what @hotaru251 meant was that the peak speeds of PCIe 5.0 SSDs can't get close to the max of PCIe 5.0 x4 speeds. If they can't get near it at peak speeds, then it's not going to do you a lot of good to go above PCIe 4.0.

> ... is a couple of things attempting to do large IO at the same time, causing latency spikes from queue length spikes. You may only need 1% of the bandwidth on average but you still feel the lag when everything happens everywhere all at once every few seconds. The more spare bandwidth you have, the less likely that worst-case scenario is to happen and the less severe it gets.

You seem to have the wrong idea about where the bottlenecks are. If you're worried about tail latencies, you're still barking up the wrong tree. Some datacenter drives are all about that. Here's a PCIe 4.0 drive with consistently low tail latencies under conditions that would make most consumer PCIe 5.0 drives weep.

> To which I'll respond with the same thing as always: low-end GPUs stand to benefit the most from fast PCIe to give them faster access to system memory and offset their limited local memory pool. Ironically, GPU manufacturers won't give budget buyers that either.

You're looking at a bathtub curve, with this sort of thing. To a point, faster PCIe will help. However, if the card is swapping in stuff above a certain rate, what's going to happen is it'll chew up both system memory bandwidth and the card's own internal memory bandwidth, which low-end cards don't have in too much abundance.

> What is "bleeding-edge" IO today will be standard even on lower-end platforms and devices some number of years down the line.

Even PCIe 4.0 motherboards require retimers, from what I've read. That means the lowest-end motherboards might never have it. Just look at the newly-released Alder Lake-N - the highest-spec variant has only PCIe 3.0.

> The biggest kicker for people on such older platforms is that all recent entry-level GPUs have only an x8 interface, making low-VRAM boards (ex.: 4GB RX5500) almost unworkable on them.

I think you've fallen victim to sensationalist youtubers or something, because even a 4 GB card is supposedly fine @ PCIe 3.0, if you keep texture details down so it doesn't run out of VRAM.

> I think you've fallen victim to sensationalist youtubers or something, because even a 4 GB card is supposedly fine @ PCIe 3.0, if you keep texture details down so it doesn't run out of VRAM.

I'm still using a GTX1050 with 2GB of RAM... pretty sure the 3.0x16 is the only thing keeping it remotely usable for games today since practically everything will use more than 2GB even at lowest settings.

We saw backlash at the price increases of new motherboards, when Intel and AMD introduced PCIe 5.0 support. Given that PCIe 5.0 is still overkill for desktop PCs, I can't imagine Intel or AMD would want to go through another round of such complaints, for a feature with virtually no practical benefits to end users.

> No need for PCIe 5.0 in any way shape or form in my SSDs or my GPU.

For now.

Between main PC, living room PC, backup PC, etc., I keep my PCs for ~20 years. Stuff I may not need today usually comes in handy 5-10 years down the line.