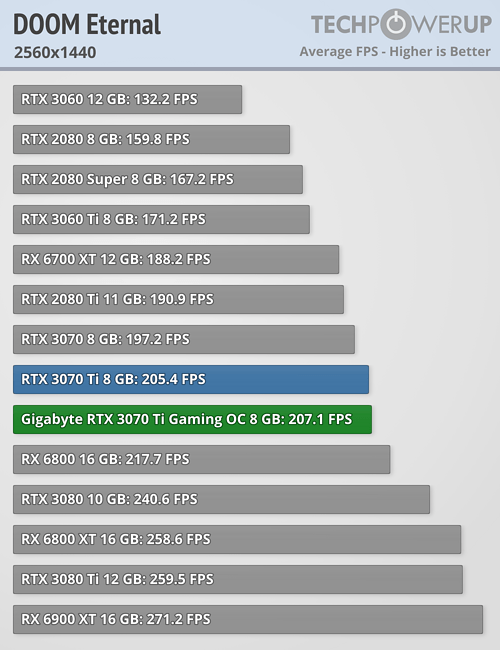

Hello guys. I'm torn between the 3070 Ti and the 3080 for gaming. I watched YouTube videos comparing the two at 1440p, and the difference was between 10 and 20 fps. The 3080 is rare and expensive, while the 3070 Ti is much cheaper and more available. On the other hand, the 8 GB of VRAM on the 3070 Ti makes me wonder: will it be a problem for 2K gaming in the future or not? The 3080 has 10 GB of VRAM, so that shouldn't be a problem there.

What's your opinion? Should I go for the 3070 Ti, or pay more and buy the 3080? In addition, I play everything on ultra settings and want to get more than 60 fps.
