That's nonsense for gaming.

The 13900K uses 25W more than the 7950X for gaming, even with power limits lifted and even when using DDR5-7400. That doesn't make any difference for cooling.

...

(Power measurement probably wasn't done at 720p, but that's just a guess; they don't specify.)

https://www.techpowerup.com/review/intel-core-i9-13900k/17.html

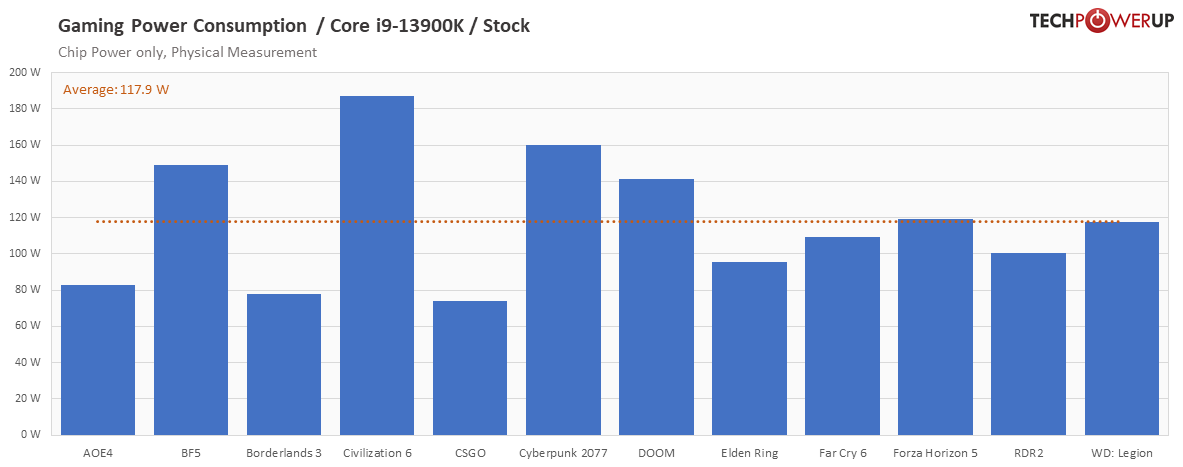

The key point about the data you cited is that it's a 12-game average. That's the average per-game power (which can hide spikes that happen only some of the time), then averaged again over multiple games. We all know that some games hit the CPU harder than others. If someone plays games at settings that aren't CPU-limited, then the additional power could be negligible. However, the games they play tomorrow are likely to differ from the ones they play today. And games that are GPU-limited today won't be, if they upgrade to a much faster GPU. So, the concern is relevant from a forward-looking perspective (which most buyers will have).

The relevance of this depends a lot on why someone is concerned about power. If you're concerned about electricity and air conditioning costs, then average power (for the specific games you play) is a good metric to look at. However, if you're concerned about what kind of cooling solution you'll need to avoid throttling during CPU-heavy periods or how high your CPU fan will spin up, then you really do care about the spikes, and averages obscure that.
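To put rough numbers on how an average can hide a spike, here's a minimal Python sketch. The trace is made up (a hypothetical 60-sample run with mostly light load and one short CPU-heavy burst); it is not data from either review:

```python
from statistics import mean

# Hypothetical CPU package power samples in watts, one per second.
# Invented for illustration only: 50 s of light load, then a 10 s heavy burst.
samples_w = [90] * 50 + [200] * 10

def summarize(samples):
    """Return (average, peak) power in watts for a list of samples."""
    return mean(samples), max(samples)

avg_w, peak_w = summarize(samples_w)
print(f"average: {avg_w:.1f} W, peak: {peak_w} W")
```

The average lands much closer to the light-load figure than to the burst, yet the burst is what your cooler actually has to handle.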

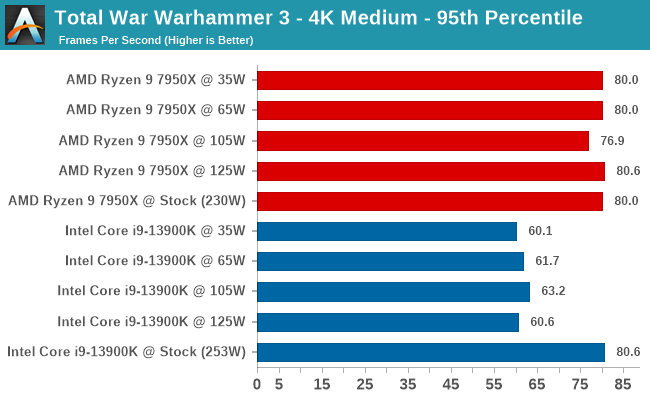

AnandTech wrote an interesting article in which they compared performance at different power limits. For the most part, gaming was unaffected, which is very good news. However, there were definitely some outliers. The most extreme was Total War: Warhammer 3, where the i9-13900K bogged down badly in 95th percentile performance at any setting below stock. Interestingly, the 7950X was unfazed.

Again, this is an outlier. Check the article for the rest of the games they tested, but we should keep in mind that as CPUs get faster, games tend to lean on them harder. So, the situation is only likely to get worse on this front.

Also, from the same TechPowerUp article that you cited, we can see further details on the power variations per-game. These are the 12 games included in their average. Note how some CPU-light games like CSGO, Borderlands 3, and AOE4 can offset CPU-heavy games like Civ 6, Cyberpunk 2077, and BF5 in the average:

Also, don't forget that these are still averaged over their entire benchmark. So, there will be spikes within each game that are higher than these figures.

IMO, it's malpractice for these sites to publish bare averages. They ought to convey the distribution, like with box-and-whisker plots or something.
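For what it's worth, the numbers behind a box-and-whisker plot are cheap to compute once you have the raw samples. A rough sketch in Python, assuming you've logged power readings yourself; the sample values and the `five_number_summary` helper are hypothetical, not something either site publishes:

```python
from statistics import quantiles

def five_number_summary(samples):
    """Return (min, Q1, median, Q3, max), the values a box plot is drawn from."""
    q1, median, q3 = quantiles(samples, n=4, method="inclusive")
    return min(samples), q1, median, q3, max(samples)

# Hypothetical power samples in watts, invented for illustration only.
samples_w = [80, 85, 90, 95, 100, 105, 110, 150, 220]

low, q1, median, q3, high = five_number_summary(samples_w)
print(f"min {low} W | Q1 {q1} W | median {median} W | Q3 {q3} W | max {high} W")
```

Even just publishing those five numbers per game would show how far the peaks sit above the typical draw.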