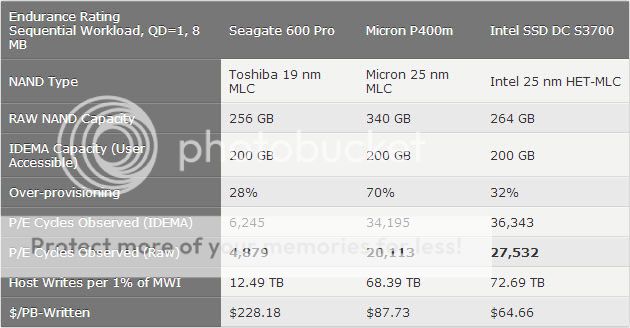

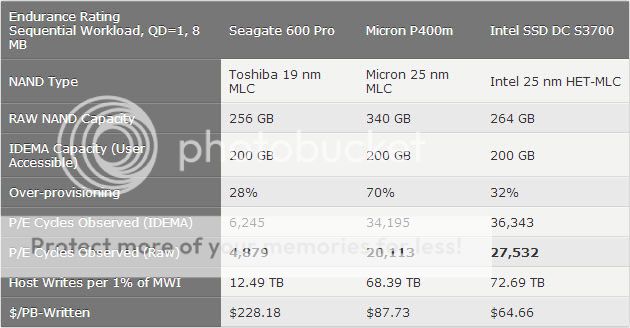

On Tom’s Hardware, we see charts like this one detailing SSD endurance:

This is great stuff, and it’s very useful for helping to establish deployment expectations and TCO estimates. But I've also never personally heard of someone in the SMB world wearing out an SSD. Ever.

I was speaking with a CTO yesterday who's in charge of about 60 seats, and he was telling me that he's held off on bringing SSDs into his org because of endurance worries, even though he's putting up a new SQL server that gets a lot of daily writes and would benefit from SSD speeds. "I mean, I've got 12-year-old HDDs still in service," he told me. "Is an SSD gonna do that for me? I really don't know."

I couldn't answer him. So I'm asking you guys. Are your business SSDs getting hammered enough to raise actual endurance concerns? If so, what apps are generating all those petabytes within the SMB segment?

Thanks!
