Very good answer: thanks.

One feasible solution, particularly for workstation users (my focus),

is a PCIe 3.0 NVMe RAID controller with an x16 edge connector, 4 x U.2 ports,

and support for all modern RAID modes, e.g.:

http://supremelaw.org/systems/nvme/want.ad.htm

4 @ x4 = x16
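
To spell out the lane arithmetic above, here is a rough sketch in Python. It assumes the standard PCIe 3.0 figures (8 GT/s per lane, 128b/130b encoding) and gives only a theoretical one-direction ceiling; the function names are just for illustration, and real drives and controllers will land below this number.

```python
# Rough sketch: lane count and theoretical bandwidth for 4 NVMe drives at x4 each.
# Assumes PCIe 3.0 signaling: 8 GT/s per lane with 128b/130b line encoding.
# Function names are illustrative, not from any vendor tool.

GIGATRANSFERS_PER_SEC = 8.0      # PCIe 3.0 raw rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding overhead


def lane_bandwidth_gb_per_s() -> float:
    """Theoretical one-direction bandwidth of a single PCIe 3.0 lane, in GB/s."""
    return GIGATRANSFERS_PER_SEC * ENCODING_EFFICIENCY / 8  # bits -> bytes


def total_lanes(drives: int, lanes_per_drive: int) -> int:
    """Upstream lanes needed so that no drive is oversubscribed."""
    return drives * lanes_per_drive


def array_bandwidth_gb_per_s(drives: int, lanes_per_drive: int) -> float:
    """Theoretical aggregate ceiling for the whole set of drives, in GB/s."""
    return total_lanes(drives, lanes_per_drive) * lane_bandwidth_gb_per_s()


if __name__ == "__main__":
    lanes = total_lanes(4, 4)
    ceiling = array_bandwidth_gb_per_s(4, 4)
    print(f"4 drives @ x4 = x{lanes}")
    print(f"theoretical ceiling: {ceiling:.2f} GB/s")
```

The point is simply that an x16 edge connector has exactly enough lanes for four x4 drives, so none of them has to share upstream bandwidth.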

This, of course, could also be implemented with 4 x U.2 ports

integrated onto future motherboards, with real estate made

available by eliminating SATA-Express ports, e.g.:

http://supremelaw.org/systems/nvme/4xU.2.and.SATA-E.jpg

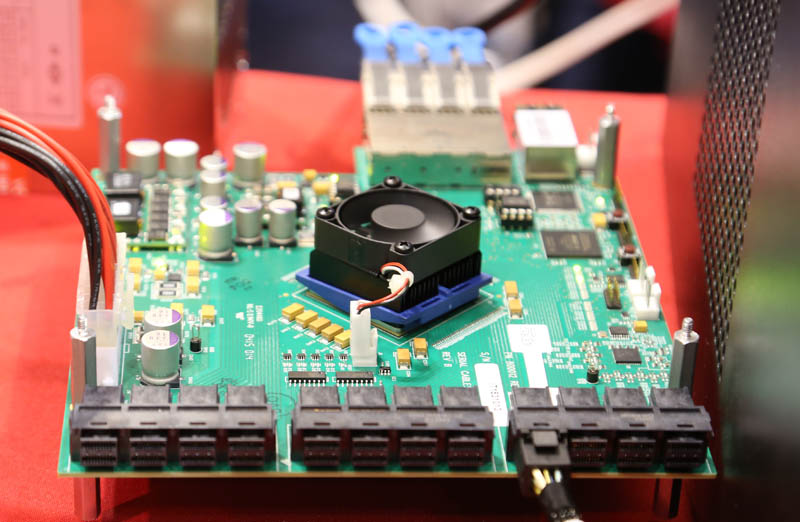

This next photo shows 3 banks of 4 such U.2 ports,

built by SerialCables.com:

http://supremelaw.org/systems/nvme/A-Serial-Cables-Avago-PCIe-switch-board-for-NVMe-SSDs.jpg

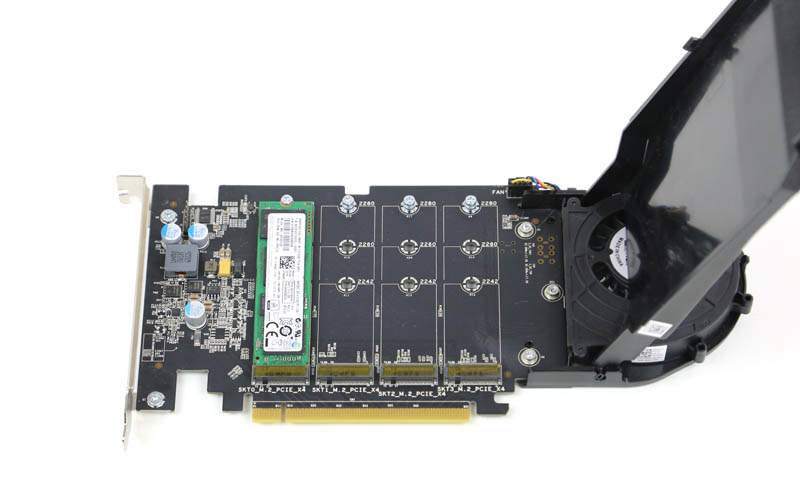

Dell and HP have announced a similar topology

with x16 edge connector and 4 x M.2 drives:

http://supremelaw.org/systems/nvme/Dell.4x.M.2.PCIe.x16.version.jpg

http://supremelaw.org/systems/nvme/HP.Z.Turbo.x16.version.jpg

Kingston also announced a similar add-in card, but I could not find

any good photos of it.

HighPoint also teased a similar product with this announcement, but when I contacted

one of the engineers on that project, she was unable to

disclose any more details:

http://www.highpoint-tech.com/USA_new/nabshow2016.htm

RocketStor 3830A –

3x PCIe 3.0 x4 NVMe and 8x SAS/SATA

PCIe 3.0 x16 lane controller;

supports NVMe RAID solution packages for Windows and Linux storage platforms.

Thanks again for your excellent response!

MRFS