Previews of the RTX 4060 find its overclocking performance to be... pretty bad.

To No One's Surprise, the RTX 4060 is an Unimpressive Overclocker : Read more

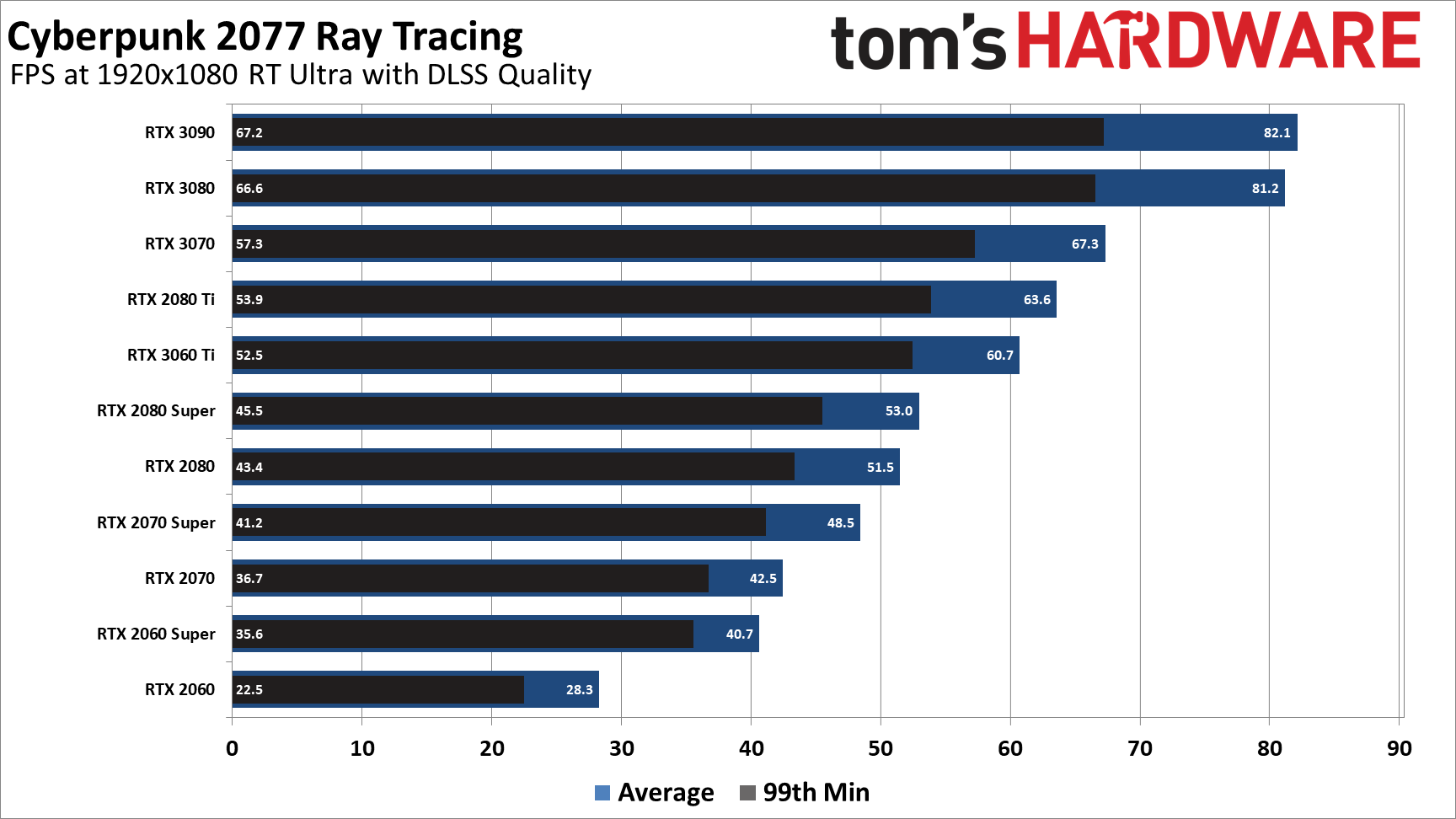

The card still performs well over its predecessor at stock settings, but don't plan on getting an extra boost with overclocking — at least, not in Cyberpunk 2077. The RTX 4060 is very efficient, but it wasn't able to take advantage of any additional power limits it might have access to (perhaps due to voltage limits).

jay at least didn't shill.

but they shilled so hard that Nvidia deemed them worthy of double-extra exclusive mega access for being the biggest shills of all.

he even stated the price was not good.

and that's ALL that matters about a gpu.

it ALL comes down to the price. Not the hardware itself.

That's not my experience at all, at least if you're not running at settings that exceed your VRAM (e.g. 4K RT-Ultra on an 8GB GPU). Variance between runs for my Cyberpunk 2077 tests is usually something like 0.1%, with the 1% lows as usual showing slightly more of a difference between runs.

First of all, the CP2077 benchmark is inconsistent, and like you mentioned, we can't really draw any rational conclusion based on a single game.

Definitely pisses me off that Nvidia gave several YouTubers an option to do a preview of performance two days early, though.

While searching YT, I found these two YouTubers as well:

but his voice was beyond annoying. I mean... annoying to the point I'd almost toss a hammer through my PC screen.

.....no duh?

If a graphics card is not good enough for the games you wanna play, the fact that you bought it cheaply will do little to console you.

It's not.

If that's the case, then it's just too bad. Hardware quality should be consumers' top priority.

I'd argue in the opposite direction: nVidia is locking down the cards more and more and giving its AIBs even less room to get creative without people modifying the hardware directly for "proper" or even mild overclocking.

"The card still performs well over its predecessor at stock settings"

Would have been a proper headline. But no... Tom's looks for a negative in everything. 99% of people do not even overclock... CPU or GPU.

Except when it's the 7900XTX vs the 4090.

jay at least didn't shill.

he even stated the price was not good.

and that's ALL that matters about a gpu.

every single modern GPU is "good" at a hardware lvl.

the only thing that makes it good or bad to the consumer is the price.

is a 4080 bad because it's worse $ to performance than a 4090? No.

is a 4070 bad because it's worse $ to performance than a 4080? No.

it ALL comes down to the price. Not the hardware itself.

Yes, the 128-bit bus on the 4060/Ti was a stupid mistake on Nvidia's part, but it isn't always gonna be an issue (depends on what you are doing with it)

Don't forget the 2 Nvidia mandated points, RT and DLSS3 Fake Frames, sorry, real frames now.

"the RTX 4060 is an Unimpressive Overclocker"

And yet, whenever "certain" techie websites compare GPUs, they ALWAYS give Nvidia extra points because their cards are allegedly way more overclockable than AMD's...!

And I am sure this dubious drivel will continue.

Likewise, and it doesn't surprise me that he was "allowed" to show the card early, since he hasn't stopped publishing video after video of this 4060, overhyping it like crazy.

I had to unsubscribe from that guy... not only was every video he made whining about GPU prices but his voice was beyond annoying. I mean... annoying to the point I'd almost toss a hammer through my PC screen.

He lost credibility with me though in his Jedi Survivor review when he called a 5 year old 2700x a "fairly recent CPU."

That would be like calling my 2017 7700k build "fairly recent."

That's just a failure to study the product if that happens. Sure, there are people impulse buying, statistically 40% and that's the lowest estimate, but let's just quote George Carlin: “Think of how stupid the average person is, and realize half of them are stupider than that.”

Nothing could be further from the truth.

If a graphics card is not good enough for the games you wanna play, the fact that you bought it cheaply will do little to console you.

A bad purchase is a bad purchase, regardless of how much you paid for it.

If that's the case, then it's just too bad. Hardware quality should be consumers' top priority.