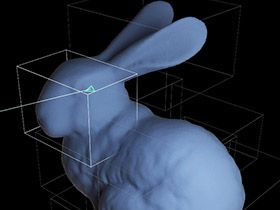

It oozes raytracing. Oh such satisfying raytracing.

Watch AMD's DirectX Raytracing Demo on Future RDNA 2 GPU : Read more

> One thing we have learned from Nvidia's attempts to promote ray tracing over the past 18 months: It's very hard to come up with a 'must have' use case for the technology that doesn't tank performance on lesser GPUs.

Bingo. We'll see how it goes, but really anything below 2080 level right now takes a serious beating if you have a title that uses RT heavily. Hoping next-gen brings at least that level of RT performance down to the mid-tier cards.

> What are the bandwidth requirements for something like this to run smoothly? (i.e. PCIe 4.0 suddenly making sense?)

I doubt it. If anything, the reduced framerates when raytracing will likely reduce demand over the PCIe bus, unless perhaps AMD is doing something like offloading a major part of the raytracing workload to the CPU, and transferring a lot of additional data in the process. Indications are that graphics cards are not coming close to the performance limitations of a PCIe 3.0 x16 slot though, and probably won't be for some years to come.
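
For a sense of scale, here is a back-of-the-envelope sketch of the theoretical x16 link bandwidth in question. The function name is made up for illustration. The figures are the raw per-direction limits (8 and 16 GT/s per lane with 128b/130b encoding), so real-world throughput will be lower once protocol overhead is counted.

```python
# Theoretical one-direction bandwidth of a PCIe x16 slot.
# Assumption: only line rate and 128b/130b encoding are modelled;
# packet and protocol overhead are ignored.

def pcie_bandwidth_gb_s(gigatransfers_per_s: float, lanes: int = 16) -> float:
    """Return usable bandwidth in GB/s (1 GB = 1e9 bytes)."""
    encoding_efficiency = 128 / 130  # line code used by PCIe 3.0 and 4.0
    bits_per_s = gigatransfers_per_s * 1e9 * lanes * encoding_efficiency
    return bits_per_s / 8 / 1e9

gen3 = pcie_bandwidth_gb_s(8.0)    # PCIe 3.0: 8 GT/s per lane
gen4 = pcie_bandwidth_gb_s(16.0)   # PCIe 4.0: 16 GT/s per lane
print(f"PCIe 3.0 x16: {gen3:.2f} GB/s")
print(f"PCIe 4.0 x16: {gen4:.2f} GB/s")
```

That works out to roughly 15.75 GB/s for 3.0 and double that for 4.0, which is the ceiling the post above says cards are not yet approaching.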

It was kind of hard to tell if other raytraced effects were getting utilized with practically everything given a mirror-finish. Or maybe that was the point. It's possible that each company's raytracing hardware might handle certain effects better than the other's, and they may be trying to showcase something that might not run as well on Nvidia's hardware.

> If the hardware is only capable of doing what's shown in this demo, then it's decidedly inferior to RTX.

Well, I'm pretty sure it will be capable of more than that. Microsoft has already been talking about how DXR will be offering "improved lighting, shadows and reflections as well as more realistic acoustics and spatial audio" on the new Xbox, so AMD's raytracing implementation should be more or less feature complete with Nvidia's, from the sound of it. The question comes down to performance though, as we have no real indication of how any of this hardware will perform compared to the first-gen RTX cards. It could be substantially faster, for all we know, but there's no way of telling until side-by-side comparisons can be made. And the same goes for the next generation of RTX cards. We'll likely know more later in the year.

It was a good demo and technically superior to NVIDIA's Star Wars demo.

Nvidia's Star Wars demo was close to photorealistic. This demo, meanwhile, proves the assertion that ray-tracing with primary rays alone is practically rendering.

Mirrors... Mirrors everywhere... I kind of think the demo would have had more of an impact had they started it in a room with limited reflective surfaces, instead focusing on effects like raytraced global illumination and shadows, before heading outside into mirror-land. And maybe reduce the number of mirrors out there a bit. ...

> It was a good demo and technically superior to NVIDIA's Star Wars demo.

No, I'm pretty sure Nvidia's Star Wars demo used global illumination, which this demo seems to lack.

> Perfectly reflective surfaces aren't hugely taxing actually. One ray in, one ray out. When a ray spawns multiple secondary rays upon hitting a surface, that's when it gets hard.

Their reflections look very nice - I don't see any aliasing in them. Did you ever consider what it takes to avoid aliasing in ray-traced reflections off curved surfaces?
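
To make the "one ray in, one ray out" point concrete, here is a minimal sketch of the standard mirror-reflection formula r = d - 2(d·n)n. The function name and the sample vectors are just for illustration, and the normal is assumed to be unit length.

```python
import math

def reflect(d, n):
    """Mirror direction d about the unit surface normal n."""
    dot = sum(di * ni for di, ni in zip(d, n))
    return tuple(di - 2.0 * dot * ni for di, ni in zip(d, n))

# A ray heading down and to the right hits a floor whose normal points up.
incoming = (1 / math.sqrt(2), -1 / math.sqrt(2), 0.0)
normal = (0.0, 1.0, 0.0)
outgoing = reflect(incoming, normal)
print(outgoing)  # same x, flipped y: exactly one new ray to trace
```

A perfect mirror spawns a single secondary ray per hit. The aliasing question raised above is a separate matter: on curved surfaces, neighbouring pixels can reflect in very different directions, so one ray per pixel may not be enough.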

> If the hardware is only capable of doing what's shown in this demo, then it's decidedly inferior to RTX.

Yes, but not for the reasons you cite. What concerns me is the apparent lack of Global Illumination. Nvidia's GTX 1660 can almost do raytraced reflections at a decent framerate, and that's with no hardware assist. So, let's hope AMD has more than just reflections up their sleeve.

> No, I'm pretty sure Nvidia's Star Wars demo used global illumination, which this demo seems to lack.

Global illumination is relatively simple compared to complex reflections. It's just a series of bounding boxes for the element in question. The number of rays required dramatically drops off with bounding boxes.

> Global illumination is relatively simple compared to complex reflections.

Not really. It requires a lot more rays and sophisticated denoising.

> It's just a series of bounding boxes for the element in question.

All accelerated ray tracing uses bounding-volume hierarchies. There's still geometry, underneath. BVH is just a data structure to accelerate the search-phase of intersection tests.
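
As a rough illustration of that search phase, here is a sketch of the usual "slab" test for a ray against one axis-aligned bounding box, which is the kind of check a BVH traversal runs at each node before descending to child boxes or triangles. The function name is hypothetical, and this is not how any particular GPU implements it in hardware.

```python
def ray_hits_aabb(origin, direction, box_min, box_max):
    """Return True if the ray (t >= 0) overlaps the axis-aligned box."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        # Shrink the interval where the ray is inside every slab so far.
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True

box_min, box_max = (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)
print(ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), box_min, box_max))   # towards the box
print(ray_hits_aabb((0.0, 0.0, -5.0), (0.0, 0.0, -1.0), box_min, box_max))  # away from it
```

A miss lets the traversal skip everything inside that box, but a hit only means the geometry underneath still has to be tested. The box cuts down the search; it does not replace the triangles or limit which surfaces can contribute light.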

> in 1994.

I was using POVRay in the early 90's. Before then, I didn't think ray tracing was possible, on a PC. Of course, I also hadn't considered tracing rays back from each image pixel.

I wrote support code for POVRay back in college.

Not really. It requires a lot more rays and sophisticated denoising.

In GI, virtually every hit involves bounces - not just on reflective surfaces!

Performance data backs me up on this. Check out the benchmarks of games that support GI - it's the most compute-intensive (and therefore least-used) ray tracing feature supported by RTX.
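
A quick way to see why bounces at every hit get expensive is to count rays. This is only a sketch: the branching factor of 8 and depth of 3 are made-up numbers for illustration, and real-time engines typically trace far fewer samples per pixel and lean on denoising to fill in the rest.

```python
def rays_per_pixel(branching: int, depth: int) -> int:
    """Rays traced for one pixel if every hit spawns `branching` new rays,
    for `depth` bounces after the primary ray."""
    total = 1          # the primary ray
    rays_at_level = 1
    for _ in range(depth):
        rays_at_level *= branching
        total += rays_at_level
    return total

mirror = rays_per_pixel(branching=1, depth=3)    # perfect mirrors: one ray out per hit
diffuse = rays_per_pixel(branching=8, depth=3)   # diffuse GI: several samples per hit
print(mirror, diffuse)
```

With these assumed numbers the mirror chain costs 4 rays per pixel, while the diffuse case costs 1 + 8 + 64 + 512 = 585.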

I was using POVRay in the early 90's. Before then, I didn't think ray tracing was possible, on a PC. Of course, I also hadn't considered tracing rays back from each image pixel.

One of the very first things I tried, in POVRay, was an experiment to see if it did caustics. I think that's when I figured out how they got it so fast - by tracing backwards, instead of forwards.
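
For anyone unfamiliar with the backwards approach, here is a minimal sketch of generating one eye ray per image pixel with a simple pinhole camera. The camera setup (origin at the eye, looking down -z, 60 degree vertical field of view) and the function name are assumptions for illustration, not anything taken from POVRay itself.

```python
import math

def primary_ray_direction(px, py, width, height, fov_deg=60.0):
    """Unit direction of the eye ray through the centre of pixel (px, py).
    Camera sits at the origin looking down -z, with +y up."""
    aspect = width / height
    half = math.tan(math.radians(fov_deg) / 2)
    x = (2 * (px + 0.5) / width - 1) * half * aspect
    y = (1 - 2 * (py + 0.5) / height) * half
    length = math.sqrt(x * x + y * y + 1.0)
    return (x / length, y / length, -1.0 / length)

width, height = 3, 3
rays = [primary_ray_direction(px, py, width, height)
        for py in range(height) for px in range(width)]
center = rays[4]   # middle pixel of the 3x3 image
print(len(rays), center)
```

The work scales with the number of pixels rather than the number of photons leaving every light, which is where the speed comes from. It is also why effects like caustics, which depend on light paths traced from the source, do not fall out of this approach for free.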

> If it's not in the demo then it probably means the hardware isn't powerful enough. This is going to be a $200 GPU after all. The next-gen should be much better at faking GI though. As someone pointed out in another thread, inclusion of fast SSD storage means game engines will have access to a much larger pool of assets. Instead of simulating light directly, RT can be used to intelligently choose from large sets of pre-baked lightmaps. Such an approach would yield good performance and scenes that largely look right.

That would work for explosions that happen in a pre-determined place, but not for moving light sources. It's not a very general solution.

> GI puts a bounding box around the object. So the intersection test doesn't work if the secondary object is outside the box. Thus the number of hits dramatically drops off. Povray 1 didn't use bounding boxes. It was painfully slow.

So, you're saying you don't get secondary illumination off a surface that's too far away? That doesn't seem right - what if the surface is very big, or the light source is particularly bright?

I think it probably just uses the same BVH acceleration as all other use cases, but you just have a lot more rays (and bounces).

Cheating reflections is done by just casting a texture onto a surface. That render comes from a viewport-change draw call to a memory buffer.

True reflections as shown are computationally expensive because rays can keep bouncing ad infinitum. Imagine those infinity mirrors that face each other. Thus you have to calculate everything in the viewing frustum with a trace if the reflection depth is > 1.
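
The infinity-mirror case can be sketched as a recursion with a depth cap. This is a toy model, not any engine's actual shader: the reflectivity of 0.8 and the local brightness of 1.0 are assumed values, and `shade` is a hypothetical name.

```python
def shade(depth, max_depth, local=1.0, reflectivity=0.8):
    """Brightness seen in a pair of facing mirrors: the surface's own
    contribution plus an attenuated copy of what the reflected ray sees."""
    if depth >= max_depth:
        return local  # cap reached: stop spawning reflection rays
    return local + reflectivity * shade(depth + 1, max_depth, local, reflectivity)

for cap in (1, 4, 16, 64):
    print(cap, round(shade(0, cap), 4))

limit = 1.0 / (1.0 - 0.8)  # closed form of the infinite geometric series
print(limit)
```

With any reflectivity below 1, each extra bounce adds less than the one before, so the result approaches a fixed limit and a modest depth cap loses little. Only an idealized perfect mirror pair would need to bounce forever.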

> Secondary illumination is significantly weaker than the primary (direct light). Thus, as the distance increases between surfaces, the effect greatly diminishes and can be safely ignored.

You can't say that for all light sources and surface sizes. If my primary light source is a candle, and my secondary light source is sunlight or an explosion, then even tertiary bounces could overpower direct lighting from the primary.

> With multiple reflections, each surface has to be rendered in multiple passes, each requiring full rays on every point and not random sampling.

I don't know what you mean by "full rays". Do you mean a uniform sampling grid, relative to the incident ray?

> Reflection effects will generally override GI - so even if there was, you probably wouldn't see it with all those mirrors.

The effect of GI might be subtle, but once you're used to it, scenes without it have a very "fake" look to them. Even if you're not used to it, turning it on just gives a much more realistic feel.

> GI and AO rays can be spaced quite a bit apart and blurred heavily, then applied over top of the other shaders and it still looks amazing. With a mirror reflection, "spaced apart" rays are NOT an option - they have to be tight or they won't reflect properly. The tighter the rays have to be, the more of them you need. It's pretty simple.

Yeah, but because GI rays are traced forward, you still need a lot more of them. And RTX doesn't simply blur them, but rather uses a sophisticated deep learning algorithm to infer the correct illumination from relatively few samples.

> So all this talk I keep hearing of "Reflections take no computations" is BS.

Literally nobody here is saying that! All I'm saying is that GI is harder than reflections. Not (only) based on what I think, but also the data.

> Real time raytracing still has TONS of intense optimizations - it does not calculate accurately like a renderer in a 3D program at all - not even close.

I think you're exaggerating. It does use fewer samples and maybe things like TAA. But one thing people like about ray tracing is that it involves way fewer hacks and optimizations than traditional rendering.