Why is the personal opinion of a reviewer being called into question?

When I read reviews I want the data to be accurate; I don't give a rat's ass about the reviewer's opinion.

A reviewer's opinion cannot be his verdict. The verdict has to be data-based, and that verdict is NOT 4070 > 7900GRE, not according to the performance data.

What the author added to the data was not opinion but auxiliary data and circumstances such as:

(emphasis mine)

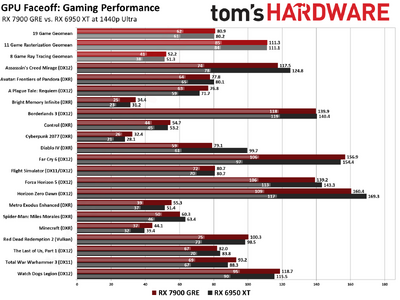

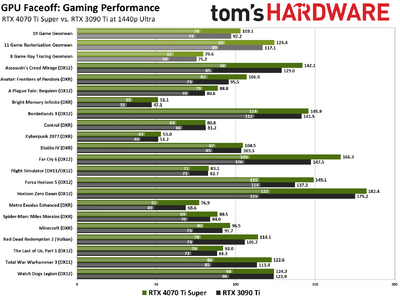

To be clear, 4K showed the largest performance gap, in favor of the 7900 GRE. Actually, it's slightly larger on rasterization (17% lead versus 16% lead), slightly lower in RT (13.5% loss versus 12% loss). When you look at all the data in aggregate, the lead of the 7900 GRE is 3.1% at 4K, 3.4% at 1440p, 1.7% at 1080p, and -1.3% at 1080p medium.

AMD wins in rasterization by a larger amount than it loses in RT.

However, and this is the part people are overlooking, outside of Avatar and Diablo, we didn't test with upscaling (those two games had Quality upscaling enabled).

And I know there are DLSS haters that will try to argue this point, but in all the testing I've seen and done, I would say DLSS in Quality mode is now close enough to native (and sometimes it's actually better, due to poor TAA implementations) that I would personally enable it in every game where it's supported. Do that and the AMD performance advantage evaporates. In fact, do that and the 4070 comes out 10~15% ahead overall.

On the performance side, DLSS Quality beats FSR 2/3 Quality, so that comparing the two in terms of strict FPS isn't really "fair." Without upscaling in rasterization games, AMD's 7900 GRE wins. With upscaling in rasterization games, the 7900 GRE still performs better

This is why, ultimately, the performance category was declared a tie — if anything, that's probably being nice to AMD by mostly discounting the DLSS advantage.

Put another way:

Without upscaling, AMD gets a clear win on performance in the rasterization category, loses on RT by an equally clear margin. So: AMD+ (This is intentionally skewing the viewpoint to favor AMD, which is what many seem to be doing.)

The key point of departure is opinion on whether upscaling matters. The data is there and points to the 7900GRE performing better without upscaling.

It is the author's opinion that DLSS should be enabled in every game, and if this is done then the 4070 is the winner.

What people objected to in this thread is NOT THF favoring Nvidia blindly, but the author claiming that:

4070 is the clear winner overall and tied on performance IF DLSS is enabled in every game

and

4070 is the clear winner overall and tied on performance

are the same thing. They are not.

AMD fanboys and trolls may annoy you, but many gamers will disagree with the author's opinion that DLSS is always good and always on, and that you should therefore forget the 7900GRE because the 4070 is better.

It may well be, but for other reasons. The verdict is incongruous to say the least and grotesque and biased to say... well, more than least.

I wouldn't even say that the GPUs are tied on performance, though I accept the author's argument about the GRE's advantage being situational and overstated/skewed.

Likewise, I would not really consider testing at 4K relevant to the verdict. It's handy to have the info, but neither GPU is a 4K performer.

Reality is that there are two verdicts. One that favors Nvidia in a bs way, and one that favors AMD in a bs way as well.

We have to have this argument because the author chose one of them and called it a fair verdict.

Buy the GPU that fits your needs and budget. Neither of these is a worthy investment, but between them I have to say the 4070 is completely pointless because the Ti is the better choice.

The 7900GRE also competes with the 7800XT, which may just be better value depending on the price you pay and how far you can stretch your budget.

I would sit and anticipate the 50XX series, but that's really neither here nor there.