The CPU reviews here at Tom's have stock and OC results. They also clearly list the test bench and the settings used. I cannot identify which models of the 4070 and the 7900 GRE were used in this test.

The numbers don't lie, but metrics without the complete picture are not the whole truth either.

"The gaming performance gap is less than 5% even at 4K."

Neither of these cards is aimed at 4K gaming. They are 2K resolution cards. The above statement sends the wrong message to readers. Experienced reviewers should not be doing this!

To be clear, 4K showed the

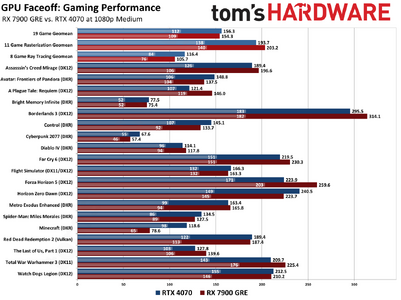

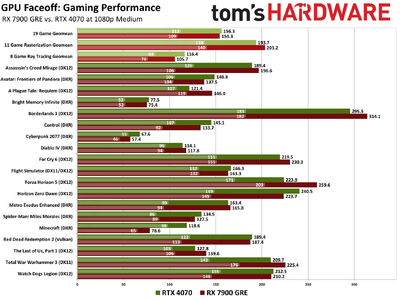

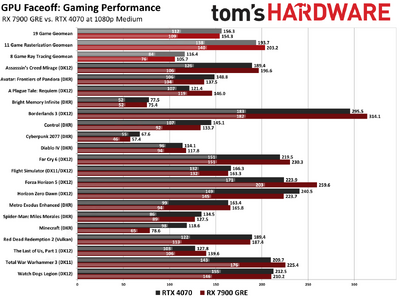

largest performance gap, in favor of the 7900 GRE. Actually, it's slightly larger on rasterization (17% lead versus 16% lead), slightly lower in RT (13.5% loss versus 12% loss). When you look at all the data in aggregate, the lead of the 7900 GRE is 3.1% at 4K, 3.4% at 1440p, 1.7% at 1080p, and -1.3% at 1080p medium.

That's why we called it a tie. Not because there aren't outliers, but taken just at face value, the numbers are very close. Pick different games and we could skew those figures, but that's an entirely different discussion. Yes, AMD wins in rasterization by a larger amount than it loses in RT. However, and this is the part people are overlooking, outside of Avatar and Diablo, we didn't test with upscaling (those two games had Quality upscaling enabled).
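To illustrate how much the game mix matters, here is a minimal sketch that combines a rasterization lead and an RT loss into one aggregate figure. It assumes a weighted geometric mean, which the post does not confirm as the actual method, and the game counts are hypothetical, not the real test suite. The 17% and 13.5% figures are taken from the numbers quoted above; `aggregate_lead` is a made-up helper name.

```python
from math import prod

def aggregate_lead(ratios, weights):
    """Weighted geometric mean of performance ratios, returned as a percent lead.

    ratios  -- per-category performance ratios (1.17 means a 17% lead)
    weights -- how many games fall into each category (hypothetical here)
    """
    total = sum(weights)
    geo_mean = prod(r ** (w / total) for r, w in zip(ratios, weights))
    return (geo_mean - 1.0) * 100.0

# Figures quoted in the post: 17% rasterization lead, 13.5% RT loss.
RASTER_RATIO = 1.17
RT_RATIO = 1.0 - 0.135

# Hypothetical mixes of (rasterization games, RT games); assumptions only.
for n_raster, n_rt in [(1, 1), (2, 1), (3, 1)]:
    lead = aggregate_lead([RASTER_RATIO, RT_RATIO], [n_raster, n_rt])
    print(f"{n_raster} raster : {n_rt} RT -> aggregate lead {lead:+.1f}%")
```

The more rasterization games in the mix, the larger the aggregate lead for the 7900 GRE, which is the "pick different games" effect in a nutshell.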

The more time passes, the more I fall into the camp of thinking anyone not using DLSS on an RTX GPU is basically giving up a decent performance boost with negligible loss of image quality. There are exceptions, and they mostly come down to games with a buggy/poor implementation, in which case swapping DLSS DLLs and using Nvidia Inspector to set mode E with the 3.7 DLL probably fixes the issues. In general, meaning in games where DLSS and FSR are both implemented well, right now DLSS is clearly superior on image fidelity, it's in more games, and DLSS also factors into the overall performance.

FSR 2/3 at present does not match DLSS in image quality. (Note that I never mention framegen as a major win, because I also feel it's not nearly as useful as Nvidia — and now AMD! — like to pretend.) In fact, XeSS in DP4a mode (with version 1.2 and now 1.3) looks better than FSR 2/3 upscaling... well, at least if you stick with the old upscaling factors. XMX mode looks even better than DP4a mode. So, DLSS wins on image quality, XeSS XMX mode is second and relatively close to DLSS, XeSS DP4a is a more distant third (and has a higher performance hit!), and then FSR 2/3 upscaling is last in terms of image quality. There are things where FSR looks fine, but if you do a systematic look at all areas, there are many documented issues — transparency is a big one.

And I know there are DLSS haters that will try to argue this point, but in all the testing I've seen and done, I would say DLSS in Quality mode is now close enough to native (and sometimes it's actually better, due to poor TAA implementations) that I would personally enable it in every game where it's supported. Do that and the AMD performance advantage evaporates. In fact, do that and the 4070 comes out 10~15% ahead overall.

But wait, didn't we already give the feature category to Nvidia? Isn't it getting counted twice? Perhaps, but it really is a big deal in my book. On the features side, Nvidia has DLSS and the AI tensor cores, which also power things like Broadcast, Video Super Resolution, and likely plenty more things in the future. On the performance side, DLSS Quality beats FSR 2/3 Quality, so that comparing the two in terms of strict FPS isn't really "fair." Without upscaling in rasterization games, AMD's 7900 GRE wins. With upscaling in rasterization games, the 7900 GRE still performs better but it looks worse. This is why, ultimately, the performance category was declared a tie — if anything, that's probably being nice to AMD by mostly discounting the DLSS advantage.

Put another way:

Without upscaling, AMD gets a clear win on performance in the rasterization category, but loses on RT by an equally clear margin. So: AMD+ (This is intentionally skewing the viewpoint to favor AMD, which is what many seem to be doing.)

Price remains a tie.

Features is massively in favor of Nvidia. So: Nvidia+++

Power and efficiency favor Nvidia a lot as well. So: Nvidia++

Based on that, it's five points to Nvidia and one point to AMD. If someone asks me which GPU to get, 4070 vs 7900 GRE, I will tell them to get the Nvidia card. Every time. I would happily give up 10% performance in native rasterization games to get everything else Nvidia offers in its ecosystem.
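For anyone who wants to check the arithmetic, here is a rough sketch of that tally. It assumes each plus sign counts as one point and a tie counts for nothing; the dictionary layout and the `tally` helper are just for illustration.

```python
from collections import Counter

# Category verdicts as stated above: (winner, number of plus signs).
# A tie is recorded as (None, 0).
verdicts = {
    "performance": ("AMD", 1),
    "price": (None, 0),
    "features": ("Nvidia", 3),
    "power and efficiency": ("Nvidia", 2),
}

def tally(verdicts):
    """Sum the plus signs per vendor, skipping tied categories."""
    points = Counter()
    for winner, plusses in verdicts.values():
        if winner is not None:
            points[winner] += plusses
    return dict(points)

print(tally(verdicts))
```

Change the weights to match your own priorities and the result shifts accordingly; the point is only that the scoring here is a simple sum.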