The RTX 3070 and 3060 Ti both use the GA104 chip, except that the one in the 3060 Ti is cut down: it has 1024 fewer CUDA cores (4864 vs 5888), which is just over a 17% difference, and 8 fewer RT cores.
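
Here is a quick sanity check of that arithmetic. It assumes NVIDIA's published specs: 5888 CUDA cores and 46 RT cores for the 3070, and 4864 CUDA cores and 38 RT cores for the 3060 Ti. The dictionary names and the `core_gap` helper are made up for illustration. The check also shows the gap the other way round: "17% fewer" on the 3060 Ti is the same as "about 21% more" on the 3070.

```python
# Assumed spec figures (NVIDIA's published core counts for each card).
RTX_3070 = {"cuda_cores": 5888, "rt_cores": 46}
RTX_3060_TI = {"cuda_cores": 4864, "rt_cores": 38}


def core_gap(big, small, key):
    """Return (absolute difference, % fewer relative to big, % more relative to small)."""
    diff = big[key] - small[key]
    return diff, 100 * diff / big[key], 100 * diff / small[key]


for key in ("cuda_cores", "rt_cores"):
    diff, fewer_pct, more_pct = core_gap(RTX_3070, RTX_3060_TI, key)
    print(f"{key}: 3060 Ti has {diff} fewer ({fewer_pct:.1f}% fewer); "
          f"3070 has {more_pct:.1f}% more")
```

Both gaps come out to the same ratio because each streaming multiprocessor carries 128 CUDA cores and one RT core. The 3070 simply has 46 SMs enabled to the 3060 Ti's 38.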

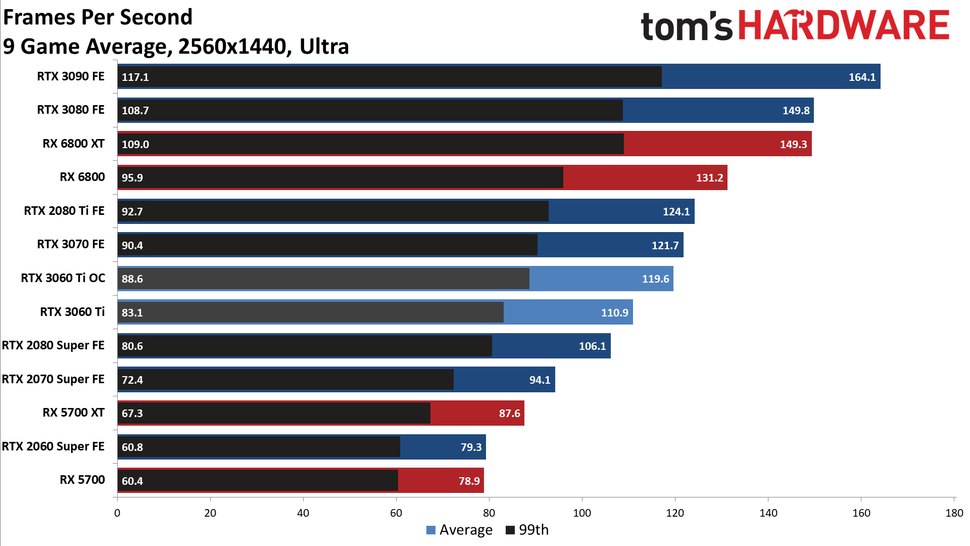

From benchmarks I've seen, the 3060 Ti is usually only 5 to 10 FPS behind the 3070. Is there any reason that justifies spending £100 more on a 3070?

This brings me to my main question: with driver and game optimisations, will the extra 1024 CUDA cores prove useful in the future, i.e. will the gap between them increase?
